[Project Page] [Arxiv] [Weights]

Official repository for the paper VocaLiST: An Audio-Visual Synchronisation Model for Lips and Voices.

The paper has been accepted to Interspeech 2022.

This work uses two datasets: i) LRS2, an audio-visual speech dataset, and ii) Acappella, an audio-visual singing voice dataset. The LRS2 dataset can be requested for download here. The Acappella dataset can be downloaded by following the steps mentioned here.

All the models considered in this work operate on audio sampled at 16 kHz and video at 25 fps. The preprocessing steps are the same as in https://github.com/Rudrabha/Wav2Lip/blob/master/preprocess.py. The goal of preprocessing is to obtain, for each video sample in the respective dataset, the cropped RGB face frames of size 3x96x96 in .jpg format and the audio at a 16 kHz sampling rate.

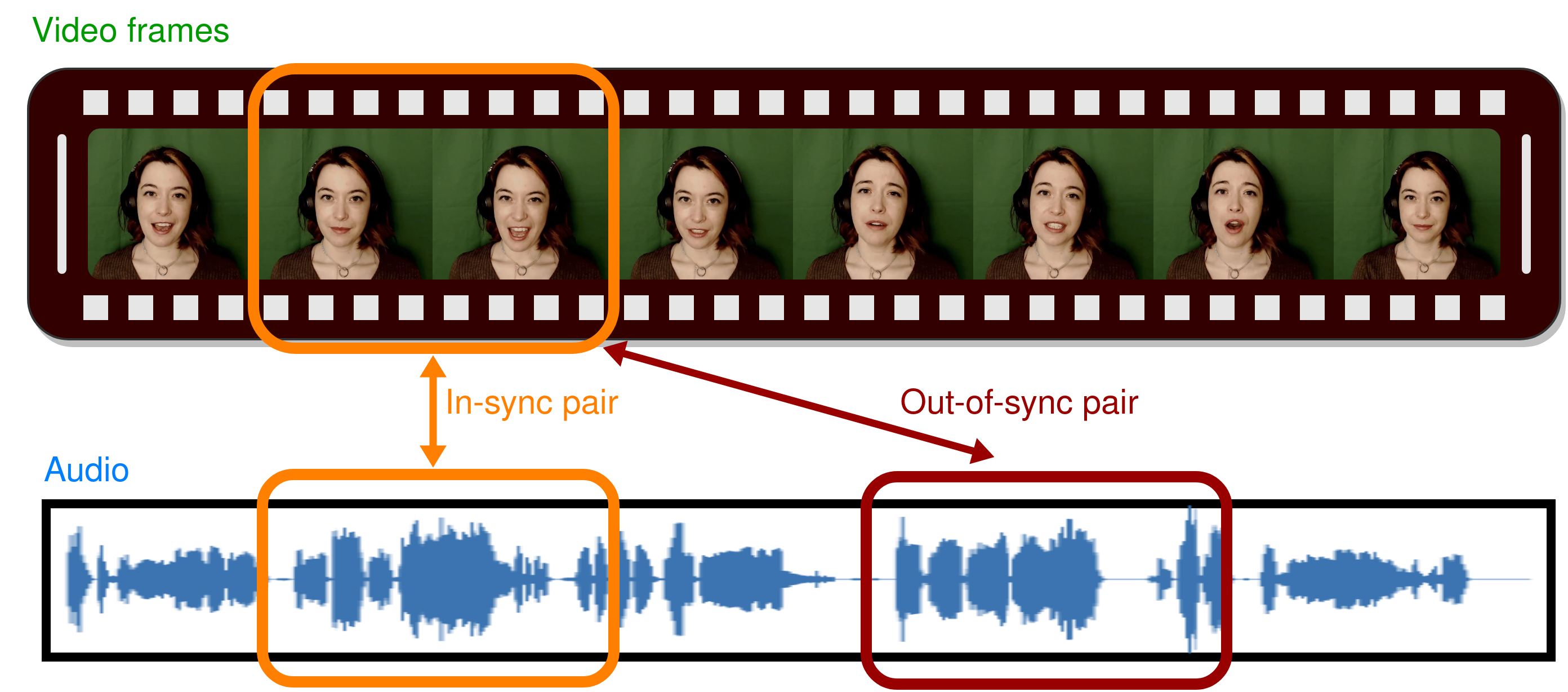

To train the VocaLiST model on the LRS2 dataset, run train_vocalist_lrs2.py. To train on the Acappella dataset, run train_vocalist_acappella.py. Remember to change the checkpoint save path and the dataset-related paths accordingly. Note that, by default, models are trained to detect synchronisation in a context of 5 video frames, which corresponds to 3200 audio samples (0.2 s at 16 kHz). If you change the context length, you must also change the audio length to match.
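The relation between context length and audio length follows from the 25 fps and 16 kHz rates stated earlier: each video frame spans 16000 / 25 = 640 audio samples. The snippet below is a minimal sketch of that arithmetic. The function name `audio_samples_for_context` and the constants are hypothetical and are not taken from the training scripts; check the scripts for where the audio length is actually set.

```python
# Hypothetical helper, not part of the repository: converts a context
# length in video frames to the matching number of audio samples.
SAMPLE_RATE_HZ = 16_000  # audio sampling rate stated in this README
VIDEO_FPS = 25           # video frame rate stated in this README


def audio_samples_for_context(num_frames: int,
                              sample_rate_hz: int = SAMPLE_RATE_HZ,
                              fps: int = VIDEO_FPS) -> int:
    """Return the number of audio samples spanned by `num_frames` video frames."""
    if num_frames <= 0:
        raise ValueError("num_frames must be a positive integer")
    total = num_frames * sample_rate_hz
    if total % fps != 0:
        # Avoid silently truncating when the rates do not divide evenly.
        raise ValueError("context does not map to a whole number of samples")
    return total // fps


if __name__ == "__main__":
    for frames in (5, 10, 15):
        print(frames, "frames ->", audio_samples_for_context(frames), "samples")
```

With the default context of 5 frames this gives 3200, matching the value quoted above.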

To test the models, first download the pretrained model weights from here. To test on LRS2, run test_lrs2.py. To test on the Acappella dataset, run test_acappella.py. To evaluate on the Acappella dataset for a single context length only, run test_acappella_specific.py. Remember to change the paths to the pretrained weights and the dataset-related paths accordingly.

The evaluation code is adapted from Out of time: automated lip sync in the wild. The training code and the experiment configuration setup are borrowed or adapted from A Lip Sync Expert Is All You Need for Speech to Lip Generation In the Wild. The code for the internal components of the transformer block is borrowed from Multimodal Transformer for Unaligned Multimodal Language Sequences.

This project makes use of source code from other existing works. Only the original source code in this repository is provided under the CC-BY-NC license; the parts of the repository reused or adapted from elsewhere are available under their respective license terms. The code for the evaluation setup is adapted from the source code of the paper "Out of time: automated lip sync in the wild", which is made available under https://github.com/joonson/syncnet_python/blob/master/LICENSE.md. The code for the transformer encoder and its internal components is made available under https://github.com/yaohungt/Multimodal-Transformer/blob/master/LICENSE. The code for reading the experiment configuration (hparams) is adapted from https://github.com/Rudrabha/Wav2Lip/, which is under non-commercial license terms.