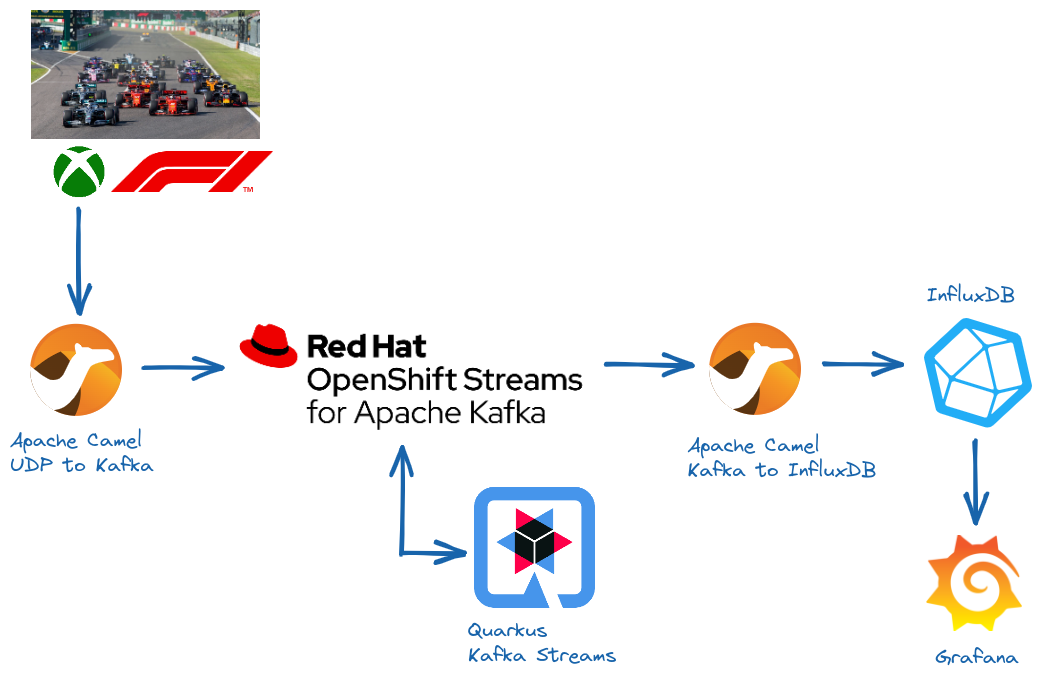

This repository provides a guide to deploy the Formula 1 - Telemetry with Apache Kafka project on a fully managed Kafka instance from the Red Hat OpenShift Streams for Apache Kafka service.

The main prerequisites are:

- An account on Red Hat Hybrid Cloud.
- The `rhoas` CLI tool (version 0.38.6 or later), installed by following the instructions here.
- Being logged into your own Red Hat Hybrid Cloud account via the `rhoas login` command, by following the instructions here.
- The `jq` tool.
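You can check the tool prerequisites from a shell before starting. The following is a minimal sketch. The helper names `require_tool` and `version_ge` are hypothetical and are not part of this repository. `version_ge` also assumes a `sort` that supports `-V`, such as the one in GNU coreutils.

```shell
# Minimal sketch of a prerequisite check.
# require_tool and version_ge are hypothetical helpers, not scripts from this repository.

# Succeeds if the given command is on the PATH, otherwise prints an error.
require_tool() {
  if ! command -v "$1" >/dev/null 2>&1; then
    echo "missing required tool: $1" >&2
    return 1
  fi
}

# Succeeds if version $1 is greater than or equal to version $2.
# Version sorting (sort -V, e.g. GNU coreutils) puts the smaller version first,
# so $1 >= $2 exactly when $2 comes out on top.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}
```

For example, `require_tool rhoas && require_tool jq` fails with a message if either tool is missing. Pass the version reported by `rhoas version` as the first argument of `version_ge` and `0.38.6` as the second. `version_ge 0.38.10 0.38.6` succeeds because version sorting compares each numeric component.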

Create the Apache Kafka instance by running the following command.

```shell
rhoas kafka create --name formula1-kafka --wait
```

It specifies the `--name` option with the name of the instance and instructs the command to run synchronously by waiting for the instance to be ready using the `--wait` option.
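Without `--wait`, the create command does not wait for the instance to be ready, so a script would have to poll the instance itself. The following is a minimal sketch of such a loop. It assumes the `status` field and the `ready` value shown in the JSON output, and it reuses the `rhoas kafka describe ... | jq` pattern that this guide uses later for the bootstrap host. The function name and the default attempt count and delay are hypothetical.

```shell
# Minimal sketch: poll until a Kafka instance reports "ready".
# wait_for_kafka_ready is a hypothetical helper; the "status" field and the
# "ready" value are taken from the JSON output of rhoas shown in this guide.
wait_for_kafka_ready() {
  local name="$1" attempts="${2:-60}" delay="${3:-10}" status="" i=1
  while [ "$i" -le "$attempts" ]; do
    # Same describe + jq pattern used in this guide for the bootstrap host.
    status=$(rhoas kafka describe --name "$name" | jq -r .status)
    if [ "$status" = "ready" ]; then
      return 0
    fi
    echo "attempt $i/$attempts: status is '$status', retrying in ${delay}s" >&2
    i=$((i + 1))
    sleep "$delay"
  done
  echo "Kafka instance '$name' not ready after $attempts attempts" >&2
  return 1
}

# Example (not executed here):
#   rhoas kafka create --name formula1-kafka
#   wait_for_kafka_ready formula1-kafka
```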

The command exits when the instance is ready and prints information about the instance in JSON format, like the following.

```
✔️ Kafka instance "formula1-kafka" has been created:
{
  "bootstrap_server_host": "formula-j-d-nks-g-f-pqm---fmvg.bf2.kafka.rhcloud.com:443",
  "cloud_provider": "aws",
  "created_at": "2022-01-23T11:35:47.142502Z",
  "href": "/api/kafkas_mgmt/v1/kafkas/c8nkp5i1e9ohm495fnvd",
  "id": "c8nkp5i1e9ohm495fnvd",
  "instance_type": "eval",
  "kind": "Kafka",
  "multi_az": true,
  "name": "formula1-kafka",
  "owner": "ppatiern",
  "reauthentication_enabled": true,
  "region": "us-east-1",
  "status": "ready",
  "updated_at": "2022-01-23T11:40:24.253791Z",
  "version": "2.8.1"
}
```

To confirm that everything is fine, verify the status of the Kafka instance by running the following command.

```shell
rhoas status kafka
```

The output shows the status and bootstrap URL of the Kafka instance.

```
Kafka
--------------------------------------------------------------------------------
ID: c8nkp5i1e9ohm495fnvd
Name: formula1-kafka
Status: ready
Bootstrap URL: formula-j-d-nks-g-f-pqm---fmvg.bf2.kafka.rhcloud.com:443
```

The Formula 1 project relies on several topics for storing and analyzing the telemetry data. The topic names can be customized via environment variables in the different applications. Running the following script creates the topics with their default names.

```shell
./create-topics.sh
```

Each command in the script prints the topic configuration. Check that all the topics have been created by running the following command.

```shell
rhoas kafka topic list
```

The output shows the list of topics on the Kafka instance.

```
NAME (5)                         PARTITIONS   RETENTION TIME (MS)   RETENTION SIZE (BYTES)
-------------------------------- ------------ --------------------- ------------------------
f1-telemetry-drivers             1            604800000             -1 (Unlimited)
f1-telemetry-drivers-avg-speed   1            604800000             -1 (Unlimited)
f1-telemetry-drivers-laps        1            604800000             -1 (Unlimited)
f1-telemetry-events              1            604800000             -1 (Unlimited)
f1-telemetry-packets             1            604800000             -1 (Unlimited)
```

Create a service account for the UDP to Apache Kafka application by running the following command.

```shell
rhoas service-account create --short-description formula1-udp-kafka --file-format env --output-file ./formula1-udp-kafka.env
```

This generates a file containing the credentials for accessing the Kafka instance as environment variables.

```
## Generated by rhoas cli
RHOAS_SERVICE_ACCOUNT_CLIENT_ID=srvc-acct-adg23480-dsdf-244a-gt65-d4vd65784dsf
RHOAS_SERVICE_ACCOUNT_CLIENT_SECRET=f8g93220-9619-55ed-c23d-a2356c1fds9c
RHOAS_SERVICE_ACCOUNT_OAUTH_TOKEN_URL=https://identity.api.openshift.com/auth/realms/rhoas/protocol/openid-connect/token
```

The UDP to Apache Kafka application needs permission to write to the `f1-telemetry-drivers`, `f1-telemetry-events` and `f1-telemetry-packets` topics.
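A prefix-based grant is the simplest option, but it also covers any other topic whose name starts with `f1-`. A narrower alternative is one producer grant per exact topic. The following is a minimal sketch that assumes the `--topic` flag of `rhoas kafka acl grant-access`; check `rhoas kafka acl grant-access --help` for your CLI version. `grant_producer_on_topics` is a hypothetical helper name.

```shell
# Minimal sketch: one producer ACL per exact topic name instead of a prefix.
# grant_producer_on_topics is a hypothetical helper; the --topic flag is assumed
# to be available in your rhoas version.
grant_producer_on_topics() {
  if [ "$#" -lt 2 ]; then
    echo "usage: grant_producer_on_topics SERVICE_ACCOUNT_ID TOPIC..." >&2
    return 1
  fi
  local account="$1" topic
  shift
  for topic in "$@"; do
    # Stop at the first failed grant so partial failures are visible.
    rhoas kafka acl grant-access -y --producer \
      --service-account "$account" --topic "$topic" || return 1
  done
}

# Example (not executed here):
#   source ./formula1-udp-kafka.env
#   grant_producer_on_topics "$RHOAS_SERVICE_ACCOUNT_CLIENT_ID" \
#     f1-telemetry-drivers f1-telemetry-events f1-telemetry-packets
```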

For simplicity, grant producer access on all topics starting with the `f1-` prefix.

```shell
source ./formula1-udp-kafka.env
rhoas kafka acl grant-access -y --producer --service-account "$RHOAS_SERVICE_ACCOUNT_CLIENT_ID" --topic-prefix f1-
```

This creates the following ACL entries for the corresponding service account.

```
PRINCIPAL                                        PERMISSION   OPERATION   DESCRIPTION
------------------------------------------------ ------------ ----------- -------------------------
srvc-acct-adg23480-dsdf-244a-gt65-d4vd65784dsf   allow        describe    topic starts with "f1-"
srvc-acct-adg23480-dsdf-244a-gt65-d4vd65784dsf   allow        write       topic starts with "f1-"
srvc-acct-adg23480-dsdf-244a-gt65-d4vd65784dsf   allow        create      topic starts with "f1-"
srvc-acct-adg23480-dsdf-244a-gt65-d4vd65784dsf   allow        write       transactional-id is "*"
srvc-acct-adg23480-dsdf-244a-gt65-d4vd65784dsf   allow        describe    transactional-id is "*"
```

Create a service account for the Apache Kafka to InfluxDB application by running the following command.

```shell
rhoas service-account create --short-description formula1-kafka-influxdb --file-format env --output-file ./formula1-kafka-influxdb.env
```

This generates a file containing the credentials for accessing the Kafka instance as environment variables.

```
## Generated by rhoas cli
RHOAS_SERVICE_ACCOUNT_CLIENT_ID=srvc-acct-abc1234-dsdf-244a-gt65-d4vd65784dsf
RHOAS_SERVICE_ACCOUNT_CLIENT_SECRET=g6d12345-9619-55ed-c23d-a2356c1fds9c
RHOAS_SERVICE_ACCOUNT_OAUTH_TOKEN_URL=https://identity.api.openshift.com/auth/realms/rhoas/protocol/openid-connect/token
```

The Apache Kafka to InfluxDB application needs permission to read from the `f1-telemetry-drivers`, `f1-telemetry-events` and `f1-telemetry-drivers-avg-speed` topics.
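A prefixed ACL applies to every topic whose name starts with the given string, compared literally. This means the `f1-` grant covers all three topics this application reads. The following is a minimal sketch of a check you could run before relying on a prefix grant. `topics_covered_by_prefix` is a hypothetical helper name.

```shell
# Minimal sketch: verify that every topic an application needs starts with the
# prefix used in a prefixed ACL. topics_covered_by_prefix is a hypothetical helper.
topics_covered_by_prefix() {
  if [ "$#" -lt 1 ]; then
    echo "usage: topics_covered_by_prefix PREFIX TOPIC..." >&2
    return 1
  fi
  local prefix="$1" topic rc=0
  shift
  for topic in "$@"; do
    case "$topic" in
      "$prefix"*) ;;  # quoted prefix is matched literally, not as a glob
      *)
        echo "not covered by prefix '$prefix': $topic" >&2
        rc=1
        ;;
    esac
  done
  return "$rc"
}
```

For example, `topics_covered_by_prefix f1- f1-telemetry-drivers f1-telemetry-events f1-telemetry-drivers-avg-speed` succeeds. Any topic named without the `f1-` prefix would be reported and would make the function fail.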

For simplicity, grant consumer access on all topics starting with the `f1-` prefix and on all consumer groups.

```shell
source ./formula1-kafka-influxdb.env
rhoas kafka acl grant-access -y --consumer --service-account "$RHOAS_SERVICE_ACCOUNT_CLIENT_ID" --topic-prefix f1- --group all
```

This creates the following ACL entries for the corresponding service account.

```
PRINCIPAL                                        PERMISSION   OPERATION   DESCRIPTION
------------------------------------------------ ------------ ----------- -------------------------
srvc-acct-abc1234-dsdf-244a-gt65-d4vd65784dsf    allow        describe    topic starts with "f1-"
srvc-acct-abc1234-dsdf-244a-gt65-d4vd65784dsf    allow        read        topic starts with "f1-"
srvc-acct-abc1234-dsdf-244a-gt65-d4vd65784dsf    allow        read        group is "*"
```

Create a service account for the Apache Kafka Streams applications by running the following command.

```shell
rhoas service-account create --short-description formula1-kafka-streams --file-format env --output-file ./formula1-kafka-streams.env
```

This generates a file containing the credentials for accessing the Kafka instance as environment variables.

```
## Generated by rhoas cli
RHOAS_SERVICE_ACCOUNT_CLIENT_ID=srvc-acct-93a071fc-7709-43ad-b0bd-431ce4f640ae
RHOAS_SERVICE_ACCOUNT_CLIENT_SECRET=5d614086-5562-4f19-9f83-e5e616e6e045
RHOAS_SERVICE_ACCOUNT_OAUTH_TOKEN_URL=https://identity.api.openshift.com/auth/realms/rhoas/protocol/openid-connect/token
```

The Apache Kafka Streams applications need permission to read from and write to multiple topics, such as `f1-telemetry-drivers`, `f1-telemetry-drivers-avg-speed` and `f1-telemetry-drivers-laps`.

For simplicity, grant both producer and consumer access on all topics starting with the `f1-` prefix and on all consumer groups.

```shell
source ./formula1-kafka-streams.env
rhoas kafka acl grant-access -y --producer --consumer --service-account "$RHOAS_SERVICE_ACCOUNT_CLIENT_ID" --topic-prefix f1- --group all
```

This creates the following ACL entries for the corresponding service account.

```
PRINCIPAL (7)                                    PERMISSION   OPERATION   DESCRIPTION
------------------------------------------------ ------------ ----------- -------------------------
srvc-acct-93a071fc-7709-43ad-b0bd-431ce4f640ae   allow        describe    topic starts with "f1-"
srvc-acct-93a071fc-7709-43ad-b0bd-431ce4f640ae   allow        read        topic starts with "f1-"
srvc-acct-93a071fc-7709-43ad-b0bd-431ce4f640ae   allow        read        group is "*"
srvc-acct-93a071fc-7709-43ad-b0bd-431ce4f640ae   allow        write       topic starts with "f1-"
srvc-acct-93a071fc-7709-43ad-b0bd-431ce4f640ae   allow        create      topic starts with "f1-"
srvc-acct-93a071fc-7709-43ad-b0bd-431ce4f640ae   allow        write       transactional-id is "*"
srvc-acct-93a071fc-7709-43ad-b0bd-431ce4f640ae   allow        describe    transactional-id is "*"
```

Start the UDP to Apache Kafka application by running the following command.

```shell
./formula1-run.sh \
  ./formula1-udp-kafka.env \
  "$(rhoas kafka describe --name formula1-kafka | jq -r .bootstrap_server_host)" \
  <PATH_TO_JAR>
```

Replace `<PATH_TO_JAR>` with the path to the application JAR (e.g. `/home/ppatiern/github/formula1-telemetry-kafka/udp-kafka/target/f1-telemetry-udp-kafka-1.0-SNAPSHOT-jar-with-dependencies.jar`).
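The bootstrap host is resolved inline through `rhoas kafka describe` and `jq`. If that lookup goes wrong, `jq -r` prints `null` for a missing field, or nothing at all when it receives no input. In either case the application would be started with an unusable address. The following is a minimal sketch that resolves the host once and fails early. `f1_bootstrap` and the `BOOTSTRAP` variable in the example are hypothetical names.

```shell
# Minimal sketch: resolve the bootstrap host once and fail early if it is empty
# or "null" (what jq -r prints for a missing field). f1_bootstrap is hypothetical.
f1_bootstrap() {
  local name="${1:-formula1-kafka}" host
  host=$(rhoas kafka describe --name "$name" | jq -r .bootstrap_server_host)
  if [ -z "$host" ] || [ "$host" = "null" ]; then
    echo "could not resolve bootstrap host for Kafka instance '$name'" >&2
    return 1
  fi
  printf '%s\n' "$host"
}

# Example (not executed here):
#   BOOTSTRAP=$(f1_bootstrap formula1-kafka) || exit 1
#   ./formula1-run.sh ./formula1-udp-kafka.env "$BOOTSTRAP" <PATH_TO_JAR>
```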

Start the Apache Kafka to InfluxDB application by running the following command.

```shell
./formula1-run.sh \
  ./formula1-kafka-influxdb.env \
  "$(rhoas kafka describe --name formula1-kafka | jq -r .bootstrap_server_host)" \
  <PATH_TO_JAR>
```

Replace `<PATH_TO_JAR>` with the path to the application JAR (e.g. `/home/ppatiern/github/formula1-telemetry-kafka/kafka-influxdb/target/f1-telemetry-kafka-influxdb-1.0-SNAPSHOT-jar-with-dependencies.jar`).
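Each application is started from an env file generated by `rhoas service-account create` plus the path to a JAR. The following is a minimal sketch of a pre-flight check. It confirms that the env file has values for the three `RHOAS_SERVICE_ACCOUNT_*` keys shown in the generated files and that the JAR exists. `check_run_inputs` is a hypothetical helper, and it makes no assumption about what `formula1-run.sh` does internally.

```shell
# Minimal sketch: sanity-check an env file and a JAR path before starting an
# application with ./formula1-run.sh. check_run_inputs is a hypothetical helper.
check_run_inputs() {
  local env_file="$1" jar="$2" key rc=0
  if [ ! -r "$env_file" ]; then
    echo "env file not readable: $env_file" >&2
    return 1
  fi
  # Keys as written by rhoas service-account create --file-format env.
  for key in RHOAS_SERVICE_ACCOUNT_CLIENT_ID RHOAS_SERVICE_ACCOUNT_CLIENT_SECRET \
             RHOAS_SERVICE_ACCOUNT_OAUTH_TOKEN_URL; do
    if ! grep -q "^${key}=." "$env_file"; then
      echo "$env_file has no value for $key" >&2
      rc=1
    fi
  done
  if [ ! -f "$jar" ]; then
    echo "application JAR not found: $jar" >&2
    rc=1
  fi
  return "$rc"
}

# Example (not executed here):
#   check_run_inputs ./formula1-kafka-influxdb.env <PATH_TO_JAR>
```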

Start one of the Apache Kafka Streams-based applications by running the following commands.

```shell
export F1_STREAMS_INTERNAL_REPLICATION_FACTOR=3
./formula1-run.sh \
  ./formula1-kafka-streams.env \
  "$(rhoas kafka describe --name formula1-kafka | jq -r .bootstrap_server_host)" \
  <PATH_TO_JAR>
```

Replace `<PATH_TO_JAR>` with the path to the application JAR (e.g. `/home/ppatiern/github/formula1-telemetry-kafka/streams-avg-speed/target/f1-telemetry-streams-avg-speed-1.0-SNAPSHOT-jar-with-dependencies.jar`).

The Quarkus-based Apache Kafka Streams application uses a different set of configuration parameters and can be started by running the following commands.

```shell
source ./formula1-kafka-streams.env
export KAFKA_BOOTSTRAP_SERVERS=$(rhoas kafka describe --name formula1-kafka | jq -r .bootstrap_server_host)
export QUARKUS_KAFKA_STREAMS_SASL_MECHANISM=PLAIN
export QUARKUS_KAFKA_STREAMS_SECURITY_PROTOCOL=SASL_SSL
export QUARKUS_KAFKA_STREAMS_SASL_JAAS_CONFIG="org.apache.kafka.common.security.plain.PlainLoginModule required username=\"${RHOAS_SERVICE_ACCOUNT_CLIENT_ID}\" password=\"${RHOAS_SERVICE_ACCOUNT_CLIENT_SECRET}\";"
java -jar <PATH_TO_JAR>
```

Replace `<PATH_TO_JAR>` with the path to `quarkus-run.jar` (e.g. `/home/ppatiern/github/formula1-telemetry-kafka/quarkus-streams-avg-speed/target/quarkus-app/quarkus-run.jar`).
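The following is a minimal sketch of a check that the variables used above are non-empty before running `java -jar`. It catches, for example, an env file that was not sourced in the current shell. `require_env` is a hypothetical helper. It is written in POSIX shell, so the indirect lookup uses `eval` on a name that is validated first.

```shell
# Minimal sketch: fail if any of the named shell variables is unset or empty.
# require_env is a hypothetical helper; names are validated before eval.
require_env() {
  local _re_name _re_value _re_rc=0
  for _re_name in "$@"; do
    case "$_re_name" in
      ''|[!A-Za-z_]*|*[!A-Za-z0-9_]*)
        echo "invalid variable name: '$_re_name'" >&2
        _re_rc=1
        continue
        ;;
    esac
    # Indirect lookup; safe because the name was validated above.
    eval "_re_value=\${$_re_name:-}"
    if [ -z "$_re_value" ]; then
      echo "variable $_re_name is not set or empty" >&2
      _re_rc=1
    fi
  done
  return "$_re_rc"
}

# Example (not executed here):
#   require_env RHOAS_SERVICE_ACCOUNT_CLIENT_ID RHOAS_SERVICE_ACCOUNT_CLIENT_SECRET \
#     KAFKA_BOOTSTRAP_SERVERS && java -jar <PATH_TO_JAR>
```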

To clean up the deployment, run the following script, which deletes the service accounts and the local env files.

```shell
./delete-service-accounts.sh
```

Next, delete the topics.

```shell
./delete-topics.sh
```

Finally, delete the Apache Kafka instance.

```shell
rhoas kafka delete -y --name formula1-kafka
```
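The three clean-up steps can be chained so that the sequence stops at the first failing step. The following is a minimal sketch. It assumes the scripts live in the directory passed as the first argument (the current directory by default). `f1_teardown` is a hypothetical helper name.

```shell
# Minimal sketch: run the clean-up steps in the order used above, stopping at
# the first failure. f1_teardown is a hypothetical helper; the scripts are
# assumed to be in the given directory (default: current directory).
f1_teardown() {
  local dir="${1:-.}" name="${2:-formula1-kafka}"
  "$dir/delete-service-accounts.sh" &&
    "$dir/delete-topics.sh" &&
    rhoas kafka delete -y --name "$name"
}

# Example (not executed here):
#   f1_teardown .
```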