This is the official implementation of the paper Training-Free Efficient Video Generation via Dynamic Token Carving.

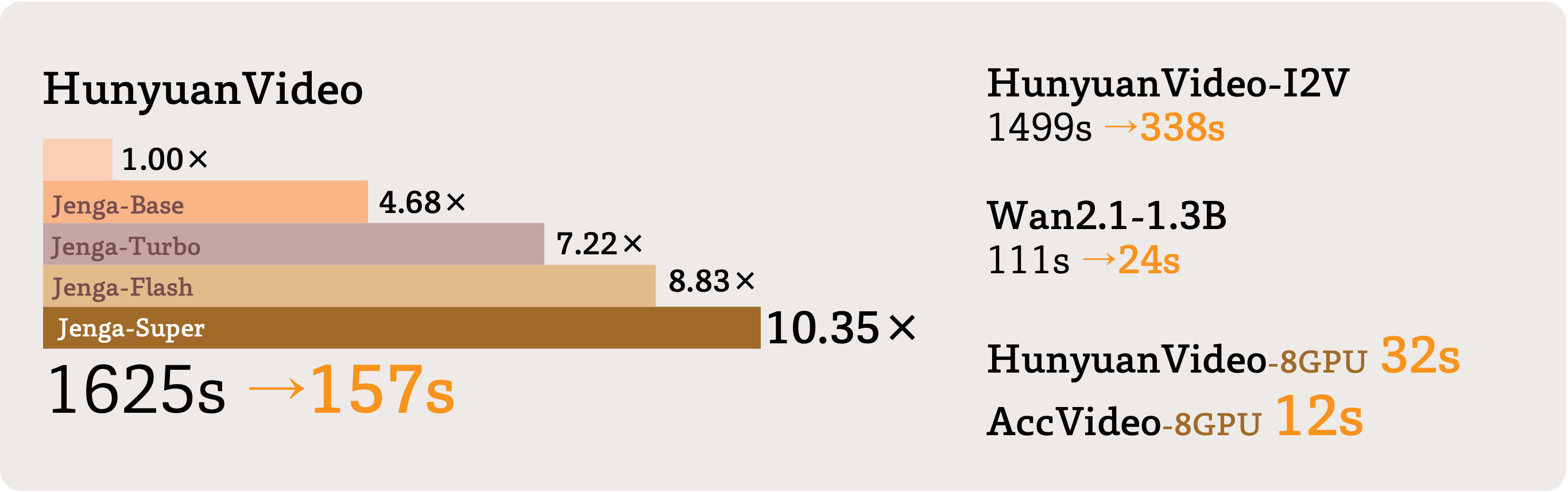

Jenga generates videos 4.68-10.35 times faster on a single GPU.

Please visit the project page for more video results.

- Model Adaptation
  - HunyuanVideo Inference
  - Multi-GPU Parallel Inference (faster inference on more GPUs)
  - HunyuanVideo-I2V Inference
  - Wan2.1
- Engineering Optimization
  - Quantization
  - ComfyUI
  - RoPE & Norm Kernel
  - FA3 Adaptation

Installation follows HunyuanVideo:

# 1. Create conda environment

conda create -n Jenga python==3.10.9

# 2. Activate the environment

conda activate Jenga

# 3. Install PyTorch and other dependencies using conda

# For CUDA 12.4

conda install pytorch==2.4.0 torchvision==0.19.0 torchaudio==2.4.0 pytorch-cuda=12.4 -c pytorch -c nvidia

# 4. Install pip dependencies

python -m pip install -r hy_requirements.txt

# 5. Install flash attention v2 for acceleration (requires CUDA 11.8 or above)

python -m pip install ninja

python -m pip install git+https://github.com/Dao-AILab/flash-attention.git@v2.6.3

# 6. Install xDiT for parallel inference (tested on H800 with CUDA 12.4)

python -m pip install xfuser==0.4.3.post3

python -m pip install yunchang==0.6.3.post1

Please follow the instructions in model_down_hy.md to download the model weights.
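Once the packages above are installed, a quick sanity check is to compare installed versions against the pins used in these steps. The sketch below uses only the standard library's importlib.metadata; the distribution name "flash_attn" for the flash-attention build is an assumption, and the check is a convenience, not part of the repository.

```python
from importlib import metadata

# Versions pinned in the installation steps of this README.
# ASSUMPTION: "flash_attn" is the distribution name registered by the flash-attention build.
PINNED = {
    "torch": "2.4.0",
    "torchvision": "0.19.0",
    "torchaudio": "2.4.0",
    "flash_attn": "2.6.3",
    "xfuser": "0.4.3.post3",
    "yunchang": "0.6.3.post1",
}


def check_versions(pinned=PINNED):
    """Map each distribution to (wanted, found, ok); found is None if it is not installed."""
    report = {}
    for dist, wanted in pinned.items():
        try:
            found = metadata.version(dist)
        except metadata.PackageNotFoundError:
            found = None
        # Drop local build tags such as "+cu124" before comparing.
        ok = found is not None and found.split("+")[0] == wanted
        report[dist] = (wanted, found, ok)
    return report


if __name__ == "__main__":
    for dist, (wanted, found, ok) in check_versions().items():
        print(f"{'OK ' if ok else 'BAD'} {dist}: wanted {wanted}, found {found}")
```

A BAD line for torch usually means the conda step resolved a different PyTorch build than the one pinned above.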

bash scripts/hyvideo_jenga_base.sh # Jenga Base (Opt. 310s)

# bash scripts/hyvideo_jenga_turbo.sh # Jenga Turbo

# bash scripts/hyvideo_jenga_flash.sh # Jenga Flash

# bash scripts/hyvideo_jenga_3stage.sh # Jenga 3Stage

Inference time for different settings (DiT time, single H800, after warmup):

| HunyuanVideo | Jenga-Base | Jenga-Turbo | Jenga-Flash | Jenga-3Stage |
|---|---|---|---|---|
| 1625s | 310s (5.24x) | 225s (7.22x) | 184s (8.82x) | 157s (10.35x) |

To use your own prompt, just change --prompt. If you encounter out-of-memory (OOM) errors, try adding --use-cpu-offload. The following command runs Jenga-Turbo:

CUDA_VISIBLE_DEVICES=0 python3 -u ./jenga_hyvideo.py \
--video-size 720 1280 \
--video-length 125 \
--infer-steps 50 \
--prompt "A cat walks on the grass, realistic style." \
--seed 42 \
--embedded-cfg-scale 6.0 \
--flow-shift 7.0 \
--flow-reverse \
--sa-drop-rates 0.7 0.8 \
--p-remain-rates 0.3 \
--post-fix "Jenga_Turbo" \
--save-path ./results/hyvideo \
--res-rate-list 0.75 1.0 \
--step-rate-list 0.5 1.0 \
--scheduler-shift-list 7 9

We provide a set of scripts that run on 8 GPUs (a further 5-6x speedup over a single GPU):

bash scripts/hyvide_multigpu_jenga_base.sh

# bash scripts/hyvide_multigpu_jenga_turbo.sh

# bash scripts/hyvide_multigpu_jenga_flash.sh

# bash scripts/hyvide_multigpu_jenga_3stage.sh

To customize the settings (Jenga-Turbo as an example):

export NPROC_PER_NODE=8
export ULYSSES_DEGREE=8 # number of GPUs

CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7 torchrun --nproc_per_node=$NPROC_PER_NODE ./jenga_hyvideo_multigpu.py \
--video-size 720 1280 \
--video-length 125 \
--infer-steps 50 \
--prompt "The camera rotates around a large stack of vintage televisions all showing different programs -- 1950s sci-fi movies, horror movies, news, static, a 1970s sitcom, etc, set inside a large New York museum gallery." \
--seed 42 \
--embedded-cfg-scale 6.0 \
--flow-shift 7.0 \
--flow-reverse \
--sa-drop-rates 0.75 0.85 \
--p-remain-rates 0.3 \
--post-fix "Jenga_Turbo" \
--save-path ./results/hyvideo_multigpu \
--res-rate-list 0.75 1.0 \
--step-rate-list 0.5 1.0 \
--ulysses-degree $ULYSSES_DEGREE \
--scheduler-shift-list 7 9

Inference time for different settings (DiT time, 8xH800, after warmup):

| HunyuanVideo | Jenga-Base | Jenga-Turbo | Jenga-Flash | Jenga-3Stage |
|---|---|---|---|---|
| 225s | 55s (4.09x) | 40s (5.62x) | 38s (5.92x) | 32s (7.03x) |
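Each speedup in the two tables is the HunyuanVideo time divided by the Jenga time on the same hardware. The short script below recomputes those ratios from the reported DiT times and also derives the extra gain from moving each setting from one H800 to eight; it only does arithmetic on the numbers in this README.

```python
# DiT times in seconds (after warmup), copied from the single-GPU and 8-GPU tables.
SINGLE_H800 = {"HunyuanVideo": 1625, "Jenga-Base": 310, "Jenga-Turbo": 225,
               "Jenga-Flash": 184, "Jenga-3Stage": 157}
EIGHT_H800 = {"HunyuanVideo": 225, "Jenga-Base": 55, "Jenga-Turbo": 40,
              "Jenga-Flash": 38, "Jenga-3Stage": 32}


def speedups(times, baseline="HunyuanVideo"):
    """Speedup of every setting relative to the baseline on the same hardware."""
    return {name: times[baseline] / t for name, t in times.items()}


def gpu_scaling(single, multi):
    """Extra speedup from running the same setting on 8 GPUs instead of 1."""
    return {name: single[name] / multi[name] for name in single}


if __name__ == "__main__":
    s1, s8 = speedups(SINGLE_H800), speedups(EIGHT_H800)
    scale = gpu_scaling(SINGLE_H800, EIGHT_H800)
    for name in SINGLE_H800:
        print(f"{name:<13} 1 GPU {s1[name]:5.2f}x | 8 GPUs {s8[name]:5.2f}x | 1->8 GPUs {scale[name]:4.2f}x")
```

The recomputed speedups match both tables to within about 0.01. The 1-to-8-GPU gain works out to about 5.6x for Jenga-Base and Jenga-Turbo and about 4.8-4.9x for Jenga-Flash and Jenga-3Stage.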

Because the VAE time is constant (Jenga only accelerates the DiT), we recommend assigning each prompt to a single GPU for batch sampling rather than splitting one prompt across GPUs. Please check the sample script for Jenga-Turbo.
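The one-prompt-per-GPU idea can be sketched as a round-robin scheduler. This is a hypothetical illustration, not the contents of hyvideo_batched_sample.sh: it assumes jenga_hyvideo.py can be invoked once per prompt with the Jenga-Turbo flags from the single-GPU command above, and the helper names plan_jobs, run_queue and launch are made up for this sketch. Note that each invocation reloads the model weights, which a real batched script may avoid.

```python
import itertools
import os
import subprocess
from concurrent.futures import ThreadPoolExecutor

# Jenga-Turbo flags copied from the single-GPU command in this README.
TURBO_ARGS = [
    "--video-size", "720", "1280", "--video-length", "125", "--infer-steps", "50",
    "--seed", "42", "--embedded-cfg-scale", "6.0", "--flow-shift", "7.0", "--flow-reverse",
    "--sa-drop-rates", "0.7", "0.8", "--p-remain-rates", "0.3", "--post-fix", "Jenga_Turbo",
    "--save-path", "./results/hyvideo", "--res-rate-list", "0.75", "1.0",
    "--step-rate-list", "0.5", "1.0", "--scheduler-shift-list", "7", "9",
]


def plan_jobs(prompts, gpu_ids):
    """Hypothetical helper: round-robin each whole prompt onto exactly one GPU."""
    queues = {gpu: [] for gpu in gpu_ids}
    for prompt, gpu in zip(prompts, itertools.cycle(gpu_ids)):
        queues[gpu].append(["python3", "-u", "./jenga_hyvideo.py", *TURBO_ARGS, "--prompt", prompt])
    return queues


def run_queue(gpu, commands):
    # Prompts assigned to the same GPU run one after another.
    env = {**os.environ, "CUDA_VISIBLE_DEVICES": str(gpu)}
    for argv in commands:
        subprocess.run(argv, env=env, check=True)


def launch(queues):
    # One worker thread per GPU, so different GPUs sample in parallel.
    with ThreadPoolExecutor(max_workers=len(queues)) as pool:
        futures = [pool.submit(run_queue, gpu, cmds) for gpu, cmds in queues.items()]
        for future in futures:
            future.result()  # re-raise the first failure, if any
```

For example, launch(plan_jobs(prompts, range(8))) keeps all eight cards busy, each decoding its own videos with the VAE, instead of eight cards waiting on one shared VAE pass.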

bash ./scripts/hyvideo_batched_sample.sh

For AccVideo, the general pipeline is the same: download the weights from Hugging Face to ckpts/AccVideo.

Then run the script:

bash ./scripts/accvideo_jenga.sh

The general idea of Jenga is to reduce token interactions in Diffusion Transformers (DiTs). An overview is shown below.

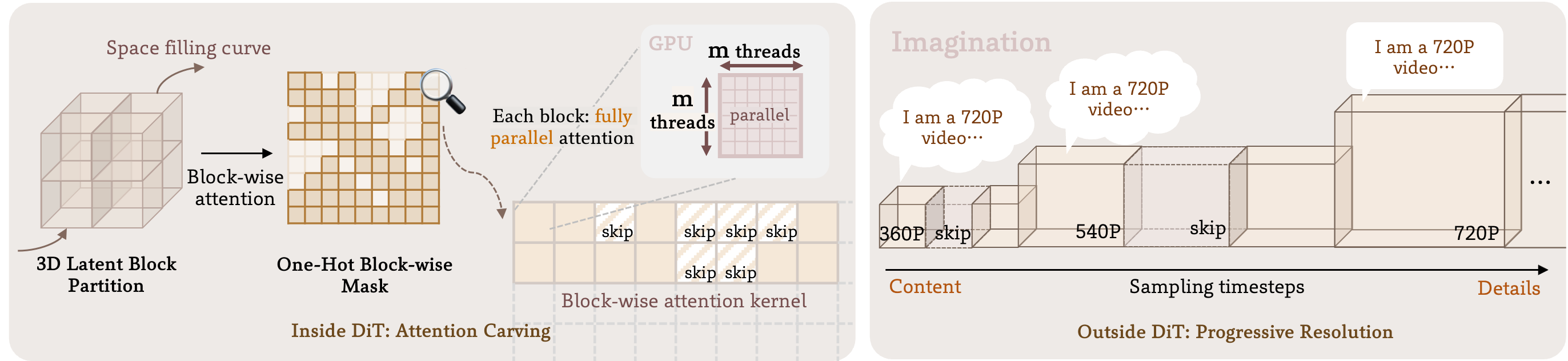

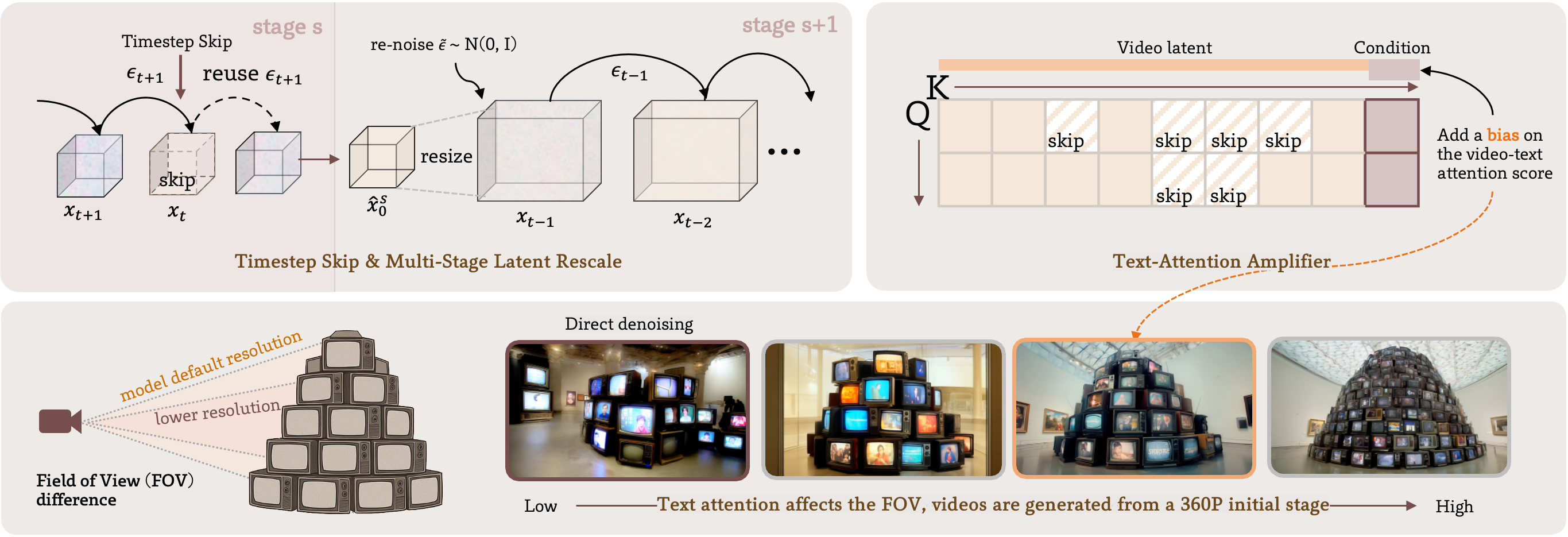

The left part illustrates attention carving. A 3D video latent is partitioned into local blocks before being passed to the Transformer layers. Block-wise attention is computed to obtain head-aware sparse block-selection masks, and dense parallel attention is then performed within each selected block. The right part illustrates the Progressive Resolution strategy, which compresses the number of tokens and timesteps to make generation efficient.
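To make the two-level idea concrete, here is a minimal pure-Python sketch of block-sparse attention for a single head: queries and keys are mean-pooled per block, a softmax over block scores ranks the key blocks, each query block keeps the top (1 - drop_rate) share, and ordinary dense attention runs over the kept tokens only. Mean pooling, top-k selection, and the name drop_rate are assumptions for illustration; its relation to --sa-drop-rates is not confirmed. A head-aware version would repeat the selection per head, and the real implementation uses a customized GPU kernel rather than Python loops.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def mean_pool(vectors, block_size):
    """Average each run of `block_size` consecutive tokens into one block vector."""
    pooled = []
    for start in range(0, len(vectors), block_size):
        block = vectors[start:start + block_size]
        pooled.append([sum(col) / len(block) for col in zip(*block)])
    return pooled


def select_blocks(q, k, block_size, drop_rate):
    """For each query block, keep the highest-scoring (1 - drop_rate) share of key blocks."""
    scale = 1.0 / math.sqrt(len(q[0]))
    q_blocks, k_blocks = mean_pool(q, block_size), mean_pool(k, block_size)
    n_keep = max(1, round((1.0 - drop_rate) * len(k_blocks)))
    selection = []
    for qb in q_blocks:
        probs = softmax([dot(qb, kb) * scale for kb in k_blocks])
        ranked = sorted(range(len(k_blocks)), key=lambda j: probs[j], reverse=True)
        selection.append(set(ranked[:n_keep]))
    return selection


def block_sparse_attention(q, k, v, block_size, drop_rate):
    """Dense attention, except each query only sees tokens in its selected key blocks."""
    scale = 1.0 / math.sqrt(len(q[0]))
    selection = select_blocks(q, k, block_size, drop_rate)
    out = []
    for i, qi in enumerate(q):
        keep = selection[i // block_size]
        idx = [j for j in range(len(k)) if j // block_size in keep]
        weights = softmax([dot(qi, k[j]) * scale for j in idx])
        out.append([sum(w * v[j][c] for w, j in zip(weights, idx)) for c in range(len(v[0]))])
    return out, selection
```

With drop_rate=0.0 every key block is kept and the output equals ordinary dense attention, which makes a handy correctness check; raising drop_rate shrinks each query's key set to roughly (1 - drop_rate) of the tokens.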

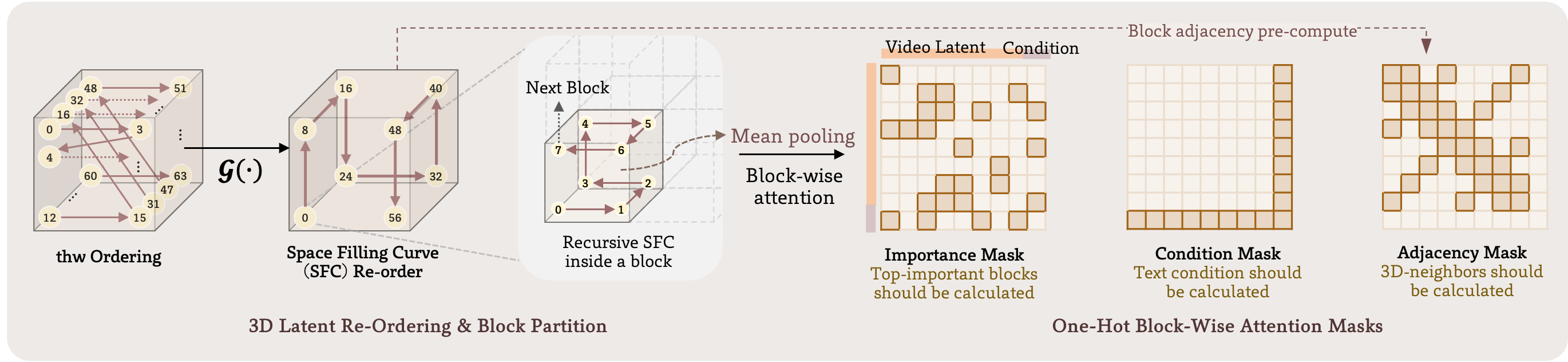

Attention Carving (AttenCarve). A toy example of a 4x4x4 latent, where m=8 latent items form a block. Left: the latent is re-ordered in 3D and partitioned into blocks via space-filling curves (SFC). Right: after the block-wise attention, we construct the Importance Mask; combined with the pre-computed Condition Mask and Adjacency Mask, it yields a block-wise dense attention mask that is passed to a customized kernel for device-efficient attention.
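The toy example can be reproduced in a few lines. The sketch below swaps in a Z-order (Morton) curve for brevity; the repository acknowledges Gilbert, a generalized Hilbert curve, which preserves locality better for arbitrary sizes. On a 4x4x4 latent with m=8, Z-order blocks are exactly 2x2x2 cubes. The adjacency rule (block centroids within a radius), the single hypothetical text block in the condition mask, and combining the three masks by logical OR are all assumptions; the diagonal importance mask is a placeholder for the one produced by block-wise attention.

```python
import math


def morton_code(t, h, w, bits):
    """Interleave the bits of (t, h, w) into a single Z-order (Morton) index."""
    code = 0
    for b in range(bits):
        code |= ((t >> b) & 1) << (3 * b + 2)
        code |= ((h >> b) & 1) << (3 * b + 1)
        code |= ((w >> b) & 1) << (3 * b)
    return code


def sfc_blocks(T, H, W, block_size):
    """Re-order a T x H x W latent along a Z-order curve, then cut it into blocks of m tokens."""
    bits = (max(T, H, W) - 1).bit_length()
    coords = [(t, h, w) for t in range(T) for h in range(H) for w in range(W)]
    coords.sort(key=lambda c: morton_code(*c, bits))
    return [coords[i:i + block_size] for i in range(0, len(coords), block_size)]


def adjacency_mask(blocks, radius):
    """Blocks whose token centroids lie within `radius` of each other always attend."""
    cents = [tuple(sum(axis) / len(b) for axis in zip(*b)) for b in blocks]
    return [[math.dist(a, c) <= radius for c in cents] for a in cents]


def condition_mask(n_video, n_text):
    """Text (condition) blocks attend to, and are attended by, every block."""
    n = n_video + n_text
    return [[i >= n_video or j >= n_video for j in range(n)] for i in range(n)]


def pad_mask(mask, n):
    """Embed a smaller video-only mask in the top-left corner of an n x n mask."""
    size = len(mask)
    return [[i < size and j < size and mask[i][j] for j in range(n)] for i in range(n)]


def combine_masks(*masks):
    """Keep a block pair if any mask keeps it (logical OR)."""
    n = len(masks[0])
    return [[any(m[i][j] for m in masks) for j in range(n)] for i in range(n)]


if __name__ == "__main__":
    blocks = sfc_blocks(4, 4, 4, block_size=8)   # toy latent from the figure: 64 tokens, m = 8
    n_video, n_text = len(blocks), 1            # one hypothetical text block
    n = n_video + n_text
    importance = [[i == j for j in range(n)] for i in range(n)]  # placeholder importance mask
    final = combine_masks(importance, condition_mask(n_video, n_text),
                          pad_mask(adjacency_mask(blocks, radius=2.0), n))
    print(sum(map(sum, final)), "of", n * n, "block pairs kept")
```

The resulting boolean block mask is what a block-sparse kernel would consume: each True entry marks a query-block/key-block pair computed densely.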

Progressive Resolution (ProRes). Left: a brief illustration of the stage switch and timestep skip. Before rescaling in stage s, we revert the latent to a clean state.
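A rough sketch of the stage logic, under loudly stated assumptions: --step-rate-list is read as the cumulative fraction of steps at which each stage ends, --res-rate-list as the spatial scale of each stage, and --scheduler-shift-list as a per-stage SD3-style time shift applied to a linear 1 to 0 sigma schedule. Sizes are rounded down to a multiple of 16, an assumed constraint. The stage switch assumes a rectified-flow parameterization (x_t = (1 - sigma) x0 + sigma eps, velocity = eps - x0), ignores the sign convention implied by --flow-reverse, and does not model the timestep skip. None of these function names come from the repository.

```python
def shift_sigma(sigma, shift):
    """SD3-style time shift: larger `shift` keeps the noise level high for longer."""
    return shift * sigma / (1.0 + (shift - 1.0) * sigma)


def plan_prores(infer_steps, res_rates, step_rates, shifts, height, width, multiple=16):
    """Split one denoising budget into resolution stages (ASSUMED flag semantics, see above)."""
    assert len(res_rates) == len(step_rates) == len(shifts)
    base = [1.0 - i / infer_steps for i in range(infer_steps + 1)]  # linear sigmas 1 -> 0
    plan, start = [], 0
    for res, frac, shift in zip(res_rates, step_rates, shifts):
        end = round(frac * infer_steps)
        size = (int(height * res) // multiple * multiple, int(width * res) // multiple * multiple)
        sigmas = [shift_sigma(s, shift) for s in base[start:end + 1]]
        plan.append({"size": size, "steps": (start, end), "sigmas": sigmas})
        start = end
    return plan


def revert_and_renoise(x_t, velocity, sigma, next_sigma, noise, upsample):
    """Stage switch: recover the clean latent, upsample it, then re-noise it for the next stage.

    Assumes x_t = (1 - sigma) * x0 + sigma * eps and velocity = eps - x0,
    so x0 = x_t - sigma * velocity.
    """
    x0 = [xt - sigma * v for xt, v in zip(x_t, velocity)]
    x0_up = upsample(x0)
    return [(1.0 - next_sigma) * a + next_sigma * n for a, n in zip(x0_up, noise)]


def nearest_upsample(xs, n_out):
    """1-D nearest-neighbour resize, standing in for the real 3-D latent interpolation."""
    return [xs[i * len(xs) // n_out] for i in range(n_out)]


if __name__ == "__main__":
    # Values from the Jenga-Turbo command: 50 steps, --res-rate-list 0.75 1.0,
    # --step-rate-list 0.5 1.0, --scheduler-shift-list 7 9, 720x1280 output.
    for stage in plan_prores(50, [0.75, 1.0], [0.5, 1.0], [7, 9], 720, 1280):
        print(stage["size"], stage["steps"], round(stage["sigmas"][0], 3), round(stage["sigmas"][-1], 3))
```

Under these assumptions the Turbo setting spends 25 steps at reduced resolution, then restarts the full-resolution stage at a slightly higher noise level (0.9 versus 0.875), which is the room the re-noising step needs after upsampling.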

If you find Jenga useful for your research and applications, please cite using this BibTeX:

@article{zhang2025trainingfreeefficientvideogeneration,
  title={Training-Free Efficient Video Generation via Dynamic Token Carving},
  author={Yuechen Zhang and Jinbo Xing and Bin Xia and Shaoteng Liu and Bohao Peng and Xin Tao and Pengfei Wan and Eric Lo and Jiaya Jia},
  journal={arXiv preprint arXiv:2505.16864},
  year={2025}
}

We would like to thank the contributors to the HunyuanVideo, HunyuanVideo-I2V, Wan2.1, AccVideo, MInference, Gilbert and HuggingFace repositories for their open research and exploration. We also thank the Tencent Hunyuan Multimodal team for their help with the text encoder.