PyTorch version of Parallel Actor Critic

An attempt to solve the MinecraftBasic-v0 task using A2C (Advantage Actor Critic). The Gym environment code is copied and modified from https://github.com/openai/baselines.
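The "parallel" part of A2C comes from stepping several copies of the environment in lockstep and batching their transitions. The sketch below is a minimal, sequential illustration of that idea, written in the spirit of the vectorized environments in openai/baselines (where `SubprocVecEnv` runs each copy in its own process). `CountdownEnv` and `SimpleVecEnv` are hypothetical names, not classes from this repository or from baselines.

```python
from typing import List, Tuple


class CountdownEnv:
    """Hypothetical toy environment: each episode ends after `length` steps."""

    def __init__(self, length: int):
        self.length = length
        self.t = 0

    def reset(self) -> int:
        self.t = 0
        return self.t

    def step(self, action: int) -> Tuple[int, float, bool, dict]:
        self.t += 1
        reward = float(action)  # toy reward: simply echo the action
        done = self.t >= self.length
        return self.t, reward, done, {}


class SimpleVecEnv:
    """Steps several environments in lockstep, one action per environment.

    Finished environments are reset immediately, so every call returns a
    fresh observation for each slot while still reporting the done flag.
    """

    def __init__(self, envs: List[CountdownEnv]):
        self.envs = envs

    def reset(self) -> List[int]:
        return [env.reset() for env in self.envs]

    def step(self, actions: List[int]):
        obs, rewards, dones, infos = [], [], [], []
        for env, action in zip(self.envs, actions):
            ob, reward, done, info = env.step(action)
            if done:
                ob = env.reset()  # auto-reset; the terminal flag is kept below
            obs.append(ob)
            rewards.append(reward)
            dones.append(done)
            infos.append(info)
        return obs, rewards, dones, infos


if __name__ == "__main__":
    venv = SimpleVecEnv([CountdownEnv(2), CountdownEnv(3)])
    print(venv.reset())       # [0, 0]
    print(venv.step([1, 1]))  # ([1, 1], [1.0, 1.0], [False, False], [{}, {}])
    print(venv.step([0, 1]))  # ([0, 2], [0.0, 1.0], [True, False], [{}, {}])
```

Running the copies in separate processes, as baselines does, changes how the work is scheduled but not this step/reset contract.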

Requirements

- gym: https://github.com/openai/gym

- minecraft-py: https://github.com/tambetm/minecraft-py

- gym_minecraft: https://github.com/tambetm/gym-minecraft

- pytorch: https://github.com/pytorch/pytorch

Python 2.7 is preferred when using minecraft-py.

Run

- Minecraft environment:

python run_minecraft.py

- LunarLander environment:

python run_lunarlander.py

Parameters such as the number of processes, the discount factor (gamma), and the learning rate can be adjusted.
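As a minimal sketch of where gamma typically enters an A2C update, the functions below compute bootstrapped n-step discounted returns and advantages for one environment's rollout. They assume the common convention that a `done` flag at step t cuts the return at that step; `discounted_returns` and `advantages` are hypothetical helpers, not functions from the run scripts.

```python
from typing import List


def discounted_returns(rewards: List[float], dones: List[bool],
                       bootstrap_value: float, gamma: float = 0.99) -> List[float]:
    """Compute R_t = r_t + gamma * R_{t+1} * (1 - done_t), backwards in time.

    `bootstrap_value` is the critic's estimate V(s_T) of the state reached
    after the last collected step. It is discarded whenever an episode ended,
    so returns never leak across episode boundaries.
    """
    returns = [0.0] * len(rewards)
    running = bootstrap_value
    for t in reversed(range(len(rewards))):
        if dones[t]:
            running = 0.0  # episode ended at step t: no future reward
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


def advantages(returns: List[float], values: List[float]) -> List[float]:
    """A_t = R_t - V(s_t): how much better the outcome was than predicted."""
    return [r - v for r, v in zip(returns, values)]


if __name__ == "__main__":
    print(discounted_returns([1.0, 1.0, 1.0], [False, False, False], 0.0, gamma=0.5))
    # [1.75, 1.5, 1.0]
    print(discounted_returns([1.0, 1.0, 1.0], [False, True, False], 2.0, gamma=0.5))
    # [1.5, 1.0, 2.0]
```

In a typical A2C update the advantages weight the policy-gradient term, while the returns serve as regression targets for the critic; a larger gamma makes the agent care more about rewards further in the future.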

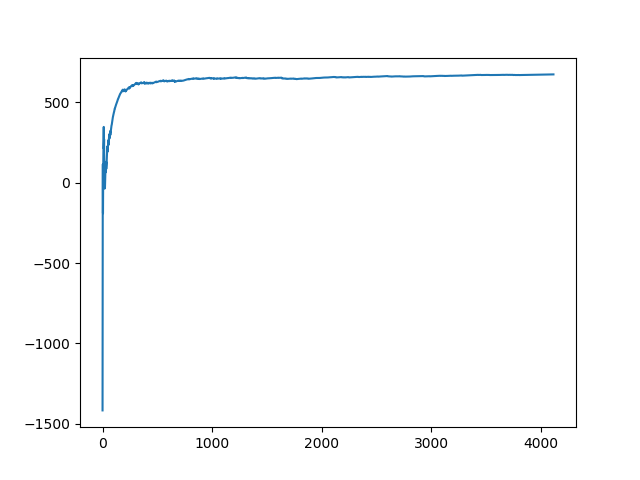

Results

- Minecraft:

The recorded results are from episodes 0 (failed), 400, 800, 1200, 1600, 2000, 2400, 2800, 3200, and 3600. In the last recorded episode, the agent navigates to the goal quickly. However, the result is not very promising: the agent still needs more than 20 steps to reach the goal. After the second iteration, the agent quickly settled on a sub-optimal policy.

- LunarLander: in progress.

Issues

Unlike Atari and other OpenAI Gym environments, Minecraft does not pause and wait for the agent to execute an action. As a result, when too many processes are opened, the agents miss many observations, which degrades performance, as discussed in tambetm/gym-minecraft#3.

One workaround is to set the tick to a higher value in the task's mission markup (XML).
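A minimal sketch of patching a mission file before it is loaded, assuming Malmo's mission schema, where the tick length is `ModSettings/MsPerTick` (default 50 ms) inside the `http://ProjectMalmo.microsoft.com` namespace. A higher value slows the game down, so each agent misses fewer frames at the cost of wall-clock speed. `set_ms_per_tick` is a hypothetical helper, and the element ordering (`ModSettings` right after `About`, `MsPerTick` first inside it) is our reading of the schema, not something this repository confirms.

```python
import xml.etree.ElementTree as ET

MALMO_NS = "http://ProjectMalmo.microsoft.com"  # assumed Malmo mission namespace


def _q(tag: str) -> str:
    """Qualify a tag name with the Malmo namespace."""
    return "{%s}%s" % (MALMO_NS, tag)


def set_ms_per_tick(mission_xml: str, ms_per_tick: int) -> str:
    """Return a copy of a mission XML string with ModSettings/MsPerTick set."""
    ET.register_namespace("", MALMO_NS)  # serialize without an ns0: prefix
    root = ET.fromstring(mission_xml)

    mod_settings = root.find(_q("ModSettings"))
    if mod_settings is None:
        mod_settings = ET.Element(_q("ModSettings"))
        about = root.find(_q("About"))
        # Assumed schema order: ModSettings directly follows About.
        index = list(root).index(about) + 1 if about is not None else 0
        root.insert(index, mod_settings)

    tick = mod_settings.find(_q("MsPerTick"))
    if tick is None:
        tick = ET.Element(_q("MsPerTick"))
        mod_settings.insert(0, tick)  # assumed to be the first child
    tick.text = str(ms_per_tick)
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    mission = ('<Mission xmlns="http://ProjectMalmo.microsoft.com">'
               '<About><Summary>basic</Summary></About>'
               '<ServerSection/></Mission>')
    print(set_ms_per_tick(mission, 100))
```

The patched string could then be written back to the task's mission file; how gym-minecraft picks up that file is left as-is here.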