This repository is the official implementation of the following paper:

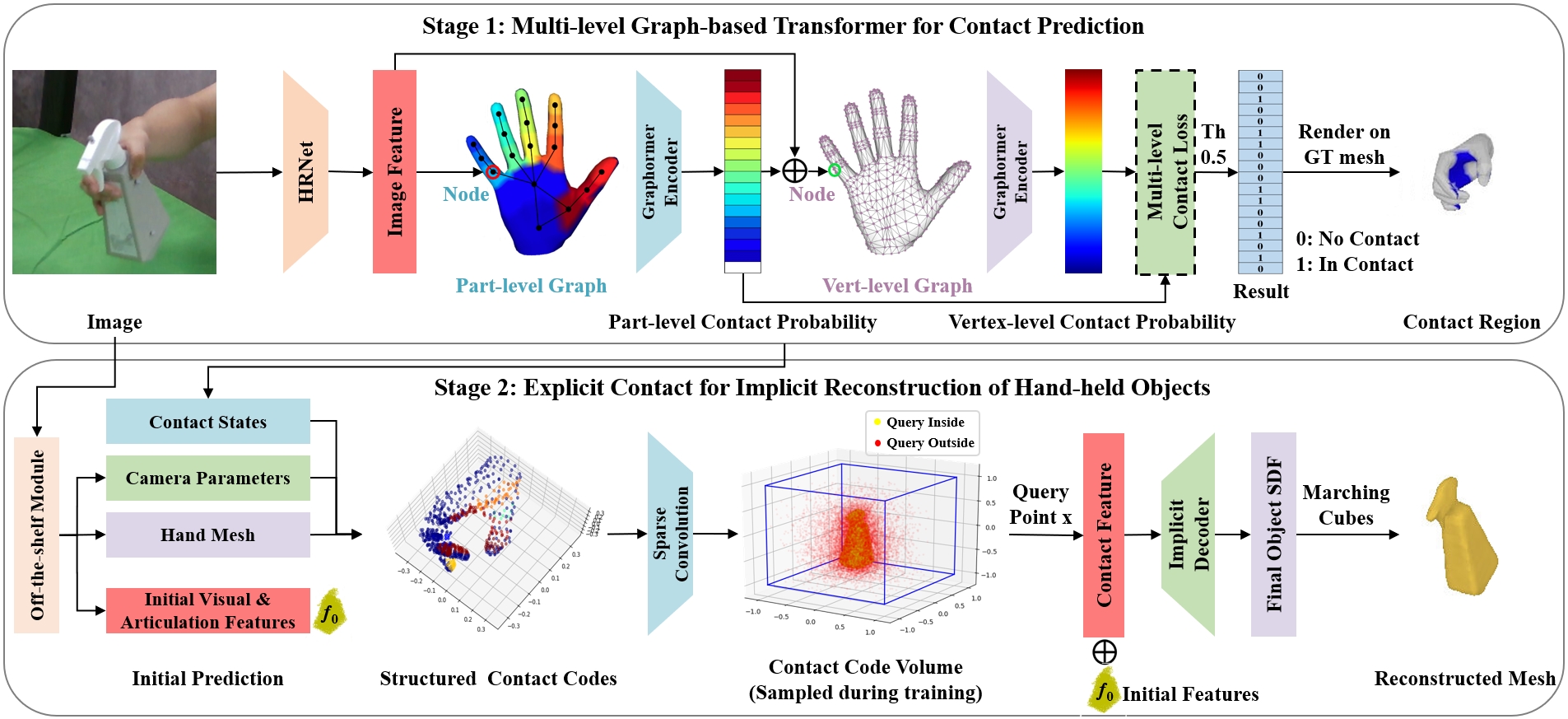

Learning Explicit Contact for Implicit Reconstruction of Hand-held Objects from Monocular Images

Junxing Hu, Hongwen Zhang, Zerui Chen, Mengcheng Li, Yunlong Wang, Yebin Liu, Zhenan Sun

AAAI, 2024

- Python 3.8

conda create --no-default-packages -n choi python=3.8

conda activate choi

- PyTorch is tested on version 1.8.0

conda install pytorch==1.8.0 torchvision==0.9.0 cudatoolkit=11.1.1 -c pytorch -c conda-forge

- Other packages are listed in `requirements.txt`

pip install -r requirements.txt
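After installation, a quick sanity check can confirm that the interpreter and PyTorch match the versions listed above. The following is a minimal sketch, not part of the repository; the helper names `version_matches` and `check_environment` are hypothetical.

```python
import sys

# Versions stated in the installation steps; adjust if you install others.
EXPECTED_PYTHON = (3, 8)
EXPECTED_TORCH = "1.8.0"


def version_matches(installed, expected):
    """Compare versions, ignoring local build suffixes such as '+cu111'."""
    return installed.split("+")[0] == expected


def check_environment():
    """Return a list of human-readable problems (empty if all checks pass)."""
    problems = []
    found = sys.version_info[:2]
    if found != EXPECTED_PYTHON:
        problems.append(f"Python {found[0]}.{found[1]} found, 3.8 expected")
    try:
        import torch
    except ImportError:
        problems.append("PyTorch is not installed")
    else:
        if not version_matches(torch.__version__, EXPECTED_TORCH):
            problems.append(
                f"PyTorch {torch.__version__} found, {EXPECTED_TORCH} expected"
            )
    return problems


if __name__ == "__main__":
    for problem in check_environment():
        print("WARNING:", problem)
```

A mismatch is reported as a warning only; other versions may work but are not the ones this README states as tested.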

- Unzip `weights.zip`; the pre-trained model is placed in the `./weights/ho3d/checkpoints` directory
- Unzip `data.zip`; the processed data and corresponding SDF files are placed in the `./data` directory
- Download the HO3D dataset and put it into the `./data/ho3d` directory
- Download the MANO model `MANO_RIGHT.pkl` and put it into the `./externals/mano` directory
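Before running evaluation, it can help to verify that the files and directories named in this README are in place. The sketch below is an illustrative helper, not part of the repository; `find_missing` is a hypothetical name, and the checkpoint filename is taken from the evaluation command in this README.

```python
from pathlib import Path

# Paths named in this README, relative to the repository root.
EXPECTED_PATHS = [
    "weights/ho3d/checkpoints/ho3d_weight.ckpt",
    "data",
    "data/ho3d",
    "externals/mano/MANO_RIGHT.pkl",
]


def find_missing(root=".", expected=EXPECTED_PATHS):
    """Return the expected paths that do not exist under `root`."""
    root = Path(root)
    return [p for p in expected if not (root / p).exists()]


if __name__ == "__main__":
    missing = find_missing()
    if missing:
        print("Missing:")
        for path in missing:
            print("  ", path)
    else:
        print("All expected paths are present.")
```

The check only tests for existence; it does not validate the contents of the archives or the dataset.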

- To evaluate our model on HO3D, run:

python -m models.choi --config-file experiments/ho3d.yaml --ckpt weights/ho3d/checkpoints/ho3d_weight.ckpt

- The resulting file is generated in the `./output` directory
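If you prefer to launch the evaluation from a Python script (for example, to loop over several checkpoints), the command above can be assembled programmatically. This is a minimal sketch; `build_eval_command` and `run_evaluation` are hypothetical helpers, and only the two flags shown in this README are used.

```python
import subprocess
import sys


def build_eval_command(
    config_file="experiments/ho3d.yaml",
    ckpt="weights/ho3d/checkpoints/ho3d_weight.ckpt",
):
    """Build the evaluation command from this README as an argument list."""
    return [
        sys.executable, "-m", "models.choi",
        "--config-file", config_file,
        "--ckpt", ckpt,
    ]


def run_evaluation(**kwargs):
    """Run the evaluation; must be called from the repository root."""
    # check=True raises CalledProcessError if the evaluation exits non-zero.
    subprocess.run(build_eval_command(**kwargs), check=True)
```

Calling `run_evaluation()` from the repository root is intended to be equivalent to the shell command above, using the current Python interpreter.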

Our method achieves the following performance on the HO3D test set:

| Method | F@5mm | F@10mm | Chamfer Distance (mm) |
|---|---|---|---|
| CHOI (Ours) | 0.393 | 0.633 | 0.646 |
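For readers unfamiliar with the metrics in the table, the sketch below shows one common way to compute an F-score at a distance threshold and a symmetric Chamfer distance between two point sets. It is an illustration in pure Python, not the evaluation code of this repository; the exact convention used for the reported numbers (for example, squared distances, point sampling, or scaling) may differ.

```python
import math


def nearest_distances(src, dst):
    """For each point in src, the Euclidean distance to its nearest point in dst."""
    return [min(math.dist(p, q) for q in dst) for p in src]


def f_score(pred, gt, threshold):
    """Harmonic mean of precision and recall at the given distance threshold."""
    d_pred = nearest_distances(pred, gt)  # predicted -> ground truth
    d_gt = nearest_distances(gt, pred)    # ground truth -> predicted
    precision = sum(d <= threshold for d in d_pred) / len(d_pred)
    recall = sum(d <= threshold for d in d_gt) / len(d_gt)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def chamfer_distance(pred, gt):
    """Sum of the mean nearest-neighbor distances in both directions."""
    d_pred = nearest_distances(pred, gt)
    d_gt = nearest_distances(gt, pred)
    return sum(d_pred) / len(d_pred) + sum(d_gt) / len(d_gt)


if __name__ == "__main__":
    # Toy point sets in millimeters (made up for illustration only).
    pred = [(0, 0, 0), (10, 0, 0)]
    gt = [(0, 0, 3), (20, 0, 0)]
    print(f_score(pred, gt, 5))        # 0.5
    print(f_score(pred, gt, 10))       # 1.0
    print(chamfer_distance(pred, gt))  # 13.0
```

The brute-force nearest-neighbor search is quadratic in the number of points; for dense meshes a KD-tree would be the usual choice.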

For video inputs from the OakInk dataset:

The video is reconstructed frame by frame without post-processing. The objects are unseen during training.

More results: Project Page

If you find our work useful in your research, please consider citing:

@inproceedings{hu2024learning,
  title={Learning Explicit Contact for Implicit Reconstruction of Hand-held Objects from Monocular Images},
  author={Hu, Junxing and Zhang, Hongwen and Chen, Zerui and Li, Mengcheng and Wang, Yunlong and Liu, Yebin and Sun, Zhenan},
  booktitle={Proceedings of the AAAI Conference on Artificial Intelligence},
  year={2024}
}

Part of the code is borrowed from IHOI, Neural Body, and MeshGraphormer. Many thanks for their contributions.