- PyTorch

- gym

- matplotlib

The `AWAC` class only supports discrete action spaces (as of Dec 30th, 2020).

(Disclaimer) I developed the code in this repository based on the original AWAC paper. Hence, the implementation may differ from what the paper actually intended.

```python
class AWAC(nn.Module):

    def __init__(self,
                 critic: nn.Module,  # Q(s,a)
                 critic_target: nn.Module,
                 actor: nn.Module,  # pi(a|s)
                 lam: float = 0.3,  # Lagrangian parameter
                 tau: float = 5 * 1e-3,
                 gamma: float = 0.9,
                 num_action_samples: int = 1,
                 critic_lr: float = 3 * 1e-4,
                 actor_lr: float = 3 * 1e-4,
                 use_adv: bool = False):
```

- `critic`: State-action value function Q(s,a).
- `critic_target`: The target network of Q(s,a).
- `actor`: A discrete actor. Note that the output of `actor` is logits.
- `lam`: Lagrangian parameter of the AWAC actor loss. Assumed to be strictly positive.
- `tau`: The Polyak parameter for updating the target network.
- `gamma`: The discount factor of the target MDP.
- `num_action_samples`: Number of action samples used for updating the critic.
- `critic_lr`: Learning rate of the critic.
- `actor_lr`: Learning rate of the actor.
- `use_adv`: If `True`, use the advantage value for updating the actor. Otherwise, use Q(s,a).
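To make the roles of `lam`, `use_adv`, and `tau` more concrete, here is a minimal sketch of an advantage-weighted actor loss and a Polyak target update for a discrete actor. It is not the code from this repository. The function names `awac_actor_loss` and `soft_update` are hypothetical, and the sketch assumes that the critic maps a batch of states to one Q-value per action, that `actions` is a `LongTensor` of shape `[B, 1]`, that V(s) is the expectation of Q(s,a) under the current policy, and that the exponential weights are left unnormalized.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def awac_actor_loss(critic, actor, states, actions, lam=0.3, use_adv=False):
    """Weighted log-likelihood loss for a discrete actor (illustrative sketch)."""
    logits = actor(states)                     # [B, num_actions], actor outputs logits
    log_probs = F.log_softmax(logits, dim=-1)  # log pi(.|s)
    log_pi = log_probs.gather(1, actions)      # log pi(a|s) for the dataset actions, [B, 1]

    with torch.no_grad():
        # Assumption: the critic returns Q(s, a) for every action at once.
        q_all = critic(states)                 # [B, num_actions]
        score = q_all.gather(1, actions)       # Q(s, a), [B, 1]
        if use_adv:
            # Assumption: V(s) = sum_a pi(a|s) Q(s, a); advantage = Q - V.
            v = (log_probs.exp() * q_all).sum(dim=-1, keepdim=True)
            score = score - v
        weights = torch.exp(score / lam)       # lam must be strictly positive

    # Maximize the weighted log-likelihood, i.e. minimize its negative.
    return -(weights * log_pi).mean()


@torch.no_grad()
def soft_update(critic, critic_target, tau=5e-3):
    """Polyak averaging: target <- (1 - tau) * target + tau * online."""
    for p, p_targ in zip(critic.parameters(), critic_target.parameters()):
        p_targ.mul_(1.0 - tau).add_(tau * p)
```

A smaller `lam` sharpens the weights toward high-value actions, while a larger `lam` makes the update closer to plain behavior cloning.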

A running example of AWAC on the gym `cartpole-v1` environment is available in `AWAC-example.ipynb`.

In offline mode, AWAC was confirmed to learn a better policy than its behavior policy.

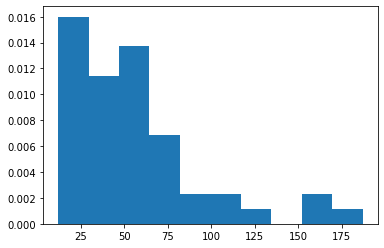

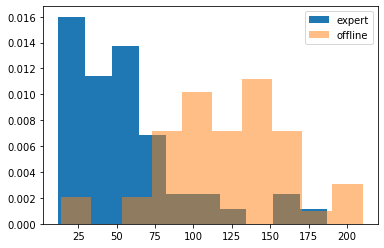

- The offline dataset was prepared with a "good enough" DQN: a well-trained DQN that acts randomly with a 40% chance.
- 50 independent `cartpole-v1` trials were run.
- The values on the x-axis show how long each episode was (longer is better).
- The values on the y-axis indicate the frequency of the episode lengths.
- After training for 8000 gradient steps with mini-batches of size 1024, `AWAC` was able to learn a better policy than that of the good enough DQN.
- The blue distribution shows the performance distribution of the good enough DQN.
- The orange distribution shows the performance distribution of the offline-trained `AWAC`.
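The behavior policy described above (a trained DQN that acts randomly 40% of the time) can be sketched as follows. This is only an illustration of the idea, not the data-collection code used here. The function name `noisy_greedy_action` and its arguments are hypothetical, and `q_values` is assumed to be a 1-D tensor holding the DQN's Q-values for a single state.

```python
import torch


def noisy_greedy_action(q_values: torch.Tensor, random_prob: float = 0.4) -> int:
    """Pick a uniformly random action with probability `random_prob`, else the greedy one."""
    if torch.rand(1).item() < random_prob:
        # Uniform over all actions, so the greedy action can still be drawn here.
        return int(torch.randint(q_values.numel(), (1,)).item())
    return int(q_values.argmax().item())
```

Because the random branch samples uniformly over all actions, the fraction of non-greedy actions in a two-action environment is about half of `random_prob`.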

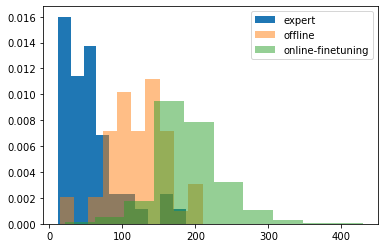

- After 600 episodes of additional online training, `AWAC` shows even better control performance (offline-trained `AWAC` + 600 episodes of online training).
- Moreover, `AWAC` did not show the "dip", a sudden performance drop right after online training begins.

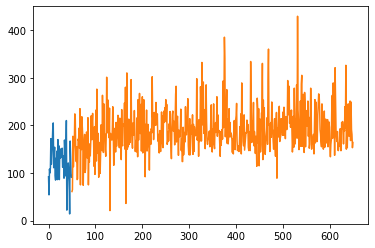

- The blue curve shows the performance of `AWAC` trained on the offline dataset only.
- The orange curve shows the performance of `AWAC` trained in online mode, i.e., the samples in the replay memory contain some amount of distributional shift.
- It was also confirmed that `AWAC` can still learn well even if the replay memory is cleared right before online training starts.
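The two online settings above differ only in whether the replay memory still holds the offline transitions when online training starts. A minimal sketch of such a memory is shown below. The `ReplayMemory` class and its methods are hypothetical and are not taken from this repository, and transitions are represented as plain tuples for illustration.

```python
import random
from collections import deque


class ReplayMemory:
    """Fixed-capacity FIFO buffer of transitions (illustrative sketch)."""

    def __init__(self, capacity: int):
        self.buffer = deque(maxlen=capacity)  # oldest transitions are dropped first

    def push(self, transition):
        self.buffer.append(transition)

    def sample(self, batch_size: int):
        # Uniform sampling without replacement from the stored transitions.
        return random.sample(list(self.buffer), batch_size)

    def clear(self):
        self.buffer.clear()

    def __len__(self):
        return len(self.buffer)
```

Calling `clear()` right before online training corresponds to the last setting: all mini-batches are then drawn from online transitions only.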