Please refer to INSTALL.md for installation instructions.

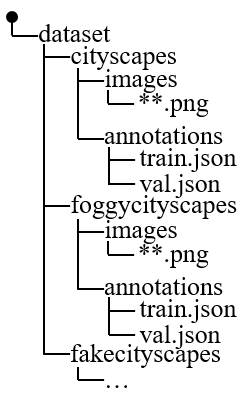

You can download the datasets from: Cityscapes and Foggy Cityscapes, BDD100K, sim10k

After downloading, convert the datasets to COCO format. The folder structure for our experiment should look like this:
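For the COCO conversion step mentioned above, the following is a minimal sketch rather than the script used in this repository. It assumes each image is described by a simple record with hypothetical keys (`file_name`, `width`, `height`, `boxes`), where boxes are given as corner coordinates plus a class name. The function name `to_coco` and the example file name are also illustrative. COCO stores boxes as `[x, y, width, height]`, so the corner coordinates are converted accordingly.

```python
import json


def to_coco(records, class_names):
    """Build a COCO-style detection dict from simple per-image records.

    records: list of dicts with hypothetical keys "file_name", "width",
        "height", and "boxes"; each box is
        (x_min, y_min, x_max, y_max, class_name).
    class_names: ordered list of category names; ids start at 1.
    """
    categories = [{"id": i + 1, "name": name} for i, name in enumerate(class_names)]
    name_to_id = {c["name"]: c["id"] for c in categories}

    images, annotations = [], []
    for image_id, rec in enumerate(records, start=1):
        images.append({
            "id": image_id,
            "file_name": rec["file_name"],
            "width": rec["width"],
            "height": rec["height"],
        })
        for x_min, y_min, x_max, y_max, name in rec["boxes"]:
            w, h = x_max - x_min, y_max - y_min
            annotations.append({
                "id": len(annotations) + 1,       # unique across the whole file
                "image_id": image_id,
                "category_id": name_to_id[name],
                "bbox": [x_min, y_min, w, h],     # COCO uses [x, y, width, height]
                "area": w * h,
                "iscrowd": 0,
            })

    return {"images": images, "annotations": annotations, "categories": categories}


if __name__ == "__main__":
    # Illustrative record only; real annotations come from the dataset files.
    example = [{
        "file_name": "example_0001.png",
        "width": 2048,
        "height": 1024,
        "boxes": [(100, 200, 300, 350, "car")],
    }]
    print(json.dumps(to_coco(example, ["car", "person"]), indent=2))
```

The class list and the way records are read from each dataset's native annotation files are left out here, since they differ per dataset.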

The source code used for the CycleGAN model is publicly available here.

The command below shows an example of training a model starting from a pre-trained model.

    python main.py ctdet --source_dataset fake_cityscapes --target_dataset foggy_cityscapes --exp_id grl_C2F --batch_size 32 --data_dir /root/dataset/ --load_model ./pre-trained-model/ctdet_coco_dla_2x.pth

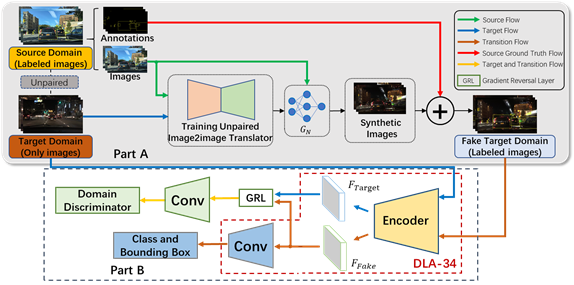

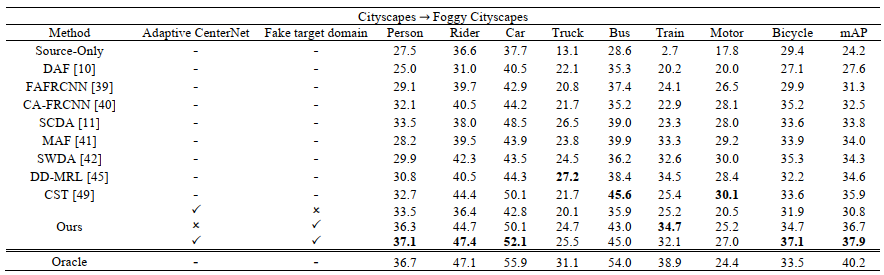

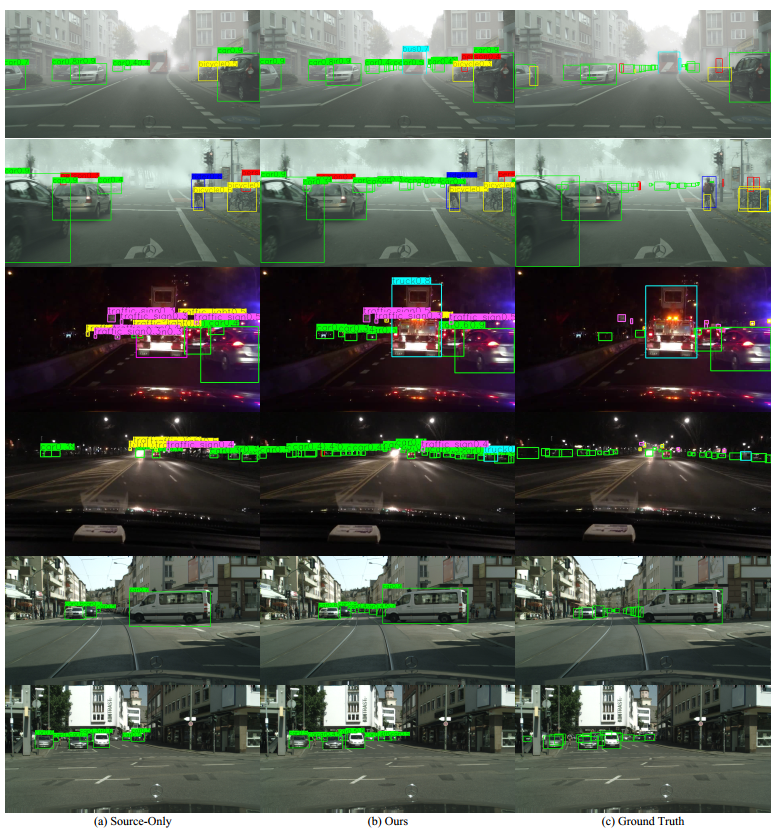

Our proposed method is evaluated in domain shift scenarios based on the driving datasets.

You can download the checkpoint and use it for prediction or evaluation.

    python test.py ctdet --exp_id checkout --source_dataset foggy_cityscapes --not_prefetch_test --data_dir /root/dataset/ --load_model ./sda_save.pth

The results show that our method outperforms state-of-the-art methods and is effective for object detection in domain shift scenarios.

The detection results on images can be viewed with the following command.

    python demo.py ctdet --demo ./images --load_model ./sda_save.pth