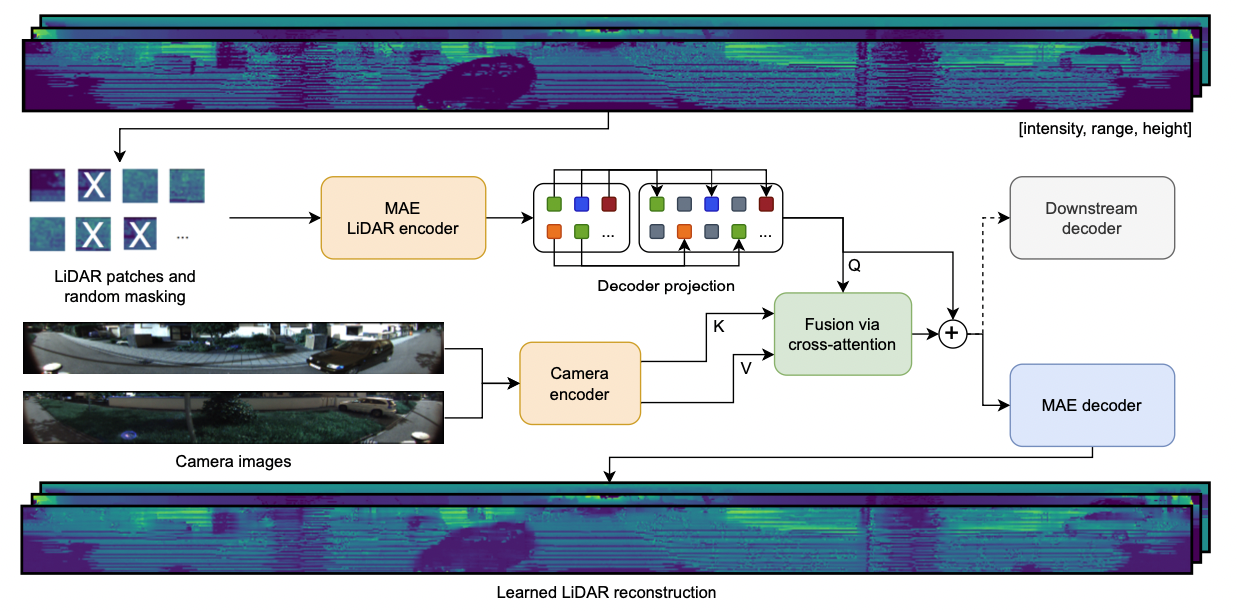

MaskedFusion360: Reconstruct LiDAR Data by Querying Camera Features

TL;DR: Self-supervised pre-training method to fuse LiDAR and camera features for self-driving applications.

Reconstruct LiDAR data by querying camera features. Spherical projections of LiDAR data are split into patches; randomly selected patches are then removed, and an MAE encoder is applied to the remaining unmasked patches. The encoder output tokens are fused with camera features via cross-attention. Finally, an MAE decoder reconstructs the spherical LiDAR projections.
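The data flow described above can be illustrated with a small, dependency-free sketch. This is not the repository's implementation: the function names (`patchify`, `split_visible_masked`, `cross_attention`), the toy 4x8 range image, the 75% mask ratio, and the random camera tokens are all assumptions made for illustration. The sketch also skips the learned parts (MAE encoder, linear projections, decoder) and uses raw patches as stand-ins for encoder tokens. It assumes the LiDAR tokens act as queries and the camera features as keys and values, as the title suggests.

```python
import math
import random


def patchify(image, patch_h, patch_w):
    """Split a 2D range image (list of rows) into flattened, non-overlapping patches."""
    height, width = len(image), len(image[0])
    assert height % patch_h == 0 and width % patch_w == 0
    patches = []
    for top in range(0, height, patch_h):
        for left in range(0, width, patch_w):
            patches.append([image[r][c]
                            for r in range(top, top + patch_h)
                            for c in range(left, left + patch_w)])
    return patches


def split_visible_masked(num_patches, mask_ratio, rng):
    """Randomly choose which patch indices are masked and which stay visible."""
    order = list(range(num_patches))
    rng.shuffle(order)
    num_masked = int(num_patches * mask_ratio)
    return sorted(order[num_masked:]), sorted(order[:num_masked])


def cross_attention(queries, keys, values):
    """Single-head scaled dot-product attention without learned projections."""
    scale = 1.0 / math.sqrt(len(keys[0]))
    dim = len(values[0])
    fused = []
    for q in queries:
        scores = [scale * sum(a * b for a, b in zip(q, k)) for k in keys]
        peak = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - peak) for s in scores]
        total = sum(weights)
        fused.append([sum(w / total * v[d] for w, v in zip(weights, values))
                      for d in range(dim)])
    return fused


rng = random.Random(0)

# Toy 4x8 "spherical projection" (hypothetical values, not real LiDAR data).
range_image = [[float(r * 8 + c) for c in range(8)] for r in range(4)]
patches = patchify(range_image, 2, 2)

# Hide 75% of the patches (assumed ratio); only the visible ones would be encoded.
visible, masked = split_visible_masked(len(patches), 0.75, rng)
lidar_tokens = [patches[i] for i in visible]  # stand-in for MAE encoder output

# Stand-in camera features: 5 random tokens with the same dimension as the patches.
camera_tokens = [[rng.random() for _ in range(4)] for _ in range(5)]

# LiDAR tokens query the camera tokens; a decoder would then reconstruct the masked patches.
fused_tokens = cross_attention(lidar_tokens, camera_tokens, camera_tokens)
```

In a real model, the queries, keys, and values would pass through learned projections, and the fused tokens would be handed to the MAE decoder together with mask tokens for the removed patches.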

Getting started

Coming soon...

Demo with data from our test vehicle Joy

Acknowledgement

The masked autoencoder (He et al., 2022) and CrossViT (Chen et al., 2021) implementations are based on lucidrains' vit_pytorch library.