This repository contains the source code and instructions for the project "Detection and pose estimation of unseen objects".

Note: The following section contains the setup instructions for a local installation, as described in [1]. The setup instructions for Google Colab are in the next section.

- Download source code and checkpoints

git clone https://github.com/gist-ailab/uoais.git

cd uoais

mkdir output

- Download checkpoints at GDrive

- Move R50_depth_mlc_occatmask_hom_concat and R50_rgbdconcat_mlc_occatmask_hom_concat to the output folder.

- Move rgbd_fg.pth to the foreground_segmentation folder.

- Set up a Python environment

conda create -n uoais python=3.8

conda activate uoais

pip install torch torchvision

pip install shapely torchfile opencv-python pyfastnoisesimd rapidfuzz termcolor

- Install detectron2

- Build custom AdelaiDet

python setup.py build develop
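After the installation steps above, it can help to confirm that the packages are visible in the active environment before running anything. The following is a minimal sketch, not part of the repository: the helper name `missing_packages` is hypothetical, and `adet` is assumed to be the import name of AdelaiDet.

```python
import importlib.util

# Import names of the packages installed in the steps above.
# Assumption: AdelaiDet is importable as "adet", opencv-python as "cv2".
REQUIRED = ["torch", "torchvision", "shapely", "cv2", "termcolor", "detectron2", "adet"]


def missing_packages(names=REQUIRED):
    """Return the package names that cannot be found in the active environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages()
    print("All packages found." if not missing else f"Missing: {missing}")
```

An empty result only shows that the packages can be located; it does not verify that the CUDA build matches the installed driver.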

If any of these packages are updated, refer to the compatibility list of TensorFlow, Python, CUDA, and cuDNN here and update the sections accordingly.

!pip uninstall --yes torch torchvision torchaudio torchtext

!pip install torch==1.8.1+cu102 torchvision==0.9.1+cu102 torchaudio==0.8.1 -f https://download.pytorch.org/whl/torch_stable.html

!pip install shapely torchfile opencv-python pyfastnoisesimd rapidfuzz termcolor

Installing detectron2:

!git clone https://github.com/facebookresearch/detectron2.git

!python -m pip install -e detectron2

!rm -rf build/ **/*.so

!python -m pip install 'git+https://github.com/facebookresearch/detectron2.git@5aeb252b194b93dc2879b4ac34bc51a31b5aee13'

Installing AdelaiDet:

!git clone https://github.com/aim-uofa/AdelaiDet.git

%cd AdelaiDet

!python setup.py build develop

%cd /content/uoais

!python setup.py build develop

- Download UOAIS-Sim.zip and OSD-Amodal-annotations.zip at GDrive

- Download OSD-0.2-depth.zip at OSD. [2]

- Extract the downloaded datasets and organize the folders as follows

uoais
├── output
└── datasets
    ├── OSD-0.20-depth # for evaluation on tabletop scenes
    │   └── amodal_annotation # OSD-amodal
    │   └── annotation
    │   └── disparity
    │   └── image_color
    │   └── occlusion_annotation # OSD-amodal
    └── UOAIS-Sim # for training
        └── annotations
        └── train
        └── val
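The folder layout above can be checked programmatically before training or evaluation. This is a minimal sketch under the assumption that it is run from (or pointed at) the uoais root; the helper name `missing_dirs` is hypothetical and not part of the repository.

```python
from pathlib import Path

# Directories taken from the folder layout above, relative to the uoais root.
EXPECTED_DIRS = [
    "output",
    "datasets/OSD-0.20-depth/amodal_annotation",
    "datasets/OSD-0.20-depth/annotation",
    "datasets/OSD-0.20-depth/disparity",
    "datasets/OSD-0.20-depth/image_color",
    "datasets/OSD-0.20-depth/occlusion_annotation",
    "datasets/UOAIS-Sim/annotations",
    "datasets/UOAIS-Sim/train",
    "datasets/UOAIS-Sim/val",
]


def missing_dirs(root="."):
    """Return the expected directories that do not exist below root."""
    root = Path(root)
    return [d for d in EXPECTED_DIRS if not (root / d).is_dir()]


if __name__ == "__main__":
    for d in missing_dirs():
        print(f"missing: {d}")
```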

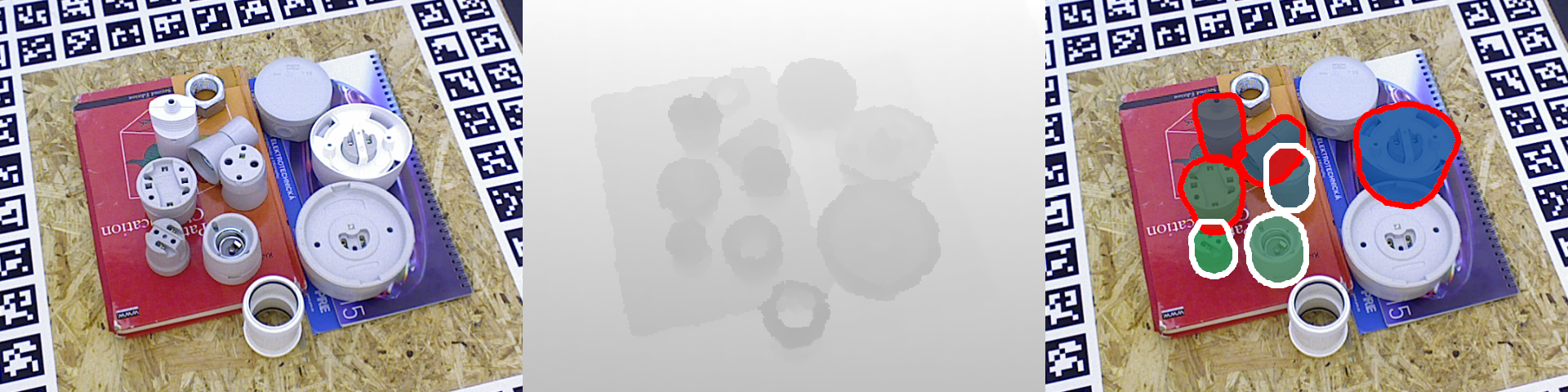

# UOAIS-Net (RGB-D) + CG-Net (foreground segmentation)

python tools/run_on_OSD.py --use-cgnet --dataset-path ./sample_data --config-file configs/R50_rgbdconcat_mlc_occatmask_hom_concat.yaml

# UOAIS-Net (depth) + CG-Net (foreground segmentation)

python tools/run_on_OSD.py --use-cgnet --dataset-path ./sample_data --config-file configs/R50_depth_mlc_occatmask_hom_concat.yaml

# UOAIS-Net (RGB-D)

python tools/run_on_OSD.py --dataset-path ./sample_data --config-file configs/R50_rgbdconcat_mlc_occatmask_hom_concat.yaml

# UOAIS-Net (depth)

python tools/run_on_OSD.py --dataset-path ./sample_data --config-file configs/R50_depth_mlc_occatmask_hom_concat.yaml

The BOP T-Less dataset can be obtained from here

%env SRC=https://bop.felk.cvut.cz/media/data/bop_datasets

!wget --no-check-certificate "$SRC/tless_train_pbr.zip" # PBR training images (rendered with BlenderProc4BOP).

!unzip tless_train_pbr.zip -d tless
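Once the archive is extracted, each BOP scene folder contains a scene_gt.json file that lists the object instances per image. The sketch below counts how often each object ID occurs, which is useful when only a subset of objects is of interest. It works on an in-memory sample; the sample values are made up, and the helper name `count_objects` is hypothetical.

```python
import json
from collections import Counter

# Made-up sample in the BOP scene_gt.json layout:
# image id -> list of instances with rotation, translation (mm) and object id.
SAMPLE_SCENE_GT = json.dumps({
    "0": [
        {"cam_R_m2c": [1, 0, 0, 0, 1, 0, 0, 0, 1], "cam_t_m2c": [0, 0, 500], "obj_id": 1},
        {"cam_R_m2c": [1, 0, 0, 0, 1, 0, 0, 0, 1], "cam_t_m2c": [50, 0, 600], "obj_id": 5},
    ],
    "1": [
        {"cam_R_m2c": [1, 0, 0, 0, 1, 0, 0, 0, 1], "cam_t_m2c": [0, 20, 550], "obj_id": 1},
    ],
})


def count_objects(scene_gt_text):
    """Count the instances of each obj_id across all images of one scene."""
    scene_gt = json.loads(scene_gt_text)
    return Counter(
        inst["obj_id"] for instances in scene_gt.values() for inst in instances
    )


counts = count_objects(SAMPLE_SCENE_GT)
print(dict(counts))
```

For a real scene, the text would be read from the scene folder's scene_gt.json instead of the sample string.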

Generate occlusion masks:

python scripts/toOccludedMask.py
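The script itself is not reproduced here. As an illustration of the idea, an occlusion mask can be derived from the two masks that BOP provides per instance: the full (amodal) mask and the visible mask. The sketch below assumes this is the intended operation and uses plain nested lists to stay dependency-free; a real script would more likely operate on image arrays.

```python
def occluded_mask(amodal, visible):
    """Mark pixels that belong to the full object but are hidden in the image."""
    return [
        [1 if a and not v else 0 for a, v in zip(a_row, v_row)]
        for a_row, v_row in zip(amodal, visible)
    ]


# Toy 2x4 masks: the object covers columns 1-3, part of it is hidden.
amodal = [[0, 1, 1, 1],
          [0, 1, 1, 1]]
visible = [[0, 1, 1, 0],
           [0, 1, 0, 0]]

occluded = occluded_mask(amodal, visible)
print(occluded)
```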

Restructure files to the UOAIS-Sim format:

python scripts/moveFull.py

Generate appropriate annotations for UOAIS with:

python scripts/generate_uoais_annotation.py
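Annotations for amodal segmentation typically carry a bounding box and some measure of how much of an object is hidden. The following sketch shows how both could be computed from an amodal and a visible mask. It is an illustration only: the record keys in the example are hypothetical and are not claimed to match the output of the script above.

```python
def bbox_xywh(mask):
    """COCO-style [x, y, width, height] box around the non-zero pixels, or None."""
    ys = [y for y, row in enumerate(mask) if any(row)]
    xs = [x for row in mask for x, v in enumerate(row) if v]
    if not ys:
        return None
    return [min(xs), min(ys), max(xs) - min(xs) + 1, max(ys) - min(ys) + 1]


def occlusion_rate(amodal, visible):
    """Fraction of the amodal mask that is not visible."""
    total = sum(1 for row in amodal for a in row if a)
    hidden = sum(
        1
        for a_row, v_row in zip(amodal, visible)
        for a, v in zip(a_row, v_row)
        if a and not v
    )
    return hidden / total if total else 0.0


amodal = [[0, 1, 1, 1],
          [0, 1, 1, 1]]
visible = [[0, 1, 1, 0],
           [0, 1, 0, 0]]

# Hypothetical record layout, for illustration only.
record = {
    "bbox": bbox_xywh(amodal),
    "visible_bbox": bbox_xywh(visible),
    "occluded_rate": occlusion_rate(amodal, visible),
}
print(record)
```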

Train UOAIS-Net with the new dataset (RGB-D) and config:

Replace the config file at "uoais/configs/R50_rgbdconcat_mlc_occatmask_hom_concat.yaml" with the updated file from /configs, then run:

python train_net.py --config-file configs/R50_rgbdconcat_mlc_occatmask_hom_concat.yaml

Place an arbitrary scene in /sample_data, then run:

python tools/run_on_Tless.py --use-cgnet --dataset-path ./sample_data --config-file configs/R50_rgbdconcat_mlc_occatmask_hom_concat.yaml

This project used the BOP T-Less dataset, which can be obtained from here. Other BOP datasets are compatible as well.

The models folder contains the investigated subgroup of elements. The CAD models comprise the base SOLIDWORKS parts as well as the respective .PLY files.

Note: Do not use ASCII encoding.

File variations with a different reference frame can be generated manually in Blender; renders can be generated with BlenderProc.

Object detection and segmentation output is based on Mask R-CNN [3]. The required COCO annotations of the dataset can be converted with the BOP toolkit. The COCO images used can be downloaded from here.

Sample detection output masks for both the full and the reduced BOP T-Less test set are provided in MASKS.

The following script can be used to re-annotate existing segmentations to an arbitrary object subset. If the reference frame varies for objects that are to be approximated by other or more general objects, this script should be embedded in the evaluation section. Setting makeGTruth=True creates ground truth masks.

python reAnnotate.py
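To illustrate what re-annotating to an object subset means, the sketch below filters and relabels COCO-style annotations with a mapping from original to new category IDs. It is a simplified stand-in, not the logic of reAnnotate.py: the function name `reannotate`, the mapping `id_map`, and the sample values are hypothetical.

```python
def reannotate(annotations, id_map):
    """Keep annotations whose category_id is in id_map and relabel them.

    id_map maps original category IDs to the IDs of the reduced subset;
    annotations of categories that are not in the map are dropped.
    """
    result = []
    for ann in annotations:
        new_id = id_map.get(ann["category_id"])
        if new_id is not None:
            result.append({**ann, "category_id": new_id})
    return result


# Made-up example: objects 1 and 2 are merged into one general object 1,
# object 5 keeps its own class, everything else is dropped.
annotations = [
    {"id": 10, "image_id": 0, "category_id": 1},
    {"id": 11, "image_id": 0, "category_id": 2},
    {"id": 12, "image_id": 0, "category_id": 5},
    {"id": 13, "image_id": 1, "category_id": 7},
]
id_map = {1: 1, 2: 1, 5: 2}

subset = reannotate(annotations, id_map)
print(subset)
```

The input list is left unmodified, so the original annotations remain available for comparison.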

The following content is based on [2].

Requirements:

sudo apt-get install libglfw3-dev libglfw3

sudo apt-get install libassimp-dev

pip install --user --pre --upgrade PyOpenGL PyOpenGL_accelerate

pip install --user cython

pip install --user cyglfw3

pip install --user pyassimp==3.3

pip install --user imgaug

pip install --user progressbar

Detailed instructions can be found here.

!pip install tensorflow==2.6.0

!pip install opencv-python

!pip install --user --pre --upgrade PyOpenGL PyOpenGL_accelerate

!pip install --user cython

!pip install --user cyglfw3

!pip install --user pyassimp==3.3

!pip install --user imgaug

!pip install --user progressbar

# Include if headless rendering is desired (currently limited Colab support).

%env PYOPENGL_PLATFORM=egl

!pip install ez_setup

!pip install unroll

!easy_install -U setuptools

!git clone https://github.com/DLR-RM/AugmentedAutoencoder.git

%cd AugmentedAutoencoder

!pip install --user .

%env AE_WORKSPACE_PATH=/path/to/autoencoder_ws

!mkdir -p "$AE_WORKSPACE_PATH"

%cd /path/to/autoencoder_ws

!ae_init_workspace

- Insert the 3D models

- Insert background images e.g. PascalVOC

- Replace config in $AE_WORKSPACE_PATH/cfg/exp_group/my_mpencoder.cfg with configs/my_mpencoder.cfg and modify if training setup differs.

- Update paths of 3D models and background images if applicable in config file.

- Start training with

ae_train exp_group/my_mpencoder
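Updating the model and background paths in the config file can also be scripted. The sketch below edits an INI-style config in memory with Python's configparser. The section name `Paths` and the keys `MODEL_PATH` and `BACKGROUND_IMAGES_GLOB` are assumptions about the config layout and should be checked against the actual my_mpencoder.cfg; the sample paths are placeholders.

```python
import configparser
import io

# Placeholder config text; section and key names are assumptions.
SAMPLE_CFG = """\
[Paths]
MODEL_PATH: /old/path/model.ply
BACKGROUND_IMAGES_GLOB: /old/path/JPEGImages/*.jpg
"""


def update_paths(cfg_text, model_path, background_glob):
    """Return cfg_text with the model and background paths replaced."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep upper-case keys instead of lower-casing them
    parser.read_string(cfg_text)
    parser["Paths"]["MODEL_PATH"] = model_path
    parser["Paths"]["BACKGROUND_IMAGES_GLOB"] = background_glob
    buffer = io.StringIO()
    parser.write(buffer)
    return buffer.getvalue()


updated = update_paths(
    SAMPLE_CFG,
    "/path/to/models/obj_01.ply",
    "/path/to/VOC/JPEGImages/*.jpg",
)
print(updated)
```

Note that configparser rewrites the file without comments, so manual editing is preferable when the config contains explanatory comments.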

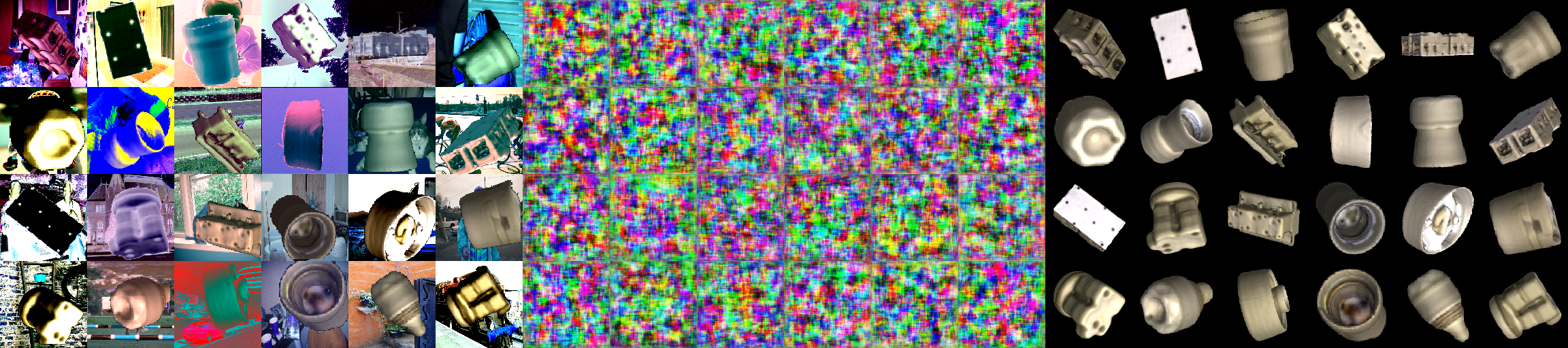

The generated training images can be viewed from the directory $AE_WORKSPACE_PATH/experiments/exp_group/my_mpencoder/train_figures

A sample training image, where the middle part of the image visualises the reconstruction:

The embeddings / codebooks are created with:

ae_embed_multi exp_group/my_mpencoder --model_path '.ply file path'
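A codebook of this kind stores one latent code per rendered object view. At test time, the encoder output for a detected crop is compared against all codebook entries, and the most similar entry indicates the estimated rotation. The sketch below shows this lookup with cosine similarity on toy vectors; it is a simplified illustration in plain Python, with hypothetical function names and made-up values, not the library's implementation.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def nearest_entry(code, codebook):
    """Return (index, similarity) of the codebook entry most similar to code."""
    sims = [cosine_similarity(code, entry) for entry in codebook]
    best = max(range(len(sims)), key=sims.__getitem__)
    return best, sims[best]


# Toy 2D codebook; real latent codes have many more dimensions.
codebook = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
index, similarity = nearest_entry([0.1, 0.9], codebook)
print(index, round(similarity, 3))
```

In practice each codebook index is associated with the rotation used to render that view, so the index translates directly into a rotation estimate.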

The BOP Toolkit is required.

Move the trained encoder to $AE_WORKSPACE_PATH/experiments/exp_group/NAMEOFExp_Group

Replace the config file in auto_pose/ae/cfg_m3vision/m3_config_lmo_mp.cfg with configs/m3_config_tless.cfg.

Run the evaluation with:

python auto_pose/m3_interface/compute_bop_results_m3.py auto_pose/ae/cfg_m3vision/m3_config_tless.cfg \
    --eval_name test \
    --dataset_name=lmo \
    --datasets_path=/path/to/bop/datasets \
    --result_folder /folder/to/results \
    -vis
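Pose estimates for BOP evaluation are stored as CSV rows with the fields scene_id, im_id, obj_id, score, R, t, and time, where R holds the nine rotation matrix values row by row and t the translation in millimetres. The sketch below formats one such row by hand to show the layout. The helper name `bop_result_row` is hypothetical; in practice the BOP Toolkit writes these files.

```python
def bop_result_row(scene_id, im_id, obj_id, score, R, t, time=-1.0):
    """Format one pose estimate as a BOP results CSV row.

    R is a 3x3 rotation matrix (nested lists), t a 3-vector in millimetres,
    time the run time in seconds (-1 if not measured).
    """
    r_str = " ".join(str(value) for row in R for value in row)
    t_str = " ".join(str(value) for value in t)
    return f"{scene_id},{im_id},{obj_id},{score},{r_str},{t_str},{time}"


identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
row = bop_result_row(1, 2, 3, 0.9, identity, [0, 0, 500])
print("scene_id,im_id,obj_id,score,R,t,time")
print(row)
```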

[1] S. Back et al., “Unseen Object Amodal Instance Segmentation via Hierarchical Occlusion Modeling,” in 2022 International Conference on Robotics and Automation (ICRA), May 2022, pp. 5085–5092. doi: 10.1109/ICRA46639.2022.9811646.

[2] M. Sundermeyer et al., “Multi-path Learning for Object Pose Estimation Across Domains,” Aug. 2019, doi: 10.48550/arXiv.1908.00151.

[3] K. He, G. Gkioxari, P. Dollár, and R. Girshick, “Mask R-CNN,” Mar. 2017, doi: 10.48550/arXiv.1703.06870.