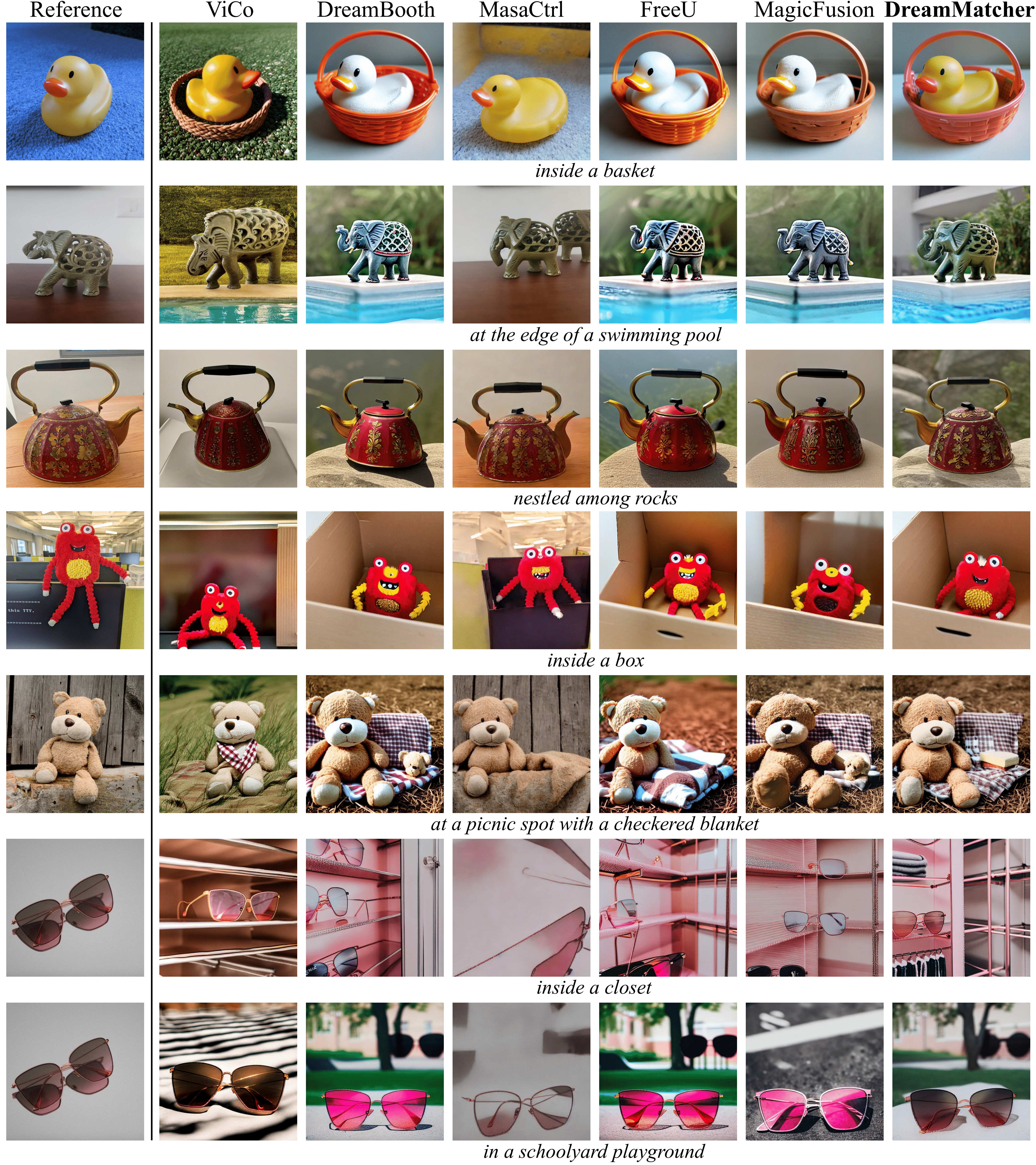

DreamMatcher: Appearance Matching Self-Attention for Semantically-Consistent Text-to-Image Personalization (CVPR'24)

This is the official implementation of the paper "DreamMatcher: Appearance Matching Self-Attention for Semantically-Consistent Text-to-Image Personalization" by Jisu Nam, Heesu Kim, DongJae Lee, Siyoon Jin, Seungryong Kim†, and Seunggyu Chang†.

For more information, check out the [project page].

git clone https://github.com/KU-CVLAB/DreamMatcher.git

cd DreamMatcher

conda env create -f environment.yml

conda activate dreammatcher

pip install -r requirements.txt

cd diffusers

pip install -e .

You can run DreamMatcher with any off-the-shelf personalized model. We provide pre-trained personalized models for Textual Inversion, DreamBooth, and CustomDiffusion, trained on the ViCo dataset. You can find the pre-trained weights on Link.

For a fair and unbiased evaluation, we used the ViCo image and prompt dataset gathered from Textual Inversion, DreamBooth, and CustomDiffusion. The dataset comprises 16 concepts, including 5 live objects and 11 non-live objects. In the ./inputs folder, you can see 4-12 images of each of the 16 concepts.

We provide the ViCo prompt dataset for live objects in ./inputs/prompts_live_objects.txt and for non-live objects in ./inputs/prompts_nonlive_objects.txt.

Additionally, for evaluation in more complex scenarios, we propose the challenging prompt dataset, which is available in ./inputs/prompts_live_objects_challenging.txt and ./inputs/prompts_nonlive_objects_challenging.txt.
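The four prompt files above can be selected programmatically. The snippet below is a minimal sketch, not part of the repository: the helper name `prompt_file` and the `kind` labels (`"live"`, `"nonlive"`) are hypothetical, and only the file names are taken from this README.

```python
from pathlib import Path

# File names copied from this README; the lookup table and helper are
# hypothetical conveniences, not part of the DreamMatcher code base.
PROMPT_FILES = {
    ("live", "normal"): "prompts_live_objects.txt",
    ("live", "challenging"): "prompts_live_objects_challenging.txt",
    ("nonlive", "normal"): "prompts_nonlive_objects.txt",
    ("nonlive", "challenging"): "prompts_nonlive_objects_challenging.txt",
}


def prompt_file(kind: str, mode: str, inputs_dir: str = "./inputs") -> Path:
    """Return the prompt file for a concept kind and evaluation mode."""
    try:
        name = PROMPT_FILES[(kind, mode)]
    except KeyError:
        raise ValueError(f"unknown combination: kind={kind!r}, mode={mode!r}") from None
    return Path(inputs_dir) / name


if __name__ == "__main__":
    print(prompt_file("live", "challenging"))
```

The `mode` values mirror the `--mode` options used by the scripts in this README, so the same string can be passed to both.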

To run DreamMatcher, select a personalized model from "ti", "dreambooth", or "custom_diffusion" as the baseline. Below, we provide example code using "dreambooth" as the baseline, with 8 samples. Output images for both the baseline and DreamMatcher will be saved in the result directory.

Run DreamMatcher on the ViCo prompt dataset:

python run_dreammatcher.py --models "dreambooth" --result_dir "./results/dreambooth/test" --num_samples 8 --num_device 0 --mode "normal"

Run DreamMatcher on the proposed challenging prompt dataset:

python run_dreammatcher.py --models "dreambooth" --result_dir "./results/dreambooth/test" --num_samples 8 --num_device 0 --mode "challenging"
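To run every baseline in both modes, the commands above can be generated in a loop. This is a sketch under assumptions: it uses only the flags shown in this README, and the per-baseline, per-mode result directory layout (`./results/<model>/<mode>`) is a hypothetical choice made here so that runs do not share an output folder.

```python
import itertools
import shlex

# Baseline and mode names as listed in this README.
BASELINES = ("ti", "dreambooth", "custom_diffusion")
MODES = ("normal", "challenging")


def run_command(model, mode, num_samples=8, device=0, root="./results"):
    """Build the argument list for one run_dreammatcher.py invocation."""
    # Hypothetical layout: one result directory per (model, mode) pair.
    result_dir = f"{root}/{model}/{mode}"
    return [
        "python", "run_dreammatcher.py",
        "--models", model,
        "--result_dir", result_dir,
        "--num_samples", str(num_samples),
        "--num_device", str(device),
        "--mode", mode,
    ]


def sweep_commands():
    """Return one command per (baseline, mode) combination."""
    return [run_command(m, mode) for m, mode in itertools.product(BASELINES, MODES)]


if __name__ == "__main__":
    # Print the commands instead of executing them, so they can be reviewed first.
    for cmd in sweep_commands():
        print(shlex.join(cmd))
```

Pass each list to `subprocess.run(cmd, check=True)` from the repository root to execute the runs one after another.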

To evaluate DreamMatcher, specify the result directory containing the result images from both the baseline and DreamMatcher. The I_CLIP, I_DINO, and T_CLIP metrics will be calculated.
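For intuition, similarity metrics of this kind are commonly computed as the average cosine similarity between embeddings: image-to-image for the CLIP and DINO image metrics, and prompt-to-image for the CLIP text metric. The sketch below illustrates that general idea on plain vectors. It is an assumption about the usual definition, not the code in `evaluate.py`, and the function names are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length embedding vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("zero-norm vector has no direction")
    return dot / (norm_a * norm_b)


def mean_pairwise_similarity(generated, references):
    """Average cosine similarity over all (generated, reference) pairs."""
    scores = [cosine_similarity(g, r) for g in generated for r in references]
    if not scores:
        raise ValueError("need at least one generated and one reference embedding")
    return sum(scores) / len(scores)


if __name__ == "__main__":
    # Toy 2-D "embeddings"; real embeddings would come from CLIP or DINO encoders.
    generated = [[1.0, 0.0]]
    references = [[1.0, 0.0], [0.0, 1.0]]
    print(mean_pairwise_similarity(generated, references))
```

With real encoders, `generated` would hold embeddings of the output images and `references` embeddings of the concept images (or of the prompt text for the text metric).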

Evaluation on the ViCo prompt dataset:

python evaluate.py --result_dir "./results/dreambooth/test" --mode "normal"

Evaluation on the proposed challenging prompt dataset:

python evaluate.py --result_dir "./results/dreambooth/test" --mode "challenging"

Collect evaluation results for every concept in the result directory:

python collect_results.py --result_dir "./results/dreambooth/test"
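The evaluation and collection steps can be chained so that results are collected only if evaluation succeeds. This is a minimal sketch using only the flags shown above; the helper names and the `dry_run` switch are hypothetical additions for illustration.

```python
import subprocess


def evaluation_commands(result_dir, mode):
    """Return the evaluate and collect commands, in the order they should run."""
    return [
        ["python", "evaluate.py", "--result_dir", result_dir, "--mode", mode],
        ["python", "collect_results.py", "--result_dir", result_dir],
    ]


def run_evaluation(result_dir, mode, dry_run=True):
    """Run (or, with dry_run=True, only print) the evaluation steps in order."""
    for cmd in evaluation_commands(result_dir, mode):
        if dry_run:
            print(" ".join(cmd))
        else:
            # check=True stops the chain if a step exits with a non-zero status.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run_evaluation("./results/dreambooth/test", "normal")
```

Set `dry_run=False` and call it from the repository root once the generated images are in the result directory.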

We have mainly borrowed code from the public project MasaCtrl. A huge thank you to the authors for their valuable contributions.

If you find this research useful, please consider citing:

@misc{nam2024dreammatcher,
  title={DreamMatcher: Appearance Matching Self-Attention for Semantically-Consistent Text-to-Image Personalization},
  author={Jisu Nam and Heesu Kim and DongJae Lee and Siyoon Jin and Seungryong Kim and Seunggyu Chang},
  year={2024},
  eprint={2402.09812},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}