This repository contains the implementation of the paper:

GeoDiffusion: Text-Prompted Geometric Control for Object Detection Data Generation

Kai Chen, Enze Xie, Zhe Chen, Yibo Wang, Lanqing Hong, Zhenguo Li, Dit-Yan Yeung

International Conference on Learning Representations (ICLR), 2024.

Clone this repo and create the GeoDiffusion environment with conda. We tested the code with python==3.7.16, pytorch==1.12.1, and cuda==10.2 on Tesla V100 GPU servers. Other versions may work as well.

Initialize the conda environment:

    git clone https://github.com/KaiChen1998/GeoDiffusion.git
    conda create -n geodiffusion python=3.7 -y
    conda activate geodiffusion

Install the required packages:

    cd GeoDiffusion
    # when running training
    pip install -r requirements/train.txt
    # only when running inference with DPM-Solver++
    pip install -r requirements/dev.txt
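After installation, it can help to confirm which versions are actually active in the environment. The following is a minimal sketch, not part of the repository: the helper name `describe_environment` is hypothetical, and it only reports versions so they can be compared by eye against the tested configuration (python 3.7.16, pytorch 1.12.1, cuda 10.2).

```python
import platform


def describe_environment():
    """Collect the interpreter version and, if installed, the PyTorch/CUDA versions."""
    info = {
        "python": platform.python_version(),
        "torch": None,
        "cuda": None,
        "gpu_available": False,
    }
    try:
        import torch  # only importable once the requirements are installed
    except ImportError:
        return info
    info["torch"] = torch.__version__
    info["cuda"] = torch.version.cuda  # None for CPU-only builds
    info["gpu_available"] = torch.cuda.is_available()
    return info


if __name__ == "__main__":
    for name, value in describe_environment().items():
        print(f"{name}: {value}")
```

If `torch` is reported as `None`, the requirements have most likely not been installed into the active conda environment.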

| Dataset | Image Resolution | Grid Size | Download |
|---|---|---|---|
| nuImages | 256x256 | 256x256 | HF Hub |
| nuImages | 512x512 | 512x512 | HF Hub |
| nuImages_time_weather | 512x512 | 512x512 | HF Hub |
| COCO-Stuff | 256x256 | 256x256 | HF Hub |
| COCO-Stuff | 512x512 | 256x256 | HF Hub |

Download the pre-trained models and put them under the root directory. Run the following command to generate detection data with GeoDiffusion. For simplicity, the layout definition is embedded directly in run_layout_to_image.py. Check here for the detailed definition.

    python run_layout_to_image.py $CKPT_PATH --output_dir ./results/

We primarily use the nuImages and COCO-Stuff datasets to train GeoDiffusion. Download the image files from the official websites. For better training performance, we follow mmdetection3d to convert the nuImages dataset into COCO format (you can also download our converted annotations via HuggingFace); the converted annotation file for COCO-Stuff can likewise be downloaded via HuggingFace. After all files are downloaded, the data structure should be as follows.

    ├── data
    │   ├── coco
    │   │   │── coco_stuff_annotations
    │   │   │   │── train
    │   │   │   │   │── instances_stuff_train2017.json
    │   │   │   │── val
    │   │   │   │   │── instances_stuff_val2017.json
    │   │   │── train2017
    │   │   │── val2017
    │   ├── nuimages
    │   │   │── annotation
    │   │   │   │── train
    │   │   │   │   │── nuimages_v1.0-train.json
    │   │   │   │── val
    │   │   │   │   │── nuimages_v1.0-val.json
    │   │   │── samples
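Before launching training, the layout above can be checked with a few lines of Python. This is a minimal sketch rather than a tool shipped with the repository: the name `find_missing_paths` is hypothetical, and the path list is copied from the tree above, assuming `data` sits directly under the repository root.

```python
from pathlib import Path

# Paths copied from the directory tree above, relative to the repository root.
EXPECTED_PATHS = [
    "data/coco/coco_stuff_annotations/train/instances_stuff_train2017.json",
    "data/coco/coco_stuff_annotations/val/instances_stuff_val2017.json",
    "data/coco/train2017",
    "data/coco/val2017",
    "data/nuimages/annotation/train/nuimages_v1.0-train.json",
    "data/nuimages/annotation/val/nuimages_v1.0-val.json",
    "data/nuimages/samples",
]


def find_missing_paths(root="."):
    """Return the expected files and directories that do not exist under `root`."""
    root = Path(root)
    return [rel for rel in EXPECTED_PATHS if not (root / rel).exists()]


if __name__ == "__main__":
    missing = find_missing_paths(".")
    if missing:
        print("Missing entries:")
        for rel in missing:
            print(f"  {rel}")
    else:
        print("All expected dataset paths are present.")
```

Run it from the repository root. If only one of the two datasets is used for training, the entries for the other dataset can be ignored.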

We use Accelerate to launch efficient distributed training (with 8 x V100 GPUs by default). We encourage readers to check the official documentation for customized training settings. The default training parameters are provided in this script; to change the training dataset, change the dataset_config_name argument directly.

    # COCO-Stuff
    bash tools/dist_train.sh \
        --dataset_config_name configs/data/coco_stuff_256x256.py \
        --output_dir work_dirs/geodiffusion_coco_stuff

    # nuImages
    bash tools/dist_train.sh \
        --dataset_config_name configs/data/nuimage_256x256.py \
        --output_dir work_dirs/geodiffusion_nuimages

We also support further fine-tuning of a pre-trained GeoDiffusion checkpoint on downstream tasks to support more geometric controls in the Textual Inversion manner, training only the newly added tokens. We encourage readers to check here and here for more details.

    bash tools/dist_train.sh \
        --dataset_config_name configs/data/coco_stuff_256x256.py \
        --train_text_encoder_params added_embedding \
        --output_dir work_dirs/geodiffusion_coco_stuff_continue

Unlike the more user-friendly inference demo provided here, this section provides the scripts to run batch inference over an entire dataset. Note that the inference settings might differ between checkpoints. We encourage readers to check the generation_config.json file under each pre-trained checkpoint in the Model Zoo for more details.
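To inspect the inference settings of a downloaded checkpoint, the generation_config.json file can be printed with a short script. This is only a sketch: the helper name `load_generation_config` is hypothetical, and it assumes the file sits at the top level of the checkpoint directory and contains a JSON object. No particular keys are assumed.

```python
import json
import sys
from pathlib import Path


def load_generation_config(ckpt_dir):
    """Read generation_config.json from a checkpoint directory (location is an assumption)."""
    config_path = Path(ckpt_dir) / "generation_config.json"
    with config_path.open("r", encoding="utf-8") as f:
        return json.load(f)


if __name__ == "__main__":
    # Usage: python show_generation_config.py PATH_TO_CKPT
    if len(sys.argv) == 2:
        for key, value in load_generation_config(sys.argv[1]).items():
            print(f"{key}: {value}")
```

The script name `show_generation_config.py` in the usage comment is likewise only a placeholder.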

# COCO-Stuff

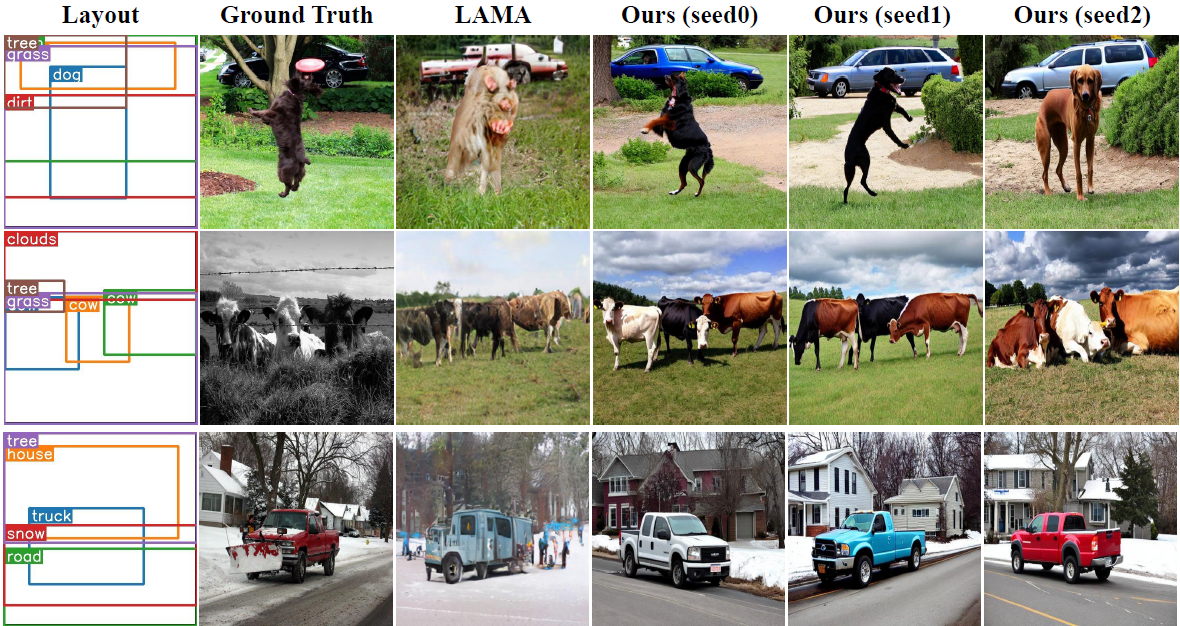

# We encourage readers to check https://github.com/ZejianLi/LAMA?tab=readme-ov-file#testing

# to report quantitative results on COCO-Stuff L2I benchmark.

bash tools/dist_test.sh PATH_TO_CKPT \

--dataset_config_name configs/data/coco_stuff_256x256.py

# nuImages

bash tools/dist_test.sh PATH_TO_CKPT \

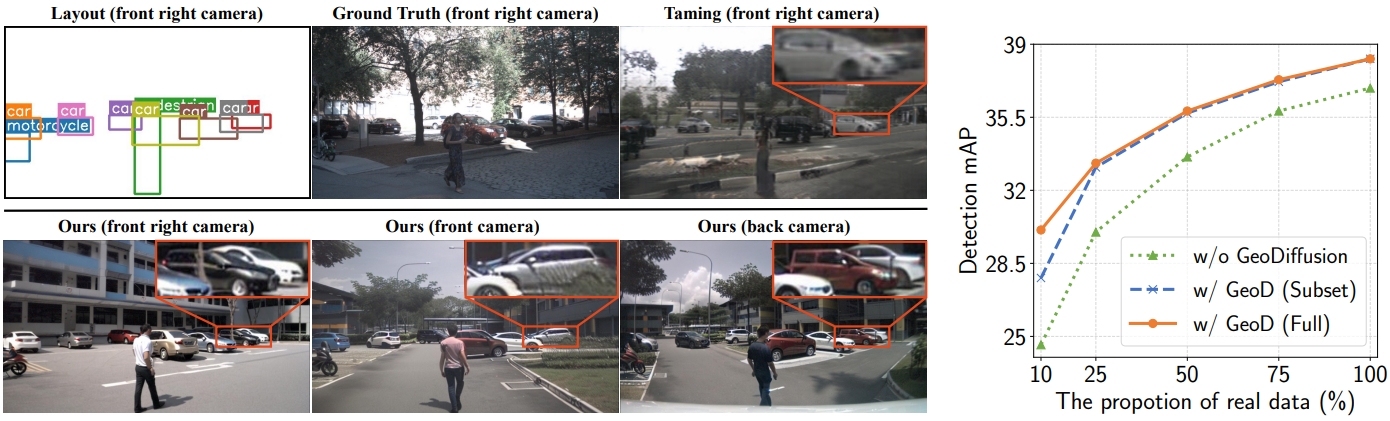

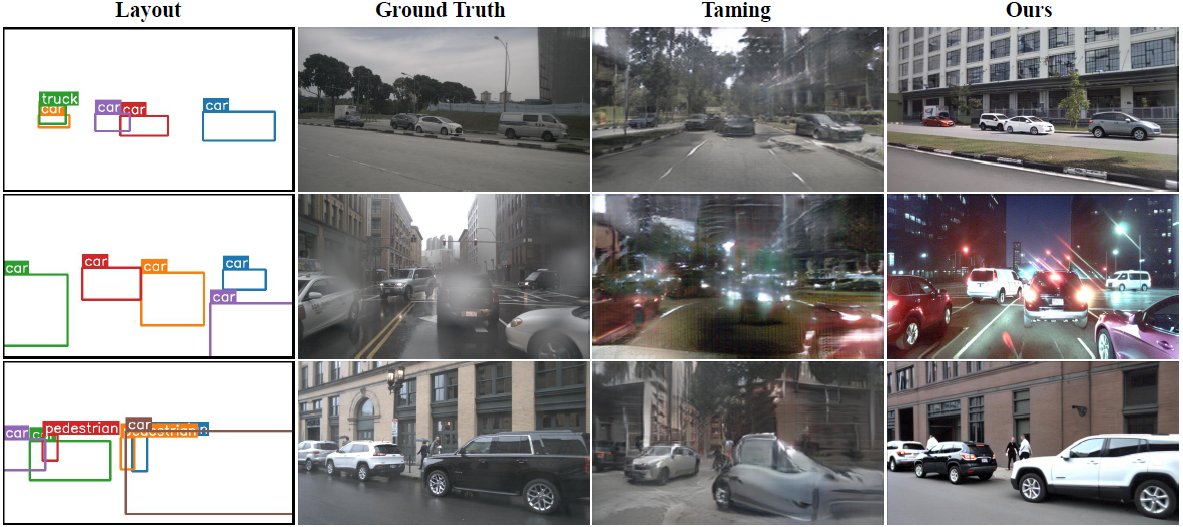

--dataset_config_name configs/data/nuimage_256x256.pyMore results can be found in the main paper.

We aim to construct a controllable and flexible pipeline for perception data corner case generation and visual world modeling! Check our latest works:

- GeoDiffusion: text-prompted geometric controls for 2D object detection.

- MagicDrive: multi-view street scene generation for 3D object detection.

- TrackDiffusion: multi-object video generation for MOT tracking.

- DetDiffusion: customized corner case generation.

- Geom-Erasing: geometric controls for implicit concept removal.

    @article{chen2023integrating,
      author  = {Chen, Kai and Xie, Enze and Chen, Zhe and Hong, Lanqing and Li, Zhenguo and Yeung, Dit-Yan},
      title   = {Integrating Geometric Control into Text-to-Image Diffusion Models for High-Quality Detection Data Generation via Text Prompt},
      journal = {arXiv: 2306.04607},
      year    = {2023},
    }

We adopt the following open-source projects:

- diffusers: basic codebase to train Stable Diffusion models.

- mmdetection: dataloader to handle images with various geometric conditions.

- mmdetection3d & LAMA: data pre-processing of the training datasets.