Some older versions may work, but we used the following:

- CUDA 10.1 (the usable batch size depends on GPU memory)

- Python 3.6.9

- PyTorch 1.6.0

- progress 1.5

- TensorBoard
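To compare a local environment against the versions above, a small check script can help. The sketch below is not part of the repository. It assumes the PyPI distribution names `torch`, `progress` and `tensorboard`, and it uses `importlib.metadata`, which only exists on Python 3.8+, so on the Python 3.6.9 listed above it reports package versions as unknown (`None`).

```python
import sys

try:
    from importlib import metadata  # Python 3.8+
except ImportError:  # Python 3.6/3.7 have no importlib.metadata
    metadata = None

# Versions listed in this README (None = no specific version given).
LISTED_VERSIONS = {"torch": "1.6.0", "progress": "1.5", "tensorboard": None}


def installed_version(dist_name):
    """Return the installed version of a distribution, or None if unavailable."""
    if metadata is None:
        return None
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


def report():
    """Map each package name to an (installed, listed) pair of version strings."""
    rows = {"python": (".".join(map(str, sys.version_info[:3])), "3.6.9")}
    for name, listed in LISTED_VERSIONS.items():
        rows[name] = (installed_version(name), listed)
    return rows


if __name__ == "__main__":
    for name, (have, want) in report().items():
        print(f"{name:12s} installed={have} listed={want}")
```

The CUDA version PyTorch was built against can be checked separately with `torch.version.cuda`, and GPU availability with `torch.cuda.is_available()`.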

Human3.6M in exponential map format can be downloaded from here.
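The exponential map stores each joint rotation as a 3-vector whose direction is the rotation axis and whose length is the rotation angle. As an illustration only (this is not the repository's own loader, and `expmap_to_rotmat` is a hypothetical name), the standard Rodrigues formula converts one such vector into a rotation matrix:

```python
import math


def expmap_to_rotmat(r):
    """Convert an exponential-map (axis-angle) 3-vector to a 3x3 rotation matrix.

    Uses Rodrigues' formula R = I + sin(t) K + (1 - cos(t)) K^2, where t = |r|
    and K is the skew-symmetric cross-product matrix of the unit axis r / t.
    """
    theta = math.sqrt(sum(c * c for c in r))
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    if theta < 1e-12:
        return identity  # (near-)zero vector means no rotation
    kx, ky, kz = (c / theta for c in r)
    K = [[0.0, -kz, ky], [kz, 0.0, -kx], [-ky, kx, 0.0]]
    # K^2 via a plain 3x3 matrix product
    K2 = [[sum(K[i][m] * K[m][j] for m in range(3)) for j in range(3)] for i in range(3)]
    s, c = math.sin(theta), 1.0 - math.cos(theta)
    return [[identity[i][j] + s * K[i][j] + c * K2[i][j] for j in range(3)] for i in range(3)]
```

For example, `expmap_to_rotmat([0.0, 0.0, math.pi / 2])` is (up to floating-point error) a 90 degree rotation about the z axis, mapping the x axis onto the y axis.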

AMASS was obtained from the repo; you need to make an account to download it.

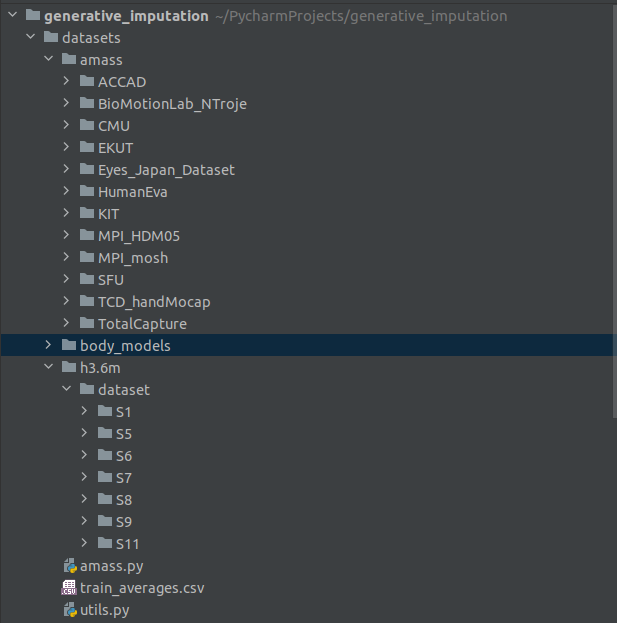

Once downloaded, the datasets should be placed in the datasets folder, as shown in the example image.

It is also necessary to add a saved_models folder, because each trained model produces a large number of checkpoints and other data during training. When training several models, it is cleaner to keep each model's output in its own sub-folder, so saving checkpoints to sub-folders within saved_models is hardcoded.
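A minimal sketch of preparing the expected folders, assuming the scripts are run from the repository root and that only the two top-level folders named here must exist beforehand (it assumes the training code creates the per-model sub-folders itself). The helper names are hypothetical:

```python
from pathlib import Path

# Top-level folders named in this README: downloaded datasets go in datasets/,
# and per-model checkpoints are written under saved_models/.
REQUIRED_DIRS = ("datasets", "saved_models")


def missing_dirs(repo_root="."):
    """Return the required folders that do not yet exist under repo_root."""
    root = Path(repo_root)
    return [name for name in REQUIRED_DIRS if not (root / name).is_dir()]


def prepare_layout(repo_root="."):
    """Create any missing required folders and return the names that were created."""
    created = missing_dirs(repo_root)
    for name in created:
        (Path(repo_root) / name).mkdir(parents=True, exist_ok=True)
    return created
```

Calling `prepare_layout()` from the repository root creates whichever of the two folders is missing; the downloaded datasets still have to be copied into datasets/ by hand.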

To train HG-VAE as in the paper:

python3 main.py --name "HGVAE" --lr 0.0001 --warmup_time 200 --beta 0.0001 --n_epochs 500 --variational --output_variance --train_batch_size 800 --test_batch_size 800

See opt.py for all training options. By default, checkpoints are saved every 10 epochs. Training can be stopped and resumed using the --start_epoch flag, for example:
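When launching many runs, it can be convenient to build the training command from Python so that a resumed run reuses exactly the same flags. The sketch below is a hypothetical helper, not part of the repository; it only uses flags that appear in the command above, and the optional batch-size override reflects the note that batch size depends on GPU memory.

```python
import shlex

# Flags and values copied from the HG-VAE training command in this README.
PAPER_ARGS = [
    "--name", "HGVAE",
    "--lr", "0.0001",
    "--warmup_time", "200",
    "--beta", "0.0001",
    "--n_epochs", "500",
    "--variational",
    "--output_variance",
    "--train_batch_size", "800",
    "--test_batch_size", "800",
]


def build_command(start_epoch=None, batch_size=None):
    """Return the argv list for main.py, optionally resuming or changing the batch size."""
    args = list(PAPER_ARGS)  # copy so PAPER_ARGS itself is never modified
    if batch_size is not None:
        # GPUs with less memory may need a smaller batch than 800.
        for flag in ("--train_batch_size", "--test_batch_size"):
            args[args.index(flag) + 1] = str(batch_size)
    cmd = ["python3", "main.py"]
    if start_epoch is not None:
        cmd += ["--start_epoch", str(start_epoch)]
    return cmd + args


if __name__ == "__main__":
    # shlex.quote (rather than shlex.join) keeps this working on Python 3.6
    print(" ".join(shlex.quote(a) for a in build_command()))
```

The list can be passed directly to `subprocess.run(build_command(), check=True)`; an argument list avoids the quoting problems of a single shell string.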

python3 main.py --start_epoch 31 --name "HGVAE" --lr 0.0001 --warmup_time 200 --beta 0.0001 --n_epochs 500 --variational --output_variance --train_batch_size 800 --test_batch_size 800

This resumes training from the checkpoint saved after epoch 30. We also use the --start_epoch flag to select which checkpoint to load when using the trained model.
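Because checkpoints are only written every 10 epochs by default, an interrupted run has to restart from the last multiple of 10. A minimal sketch of that arithmetic, assuming checkpoints are saved exactly at epochs 10, 20, 30 and so on, and following the convention that `--start_epoch N` loads the checkpoint saved after epoch N - 1 (the function names are hypothetical):

```python
def latest_checkpoint_epoch(last_completed_epoch, save_every=10):
    """Epoch of the most recent periodic checkpoint, or 0 if none has been saved yet."""
    return (last_completed_epoch // save_every) * save_every


def resume_start_epoch(last_completed_epoch, save_every=10):
    """Value to pass to --start_epoch so training resumes from the latest checkpoint.

    Assumes --start_epoch N loads the checkpoint saved after epoch N - 1,
    e.g. 31 loads the checkpoint from epoch 30.
    """
    saved = latest_checkpoint_epoch(last_completed_epoch, save_every)
    if saved == 0:
        raise ValueError("no checkpoint saved yet; start training from scratch")
    return saved + 1
```

For example, a run that stopped partway through epoch 38 (37 epochs completed) would be resumed with `--start_epoch 31`, repeating epochs 31 to 37.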

This code is released under the MIT License.

If you use our code, please cite:

@misc{bourached2022hierarchical,
  title={Hierarchical Graph-Convolutional Variational AutoEncoding for Generative Modelling of Human Motion},
  author={Anthony Bourached and Robert Gray and Ryan-Rhys Griffiths and Ashwani Jha and Parashkev Nachev},
  year={2022},
  eprint={2111.12602},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}