This paper was accepted at AAAI 2022 SA poster session. [pdf]

[09/06/2022] A demo has been released. Try it now!

[06/17/2022] The fast inference mode now outputs the salient object itself along with its mask.

We have improved the quality of the salient object output as follows.

You can obtain a cleaner salient object by tuning the threshold.
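To make the threshold's role concrete, here is a minimal sketch that turns a soft saliency map into a transparent cutout of the salient object. It assumes the predicted mask has been rescaled to [0, 1] and is aligned with the RGB input; the function name `extract_salient_object` and the default threshold of 0.5 are illustrative choices, not TRACER's own code.

```python
import numpy as np


# Hypothetical helper (not part of the TRACER repository).
def extract_salient_object(image: np.ndarray, saliency: np.ndarray,
                           threshold: float = 0.5) -> np.ndarray:
    """Return an H x W x 4 RGBA cutout of the salient object.

    image:    H x W x 3 uint8 RGB array.
    saliency: H x W float array in [0, 1] (assumed scaling of the predicted mask).
    """
    if image.shape[:2] != saliency.shape:
        raise ValueError("image and saliency map must have the same height and width")
    # Binarize the soft map: pixels above the threshold become fully opaque.
    alpha = np.where(saliency > threshold, 255, 0).astype(np.uint8)
    # Append the alpha channel to the RGB image.
    return np.dstack([image, alpha])
```

Raising `threshold` discards low-confidence pixels, so object borders become tighter, but faint or thin parts of the object may disappear.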

We will release a version of TRACER initialized with pre-trained TE-x weights.


[04/20/2022] We have updated the pipeline for inference on a custom dataset without performance evaluation.

- Run the main.py script.

```
TRACER
├── data
│   ├── custom_dataset
│   │   ├── sample_image1.png
│   │   ├── sample_image2.png
.
.
.
```

```
# Example: inference on a custom dataset with a pre-trained TRACER model
python main.py inference --dataset custom_dataset/ --arch 7 --img_size 640 --save_map True
```
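The layout above only requires the images to sit directly in `data/custom_dataset/`. A small helper for populating that folder might look like this; the function name `prepare_custom_dataset` and the set of accepted extensions are assumptions, since this README does not say which formats the inference loader reads.

```python
import shutil
from pathlib import Path
from typing import List

# Assumption: image formats the inference loader accepts; adjust as needed.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


# Hypothetical helper (not part of the TRACER repository).
def prepare_custom_dataset(src_dir: str, tracer_root: str = ".",
                           name: str = "custom_dataset") -> List[Path]:
    """Copy image files from src_dir into <tracer_root>/data/<name>/."""
    dest = Path(tracer_root) / "data" / name
    dest.mkdir(parents=True, exist_ok=True)
    copied = []
    # Iterate in sorted order for determinism; skip non-image files.
    for path in sorted(Path(src_dir).iterdir()):
        if path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES:
            target = dest / path.name
            shutil.copy2(path, target)
            copied.append(target)
    return copied
```

With the default `name`, the folder matches the `--dataset custom_dataset/` argument in the inference command.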

All datasets are publicly available.

- Download DUTS-TR and DUTS-TE from here
- Download DUT-OMRON from here
- Download HKU-IS from here
- Download ECSSD from here
- Download PASCAL-S from here
- Download the edge ground-truth (GT) maps from here

```
TRACER
├── data
│   ├── DUTS
│   │   ├── Train
│   │   │   ├── images
│   │   │   ├── masks
│   │   │   ├── edges
│   │   ├── Test
│   │   │   ├── images
│   │   │   ├── masks
│   ├── DUT-O
│   │   ├── Test
│   │   │   ├── images
│   │   │   ├── masks
│   ├── HKU-IS
│   │   ├── Test
│   │   │   ├── images
│   │   │   ├── masks
.
.
.
```
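Before training or testing, it can help to confirm that every image has a matching ground-truth file. The sketch below assumes that an image and its mask (or edge map) share a file stem, e.g. `0001.jpg` and `0001.png`; that naming convention and the helper names are assumptions, not something this README specifies.

```python
from pathlib import Path
from typing import Dict, Iterable, List, Set


def _stems(directory: Path) -> Set[str]:
    """File names without extension, e.g. '0001' for '0001.jpg'."""
    return {p.stem for p in directory.iterdir() if p.is_file()}


# Hypothetical helper: assumes images and ground truth share file stems.
def find_unpaired(split_dir: str,
                  targets: Iterable[str] = ("masks",)) -> Dict[str, List[str]]:
    """For each target folder (masks, edges, ...), list image stems with no matching file."""
    split = Path(split_dir)
    image_stems = _stems(split / "images")
    return {t: sorted(image_stems - _stems(split / t)) for t in targets}
```

For example, `find_unpaired("data/DUTS/Train", targets=("masks", "edges"))` checks the training split, which also carries edge maps, while `find_unpaired("data/DUT-O/Test")` checks masks only. An empty list for every target means the split is complete.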

- Python >= 3.7
- PyTorch >= 1.8.0
- albumentations >= 0.5.1
- tqdm >= 4.54.0
- scikit-learn >= 0.23.2
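A quick way to check an environment against the minimum versions above, sketched with the standard-library `importlib.metadata`. That module exists from Python 3.8 onward, so this check itself needs a slightly newer interpreter than the 3.7 minimum. The PyPI distribution names (`torch` for PyTorch, `scikit-learn`) are the usual ones; the helper names and the simplified version parsing, which ignores pre-release tags, are our own assumptions.

```python
import sys
from importlib import metadata  # standard library from Python 3.8 onward
from itertools import takewhile
from typing import Dict, List, Tuple

# Minimum versions from the requirement list (PyPI distribution names).
MINIMUM_VERSIONS: Dict[str, str] = {
    "torch": "1.8.0",
    "albumentations": "0.5.1",
    "tqdm": "4.54.0",
    "scikit-learn": "0.23.2",
}


def version_tuple(version: str) -> Tuple[int, ...]:
    """Leading numeric release components, e.g. '1.8.0+cu111' -> (1, 8, 0).

    Simplification (assumption): suffixes such as 'rc1' or 'dev0' are ignored.
    """
    parts = []
    for piece in version.split("+")[0].split("."):
        digits = "".join(takewhile(str.isdigit, piece))
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def is_at_least(installed: str, minimum: str) -> bool:
    a, b = version_tuple(installed), version_tuple(minimum)
    width = max(len(a), len(b))
    # Pad with zeros so that '1.8' compares equal to '1.8.0'.
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


# Hypothetical helper (not part of the TRACER repository).
def check_requirements(minimums: Dict[str, str] = MINIMUM_VERSIONS) -> List[str]:
    """Return human-readable problems; an empty list means every minimum is met."""
    problems = []
    if sys.version_info < (3, 7):
        problems.append("Python >= 3.7 is required")
    for dist, minimum in minimums.items():
        try:
            installed = metadata.version(dist)
        except metadata.PackageNotFoundError:
            problems.append(f"{dist} is not installed (need >= {minimum})")
            continue
        if not is_at_least(installed, minimum):
            problems.append(f"{dist} {installed} is older than {minimum}")
    return problems
```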

- Run the main.py script.

```
# Example: train TRACER-TE0
python main.py train --arch 0 --img_size 320

# Example: test TRACER with a pre-trained model
python main.py test --exp_num 0 --arch 0 --img_size 320
```

- Pre-trained TRACER models are available here.
- Rename the downloaded model to 'best_model.pth' and place the weights at 'results/DUTS/TEx_0/best_model.pth' (here, x denotes the model scale, from 0 to 7).
- Input image sizes for each model are listed below.
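The renaming step can be scripted as below. We assume the `_0` suffix in `TEx_0` is the experiment number passed via `--exp_num` (the test command uses `--exp_num 0`); the function `install_pretrained_weights` and its parameters are hypothetical.

```python
import shutil
from pathlib import Path


# Hypothetical helper; assumes the '_0' suffix is the --exp_num value.
def install_pretrained_weights(downloaded: str, arch: int, exp_num: int = 0,
                               dataset: str = "DUTS", root: str = ".") -> Path:
    """Copy a checkpoint to <root>/results/<dataset>/TE<arch>_<exp_num>/best_model.pth."""
    if not 0 <= arch <= 7:
        raise ValueError("arch must be between 0 and 7 (TE0 to TE7)")
    target = Path(root) / "results" / dataset / f"TE{arch}_{exp_num}" / "best_model.pth"
    target.parent.mkdir(parents=True, exist_ok=True)
    shutil.copyfile(downloaded, target)
    return target
```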

- `--arch`: EfficientNet backbone scale (TE0 to TE7).
- `--frequency_radius`: Radius of the high-pass filter in the MEAM.
- `--gamma`: Channel confidence ratio $\gamma$ in the UAM.
- `--denoise`: Denoising ratio $d$ in the OAM.
- `--RFB_aggregated_channel`: Number of channels in the receptive field blocks.
- `--multi_gpu`: Multi-GPU training option.
- `--img_size`: Input image resolution.
- `--save_map`: Whether to save the predicted masks.
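`--frequency_radius` sets the radius of a frequency-domain high-pass filter. As a generic illustration only (not MEAM's actual implementation, which may operate on feature maps and use a different mask shape), the sketch below zeroes every frequency within the given radius of the zero-frequency component of a single-channel image using NumPy's FFT; the function name and the hard circular cut-off are assumptions.

```python
import numpy as np


# Generic FFT high-pass filter (illustrative; not TRACER's MEAM code).
def fft_high_pass(image: np.ndarray, radius: int) -> np.ndarray:
    """Remove frequencies within `radius` of the spectrum centre; return a real image."""
    if image.ndim != 2:
        raise ValueError("expected a 2-D (single-channel) array")
    h, w = image.shape
    # Shift the zero-frequency (DC) component to the centre of the spectrum.
    spectrum = np.fft.fftshift(np.fft.fft2(image))
    # Keep only frequencies strictly outside a disc of the given radius.
    yy, xx = np.ogrid[:h, :w]
    cy, cx = h // 2, w // 2
    keep = (yy - cy) ** 2 + (xx - cx) ** 2 > radius ** 2
    filtered = spectrum * keep
    # Undo the shift and invert; the imaginary part is only round-off noise.
    return np.real(np.fft.ifft2(np.fft.ifftshift(filtered)))
```

With `radius=0` only the DC term is removed, which is equivalent to subtracting the image mean; larger radii remove more low-frequency content and leave mostly edges and fine texture.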

| Model | Input image size |
|---|---|
| TRACER-Efficient-0 ~ 1 | 320 |
| TRACER-Efficient-2 | 352 |
| TRACER-Efficient-3 | 384 |
| TRACER-Efficient-4 | 448 |
| TRACER-Efficient-5 | 512 |
| TRACER-Efficient-6 | 576 |
| TRACER-Efficient-7 | 640 |
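Because each scale expects a specific `--img_size`, a small lookup avoids mismatched flags. The dictionary below is transcribed from the table; the helper `tracer_command` is hypothetical and only emits flags that appear in the example commands of this README.

```python
from typing import List

# Input image size per TRACER-Efficient scale (transcribed from the table).
IMG_SIZE = {0: 320, 1: 320, 2: 352, 3: 384, 4: 448, 5: 512, 6: 576, 7: 640}


# Hypothetical helper (not part of the TRACER repository).
def tracer_command(mode: str, arch: int, exp_num: int = 0,
                   dataset: str = "custom_dataset/") -> List[str]:
    """Build a main.py argument list with the --img_size matching --arch."""
    if arch not in IMG_SIZE:
        raise ValueError(f"unknown arch {arch}; expected 0 to 7")
    cmd = ["python", "main.py", mode]
    if mode == "test":
        cmd += ["--exp_num", str(exp_num)]
    elif mode == "inference":
        cmd += ["--dataset", dataset]
    elif mode != "train":
        raise ValueError(f"unknown mode {mode!r}")
    cmd += ["--arch", str(arch), "--img_size", str(IMG_SIZE[arch])]
    if mode == "inference":
        # Mirrors the inference example, which saves the predicted masks.
        cmd += ["--save_map", "True"]
    return cmd
```

From the repository root, the returned list can be passed to `subprocess.run(tracer_command("test", 3), check=True)`.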

@article{lee2021tracer,

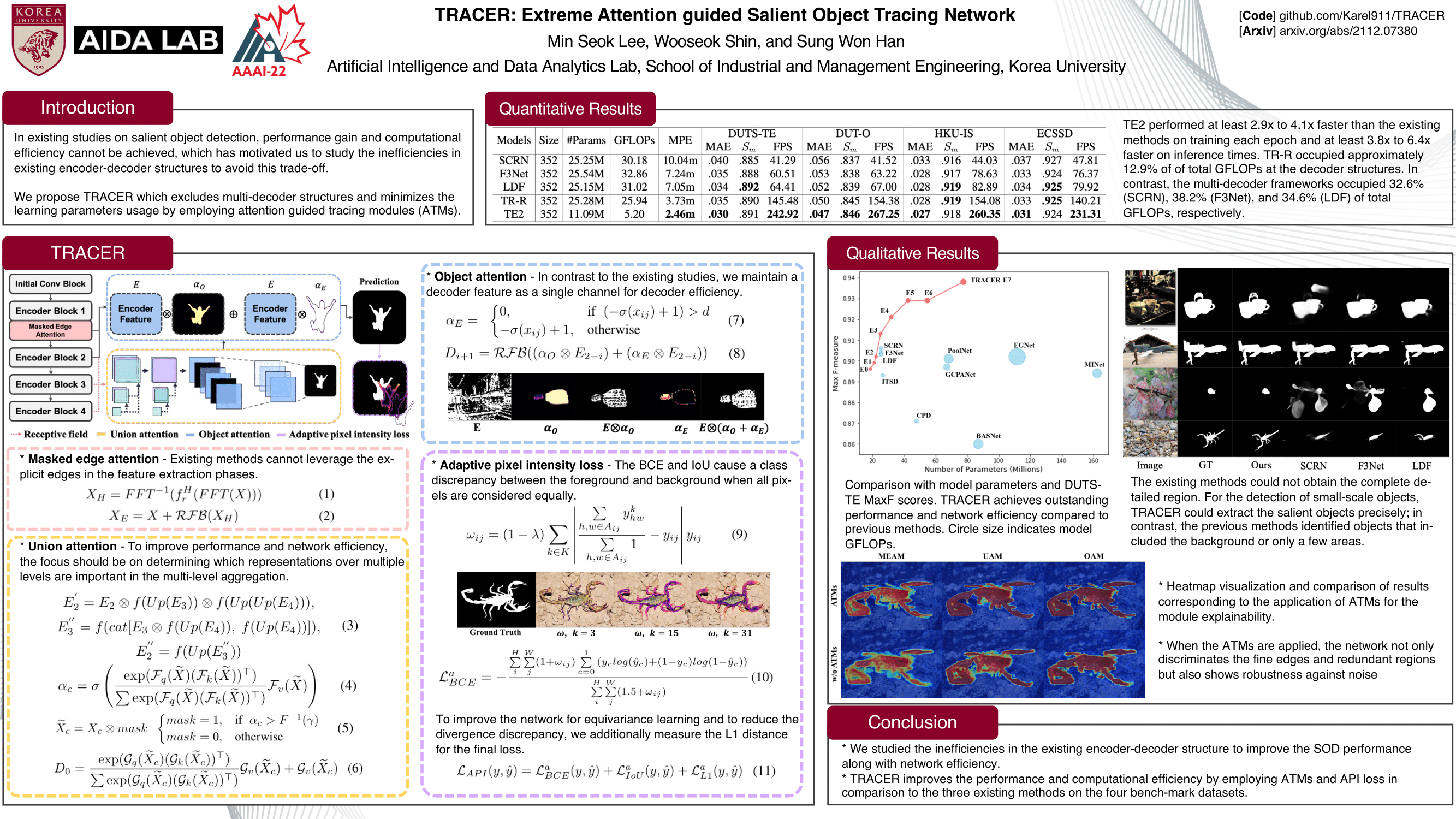

title={TRACER: Extreme Attention Guided Salient Object Tracing Network},

author={Lee, Min Seok and Shin, WooSeok and Han, Sung Won},

journal={arXiv preprint arXiv:2112.07380},

year={2021}

}