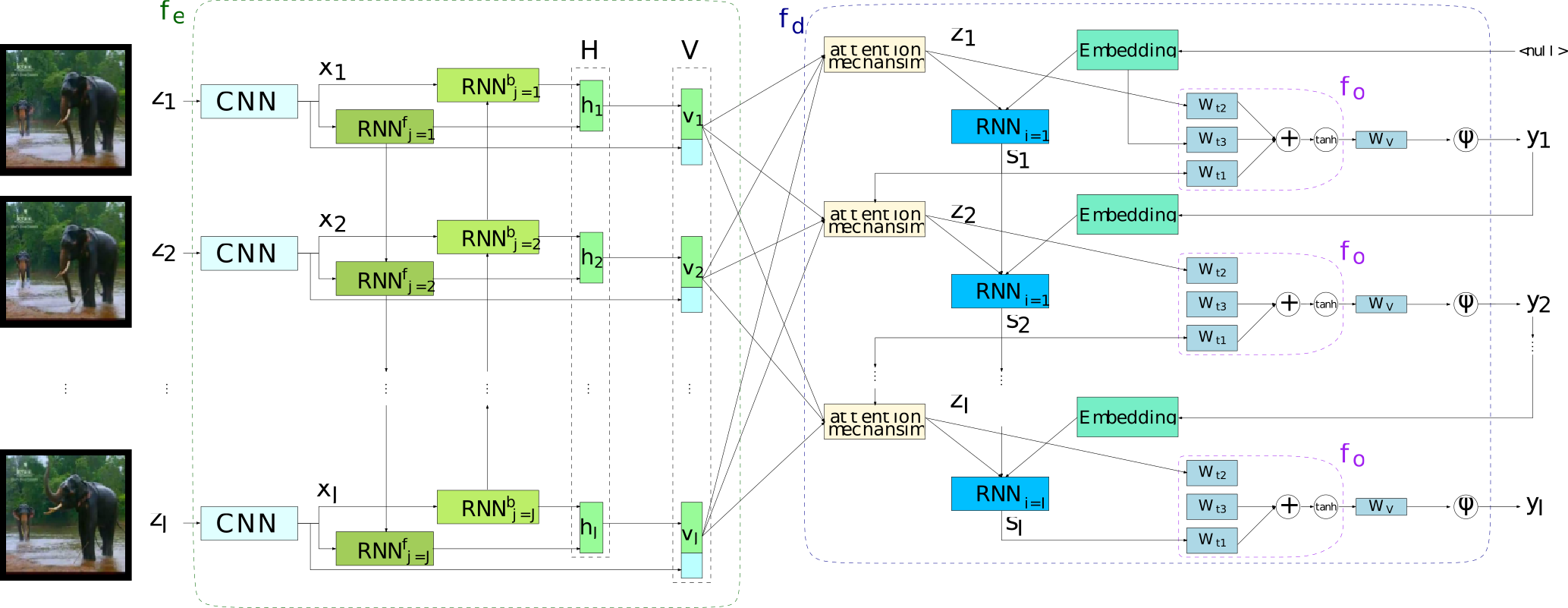

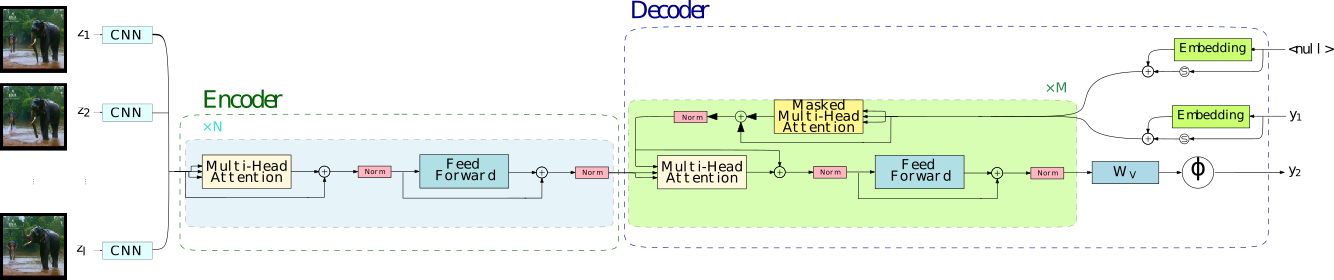

Interactive multimedia captioning with Keras (Theano and TensorFlow). Given an input image or video, we describe its content.

Documentation: https://interactive-keras-captioning.readthedocs.io

Interactive-predictive pattern recognition is a collaborative human-machine framework for obtaining high-quality predictions while minimizing the human effort spent during the process.

It consists of an iterative prediction-correction process: each time the user introduces a correction to a hypothesis, the system reacts by offering an alternative hypothesis that takes the user feedback into account.
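The prediction-correction loop can be illustrated with a small simulation. This is only a sketch under stated assumptions, not the library's implementation: `toy_model`, `simulate_session` and the `EOS` marker are hypothetical names, the "model" is a fixed lookup table, and the simulated user always corrects the first wrong word.

```python
from typing import Callable, List

EOS = "</s>"  # hypothetical end-of-sentence marker used by this sketch


def toy_model(prefix: List[str]) -> List[str]:
    """Hypothetical stand-in for a captioning model.

    Returns the first known caption that starts with the validated prefix,
    or the prefix followed by EOS if no known caption matches.
    """
    candidates = [
        ["a", "man", "is", "playing", "a", "guitar", EOS],
        ["a", "man", "is", "riding", "a", "horse", EOS],
        ["a", "woman", "is", "riding", "a", "bike", EOS],
    ]
    for candidate in candidates:
        if candidate[: len(prefix)] == prefix:
            return candidate
    return prefix + [EOS]


def simulate_session(
    predict: Callable[[List[str]], List[str]],
    reference: List[str],
    max_iterations: int = 50,
) -> int:
    """Count the word corrections a simulated user needs to reach `reference`.

    Assumes `reference` ends with EOS and that `predict(prefix)` always
    returns a hypothesis starting with `prefix`.
    """
    prefix: List[str] = []
    corrections = 0
    for _ in range(max_iterations):
        hypothesis = predict(prefix)
        if hypothesis == reference:
            return corrections
        # Locate the first word where the hypothesis departs from the reference.
        i = 0
        while i < min(len(hypothesis), len(reference)) and hypothesis[i] == reference[i]:
            i += 1
        # The user validates the correct words and types the right word at position i.
        prefix = reference[: i + 1]
        corrections += 1
    raise RuntimeError("no agreement reached within max_iterations")


reference = ["a", "man", "is", "riding", "a", "horse", EOS]
print(simulate_session(toy_model, reference))  # prints 1
```

In this toy run the first hypothesis is wrong at the fourth word; a single correction ("riding") is enough for the system to complete the rest of the caption.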

For further reading about this framework, please refer to "Interactive Neural Machine Translation", "Online Learning for Effort Reduction in Interactive Neural Machine Translation" and "Active Learning for Interactive Neural Machine Translation of Data Streams".

- Transformer model.

- Support for GRU/LSTM networks:

  - Regular GRU/LSTM units.

  - Conditional GRU/LSTM units in the decoder.

  - Multilayered residual GRU/LSTM networks (and their Conditional version).

- Peeked decoder: The previously generated word is an input of the current timestep.

- Online learning and Interactive-predictive correction.

- Attention model over the input sequence (image or video).

  - Supports Bahdanau (Add) and Luong (Dot) attention mechanisms.

  - Also supports doubly stochastic attention (Eq. 14 from arXiv:1502.03044).

- Beam search decoding.

- Ensemble decoding (caption.py).

  - Featuring length and source coverage normalization (reference).

- Model averaging (utils/model_average.py).

- Label smoothing.

- N-best list generation (as byproduct of the beam search process).

- Use of pretrained (GloVe or Word2Vec) word embedding vectors.

- Client-server architecture for web demos.
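To make the beam search and N-best items in the feature list more concrete, here is a minimal, self-contained sketch. It is not the library's decoder: `beam_search`, `toy_probs` and `EOS` are hypothetical names, the "model" is a hand-written probability table, and no length or coverage normalization is applied.

```python
import math
from typing import Callable, Dict, List, Tuple

EOS = "</s>"  # hypothetical end-of-sentence marker used by this sketch


def toy_probs(prefix: List[str]) -> Dict[str, float]:
    """Hypothetical next-word distribution given the words generated so far."""
    table = {
        (): {"a": 0.6, "the": 0.4},
        ("a",): {"cat": 0.7, "dog": 0.3},
        ("the",): {"dog": 0.9, "cat": 0.1},
    }
    # Any prefix not in the table ends the sentence with probability 1.
    return table.get(tuple(prefix), {EOS: 1.0})


def beam_search(
    next_word_probs: Callable[[List[str]], Dict[str, float]],
    beam_size: int = 2,
    max_len: int = 10,
) -> List[Tuple[float, List[str]]]:
    """Return finished hypotheses as (log-probability, words), best first."""
    beams: List[Tuple[float, List[str]]] = [(0.0, [])]
    finished: List[Tuple[float, List[str]]] = []
    for _ in range(max_len):
        # Expand every live hypothesis with every possible next word.
        candidates = []
        for score, words in beams:
            for word, prob in next_word_probs(words).items():
                if prob > 0.0:
                    candidates.append((score + math.log(prob), words + [word]))
        # Keep only the `beam_size` best expansions.
        candidates.sort(key=lambda c: c[0], reverse=True)
        beams = []
        for score, words in candidates[:beam_size]:
            if words[-1] == EOS:
                finished.append((score, words))
            else:
                beams.append((score, words))
        if not beams:
            break
    # The sorted list of finished hypotheses is the N-best list.
    finished.sort(key=lambda c: c[0], reverse=True)
    return finished


for log_prob, words in beam_search(toy_probs, beam_size=2):
    print(round(math.exp(log_prob), 2), " ".join(words))
```

The N-best list falls out of the search for free: every hypothesis that reaches the end-of-sentence marker while still inside the beam is kept, and sorting those hypotheses by score gives the ranking.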

Assuming that you have pip installed, run:

git clone https://github.com/lvapeab/interactive-keras-captioning

cd interactive-keras-captioning

pip install -r requirements.txt

This installs the packages required to run this library.

Interactive Keras Captioning requires the following libraries:

- Our version of Keras (Recommended release 2.2.4.2 or newer).

- Multimodal Keras Wrapper (Interactive_NMT branch).

- Coco-caption evaluation package (only required to perform evaluation). This package requires java (version 1.8.0 or newer).

For accelerating the training and decoding on CUDA GPUs, you can optionally install:

The instructions for data preprocessing (images or videos) are here.

- Set a training configuration in the config.py script. Each parameter is commented. You can also specify the parameters when calling the main.py script, following the syntax Key=Value.

- Train!:

python main.py
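As an illustration of how Key=Value overrides of this kind can be applied on top of a configuration dictionary, consider the sketch below. It is an assumption-laden example, not the parser used by main.py: `apply_overrides` is a hypothetical helper, and `BATCH_SIZE`, `MODEL_NAME` and `USE_CUDNN` are placeholder parameter names that may not match the real ones in config.py.

```python
import ast
from typing import Any, Dict, List


def apply_overrides(params: Dict[str, Any], args: List[str]) -> Dict[str, Any]:
    """Return a copy of `params` updated with `Key=Value` command-line items."""
    updated = dict(params)
    for arg in args:
        key, sep, raw = arg.partition("=")
        if not sep:
            raise ValueError("expected Key=Value, got: %r" % arg)
        if key not in updated:
            raise ValueError("unknown parameter: %r" % key)
        try:
            # Interpret numbers, booleans, lists, etc. as Python literals.
            value = ast.literal_eval(raw)
        except (ValueError, SyntaxError):
            # Anything that is not a valid literal is kept as a plain string.
            value = raw
        updated[key] = value
    return updated


# Hypothetical defaults standing in for the contents of config.py.
defaults = {"BATCH_SIZE": 64, "MODEL_NAME": "baseline", "USE_CUDNN": True}
print(apply_overrides(defaults, ["BATCH_SIZE=32", "MODEL_NAME=my_model"]))
```

Rejecting unknown keys is a deliberate choice in this sketch: a typo in a parameter name fails loudly instead of silently training with the default value.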

Once we have our model trained, we can caption new images or videos using the caption.py script.

In short, if we want to evaluate the test set of the MSVD dataset with an ensemble of two models, we should run something like:

python caption.py \
    --models trained_models/epoch_1 \
             trained_models/epoch_2 \
    --dataset datasets/Dataset_MSVD.pkl \
    --splits test

This library is strongly based on NMT-Keras. Much of the library has been developed together with Marc Bolaños (web page) for other sequence-to-sequence problems.

To see other projects following the same philosophy and style of Interactive Keras Captioning, take a look at:

- NMT-Keras: Neural Machine Translation.

- TMA: Egocentric captioning based on temporally-linked sequences.

- VIBIKNet: Visual question answering.

- Sentence SelectioNN: Sentence classification and selection.

- DeepQuest: State-of-the-art models for multi-level Quality Estimation.

There is a known issue with the Theano backend. When running main.py with this backend, it will show the following message:

[...]

raise theano.gof.InconsistencyError("Trying to reintroduce a removed node")

InconsistencyError: Trying to reintroduce a removed node

It is not a critical error: the model keeps working and the message is safe to ignore. However, if you want the message to go away, use the Theano flag optimizer_excluding=scanOp_pushout_output.
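One way to set this flag is through the THEANO_FLAGS environment variable, which Theano reads when it is first imported. The sketch below does this from Python; `add_theano_flag` is a hypothetical helper, not part of this library, and it has to run before Theano (or Keras with the Theano backend) is imported.

```python
import os


def add_theano_flag(flag: str) -> str:
    """Append `flag` to the comma-separated THEANO_FLAGS environment variable."""
    current = os.environ.get("THEANO_FLAGS", "")
    flags = [f for f in current.split(",") if f]
    if flag not in flags:
        flags.append(flag)
    os.environ["THEANO_FLAGS"] = ",".join(flags)
    return os.environ["THEANO_FLAGS"]


# Call this before `import theano`; flags set afterwards are not picked up.
add_theano_flag("optimizer_excluding=scanOp_pushout_output")
```

Appending to the existing value, rather than overwriting it, keeps any flags that are already set in the environment, such as the device or float precision.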

Álvaro Peris (web page): lvapeab@prhlt.upv.es