This repo contains a basic event processing example. The idea was to play around with Bytewax for event processing from Kafka. Bytewax is a lightweight, easy to install, and easy to deploy tool for real-time pipelines.

Building real-time data pipelines is challenging: we have to deal with changing data volumes, latency, data quality, multiple data sources, and so on. Another common challenge lies in the processing tools themselves: some can't process data event by event, some are too expensive for very fast (real-time) scenarios, and some are hard to deploy. So, to make better decisions about our data stack, we need to understand the main options available for storing and processing events.

With that in mind, the purpose of this repo is to explore some of Bytewax's stream processing capabilities. To do so, we ran a Kafka cluster in Docker and produced fake ride data (similar to Uber rides) to a topic. A Bytewax dataflow then consumed those records, applied some transformations, and wrote the refined data to another topic.

To make it more business-like, we created a fake business context.

Our company, KAB Rideshare, provides ride-hailing services. Due to a recent increase in the number of users, KAB Rideshare needs to improve its data architecture and start processing ride events for analytical purposes. So, our Data Team was given the task of implementing an event processing step. The company already stores all events in a Kafka cluster, so our task is just to choose and implement the processing tool.

After some brainstorming, we decided to start with an MVP using a lightweight but very powerful tool: Bytewax. This way, we can deliver results quickly so the analytics team can do their job. With the time this buys us, we can better evaluate other solutions.

- Implement the Data Gen

- Deploy a Kafka cluster on Docker for testing purposes

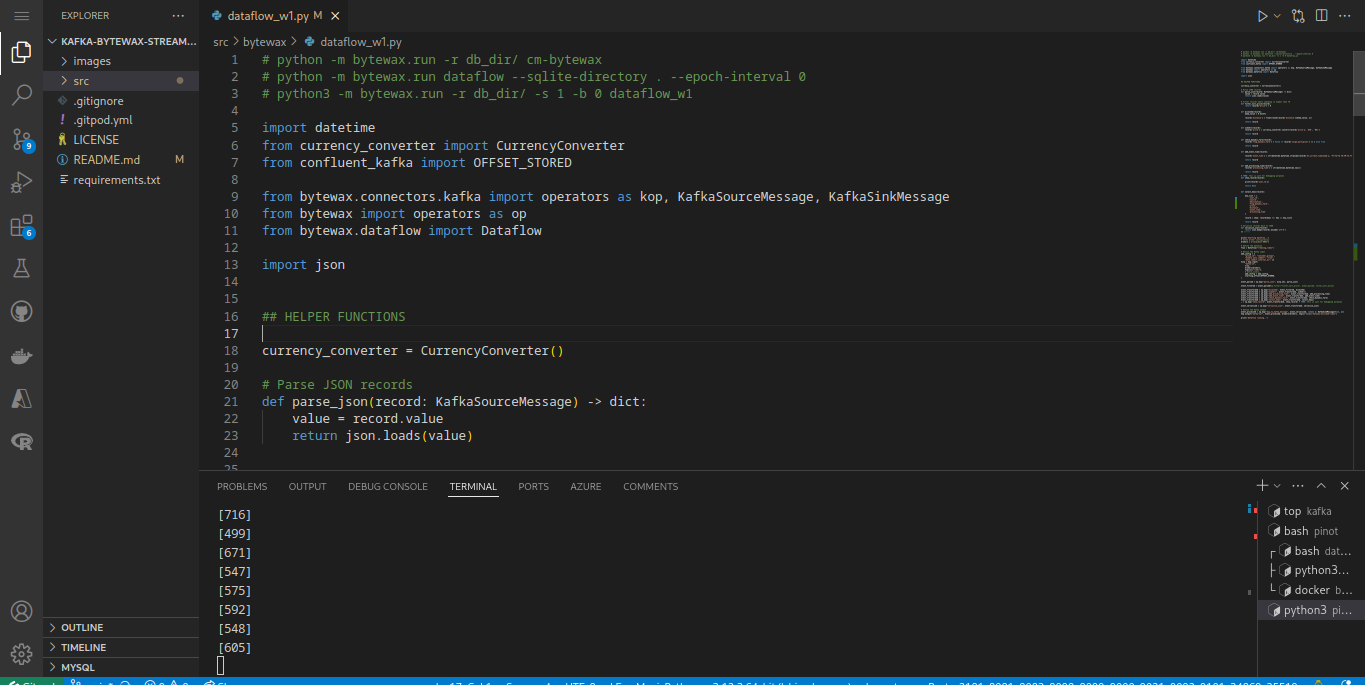

- Implement the Bytewax Dataflow

- Python 3.12.3

- Apache Kafka: for event streaming

- Bytewax: for event processing

- Apache Pinot: for running some simple analytical queries

We'll briefly describe the workflow so you can better understand it.

First of all, we needed some data, so we developed a simple Python data generator that writes events to a Kafka topic. This generator is based on a more complex one we developed at Engenharia de Dados Academy. Each event contains fields such as source, destination, ride price, and user id.
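To give an idea of what such a generator produces, here is a minimal sketch. It is not the generator from this repo: the field names, value ranges, and city list are assumptions made for illustration, and it only builds and serializes events, leaving the actual Kafka producer call out.

```python
import json
import random
import time
import uuid

# Hypothetical city names; the real generator's values are not shown in this README.
CITIES = ["Sao Paulo", "Rio de Janeiro", "Belo Horizonte", "Recife"]


def make_ride(rng: random.Random) -> dict:
    """Build one fake ride event. All field names here are illustrative."""
    source, destination = rng.sample(CITIES, 2)  # two distinct cities
    return {
        "ride_id": str(uuid.UUID(int=rng.getrandbits(128))),
        "user_id": rng.randint(1, 10_000),
        "source": source,
        "destination": destination,
        "distance_miles": round(rng.uniform(0.5, 30.0), 2),
        "price_usd": round(rng.uniform(3.0, 80.0), 2),
        "dynamic_fare": round(rng.uniform(1.0, 2.5), 2),  # assumed price multiplier
        "timestamp": int(time.time() * 1000),  # epoch milliseconds
    }


def encode(ride: dict) -> bytes:
    """Serialize an event as UTF-8 JSON, ready to hand to a Kafka producer."""
    return json.dumps(ride).encode("utf-8")


if __name__ == "__main__":
    rng = random.Random(42)  # seeded so repeated runs pick the same values
    for _ in range(3):
        print(encode(make_ride(rng)))
```

Keeping event creation separate from the producer makes the generator easy to test without a running broker.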

The next step was resource provisioning. Using Docker Compose, we ran a Kafka cluster and Apache Pinot locally. Finally, we developed the Bytewax processing step. It performs the following computations:

- miles to kilometers conversion

- USD to BRL conversion

- add processing time

- add event time (convert timestamp to datetime string)

- add dynamic fare flag

- filter desired columns
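The computations listed above can be sketched as a single plain-Python function that a dataflow could apply to each event. This is an illustration under stated assumptions, not the code in this repo: the input field names, the fixed exchange rate, the output column list, and the rule that a fare is "dynamic" when its multiplier is above 1.0 are all hypothetical.

```python
from datetime import datetime, timezone
from typing import Optional

MILES_TO_KM = 1.609344  # one international mile in kilometers
USD_TO_BRL = 5.0        # hypothetical fixed rate, for illustration only
TIME_FORMAT = "%Y-%m-%d %H:%M:%S"

# Hypothetical output schema; the real dataflow may keep different columns.
OUTPUT_COLUMNS = (
    "ride_id", "user_id", "distance_km", "price_brl",
    "event_time", "processing_time", "is_dynamic_fare",
)


def enrich(ride: dict, now: Optional[datetime] = None) -> dict:
    """Apply the listed computations to one ride event."""
    now = now or datetime.now(timezone.utc)
    # Assumes the event timestamp is in epoch milliseconds.
    event_dt = datetime.fromtimestamp(ride["timestamp"] / 1000, tz=timezone.utc)
    enriched = {
        **ride,
        "distance_km": round(ride["distance_miles"] * MILES_TO_KM, 2),
        "price_brl": round(ride["price_usd"] * USD_TO_BRL, 2),
        "processing_time": now.strftime(TIME_FORMAT),
        "event_time": event_dt.strftime(TIME_FORMAT),
        # Assumed rule: a multiplier above 1.0 means dynamic pricing was applied.
        "is_dynamic_fare": ride["dynamic_fare"] > 1.0,
    }
    # Keep only the desired columns.
    return {col: enriched[col] for col in OUTPUT_COLUMNS}
```

Because `enrich` takes a dict and returns a dict, it can be unit-tested on its own and then passed to whatever map step the processing tool offers.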

As an additional step, we ran queries on Pinot to check the events processed by Bytewax.

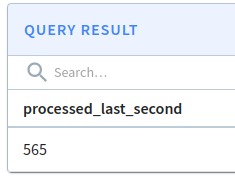

Bytewax seems very interesting. With a simple worker running in pure Python, it was able to process an average of 500 events per second, as the image below shows:

Although we implemented a very simple example here, Bytewax's processing capacity is actually quite interesting.
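This README does not say how the events-per-second figure was measured. As a rough sketch, an average rate can be estimated by counting processed events and dividing by the elapsed wall-clock time. The helper below is hypothetical and times a plain Python loop, not a Bytewax worker.

```python
import time
from typing import Callable, Iterable, Tuple


def measure_throughput(events: Iterable, process: Callable) -> Tuple[int, float]:
    """Run `process` on every event and return (count, events per second)."""
    count = 0
    start = time.perf_counter()
    for event in events:
        process(event)
        count += 1
    elapsed = time.perf_counter() - start
    # Guard against a zero interval on very small inputs.
    rate = count / elapsed if elapsed > 0 else float("inf")
    return count, rate


if __name__ == "__main__":
    count, rate = measure_throughput(range(100_000), lambda e: e * 2)
    print(f"{count} events at about {rate:.0f} events/s")
```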

As a next step, we'll deploy Kafka, Bytewax, and the data generator to a Kubernetes cluster so we can better explore real-world scenarios and Bytewax's scaling capabilities.

You'll find in this section all the steps needed to reproduce this solution.

I'm using Gitpod to work on this project. Gitpod provides a ready-to-use workspace integrated with a code repository host, such as GitHub. In short, it is basically VS Code accessed through the browser, with Python, Docker, and some other tools already installed, so we don't need to install anything on our machine. They offer a free plan with up to 50 hours/month (no credit card required), which is more than enough for practical projects (at least for me).

For the other Python dependencies, please refer to `requirements.txt`, which lists everything we needed.

- Clone the repo:

      git clone https://github.com/KattsonBastos/kafka-bytewax-streaming.git

- Create a Python virtual environment for Bytewax (I'm using virtualenv for simplicity) and activate it:

      python3 -m virtualenv env
      source env/bin/activate

- Install the Python packages with the following command:

      pip install -r requirements.txt

- Provision the resources (Kafka and Pinot):

      cd src/build/
      docker compose up -d

- Create the Kafka topics:

  We'll need two topics: `input-raw-rides`, for events from the data generator, and `output-bytewax-enriched-rides`, for the refined events. First, we need to access the container:

      docker exec -it broker bash

  Then, create both topics:

      kafka-topics --create --topic=input-raw-rides --bootstrap-server=localhost:9092 --partitions=3 --replication-factor=1
      kafka-topics --create --topic=output-bytewax-enriched-rides --bootstrap-server=localhost:9092 --partitions=3 --replication-factor=1

- Start the data generator:

      cd src/data-gen-topic/
      bash run.sh input-raw-rides

  Remember that `input-raw-rides` is the topic we want to save the events into.

- Start the Bytewax dataflow (remember to activate the env created in the second step):

      python3 -m bytewax.run dataflow

  If you want more workers, pass the `-w` option with the number of workers after the dataflow name (refer to the Bytewax docs for more):

      python3 -m bytewax.run dataflow -w <number-of-workers>

  Here you can play with Bytewax to check its performance.

- Check the refined events. Here you can do one or more of the following (it's up to you):

  - go to the Kafka Control Center (port 9021) and take a look at the topic;

  - access the container and run the following command:

        kafka-console-consumer --bootstrap-server=localhost:9092 --topic=output-bytewax-enriched-rides

  - or create a table in Apache Pinot and play with the data. To do so, just run the script `src/pinot/run.sh` and then check the table in the Pinot Controller (port 9000). If you prefer, you can also run the Python script `src/pinot/query.py`. Make sure to replace the query with your own.

Distributed under the MIT License. See LICENSE.txt for more information.

- Use-Case: Analisando Corridas do Uber em Tempo-Real com Kafka, Faust, Pinot e SuperSet | Live #80: all the data transformations and the general idea of this repo were based on this live stream. The main difference is that here we changed the processing tool from Faust to Bytewax.

- Official Bytewax examples: they provide great examples that make it easy to get started with the tool.