main

Universium task project.

To run with Docker using the local configuration (use -f production.yml for production):

docker-compose -f local.yml up

To run migrations inside Docker and create a superuser, run:

docker-compose -f local.yml run --rm django python manage.py migrate

docker-compose -f local.yml run --rm django python manage.py createsuperuser

The same pattern works for other Django management commands, for example importing movie data from CSV files:

docker-compose -f local.yml run --rm django python manage.py import_movie_data --data-dir=data

To run without Docker:

- Create a virtual environment and activate it

- Install the requirements

- Run the migrations

- Create a superuser

- Run the server

Performance improvements

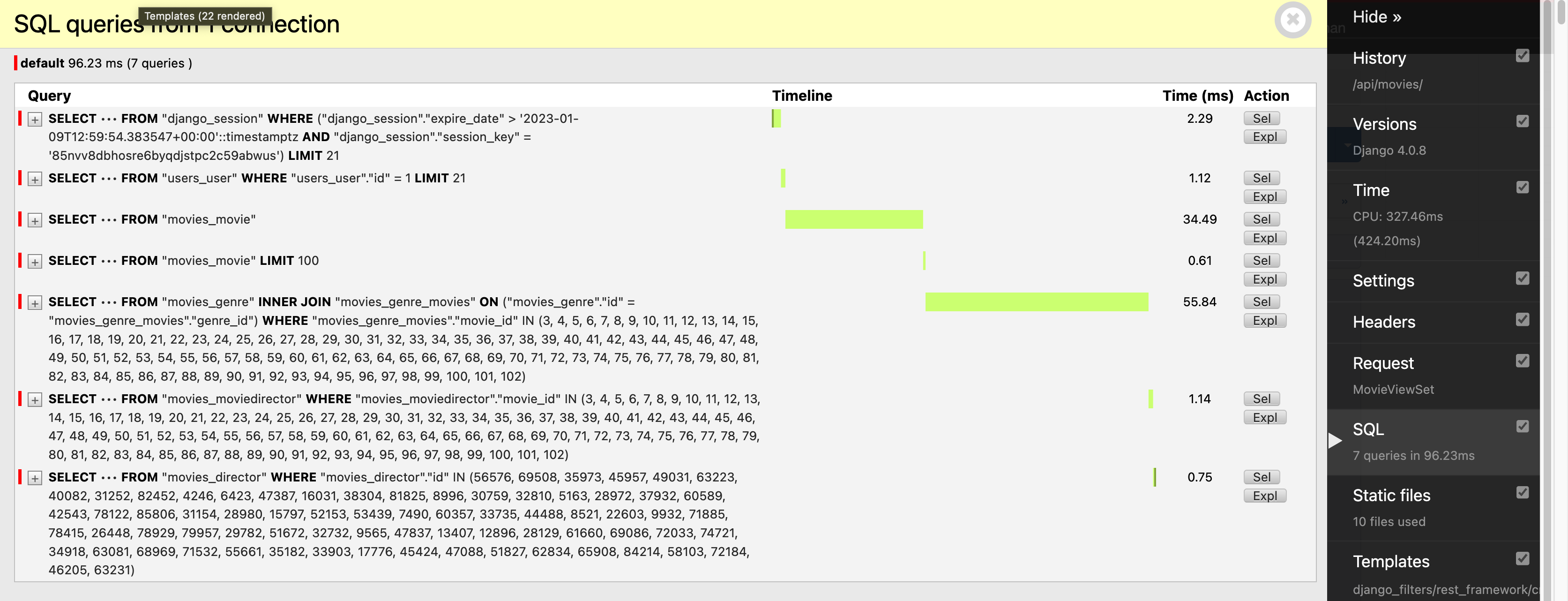

You will notice select_related and prefetch_related calls in the code. They are there to improve query performance. For example, the MovieViewSet class contains the following code:

class MovieViewSet(viewsets.ModelViewSet):
    queryset = Movie.objects.all().select_related("director").prefetch_related("actors")
    serializer_class = MovieSerializer

This is done to avoid N+1 queries. Without select_related and prefetch_related, each movie in the list triggers extra queries for its director and actors, as the following screenshots show:

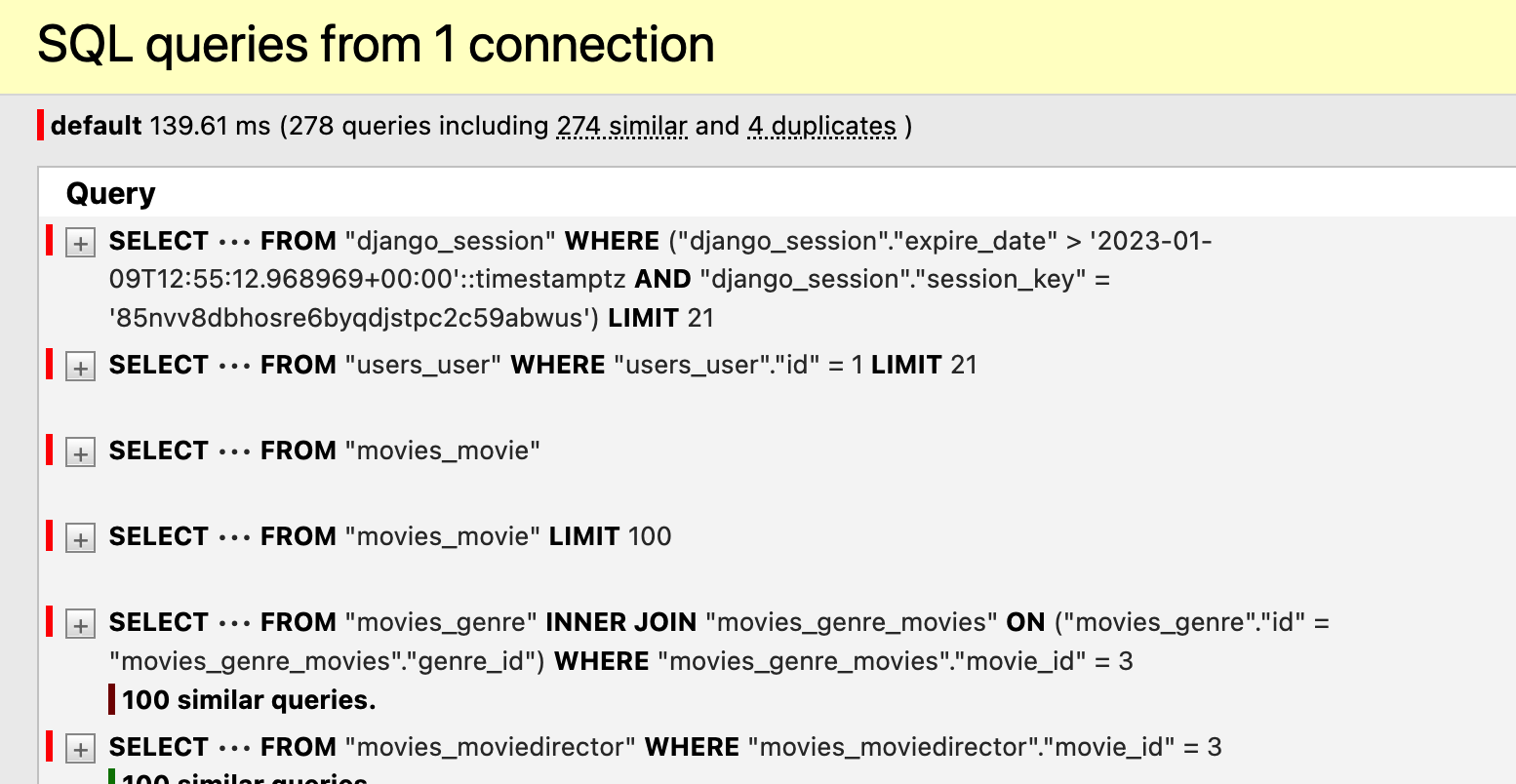

Previously, with duplicate queries (N+1)

After using select_related and prefetch_related
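To make the difference concrete outside of Django, here is a minimal sketch using only the standard-library sqlite3 module. It is not the project's code: the table layout, the sample rows, and the helper names (CountingDB, make_db, list_movies_naive, list_movies_optimized) are all made up for illustration. The optimized version mirrors what the two ORM calls roughly do: select_related pulls the director in through a JOIN, and prefetch_related fetches all actors in one extra query with an IN clause.

```python
import sqlite3


class CountingDB:
    """In-memory SQLite database that counts the SELECTs sent through select()."""

    def __init__(self):
        self.conn = sqlite3.connect(":memory:")
        self.queries = 0

    def select(self, sql, params=()):
        self.queries += 1
        return self.conn.execute(sql, params).fetchall()


def make_db():
    # Hypothetical schema and data, loosely modelled on movie/director/actors.
    db = CountingDB()
    db.conn.executescript("""
        CREATE TABLE director (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE actor (id INTEGER PRIMARY KEY, name TEXT);
        CREATE TABLE movie (id INTEGER PRIMARY KEY, title TEXT, director_id INTEGER);
        CREATE TABLE movie_actors (movie_id INTEGER, actor_id INTEGER);
        INSERT INTO director VALUES (1, 'Director A'), (2, 'Director B');
        INSERT INTO actor VALUES (1, 'Actor X'), (2, 'Actor Y');
        INSERT INTO movie VALUES (1, 'Movie 1', 1), (2, 'Movie 2', 2), (3, 'Movie 3', 1);
        INSERT INTO movie_actors VALUES (1, 1), (1, 2), (2, 2), (3, 1);
    """)
    return db


def list_movies_naive(db):
    # One query for the movies, then two more per movie: the N+1 pattern.
    result = []
    movies = db.select("SELECT id, title, director_id FROM movie ORDER BY id")
    for movie_id, title, director_id in movies:
        director = db.select(
            "SELECT name FROM director WHERE id = ?", (director_id,)
        )[0][0]
        actors = [name for (name,) in db.select(
            "SELECT a.name FROM actor a "
            "JOIN movie_actors ma ON ma.actor_id = a.id "
            "WHERE ma.movie_id = ? ORDER BY a.id", (movie_id,))]
        result.append((title, director, actors))
    return result


def list_movies_optimized(db):
    # Query 1: movies joined with their director (the select_related idea).
    movies = db.select(
        "SELECT m.id, m.title, d.name FROM movie m "
        "JOIN director d ON d.id = m.director_id ORDER BY m.id")
    ids = [movie_id for movie_id, _, _ in movies]
    placeholders = ", ".join("?" for _ in ids)
    # Query 2: all actors for all listed movies at once (the prefetch_related idea).
    actors_by_movie = {movie_id: [] for movie_id in ids}
    for movie_id, name in db.select(
            "SELECT ma.movie_id, a.name FROM actor a "
            "JOIN movie_actors ma ON ma.actor_id = a.id "
            f"WHERE ma.movie_id IN ({placeholders}) ORDER BY a.id", ids):
        actors_by_movie[movie_id].append(name)
    return [(title, director, actors_by_movie[movie_id])
            for movie_id, title, director in movies]


if __name__ == "__main__":
    naive_db, fast_db = make_db(), make_db()
    assert list_movies_naive(naive_db) == list_movies_optimized(fast_db)
    print("naive:", naive_db.queries, "queries")
    print("optimized:", fast_db.queries, "queries")
```

With the three sample movies, the naive version issues 7 queries (1 + 2 per movie) and the optimized version issues 2, regardless of how many movies are listed.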

Additional potential improvements

Inside the import_movie_data script, I read objects from the CSV files and insert them one at a time.

A possible improvement is to read all the objects from the CSV files first and then insert them in bulk. This would reduce the number of queries and improve performance.

Additionally, you have probably noticed that I am using get_or_create(). This is done to avoid duplicate objects. For example, if a movie with the same name and director already exists, no new movie is created. Instead, the existing movie is fetched and its actors are updated.

Django does not currently ship a bulk version of get_or_create(). It could probably be implemented, but I did not think it was needed for the scope of this project. I just wanted to demonstrate that there is room for improvement.

If you are sure that the import script will only run once, it could make sense to use bulk_create().
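As a rough illustration of the "read everything, then insert in bulk" idea, here is a standalone sketch using the standard-library csv and sqlite3 modules. It is not the import_movie_data script: the CSV columns (title, director), the table layout, and the helper names are assumptions for the example. Duplicates are skipped at the database level with a unique constraint and INSERT OR IGNORE. In Django, the closest built-in counterpart is bulk_create(objs, ignore_conflicts=True), which also relies on a unique constraint and, unlike get_or_create(), does not hand back the rows that already existed.

```python
import csv
import io
import sqlite3

# Hypothetical sample data; the real CSV layout may differ.
SAMPLE_CSV = """title,director
Movie 1,Director A
Movie 2,Director B
Movie 1,Director A
"""


def make_conn():
    conn = sqlite3.connect(":memory:")
    # The UNIQUE constraint is what lets the database reject duplicates.
    conn.execute(
        "CREATE TABLE movie ("
        "id INTEGER PRIMARY KEY, title TEXT NOT NULL, director TEXT NOT NULL, "
        "UNIQUE (title, director))")
    return conn


def read_rows(csv_text):
    # Read the whole file into memory before touching the database.
    reader = csv.DictReader(io.StringIO(csv_text))
    return [(row["title"], row["director"]) for row in reader]


def bulk_import(conn, rows):
    # One executemany call instead of one INSERT round trip per object;
    # rows that violate the UNIQUE constraint are silently skipped.
    conn.executemany(
        "INSERT OR IGNORE INTO movie (title, director) VALUES (?, ?)", rows)
    conn.commit()


def count_movies(conn):
    return conn.execute("SELECT COUNT(*) FROM movie").fetchone()[0]


if __name__ == "__main__":
    conn = make_conn()
    bulk_import(conn, read_rows(SAMPLE_CSV))
    print(count_movies(conn), "movies stored")
```

Running the import twice on the same data leaves the table unchanged, which is the property get_or_create() provides in the current per-object approach.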

License: MIT

Settings

Moved to settings.

Basic Commands

Setting Up Your Users

- To create a normal user account, just go to Sign Up and fill out the form. Once you submit it, you'll see a "Verify Your E-mail Address" page. Go to your console to see a simulated email verification message. Copy the link into your browser. Now the user's email should be verified and ready to go.

- To create a superuser account, use this command:

$ python manage.py createsuperuser

For convenience, you can keep your normal user logged in on Chrome and your superuser logged in on Firefox (or similar), so that you can see how the site behaves for both kinds of users.

Type checks

Running type checks with mypy:

$ mypy main

Test coverage

To run the tests, check your test coverage, and generate an HTML coverage report:

$ coverage run -m pytest

$ coverage html

$ open htmlcov/index.html

Running tests with pytest

$ pytest

Live reloading and Sass CSS compilation

Moved to Live reloading and SASS compilation.

Deployment

The following details how to deploy this application.

Docker

See detailed cookiecutter-django Docker documentation.