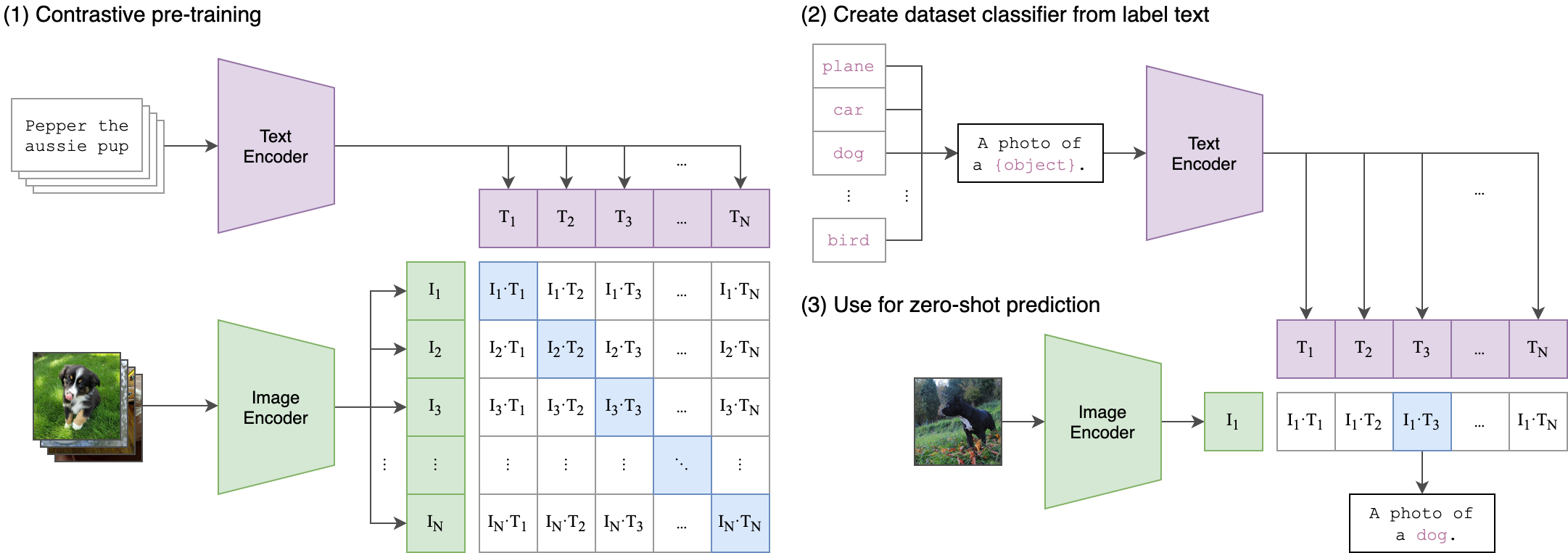

This repository provides an implementation of zero-shot image classification using the CLIP (Contrastive Language-Image Pre-training) architecture. The model is trained on the Flickr30k dataset and can match images with their text descriptions.

CLIP, developed by OpenAI in 2021, is a vision-language model that learns a shared embedding space for images and their text descriptions. It does this by training an image encoder and a text encoder jointly with a contrastive objective.

To set up the environment, clone the repository, create a conda environment, register it as a Jupyter kernel, and install the required packages:

```bash
conda create --name clip_training_env python
conda activate clip_training_env
pip3 install ipykernel
python3 -m ipykernel install --user --name clip_training_env --display-name clip_training_env
pip3 install -r requirements.txt
```

This repository uses the Flickr30k dataset to train the model. To download the dataset, run the following command:

```bash
./download_flickr_dataset.sh
```

To run the image classification script, use the following command:

```bash
python3 ./clip_classification.py
```

The script saves the trained model weights and visualizes image-text pairs.

Note: This is a demonstration. For higher accuracy, please customize the training strategy.

The CLIP model architecture includes:

- Image Encoder

- Text Encoder

- Projection heads for both image and text embeddings
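To make the role of the projection heads concrete, here is a minimal pure-Python sketch, not the repository's code. It assumes each encoder has already produced a feature vector and that a projection head is a single linear layer followed by L2 normalization. The names `ProjectionHead`, `linear`, `l2_normalize`, and `similarity_matrix` are hypothetical, and the actual heads in `clip_classification.py` may include extra layers (for example an activation, dropout, or layer normalization).

```python
import math
import random


def linear(x, weight, bias):
    """Compute y = W x + b, where weight is a list of rows."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weight, bias)]


def l2_normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


class ProjectionHead:
    """Hypothetical projection head: one linear map into a shared space, then L2 normalization."""

    def __init__(self, in_dim, out_dim, seed=0):
        rng = random.Random(seed)
        # Small random weights; a real head would learn these during training.
        self.weight = [
            [rng.gauss(0.0, 1.0 / math.sqrt(in_dim)) for _ in range(in_dim)]
            for _ in range(out_dim)
        ]
        self.bias = [0.0] * out_dim

    def __call__(self, features):
        return l2_normalize(linear(features, self.weight, self.bias))


def similarity_matrix(image_embs, text_embs):
    """Entry [i][j] is the cosine similarity of image i and text j (inputs are unit-length)."""
    return [[sum(a * b for a, b in zip(img, txt)) for txt in text_embs] for img in image_embs]


# Example: 4-dim image features and 3-dim text features both projected to 2 dims.
image_head = ProjectionHead(in_dim=4, out_dim=2, seed=1)
text_head = ProjectionHead(in_dim=3, out_dim=2, seed=2)
img = image_head([0.2, -1.0, 0.5, 0.3])
txt = text_head([1.0, 0.4, -0.7])
print(similarity_matrix([img], [txt]))
```

Because both embeddings are unit-length after projection, their dot product equals their cosine similarity, so image and text features with different original dimensions become directly comparable.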

The training process involves:

- Data preparation and splitting

- Model initialization

- Training loop with validation

- Saving the best model checkpoint
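The training loop optimizes a contrastive objective. The sketch below shows CLIP's symmetric cross-entropy loss over a batch similarity matrix, plus hypothetical helpers for a seeded train/validation split and for picking the epoch with the lowest validation loss. It is plain Python for illustration only. The repository's script presumably uses a deep-learning framework, and the split ratio, the fixed temperature of 0.07 (CLIP's initial value, learned in the original model), and the "lowest validation loss" checkpoint criterion are all assumptions here.

```python
import math
import random


def train_val_split(items, val_fraction=0.2, seed=42):
    """Shuffle deterministically and split; items could be image IDs so that
    all captions of one image land in the same split (an assumption)."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_val = int(len(shuffled) * val_fraction)
    return shuffled[n_val:], shuffled[:n_val]


def log_softmax(row):
    """Numerically stable log-softmax of a list of logits."""
    m = max(row)
    log_sum = m + math.log(sum(math.exp(x - m) for x in row))
    return [x - log_sum for x in row]


def clip_contrastive_loss(similarities, temperature=0.07):
    """Symmetric cross-entropy; similarities[i][j] compares image i with caption j,
    and the matching pairs lie on the diagonal."""
    n = len(similarities)
    logits = [[s / temperature for s in row] for row in similarities]
    # Image-to-text: row i should assign the highest probability to caption i.
    loss_i2t = -sum(log_softmax(logits[i])[i] for i in range(n)) / n
    # Text-to-image: column j should assign the highest probability to image j.
    columns = [[logits[i][j] for i in range(n)] for j in range(n)]
    loss_t2i = -sum(log_softmax(columns[j])[j] for j in range(n)) / n
    return (loss_i2t + loss_t2i) / 2


def select_best_epoch(val_losses):
    """Index of the epoch with the lowest validation loss (whose weights would be saved)."""
    best_epoch, best_loss = None, float("inf")
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss = epoch, loss
    return best_epoch


aligned = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
uniform = [[0.5, 0.5, 0.5] for _ in range(3)]
print(clip_contrastive_loss(aligned), clip_contrastive_loss(uniform))
```

When every image is equally similar to every caption, the loss equals log(n) for a batch of n pairs; it approaches zero as matching pairs dominate their rows and columns.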

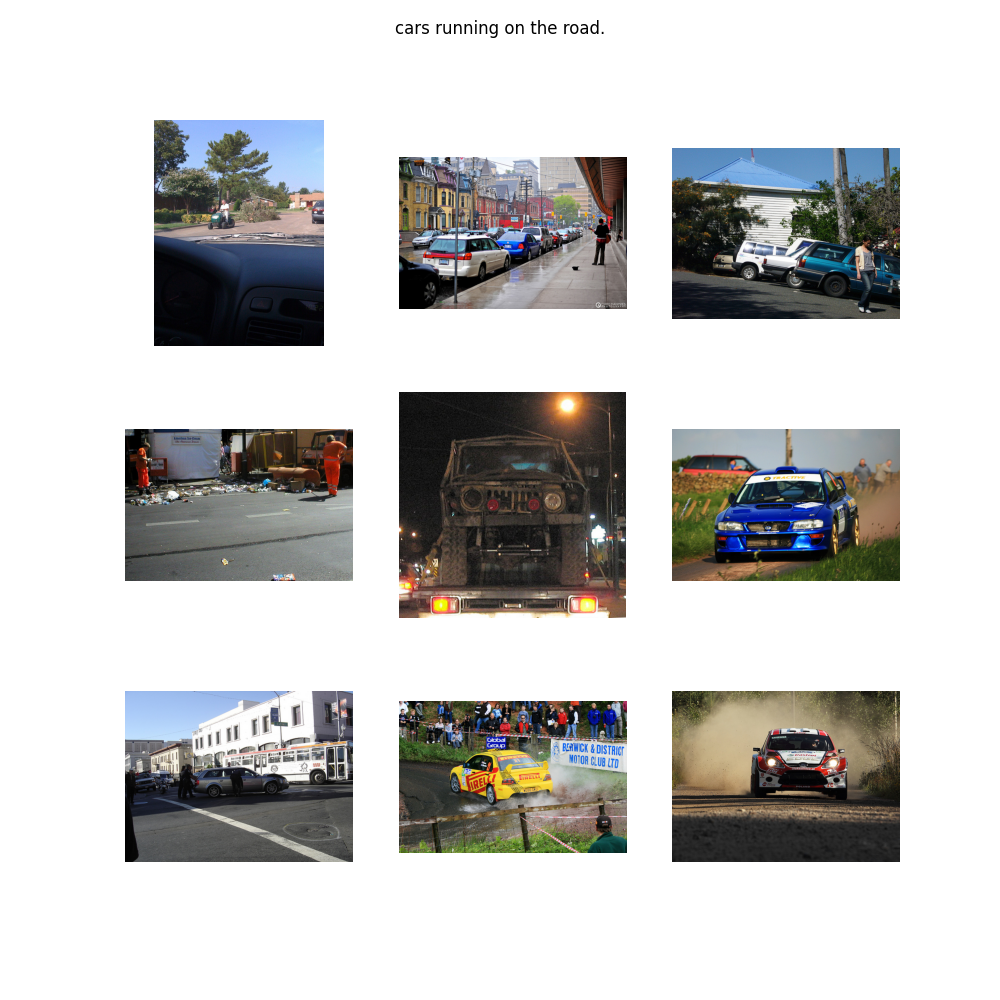

Post-training, the model performs zero-shot classification on a set of predefined queries.
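Zero-shot classification then comes down to comparing embeddings. Here is a minimal sketch, assuming the query texts and images have already been embedded by the trained encoders and projection heads. `classify_image` picks the query whose embedding is closest to an image, and `rank_images_for_query` does the reverse retrieval. Both function names are hypothetical, and this section does not state which direction the script uses for its predefined queries.

```python
import math


def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def classify_image(image_emb, label_embs, labels):
    """Return the label whose text embedding is most similar to the image embedding."""
    scores = [cosine(image_emb, t) for t in label_embs]
    best = max(range(len(labels)), key=lambda i: scores[i])
    return labels[best]


def rank_images_for_query(query_emb, image_embs, top_k=3):
    """Return indices of the top_k images most similar to a text query embedding."""
    scores = [cosine(query_emb, img) for img in image_embs]
    order = sorted(range(len(image_embs)), key=lambda i: scores[i], reverse=True)
    return order[:top_k]


# Toy 2-D embeddings standing in for real projected CLIP embeddings.
labels = ["a photo of a dog", "a photo of a cat"]
label_embs = [[0.9, 0.1], [0.1, 0.9]]
print(classify_image([1.0, 0.0], label_embs, labels))
print(rank_images_for_query([1.0, 0.0], [[0.0, 1.0], [1.0, 0.0], [0.7, 0.7]], top_k=2))
```

No classifier is trained on the query labels: any new text query can be embedded and compared in the same way, which is what makes the classification zero-shot.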

For further details, refer to the official CLIP repository: [openai/CLIP](https://github.com/openai/CLIP).
