Experimental CUDA voxelizer, a command-line tool to convert polygon meshes to (annotated) voxel grids using the GPU.

- Supported input formats: .ply, .off, .obj, .3DS, .SM and RAY

- Supported output formats:

- Requires a CUDA-compatible video card. Compute Capability 2.0 or higher (Nvidia Fermi or better).

- Since v0.4.4, the voxelizer reverts to a (slower) CPU voxelization method when no CUDA device is found

- 64-bit executables only. 32-bit might work, but you're on your own :)

Program options:

-f <path to model file>: (required) A path to a polygon-based 3D model file.-s <voxel grid length>: The length of the cubical voxel grid. Default: 256, resulting in a 256 x 256 x 256 voxelization grid. Cuda_voxelizer will automatically select the tightest bounding box around the model.-o <output format>: The output format for voxelized models, currently binvox, obj or morton. Default: binvox. Output files are saved in the same folder as the input file.-cpu: Force voxelization on the CPU instead of GPU. For when a CUDA device is not detected/compatible, or for very small models where GPU call overhead is not worth it.-t: Use Thrust library for CUDA memory operations. Might provide speed / throughput improvement. Default: disabled.

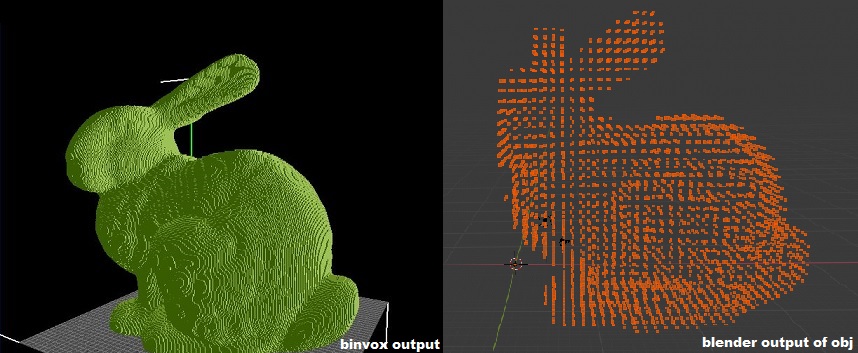

cuda_voxelizer -f bunny.ply -s 256 generates a 256 x 256 x 256 bunny voxel model which will be stored in bunny_256.binvox.

cuda_voxelizer -f bunny.ply -s 64 -o obj -t generates a 64 x 64 x 64 bunny voxel model which will be stored in bunny_64.obj. During voxelization, the Cuda Thrust library will be used for a possible speedup, but YMMV.

The project has the following build dependencies:

- Cuda 8.0 Toolkit (or higher) for CUDA.

- Cuda Thrust libraries (they come with the toolkit).

- Trimesh2 for model importing. Latest version recommended.

- GLM for vector math. Any recent version will do.

- OpenMP

- Install dependency.

- Go to the root directory of the repository.

mkdir buildcd buildcmake -A x64 -DTrimesh2_INCLUDE_DIR="path_to_trimesh2_include" -DTrimesh2_LINK_DIR="path_to_trimesh2_library_dir" ... Recommended to install vcpkg for Windows users. With vcpkg command is:cmake -A x64 -DTrimesh2_INCLUDE_DIR="path_to_trimesh2_include" -DTrimesh2_LINK_DIR="path_to_trimesh2_library_dir" -DCMAKE_TOOLCHAIN_FILE="path_to_vcpkg\scripts\buildsystems\vcpkg.cmake" ..- Copy

src/logsettings.inito destination of executable file. See documentation for settings

You need to change Compute-capability for the CUDA. Set CUDA_ARCH variable for your GPU. More about it CUDA with CMake and compute-capability.

For example, on Windows:

cmake -A x64 -DCMAKE_TOOLCHAIN_FILE=".../vcpkg/scripts/buildsystems/vcpkg.cmake" -DTrimesh2_INCLUDE_DIR=".../trimesh2/include" -DTrimesh2_LINK_DIR="...\trimesh2\lib.Win64.vs142" -DCUDA_ARCH="61" ..

It is not supported option in this fork. CMake is highly recommended But you can use Visual Studio 2019 project solution is provided in the msvc folder. It is configured for CUDA 10.2, but you can edit the project file to make it work with lower CUDA versions. You can edit the custom_includes.props file to configure the library locations, and specify a place where the resulting binaries should be placed.

<TRIMESH_DIR>C:\libs\trimesh2\</TRIMESH_DIR>

<GLM_DIR>C:\libs\glm\</GLM_DIR>

<BINARY_OUTPUT_DIR>D:\dev\Binaries\</BINARY_OUTPUT_DIR>

Philipp-M and andreanicastro were kind enough to write CMake support. Since November 2019, cuda_voxelizer also builds on Travis CI, so check out the yaml config file for more Linux build support.

cuda_voxelizer implements an optimized version of the method described in M. Schwarz and HP Seidel's 2010 paper Fast Parallel Surface and Solid Voxelization on GPU's. The morton-encoded table was based on my 2013 HPG paper Out-Of-Core construction of Sparse Voxel Octrees and the work in libmorton.

cuda_voxelizer is built with a focus on performance. Usage of the routine as a per-frame voxelization step for real-time applications is viable. More performance metrics are on the todo list, but on a GTX 1060 these are the voxelization timings for the Stanford Bunny Model (1,55 MB, 70k triangles), including GPU memory transfers. Still lots of room for optimization.

| Grid size | Time |

|---|---|

| 128^3 | 4.2 ms |

| 256^3 | 6.2 ms |

| 512^3 | 13.4 ms |

| 1024^3 | 38.6 ms |

- The .binvox file format was created by Patrick Min. Check some other interesting tools he wrote:

- If you want a good customizable CPU-based voxelizer, I can recommend VoxSurf.

- Another hackable voxel viewer is Sean Barrett's excellent stb_voxel_render.h.

- Nvidia also has a voxel library called GVDB, that does a lot more than just voxelizing.

This is on my list of nice things to add. Don't hesistate to crack one of these yourself and make a PR!

- .obj export to actual mesh instead of a point cloud

- Noncubic grid support

- Memory limits test

- Output to more popular voxel formats like MagicaVoxel, Minecraft

- Optimize grid/block size launch parameters

- Implement partitioning for larger models

- Do a pre-pass to categorize triangles

- Implement capture of normals / color / texture data

If you use cuda_voxelizer in your published paper or other software, please reference it, for example as follows:

@Misc{cudavoxelizer17,

author = "Jeroen Baert",

title = "Cuda Voxelizer: A GPU-accelerated Mesh Voxelizer",

howpublished = "\url{https://github.com/Forceflow/cuda_voxelizer}",

year = "2017"}

If you end up using cuda_voxelizer in something cool, drop me an e-mail (mail (at) jeroen-baert.be)!