¹Nanyang Technological University

²Huazhong University of Science and Technology

³Adobe Research

Project Page | arXiv | Video

TL;DR: 😄 ViTA is a robust and fast video depth estimation model that produces spatially accurate and temporally consistent depth maps from any monocular video.

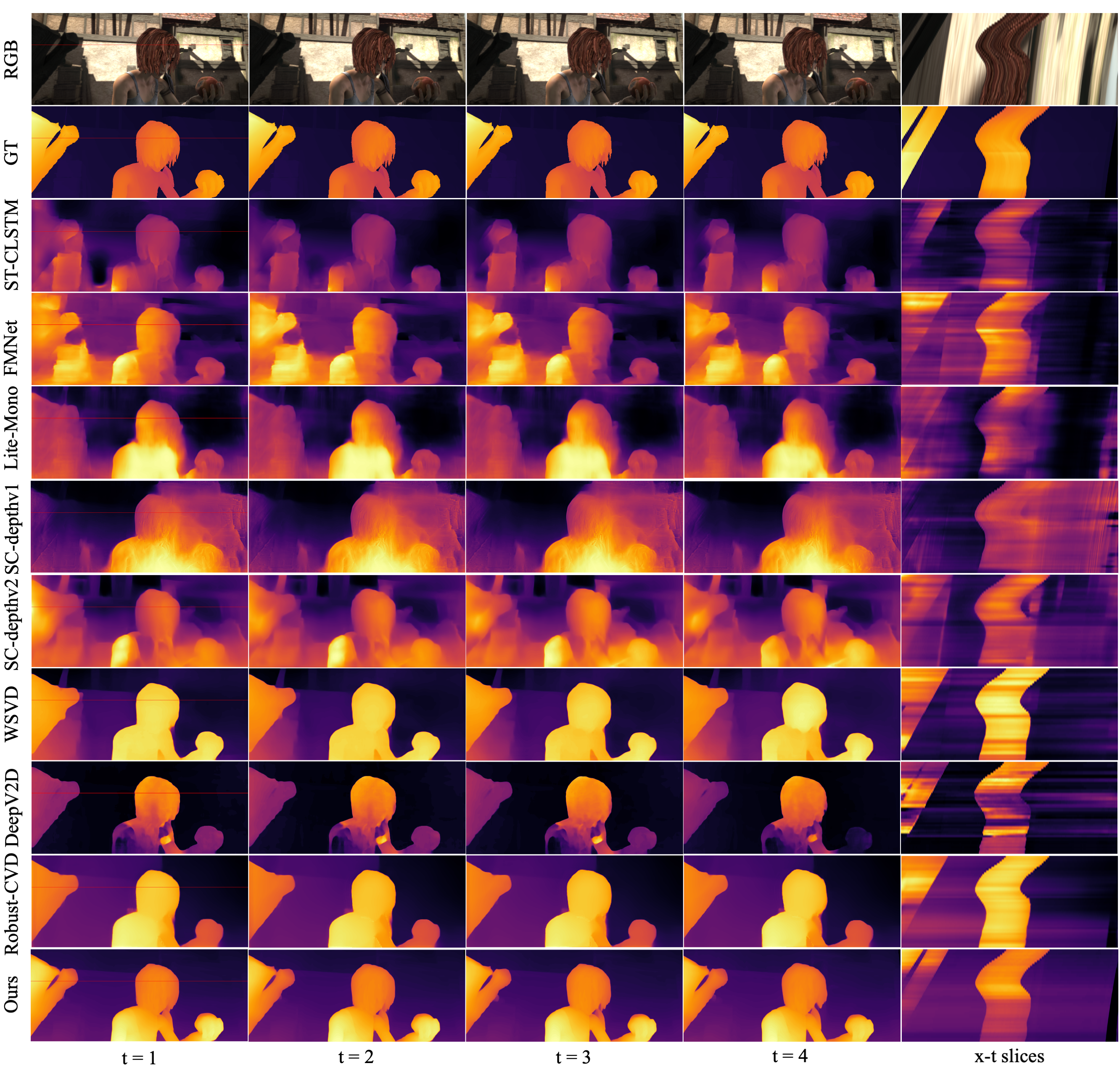

Depth information plays a pivotal role in numerous computer vision applications, including autonomous driving, 3D reconstruction, and 3D content generation. When deploying depth estimation models in practical applications, it is essential that the models generalize well. However, existing depth estimation methods primarily focus on robust single-image depth estimation and therefore produce flickering artifacts when applied to videos. Video depth estimation methods, on the other hand, either consume excessive computational resources or lack robustness. To address these issues, we propose ViTA, a video transformer adaptor, to estimate temporally consistent video depth in the wild. Specifically, we leverage a pre-trained image transformer (i.e., DPT) and introduce additional temporal embeddings in the transformer blocks. These designs enable ViTA to output reliable results for unconstrained videos. In addition, we present a spatio-temporal consistency loss for supervision. The spatial loss computes the per-pixel discrepancy between the prediction and the ground truth, while the temporal loss regularizes inconsistent outputs for the same point across consecutive frames. To find correspondences between consecutive frames, we design a bi-directional warping strategy based on forward and backward optical flow. At inference time, ViTA no longer requires optical flow estimation, which enables it to estimate spatially accurate and temporally consistent video depth maps with fine-grained details in real time. A detailed ablation study verifies the effectiveness of the proposed components, and extensive zero-shot cross-dataset experiments demonstrate that the proposed method is superior to previous methods. Code is available at https://kexianhust.github.io/ViTA/.
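The abstract describes the spatio-temporal loss only in words. Below is a minimal NumPy sketch of one way such a loss could be written; it is not ViTA's actual training code. Assumptions: depth maps are `(H, W)` arrays; optical flow is `(H, W, 2)` in pixels in `(dx, dy)` order, with the forward flow mapping frame t to t+1 and the backward flow mapping t+1 to t; warping uses nearest-neighbour sampling; occluded pixels are masked with a forward-backward flow consistency check using a threshold `tau`; and both terms use a plain L1 penalty. All function names, `tau`, and the weight `lam` are hypothetical.

```python
import numpy as np


def spatial_loss(pred, gt):
    """Per-pixel L1 discrepancy between a predicted and a ground-truth depth map."""
    return float(np.abs(pred - gt).mean())


def warp_nearest(src, flow):
    """Sample `src` (H, W[, C]) at p + flow[p] for every pixel p (nearest neighbour).

    Returns the warped array and a boolean mask of samples that fell inside the image.
    """
    h, w = flow.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w]
    x2 = np.rint(xs + flow[..., 0]).astype(int)
    y2 = np.rint(ys + flow[..., 1]).astype(int)
    inside = (x2 >= 0) & (x2 < w) & (y2 >= 0) & (y2 < h)
    # Clip so indexing is always valid; out-of-bounds samples are masked by `inside`.
    return src[np.clip(y2, 0, h - 1), np.clip(x2, 0, w - 1)], inside


def _one_direction(d_ref, d_other, flow_ref_to_other, flow_other_to_ref, tau):
    """Mean |d_ref - warp(d_other)| over pixels that pass the occlusion check."""
    warped, inside = warp_nearest(d_other, flow_ref_to_other)
    # Forward-backward check: following the flow there and back should return to p.
    flow_back, _ = warp_nearest(flow_other_to_ref, flow_ref_to_other)
    fb_error = np.linalg.norm(flow_ref_to_other + flow_back, axis=-1)
    valid = inside & (fb_error < tau)
    if not valid.any():
        return 0.0
    return float(np.abs(d_ref - warped)[valid].mean())


def temporal_loss(d_t, d_t1, flow_fwd, flow_bwd, tau=1.0):
    """Bi-directional temporal term: align frame t+1 to t with the forward flow and
    frame t to t+1 with the backward flow, then average the two discrepancies."""
    return 0.5 * (_one_direction(d_t, d_t1, flow_fwd, flow_bwd, tau)
                  + _one_direction(d_t1, d_t, flow_bwd, flow_fwd, tau))


def spatio_temporal_loss(pred_t, pred_t1, gt_t, gt_t1, flow_fwd, flow_bwd, lam=1.0):
    """Spatial term on both frames plus a weighted temporal term on the predictions."""
    spatial = 0.5 * (spatial_loss(pred_t, gt_t) + spatial_loss(pred_t1, gt_t1))
    return spatial + lam * temporal_loss(pred_t, pred_t1, flow_fwd, flow_bwd)
```

For example, if frame t+1 is frame t shifted one pixel to the right (`np.roll(d_t, 1, axis=1)`) with forward flow `(1, 0)` and backward flow `(-1, 0)`, `temporal_loss` returns 0.0; adding a constant 0.5 offset to the second frame (a flicker) raises it to 0.5. Because flow only enters the loss, the network itself needs no flow at inference, consistent with the abstract.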

- [TODO]: Stronger models based on MiDaS 3.1.

- [08/2023] Initial release of inference code and models.

- [08/2023] The paper has been accepted by IEEE Transactions on Multimedia (T-MM).

- Download the checkpoints and place them in the `checkpoints` folder:

  - For vita-hybrid: `vita-hybrid.pth`
  - For vita-large: `vita-large.pth`

- Set up dependencies:

  ```shell
  conda env create -f environment.yaml
  conda activate vita
  ```

- Place one or more input videos in the `input_video` folder, or place image sequences in the `input_imgs` folder.

- Run our model:

  ```shell
  # Input video
  # Run vita-hybrid
  python demo.py --model_type dpt_hybrid --attn_interval=3
  # Run vita-large
  python demo.py --model_type dpt_large --attn_interval=2

  # Input image sequences (xx/01.png, xx/02.png, ...)
  # Run vita-hybrid
  python demo.py --model_type dpt_hybrid --attn_interval=3 --format imgs --input_path input_imgs/xx
  # Run vita-large
  python demo.py --model_type dpt_large --attn_interval=2 --format imgs --input_path input_imgs/xx
  ```

- The results are written to the `output_monodepth` folder.
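As written, the image-sequence commands point `--input_path` at a single folder (`input_imgs/xx`). If you have several sequences, a small wrapper can loop over them. The sketch below reuses only the flags shown in the commands (`--model_type`, `--attn_interval`, `--format imgs`, `--input_path`) with the same model/interval pairings; the helper names and the `MODEL_SETTINGS` table are hypothetical, and it assumes it is run from the repository root with the `vita` environment active.

```python
import subprocess
import sys
from pathlib import Path

# Hypothetical table mirroring the README commands: model name -> (--model_type, --attn_interval).
MODEL_SETTINGS = {
    "vita-hybrid": ("dpt_hybrid", 3),
    "vita-large": ("dpt_large", 2),
}


def find_sequences(imgs_root="input_imgs"):
    """List image-sequence folders (e.g. input_imgs/xx) in a stable, sorted order."""
    return sorted(str(p) for p in Path(imgs_root).iterdir() if p.is_dir())


def build_command(seq_dir, model="vita-hybrid"):
    """Build the demo.py argument list for one image-sequence folder."""
    model_type, attn_interval = MODEL_SETTINGS[model]
    return [
        sys.executable, "demo.py",
        "--model_type", model_type,
        f"--attn_interval={attn_interval}",
        "--format", "imgs",
        "--input_path", seq_dir,
    ]


def run_all(imgs_root="input_imgs", model="vita-hybrid"):
    """Run demo.py once per sequence; check=True stops at the first failure."""
    for seq_dir in find_sequences(imgs_root):
        subprocess.run(build_command(seq_dir, model), check=True)
```

For instance, `run_all("input_imgs", "vita-large")` would issue the vita-large command once for each subfolder of `input_imgs`.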

Our code was developed based on DPT. Thanks for this inspiring work!

If you find our work useful in your research, please consider citing the paper.

```bibtex
@article{Xian_2023_TMM,
  author  = {Xian, Ke and Peng, Juewen and Cao, Zhiguo and Zhang, Jianming and Lin, Guosheng},
  title   = {ViTA: Video Transformer Adaptor for Robust Video Depth Estimation},
  journal = {IEEE Transactions on Multimedia},
  year    = {2023},
  doi     = {10.1109/TMM.2023.3309559}
}
```

Please refer to LICENSE for more details.

Please contact Ke Xian (ke.xian@ntu.edu.sg or xianke1991@gmail.com) if you have any questions.