Pre-training-free Image Manipulation Localization through Non-Mutually Exclusive Contrastive Learning (ICCV 2023)

🏀Jizhe Zhou, 👨🎓Xiaochen Ma, 💪Xia Du, 🇦🇪Ahmed Y. Alhammadi, 🏎️Wentao Feng*

This is the official repo of our paper Pre-training-free Image Manipulation Localization through Non-Mutually Exclusive Contrastive Learning.

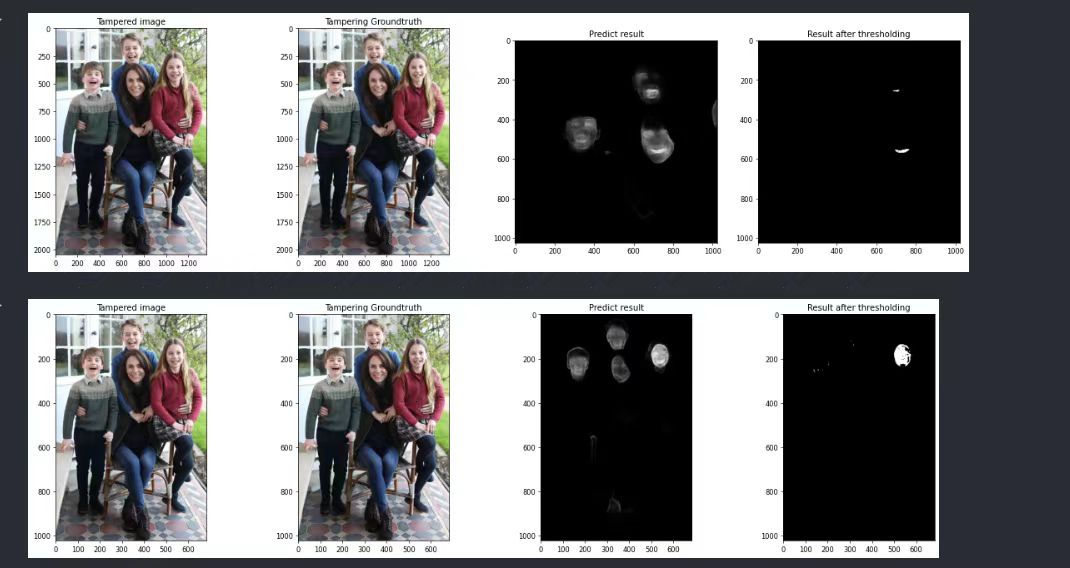

🌳[Spring Specialties] 🔥(April 3rd) We also tested the 2'14'' video of Kate, and NCL discovers strong manipulation traces around her entire body, indicating that this video was faked through the "reenactment" technique. Video reenactment commonly animates a still image (here, Kate's photo) with the motion of another human actor (the driving video). This is also why the background in this 2'14'' video is frozen still.

🎅[Christmas Specialties] Training code for our IML-ViT is now released. Note that IML-ViT is the latest benchmark (as of Mar. 2024) and possibly the only fully open-source benchmark on GitHub. 🎄🎄

📸 If you find this helpful, please cite our work and star this repo.

Version #DG773, updated on 8th Apr.

- Due to Google Drive issues, the "Preparation" section may fail to run. This WON'T break the notebook; just ignore it and execute the remaining sections in sequence.

- The loaded CaCL-Net is the NCL model proposed in our paper. The nickname "CaCL-Net" comes from a local Macau restaurant called "CaCL", where we came up with the NCL idea.
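Regarding the first note above: if you are unsure whether the "Preparation" section finished, a quick check of the files the later sections rely on can help. The following is only a minimal sketch. The names `SampleData` and `20230319-010.pth` are taken from the notebook cells, while the helper `missing_assets` is a hypothetical name introduced here for illustration.

```python
from pathlib import Path


def missing_assets(root=".", required=("SampleData", "20230319-010.pth")):
    """Return the entries of `required` that do not exist under `root`."""
    root = Path(root)
    return [name for name in required if not (root / name).exists()]


if __name__ == "__main__":
    missing = missing_assets()
    if missing:
        print("Not found yet:", missing)
    else:
        print("All assets found; continue with the next section.")
```

An empty list means both the sample folder and the checkpoint are in the working directory, so the remaining sections can be executed in sequence.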

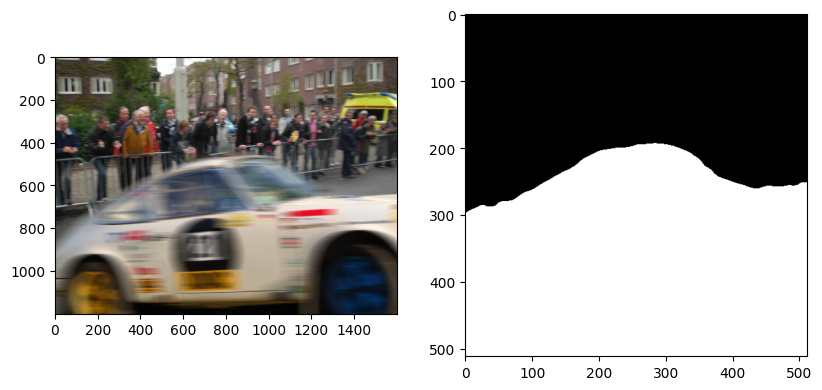

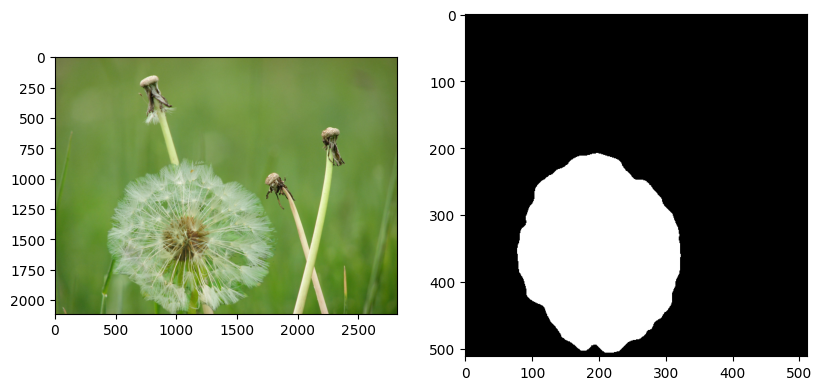

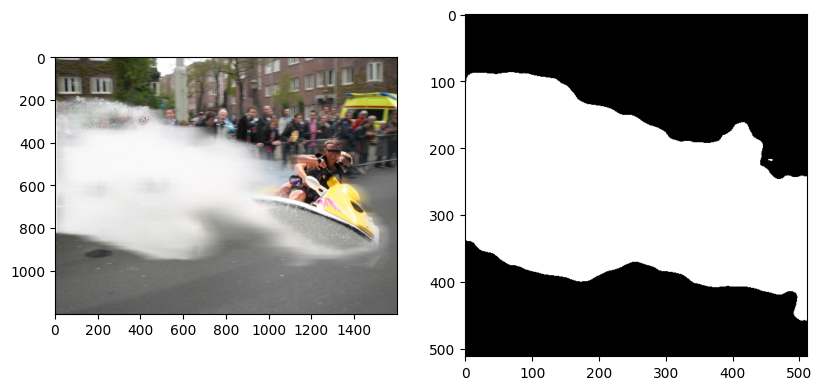

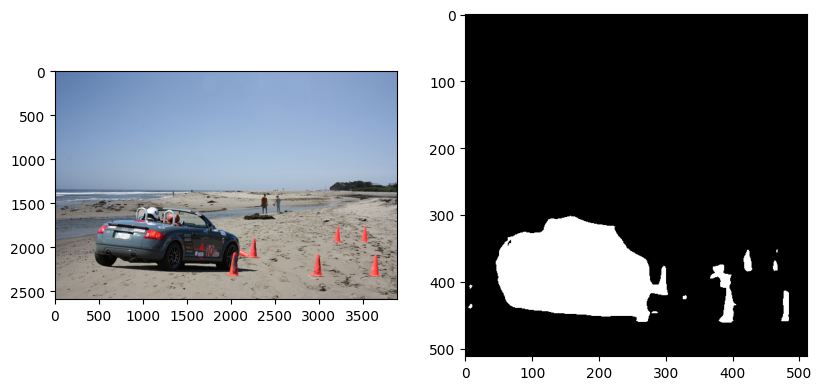

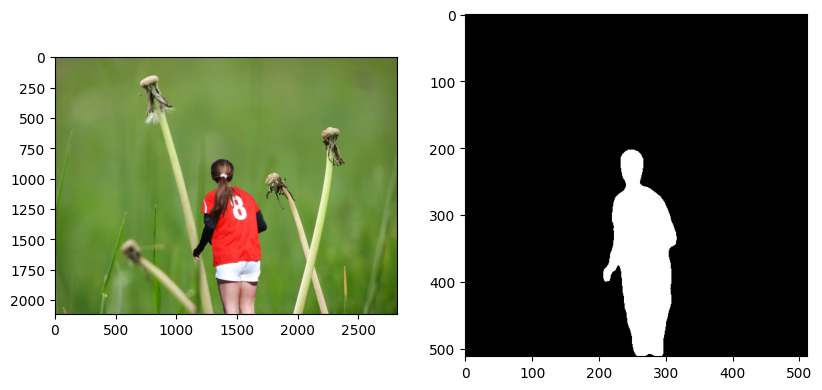

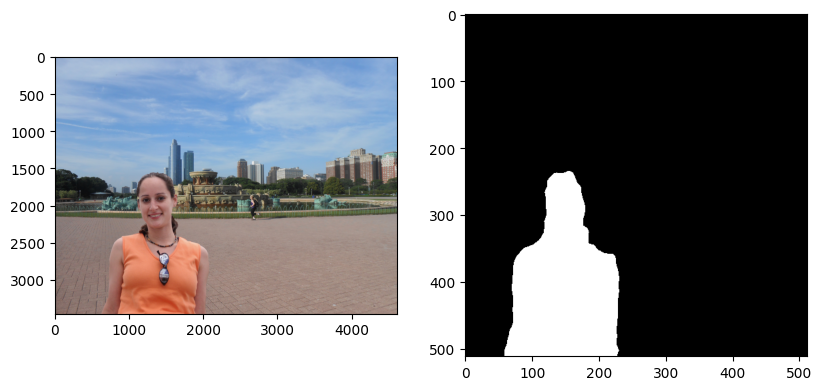

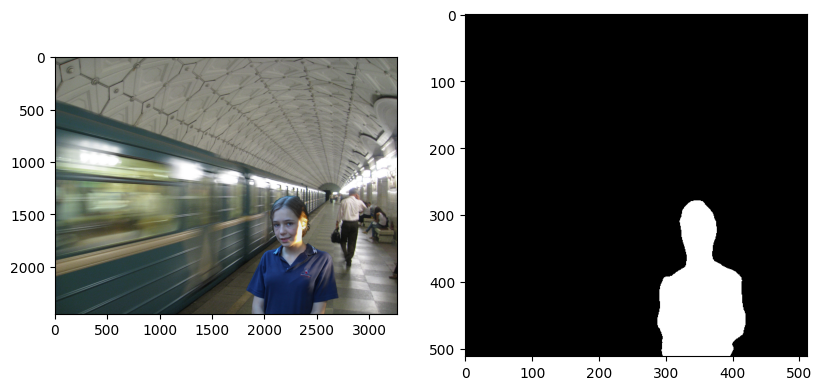

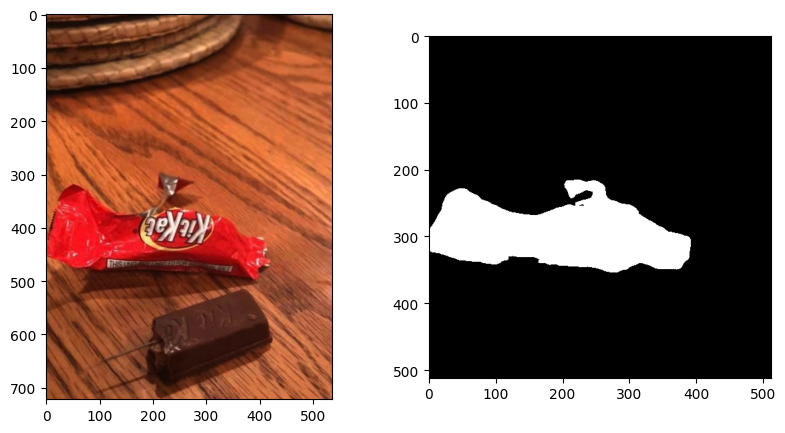

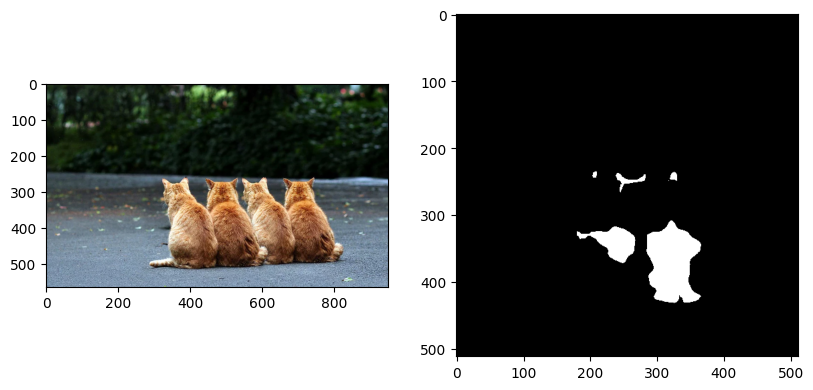

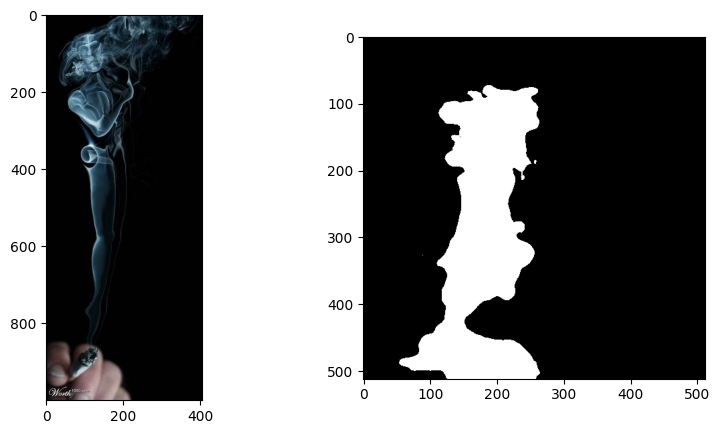

- The 4th section, "Result Display", shows some representative results of NCL on frequently compared images. Use the scrollbar on the right of this section to view all pictures. Random selection from the picture pool will be added soon. Stay tuned.

- We built a small playground in the 5th section, "Test Samples From Web". Replace the default image URLs stored in "urls=[...]" with your own and re-execute this section to get the results of NCL on your customized input! We hope you enjoy it; please contact us if any exception occurs.
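Before pasting your own links into the playground, it may help to filter out entries that are obviously not image URLs. The sketch below is a heuristic only, not part of the notebook: `looks_like_image_url`, `IMAGE_EXTENSIONS`, and the `example.com` links are hypothetical names and placeholders, and some valid image URLs carry no file extension at all.

```python
from urllib.parse import urlparse

# Hypothetical extension whitelist; adjust it to the formats you expect.
IMAGE_EXTENSIONS = (".jpg", ".jpeg", ".png", ".bmp", ".webp")


def looks_like_image_url(url):
    """Heuristic: http(s) scheme, non-empty host, image-like path suffix."""
    parts = urlparse(url)
    return (
        parts.scheme in ("http", "https")
        and bool(parts.netloc)
        and parts.path.lower().endswith(IMAGE_EXTENSIONS)
    )


candidate_urls = [
    "https://example.com/photos/tampered.JPG",  # kept
    "ftp://example.com/image.png",              # dropped: not http(s)
    "https://example.com/gallery/",             # dropped: no image suffix
]
urls = [u for u in candidate_urls if looks_like_image_url(u)]
print(urls)  # ['https://example.com/photos/tampered.JPG']
```

The filtered `urls` list can then be used in place of the default one in the "Test Samples From Web" section.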

We are the Special Interest Group on IML, led by Associate Researcher 🏀 Jizhe Zhou and Professor 👨🏫 Jiancheng Lv, at Sichuan University 🇨🇳. Please refer to here for more information.

Also, here are some of our other works. 🀄 Feel free to cite and star them.

- ➡️ [🎄Christmas Special Update🎅] Training code for IML-ViT is now released: IML-ViT. Note that IML-ViT is the latest benchmark (as of Dec. 2024) and possibly the only fully open-source benchmark you can find. Feel free to test it and contact us with any exceptions or questions!

- ➡️ Our corrected NIST16 dataset, in which duplicated images and the label leakage problem are fully addressed. Training and testing splits are also provided. NIST16-Deduplicated (Nov. 22nd)

- 1🥇 Our latest benchmark and the first pure ViT-based IML build. IML-ViT

- 2🥇 Our implementation of MVSS-Net (ICCV 2021 by Dong et al.) in PyTorch, with training code embedded. MVSS-NetPytorch-withTraining

- 3🥈 Our corrected CASIAv2 dataset, with ground-truth mask correctly aligned. Casia2.0-Corrected

- 4🥉 Our implementation of the milestone ManTra-Net (CVPR 2019 by Wu et al.) in PyTorch, with training code embedded. Mantra-NetPytorch-withTraining

- 5🥈 Our Manipulation Mask Generator (PRML 2023 by Yang, et al.), which crawls real-life, open-posted tampered images and auto-generates high-quality IML datasets in Python, with code embedded. Manipulation-Mask-Generator

- 6🏅 Our attempts at applying MAE to image manipulation localization in PyTorch. Manipulation-MAE

This repo will be under continuous construction. You can reach our latest work right from here. Stay tuned.

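    import shutil

    # Remove a stale sample folder from a previous run; ignore_errors avoids
    # an exception when the folder does not exist yet.
    shutil.rmtree("SampleData", ignore_errors=True)

    # Download and unpack CaCLNet.zip (Colab shell commands).
    !gdown https://drive.google.com/uc?id=13G-Ay5Sx7o2jpG_AdjVA2drpFXZ0R2kJ
    !unzip CaCLNet.zip
    Sample_path = 'SampleData'

    # Download the second file and record the checkpoint name.
    !gdown https://drive.google.com/uc?id=1urqD-AqGiHSB8k3ruz2HJuu-mBxWqqf0
    CaCLNet_path = '20230319-010.pth'
    !rm CaCLNet.zip

    import os

    # Collect the paths of all sample images.
    SampleList = []
    for file in os.listdir(Sample_path):
        name = os.path.join(Sample_path, file)
        SampleList.append(name)
    print(SampleList)

    import torch

    # The checkpoint stores the whole model object, not just a state dict.
    # Note: PyTorch 2.6+ defaults torch.load to weights_only=True, so loading
    # a fully pickled model there may require passing weights_only=False.
    CaCLNet = torch.load('/content/20230319-010.pth')

    import cv2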

    import custom_transforms as tr
    from PIL import Image
    from torchvision import transforms
    from torchvision.utils import make_grid, save_image
    from utils import decode_seg_map_sequence
    import torch
    from matplotlib import pyplot
    import requests
    from io import BytesIO
    import numpy as np

    # Normalize with the ImageNet mean/std, then convert to a tensor.
    composed_transforms = transforms.Compose([
        tr.Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)),
        tr.ToTensor()])

    for name in SampleList:
        # OpenCV loads images as BGR; reorder the channels to RGB.
        im = cv2.imread(name)
        b, g, r = cv2.split(im)
        rgb = cv2.merge([r, g, b])
        image = Image.fromarray(rgb)
        image = image.resize((512, 512), Image.BILINEAR)
        target = 0.0  # Consistent with training process
        sample = {'image': image, 'label': target}
        tensor_in = composed_transforms(sample)['image'].unsqueeze(0)
        tensor_in = tensor_in.cuda()
        CaCLNet.eval()
        _, _, _, output = CaCLNet(tensor_in)
        # Per-pixel argmax over the class dimension gives the predicted mask.
        # Note: recent torchvision releases rename `range` to `value_range`.
        grid_image = make_grid(
            decode_seg_map_sequence(torch.max(output[:3], 1)[1].detach().cpu().numpy()),
            3, normalize=False, range=(0, 255))
        save_image(grid_image, "mask.png")
        img = cv2.imread("mask.png")
        # Show the input image and the predicted mask side by side.
        pyplot.figure(figsize=(15, 5))
        pyplot.subplot(131)
        pyplot.imshow(rgb)
        pyplot.subplot(132)
        pyplot.imshow(img)
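The expression `torch.max(output[:3], 1)[1]` in the loop above picks, for every pixel, the index of the channel with the highest score. As a dependency-free illustration of that single step, here is a small sketch on a made-up 2-channel, 2x2 score map. The values and the helper name `argmax_over_channels` are invented for this example, and the sketch makes no claim about how many channels CaCL-Net outputs or what each index means.

```python
def argmax_over_channels(scores):
    """scores[c][y][x] -> mask[y][x] holding the index of the largest channel."""
    n_channels = len(scores)
    height, width = len(scores[0]), len(scores[0][0])
    return [
        [max(range(n_channels), key=lambda c: scores[c][y][x]) for x in range(width)]
        for y in range(height)
    ]


toy_scores = [
    [[0.9, 0.2], [0.6, 0.1]],  # channel 0
    [[0.1, 0.8], [0.4, 0.9]],  # channel 1
]
mask = argmax_over_channels(toy_scores)
print(mask)  # [[0, 1], [0, 1]]
```

In the notebook, the resulting index map is what `decode_seg_map_sequence` turns into a color image for display.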

    def test_image_from_web():
        # `urls` is the global list defined in the next cell.
        for url in urls:
            response = requests.get(url)
            # PIL decodes to RGB order; convert() also covers grayscale,
            # palette, and RGBA inputs.
            rgb = np.asarray(Image.open(BytesIO(response.content)).convert('RGB'))
            image = Image.fromarray(rgb)
            image = image.resize((512, 512), Image.BILINEAR)
            target = 0.0  # Consistent with training process
            sample = {'image': image, 'label': target}
            tensor_in = composed_transforms(sample)['image'].unsqueeze(0)
            tensor_in = tensor_in.cuda()
            CaCLNet.eval()
            _, _, _, output = CaCLNet(tensor_in)
            # Note: recent torchvision releases rename `range` to `value_range`.
            grid_image = make_grid(
                decode_seg_map_sequence(torch.max(output[:3], 1)[1].detach().cpu().numpy()),
                3, normalize=False, range=(0, 255))
            save_image(grid_image, "mask.png")
            img = cv2.imread("mask.png")
            pyplot.figure(figsize=(15, 5))
            pyplot.subplot(131)
            pyplot.imshow(rgb)
            pyplot.subplot(132)
            pyplot.imshow(img)

- Images from the Internet

- You can replace the URLs with your own data for testing!

    urls = [
        'http://nadignewspapers.com/wp-content/uploads/2019/11/Kit-11.jpg',
        'https://www.digitalforensics.com/blog/wp-content/uploads/2016/09/digital_image_forgery_detection.jpg',
        'https://assets.hongkiat.com/uploads/amazing-photoshop-skills/topps20.jpg'
    ]

    test_image_from_web()

@InProceedings{Zhou_2023_ICCV,
  author    = {Zhou, Jizhe and Ma, Xiaochen and Du, Xia and Alhammadi, Ahmed Y. and Feng, Wentao},
  title     = {Pre-Training-Free Image Manipulation Localization through Non-Mutually Exclusive Contrastive Learning},
  booktitle = {Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},
  month     = {October},
  year      = {2023},
  pages     = {22346-22356}
}