This repository is the implementation of "Video-Text Representation Learning via Differentiable Weak Temporal Alignment" (CVPR 2022).

Requirements:

- Python 3
- PyTorch (>= 1.0)
- python-ffmpeg with ffmpeg
- pandas
- numpy
- tqdm
- scikit-learn
- numba (== 0.53.1)
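
As a quick sanity check before running anything, here is a minimal sketch that compares the installed packages against the list above using the standard-library `importlib.metadata`. The PyPI distribution names are assumptions (in particular `ffmpeg-python` for the python-ffmpeg bindings), and `find_problems` / `installed_versions` are hypothetical helpers, not part of this repository.

```python
"""Sketch: check the environment against the README's requirement list."""
import shutil
from importlib import metadata
from typing import Dict, List, Optional

# Distribution name -> exact version required (None = any version).
# Assumption: these distribution names are guesses, not taken from the repo.
REQUIRED: Dict[str, Optional[str]] = {
    "torch": None,          # README asks for >= 1.0; checked separately below
    "ffmpeg-python": None,  # assumed distribution name for python-ffmpeg
    "pandas": None,
    "numpy": None,
    "tqdm": None,
    "scikit-learn": None,
    "numba": "0.53.1",      # the only exact pin in the README
}


def find_problems(installed: Dict[str, Optional[str]]) -> List[str]:
    """Compare installed versions (None = not installed) against REQUIRED."""
    problems = []
    for name, pinned in REQUIRED.items():
        version = installed.get(name)
        if version is None:
            problems.append(f"{name}: not installed")
        elif pinned is not None and version != pinned:
            problems.append(f"{name}: found {version}, expected {pinned}")
    # PyTorch only needs a major version of at least 1.
    torch_version = installed.get("torch")
    if torch_version is not None and int(torch_version.split(".")[0]) < 1:
        problems.append(f"torch: found {torch_version}, expected >= 1.0")
    return problems


def installed_versions() -> Dict[str, Optional[str]]:
    """Look up each required distribution in the current environment."""
    found: Dict[str, Optional[str]] = {}
    for name in REQUIRED:
        try:
            found[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    issues = find_problems(installed_versions())
    # The ffmpeg binary itself must also be on PATH.
    if shutil.which("ffmpeg") is None:
        issues.append("ffmpeg binary: not found on PATH")
    print("\n".join(issues) or "All requirements found.")
```

Running it prints every missing or mismatched package; only numba is compared against an exact version, matching the list above.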

The annotation files (.csv) for all datasets are in './data'. After downloading the downstream datasets, place the video files as follows:

    data
    └── downstream
        ├── ucf
        │   └── ucf101
        │       ├── label1
        │       │   ├── video1.mp4
        │       │   :
        │       :
        ├── hmdb
        │   ├── label1
        │   │   ├── video1.avi
        │   │   :
        │   :
        ├── youcook
        │   ├── task1
        │   │   ├── video1.mp4
        │   │   :
        │   :
        ├── msrvtt
        │   └── TestVideo
        │       ├── video1.mp4
        │       :
        └── crosstask
            └── videos
                ├── 105222
                │   ├── 4K4PnQ66LQ8.mp4
                │   :
                :
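
To catch misplaced folders early, the following minimal sketch checks that the sub-directories shown in the tree exist under './data/downstream' and lists videos together with their parent folder. It assumes, as the tree suggests, that the parent folder of each video is its class label (UCF101, HMDB51) or task (YouCook2). `EXPECTED_DIRS`, `check_layout`, and `index_by_folder` are hypothetical names, not part of this repository.

```python
"""Sketch: verify the downstream dataset layout shown in the tree."""
from pathlib import Path
from typing import List, Tuple

# Sub-directories of data/downstream read off the tree.
EXPECTED_DIRS = [
    "ucf/ucf101",
    "hmdb",
    "youcook",
    "msrvtt/TestVideo",
    "crosstask/videos",
]

# Video extensions that appear in the tree.
VIDEO_SUFFIXES = {".mp4", ".avi"}


def check_layout(data_root: str = "./data") -> List[str]:
    """Return the expected directories missing under data_root/downstream."""
    downstream = Path(data_root) / "downstream"
    return [d for d in EXPECTED_DIRS if not (downstream / d).is_dir()]


def index_by_folder(dataset_dir: str) -> List[Tuple[str, str]]:
    """List (parent folder name, video path) pairs, e.g. ("label1", ".../video1.mp4").

    Assumption: the parent folder name is the label or task of the video.
    """
    root = Path(dataset_dir)
    return [
        (p.parent.name, str(p))
        for p in sorted(root.rglob("*"))
        if p.is_file() and p.suffix.lower() in VIDEO_SUFFIXES
    ]


if __name__ == "__main__":
    missing = check_layout()
    print("missing directories:", missing or "none")
```

Files with other extensions (for example notes or archives left next to the videos) are skipped by `index_by_folder`.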

The pretrained weights of our model, word2vec, and the tokenizer can be found here. Place the pretrained model weights in './checkpoint', and word2vec and the tokenizer in './data'.
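
After placing the weights, a quick way to confirm the download is intact is to load the file on the CPU and summarize its contents. This is a sketch under assumptions: the file name `pretrained.pth.tar` is the one used by the evaluation commands in this README, while the internal layout (a plain state dict or a dict wrapping one under a `state_dict` key) is not confirmed here, so the hypothetical helper `describe_checkpoint` handles both.

```python
"""Sketch: inspect the pretrained checkpoint placed in ./checkpoint."""
from pathlib import Path
from typing import Any, Dict, List

# File name taken from the README's evaluation commands.
CHECKPOINT = Path("./checkpoint/pretrained.pth.tar")


def describe_checkpoint(obj: Any, limit: int = 5) -> Dict[str, Any]:
    """Summarize a loaded checkpoint object without assuming its exact layout."""
    if not isinstance(obj, dict):
        return {"type": type(obj).__name__}
    top_keys: List[str] = [str(k) for k in obj]
    # Assumption: weights may be nested under "state_dict"; otherwise use obj.
    weights = obj.get("state_dict", obj)
    names = [str(k) for k in weights] if isinstance(weights, dict) else []
    return {
        "top_level_keys": top_keys[:limit],
        "num_entries": len(names),  # number of stored tensors/entries
        "first_params": names[:limit],
    }


if __name__ == "__main__":
    if not CHECKPOINT.is_file():
        print(f"{CHECKPOINT} not found; place the downloaded weights in ./checkpoint")
    else:
        import torch  # imported lazily so the helper above works without torch

        # map_location="cpu" lets the file load on machines without a GPU.
        ckpt = torch.load(CHECKPOINT, map_location="cpu")
        print(describe_checkpoint(ckpt))
```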

To evaluate the pretrained model on each downstream dataset, run the corresponding script:

    python src/eval_ucf.py --pretrain_cnn_path ./checkpoint/pretrained.pth.tar
    python src/eval_hmdb.py --pretrain_cnn_path ./checkpoint/pretrained.pth.tar
    python src/eval_youcook.py --pretrain_cnn_path ./checkpoint/pretrained.pth.tar
    python src/eval_msrvtt.py --pretrain_cnn_path ./checkpoint/pretrained.pth.tar
    python src/eval_crosstask.py --pretrain_cnn_path ./checkpoint/pretrained.pth.tar
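
To run all five evaluation commands in one go, a small driver such as the following sketch can be used from the repository root. Only the script paths and the `--pretrain_cnn_path` flag come from this README; `build_commands` and `run_all` are hypothetical helpers, and no other options of the evaluation scripts are assumed.

```python
"""Sketch: run the README's evaluation commands one after another."""
import subprocess
import sys
from pathlib import Path
from typing import List

# Dataset suffixes of the src/eval_*.py scripts listed in the README.
TASKS = ["ucf", "hmdb", "youcook", "msrvtt", "crosstask"]


def build_commands(checkpoint: str = "./checkpoint/pretrained.pth.tar",
                   python: str = sys.executable) -> List[List[str]]:
    """One argv list per evaluation script."""
    return [
        [python, f"src/eval_{task}.py", "--pretrain_cnn_path", checkpoint]
        for task in TASKS
    ]


def run_all(commands: List[List[str]]) -> List[int]:
    """Run each command in turn and collect its exit code (no early stop)."""
    codes = []
    for argv in commands:
        print("running:", " ".join(argv))
        codes.append(subprocess.run(argv).returncode)
    return codes


if __name__ == "__main__":
    cmds = build_commands()
    # Only launch if the scripts exist, i.e. we are at the repository root.
    if all(Path(argv[1]).is_file() for argv in cmds):
        print("exit codes:", run_all(cmds))
    else:
        print("src/eval_*.py not found; run this from the repository root.")
```

Using `sys.executable` instead of a bare `python` keeps the evaluations on the same interpreter (and virtual environment) that launched the driver; a failing dataset does not stop the remaining ones, and its non-zero exit code shows up in the final list.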

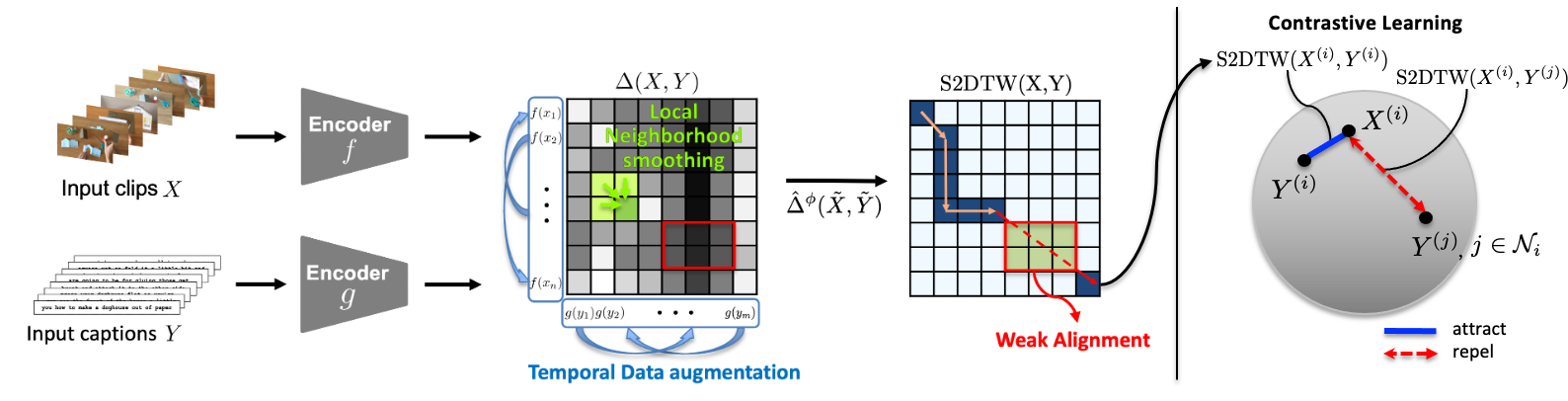

    @inproceedings{ko2022video,
      title={Video-Text Representation Learning via Differentiable Weak Temporal Alignment},
      author={Ko, Dohwan and Choi, Joonmyung and Ko, Juyeon and Noh, Shinyeong and On, Kyoung-Woon and Kim, Eun-Sol and Kim, Hyunwoo J},
      booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
      year={2022}
    }