| Name | Contact |
|---|---|
| Ko Ye Joon |  |
| Jeong Seung Won |  |

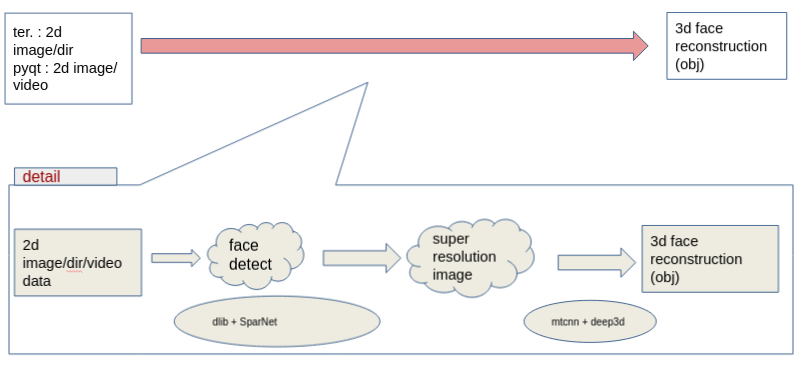

This project covers face super-resolution, 3D face reconstruction, and face frontalization.

We merged the SPARNet and Deep3D models.

Given an image containing one or more people, the model produces a 3D face reconstruction and a frontalized face for the image.

System structure:

- Super resolution: SPARNet

- 3D reconstruction: Deep3D
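The data flow above (super-resolve first, then reconstruct a 3D face from the enhanced image) can be sketched as follows. This is a minimal sketch, not the code in main.py: `run_pipeline`, the stage signature, and the stand-in stages `fake_super_resolve` and `fake_reconstruct` are hypothetical. In the real project the two stages would be SPARNet and Deep3D, and the SR output directory corresponds to fuse_deep3d/data/input in the directory tree.

```python
import tempfile
from pathlib import Path
from typing import Callable, List

# Hypothetical stage signature (NOT this repository's API): a stage takes an
# input file and an output directory and returns the path of the file it wrote.
Stage = Callable[[Path, Path], Path]


def run_pipeline(inputs: List[Path], sr_dir: Path, obj_dir: Path,
                 super_resolve: Stage, reconstruct: Stage) -> List[Path]:
    """Super-resolve each input, then reconstruct a 3D face from the result."""
    sr_dir.mkdir(parents=True, exist_ok=True)
    obj_dir.mkdir(parents=True, exist_ok=True)
    obj_files = []
    for image_path in inputs:
        enhanced = super_resolve(image_path, sr_dir)      # stage 1 (SPARNet)
        obj_files.append(reconstruct(enhanced, obj_dir))  # stage 2 (Deep3D)
    return obj_files


def fake_super_resolve(image_path: Path, out_dir: Path) -> Path:
    """Stand-in for SR: copies the image unchanged."""
    out = out_dir / image_path.name
    out.write_bytes(image_path.read_bytes())
    return out


def fake_reconstruct(image_path: Path, out_dir: Path) -> Path:
    """Stand-in for reconstruction: writes a placeholder .obj file."""
    out = out_dir / (image_path.stem + ".obj")
    out.write_text("# placeholder mesh\n")
    return out


def demo() -> List[Path]:
    """Run the pipeline on one dummy file in a temporary directory."""
    root = Path(tempfile.mkdtemp())
    img = root / "face.jpg"
    img.write_bytes(b"not a real image")
    return run_pipeline([img], root / "sr", root / "obj",
                        fake_super_resolve, fake_reconstruct)
```

Keeping the stages as plain callables makes the order of operations explicit and lets either model be swapped for a stub when testing.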

* conda create -n face3d python=3.6

* conda activate face3d

* tensorflow-gpu==1.12.0 (conda install tensorflow-gpu==1.12.0)

* keras==2.2.4

* torch==1.5.1 (afterwards, run pip uninstall tensorboard)

* torchvision==0.6.1

* mtcnn

* pillow

* argparse

* scipy

* scikit-image

* imgaug

* opencv-python

* dlib

* tqdm

* PyQt5==5.15.1

or

* conda env create -f environment.yaml

* conda activate face3d
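After creating the environment, a small check like the one below can confirm the pinned versions. It is a hedged sketch, not part of the repository: `PINS`, `installed_version`, and `check_pins` are hypothetical names. It reads pip-style package metadata, so a package installed without that metadata is reported as "not installed", and it ignores local version suffixes such as "+cu101".

```python
from typing import Callable, Dict, Optional

try:  # Python 3.8+
    from importlib.metadata import PackageNotFoundError, version as _dist_version
except ImportError:  # older interpreters, e.g. the Python 3.6 env above
    import pkg_resources

    class PackageNotFoundError(Exception):
        pass

    def _dist_version(name: str) -> str:
        try:
            return pkg_resources.get_distribution(name).version
        except pkg_resources.DistributionNotFound:
            raise PackageNotFoundError(name)

# Pinned versions taken from the requirement list above.
PINS = {
    "tensorflow-gpu": "1.12.0",
    "keras": "2.2.4",
    "torch": "1.5.1",
    "torchvision": "0.6.1",
    "PyQt5": "5.15.1",
}


def installed_version(name: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if absent."""
    try:
        return _dist_version(name)
    except PackageNotFoundError:
        return None


def check_pins(pins: Dict[str, str],
               get_version: Callable[[str], Optional[str]] = installed_version
               ) -> Dict[str, str]:
    """Map each missing or mismatched package to a short problem description."""
    problems = {}
    for name, wanted in pins.items():
        found = get_version(name)
        if found is None:
            problems[name] = "not installed"
        elif found.split("+", 1)[0] != wanted:  # drop local suffix like +cu101
            problems[name] = "found {}, expected {}".format(found, wanted)
    return problems


if __name__ == "__main__":
    for pkg, msg in check_pins(PINS).items():
        print("{}: {}".format(pkg, msg))
```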

To compile tf_mesh_renderer, we followed the instructions on this site.

$ git clone https://github.com/google/tf_mesh_renderer.git

$ cd tf_mesh_renderer

$ git checkout ba27ea1798

$ git checkout master WORKSPACE

Set -D_GLIBCXX_USE_CXX11_ABI=1 in ./mesh_renderer/kernels/BUILD.

$ bazel test ...
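The directory tree expects the compiled kernel at fuse_deep3d/renderer/rasterize_triangles_kernel.so. A sketch of copying it there after the build, assuming Bazel writes the library to tf_mesh_renderer/bazel-bin/mesh_renderer/kernels/ (the usual bazel-bin/&lt;package&gt;/ layout). `install_kernel` is a hypothetical helper, and where you keep the tf_mesh_renderer checkout is up to you.

```python
import shutil
from pathlib import Path

KERNEL_NAME = "rasterize_triangles_kernel.so"


def install_kernel(renderer_repo: Path, project_root: Path) -> Path:
    """Copy the built kernel into <project_root>/fuse_deep3d/renderer/."""
    # Assumed Bazel output location for the mesh_renderer/kernels package.
    src = renderer_repo / "bazel-bin" / "mesh_renderer" / "kernels" / KERNEL_NAME
    if not src.is_file():
        raise FileNotFoundError(
            "{} not found; did the Bazel build finish successfully?".format(src))
    dst_dir = project_root / "fuse_deep3d" / "renderer"
    dst_dir.mkdir(parents=True, exist_ok=True)
    dst = dst_dir / KERNEL_NAME
    shutil.copy2(src, dst)  # copy2 also preserves timestamps
    return dst
```

For example, `install_kernel(Path("tf_mesh_renderer"), Path("2dFace_to_3dFace"))` when both checkouts sit side by side.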

.

2dFace_to_3dFace

│ README.md

│ main.py

│ environment.yaml

│

└───BFM

│ BFM_model_front.mat

│ similarity_Lm3D_all.mat

│

└───data_input

│ video/image for input

│

└───network

│ FaceReconModel.pb

│

└───fuse_deep3d

│ data

│ │ └───input # SR result images will be saved here

│ │

│ └───renderer

│ │ rasterize_triangles_kernel.so

│ │ ...

│ │

│ └───src_3d

└───SR_pretrain_models

│ FFHQ_template.npy

│ mmod_human_face_detector.dat

│ shape_predictor_5_face_landmarks.dat

│ SPARNetHD_V4_Attn2D_net_H-epoch10.pth

└───SR

│ └───src

│ ...

│

...

$ git clone https://github.com/KoYeJoon/2dFace_to_3dFace.git

$ cd 2dFace_to_3dFace

Download "01_MorphableModel.mat" from this site and put it into ./fuse_deep3d/BFM.

Download the Coarse data from the first row of the Introduction section of their repository and put "Exp_Pca.bin" into ./fuse_deep3d/BFM.

Download the Face-SPARNet pretrained models from the following link and put them in ./SR_pretrain_models.

Download the model from this link and put "FaceReconModel.pb" into the ./network subfolder.
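Before running, you can check that every downloaded file ended up where the steps above put it. A minimal sketch: `missing_files` and `REQUIRED_FILES` are hypothetical names; the BFM and network paths come from the download steps, and the SR_pretrain_models file names come from the directory tree.

```python
from pathlib import Path
from typing import List, Sequence

# Paths relative to the project root (2dFace_to_3dFace).
REQUIRED_FILES = (
    "fuse_deep3d/BFM/01_MorphableModel.mat",
    "fuse_deep3d/BFM/Exp_Pca.bin",
    "SR_pretrain_models/FFHQ_template.npy",
    "SR_pretrain_models/mmod_human_face_detector.dat",
    "SR_pretrain_models/shape_predictor_5_face_landmarks.dat",
    "SR_pretrain_models/SPARNetHD_V4_Attn2D_net_H-epoch10.pth",
    "network/FaceReconModel.pb",
)


def missing_files(project_root: Path,
                  required: Sequence[str] = REQUIRED_FILES) -> List[str]:
    """Return the required relative paths that are not files under project_root."""
    return [rel for rel in required if not (project_root / rel).is_file()]


if __name__ == "__main__":
    missing = missing_files(Path("."))
    if missing:
        print("Missing files:")
        for rel in missing:
            print("  " + rel)
    else:
        print("All required model files are in place.")
```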

Using the program is easy.

- Use the terminal

1-1. Put your images in the ./data_input directory.

(Precautions)

* You can change the name of the input directory, but if you do, you have to pass it as an argument when you run main.py.

* If you want to use a single image as input (type 'image'), you can skip this step, but you have to pass the arguments (--type image --test_img_path ./your_img_path) when you run main.py.
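The two input modes can be resolved into a list of image files roughly as follows. This is a sketch under assumptions: `collect_inputs` is a hypothetical helper rather than main.py's code, and the accepted extensions in `IMAGE_EXTS` are a guess.

```python
from pathlib import Path
from typing import List

# Assumed set of accepted extensions; main.py may accept a different set.
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".bmp"}


def collect_inputs(input_type: str, path: str) -> List[Path]:
    """Resolve an input type ('image' or 'dir') and a path to image files."""
    p = Path(path)
    if input_type == "image":
        if not p.is_file():
            raise FileNotFoundError(p)
        return [p]
    if input_type == "dir":
        if not p.is_dir():
            raise NotADirectoryError(p)
        # Keep only files with an image extension (case-insensitive), sorted.
        return sorted(f for f in p.iterdir()
                      if f.is_file() and f.suffix.lower() in IMAGE_EXTS)
    raise ValueError("unknown input type: {!r}".format(input_type))
```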

1-2. Run:

python main.py [--arguments]

Below is the argument list.

[--type] : image / dir, default: dir

[--test_img_path] : path to your input image or directory, default: ./data_input

[--objface_results_dir] : where to save the .obj face files, default: ./data_output
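A sketch of how the flags above could be declared with Python's argparse. The flag names and defaults follow the list; restricting --type with `choices` and the help strings are our assumptions, and main.py's actual parser may differ. `build_parser` is a hypothetical name.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    # Flag names and defaults mirror the argument list; help text is illustrative.
    parser = argparse.ArgumentParser(description="2D face to 3D face")
    parser.add_argument("--type", choices=["image", "dir"], default="dir",
                        help="treat --test_img_path as a single image or a directory")
    parser.add_argument("--test_img_path", default="./data_input",
                        help="input image or directory")
    parser.add_argument("--objface_results_dir", default="./data_output",
                        help="where to save the .obj face files")
    return parser
```

For example, `build_parser().parse_args(["--type", "image", "--test_img_path", "./me.jpg"])` corresponds to `python main.py --type image --test_img_path ./me.jpg`.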

- Use PyQt

2-1. Run:

python qt.py

- 21.06.04. Extended input types (you can now use an image directory as well as a single image).

- 21.06.07. Extended the GUI program (PyQt); input type: image.

- 21.06.10. Extended the GUI program; input types: image, video.

- Currently, there is a lot of overlapping code in the face recognition process. This part should be refactored to make the program lighter.

- Video has the advantage of providing multiple frames of the same person. We could explore ways to use this to make 3D reconstruction more precise.

- With Deep3D, 3D reconstruction did not work well for Asian faces; this needs further research.
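One simple way to explore the video idea (not implemented in this repository): reconstruct each frame separately and average the per-frame identity (shape) coefficients, optionally weighting frames by a quality score. Deng et al. (2019), listed below, aggregate identity over an image set using learned confidences; the sketch is only a plain weighted average, and `aggregate_identity` and the frame weights are hypothetical.

```python
from typing import List, Optional, Sequence


def aggregate_identity(frame_coeffs: Sequence[Sequence[float]],
                       weights: Optional[Sequence[float]] = None) -> List[float]:
    """Weighted mean of per-frame identity coefficient vectors."""
    if not frame_coeffs:
        raise ValueError("need at least one frame")
    n = len(frame_coeffs[0])
    if any(len(c) != n for c in frame_coeffs):
        raise ValueError("all frames must have the same number of coefficients")
    if weights is None:
        weights = [1.0] * len(frame_coeffs)  # unweighted mean by default
    if len(weights) != len(frame_coeffs):
        raise ValueError("one weight per frame is required")
    total = float(sum(weights))
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return [sum(w * c[i] for w, c in zip(weights, frame_coeffs)) / total
            for i in range(n)]
```

Expression and pose would still vary per frame, so only the identity part is a natural candidate for averaging.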

@inproceedings{deng2019accurate,

title={Accurate 3D Face Reconstruction with Weakly-Supervised Learning: From Single Image to Image Set},

author={Yu Deng and Jiaolong Yang and Sicheng Xu and Dong Chen and Yunde Jia and Xin Tong},

booktitle={IEEE Computer Vision and Pattern Recognition Workshops},

year={2019}

}

@article{ChenSPARNet,

author={Chen, Chaofeng and Gong, Dihong and Wang, Hao and Li, Zhifeng and Wong, Kwan-Yee K.},

title={Learning Spatial Attention for Face Super-Resolution},

journal={IEEE Transactions on Image Processing (TIP)},

year={2020}

}