The goal of this project is to build a machine learning model that predicts a patient's smoking status from various bio-signals. This is part of a Kaggle Playground Series competition. Our model will output the probability that a given patient smokes. We will evaluate its performance using the area under the ROC curve (AUC), estimated with stratified k-fold cross-validation.
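The evaluation described above can be sketched without any libraries. This is a minimal, stdlib-only illustration, not the project's actual code. `stratified_kfold` shuffles each class's row indices and deals them round-robin across folds, so every fold keeps roughly the overall smoker/non-smoker ratio. `roc_auc` computes AUC from ranks (the Mann-Whitney formulation), giving tied scores their average rank. The `fit` callback passed to `cross_val_auc` is a hypothetical stand-in for whatever model is trained. In practice, scikit-learn's `StratifiedKFold` and `roc_auc_score` cover the same ground.

```python
import random
from typing import Callable, List, Sequence, Tuple

Features = List[float]
Model = Callable[[Features], float]  # maps one row of features to a score


def stratified_kfold(
    y: Sequence[int], n_splits: int = 5, seed: int = 42
) -> List[Tuple[List[int], List[int]]]:
    """Return (train_indices, valid_indices) pairs that preserve the class ratio of y."""
    rng = random.Random(seed)
    folds: List[List[int]] = [[] for _ in range(n_splits)]
    offset = 0
    for label in sorted(set(y)):
        idx = [i for i, v in enumerate(y) if v == label]
        rng.shuffle(idx)
        # Deal this class's rows round-robin; continuing the counter across
        # classes keeps fold sizes balanced as well as class ratios.
        for k, i in enumerate(idx, start=offset):
            folds[k % n_splits].append(i)
        offset += len(idx)
    splits = []
    for k in range(n_splits):
        valid = sorted(folds[k])
        held_out = set(valid)
        train = [i for i in range(len(y)) if i not in held_out]
        splits.append((train, valid))
    return splits


def roc_auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """AUC via the rank-sum (Mann-Whitney) formula, with average ranks for ties."""
    n_pos = sum(1 for t in y_true if t == 1)
    n_neg = len(y_true) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("AUC needs both classes in y_true")
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    start = 0
    while start < len(order):
        end = start
        # Extend over a run of tied scores.
        while end + 1 < len(order) and scores[order[end + 1]] == scores[order[start]]:
            end += 1
        avg_rank = (start + end) / 2 + 1  # 1-based average rank of the tie group
        for pos in range(start, end + 1):
            ranks[order[pos]] = avg_rank
        start = end + 1
    pos_rank_sum = sum(r for r, t in zip(ranks, y_true) if t == 1)
    # Mann-Whitney U for the positives, normalised by the number of pos/neg pairs.
    return (pos_rank_sum - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)


def cross_val_auc(
    X: Sequence[Features],
    y: Sequence[int],
    fit: Callable[[List[Features], List[int]], Model],
    n_splits: int = 5,
    seed: int = 42,
) -> float:
    """Mean validation AUC over stratified folds; `fit` trains and returns a scorer."""
    fold_scores = []
    for train_idx, valid_idx in stratified_kfold(y, n_splits, seed):
        model = fit([X[i] for i in train_idx], [y[i] for i in train_idx])
        preds = [model(X[i]) for i in valid_idx]
        fold_scores.append(roc_auc([y[i] for i in valid_idx], preds))
    return sum(fold_scores) / len(fold_scores)
```

For example, `roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])` returns 0.75: three of the four positive/negative pairs are ranked in the right order.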

Smoking's adverse effects on health are well established: as of 2018, it was a leading cause of preventable morbidity and mortality worldwide. A World Health Organization report forecasts that smoking-related deaths will reach 10 million by 2030. Although evidence-based smoking cessation strategies have been advocated, their success remains limited, and traditional counseling is often considered ineffective and time-consuming. Various factors have been proposed to predict an individual's likelihood of quitting, but applying them has yielded inconsistent results. In recent years, predictive models built with machine learning have emerged as a promising way to estimate an individual's chances of quitting smoking and to improve public health outcomes.

We will combine two datasets for this project.

- Kaggle Competition Data: This data was provided by Kaggle for the competition and was synthetically generated by a deep learning model trained on the second dataset. It contains a train set and a test set; the target column `smoking` is missing from the test set.
- Body signal of smoking: This is the data used to train the deep learning model that generated the first dataset. I will use it to increase the size of my training set, which will hopefully improve my model's performance.
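Combining the two sources amounts to stacking their rows on the columns they share. The sketch below assumes each dataset has already been loaded as a list of dicts with a consistent schema (the first row's keys define the columns). Dropping an `id` column by default and adding an `is_original` flag are illustrative choices, not taken from the project. Keeping a source flag makes it possible to weight, filter, or separately validate the real rows later. With pandas, the stacking step itself is roughly `pd.concat([train, original], join="inner", ignore_index=True)`.

```python
from typing import Dict, List, Sequence

Row = Dict[str, float]


def combine_datasets(
    competition: Sequence[Row],
    original: Sequence[Row],
    drop_cols: Sequence[str] = ("id",),
) -> List[Row]:
    """Stack two datasets on their shared columns and tag each row with its source."""
    if not competition or not original:
        raise ValueError("both datasets must contain at least one row")
    # Assumes a consistent schema: the first row of each dataset defines its columns.
    shared = (set(competition[0]) & set(original[0])) - set(drop_cols)
    columns = sorted(shared)
    combined: List[Row] = []
    for is_original, rows in ((0, competition), (1, original)):
        for row in rows:
            out = {c: row[c] for c in columns}
            out["is_original"] = is_original  # 1 = real data, 0 = synthetic
            combined.append(out)
    return combined
```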

| Name | Description |
|---|---|
| Age | Age of patient, grouped in 5-year increments |
| Height | Height of patient, grouped in 5-cm increments |
| Weight | Weight of patient, grouped in 5-kg increments |
| Waist | Waist circumference in cm |
| Eyesight (left) | Visual acuity in the left eye from 0.1 to 2.0 (higher is better), where 1.0 is equivalent to 20/20; blindness is coded as 9.9 |
| Eyesight (right) | Visual acuity in the right eye from 0.1 to 2.0 (higher is better), where 1.0 is equivalent to 20/20; blindness is coded as 9.9 |
| Hearing (left) | Hearing in the left ear: 1 = normal, 2 = abnormal |
| Hearing (right) | Hearing in the right ear: 1 = normal, 2 = abnormal |
| Systolic | Systolic blood pressure: pressure in the arteries when the heart contracts |
| Relaxation | Diastolic blood pressure: pressure in the arteries when the heart relaxes |
| Fasting Blood Sugar | Blood sugar level (concentration per 100 mL of blood) measured before eating |
| Cholesterol | Total cholesterol: sum of ester-type and non-ester-type cholesterol |
| Triglyceride | Amount of simple and neutral lipids in the blood |
| HDL | High-density lipoprotein ("good" cholesterol); absorbs cholesterol in the blood and carries it back to the liver |
| LDL | Low-density lipoprotein ("bad" cholesterol); makes up most of the body's cholesterol. High levels raise the risk of heart disease and stroke |
| Hemoglobin | Protein in red blood cells that delivers oxygen to the tissues |
| Urine Protein | Amount of protein in the urine |
| Serum Creatinine | Creatinine level in the blood. Creatinine is a waste product that comes from the muscles; healthy kidneys filter it out of the blood into the urine |
| AST | Aspartate transaminase, an enzyme that helps the body break down amino acids. It is usually present in the blood at low levels; elevated AST may indicate liver damage, liver disease, or muscle damage |
| ALT | Alanine transaminase, an enzyme found in the liver that helps convert proteins into energy for liver cells. When the liver is damaged, ALT is released into the bloodstream and levels rise |
| GTP | Gamma-glutamyltransferase (GGT), an enzyme in the blood. Higher-than-usual levels may indicate liver or bile duct damage |
| Dental Caries | Cavities: 0 = absent, 1 = present |
| Smoking | Target: 0 = non-smoker, 1 = smoker |
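Two columns in the table use codes rather than plain measurements: eyesight records blindness as 9.9, far outside the 0.1 to 2.0 acuity range, and hearing uses 1/2 instead of 0/1. The sketch below recodes them for one patient record. It assumes column names of the form `eyesight(left)` and `hearing(left)` (an assumption about the CSV headers), and mapping blindness to 0.0 alongside a separate `blind(...)` flag is a hypothetical choice. Recoding matters most for linear models; tree-based models can often isolate the 9.9 code with a single split.

```python
from typing import Dict

BLIND_CODE = 9.9  # from the feature table: blindness is recorded as 9.9


def encode_special_codes(row: Dict[str, float]) -> Dict[str, float]:
    """Return a copy of one patient record with the coded columns made model-friendly."""
    out = dict(row)
    for side in ("left", "right"):
        eye = f"eyesight({side})"  # assumed column name
        ear = f"hearing({side})"  # assumed column name
        is_blind = row[eye] == BLIND_CODE
        # Keep the blindness information as an explicit 0/1 flag ...
        out[f"blind({side})"] = 1.0 if is_blind else 0.0
        # ... and move the code below the 0.1-2.0 acuity range so it no longer
        # looks like vision far better than 2.0 (a hypothetical choice).
        if is_blind:
            out[eye] = 0.0
        # Hearing: 1 = normal, 2 = abnormal  ->  0 = normal, 1 = abnormal.
        out[ear] = row[ear] - 1
    return out
```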

Follow these steps to set up your environment and run the notebook and code locally.

- Ensure miniconda/anaconda is installed.
- Create the conda environment:

  ```bash
  conda env create -f environment.yaml
  ```

- Activate the environment:

  ```bash
  conda activate smoker-prediction
  ```

- Install dependencies with poetry:

  ```bash
  poetry install
  ```

- Ensure you have Docker installed.
- Build the image:

  ```bash
  docker build -t smoker-prediction .
  ```

- Run the image:

  ```bash
  docker run -it --rm -p 9696:9696 smoker-prediction
  ```

- Test the service locally:

  ```bash
  python predict-test.py --local
  ```

The model was deployed to AWS Elastic Beanstalk.

- Deployment URL: `smoking-serving-env.eba-rfk3vyqz.us-west-1.elasticbeanstalk.com`

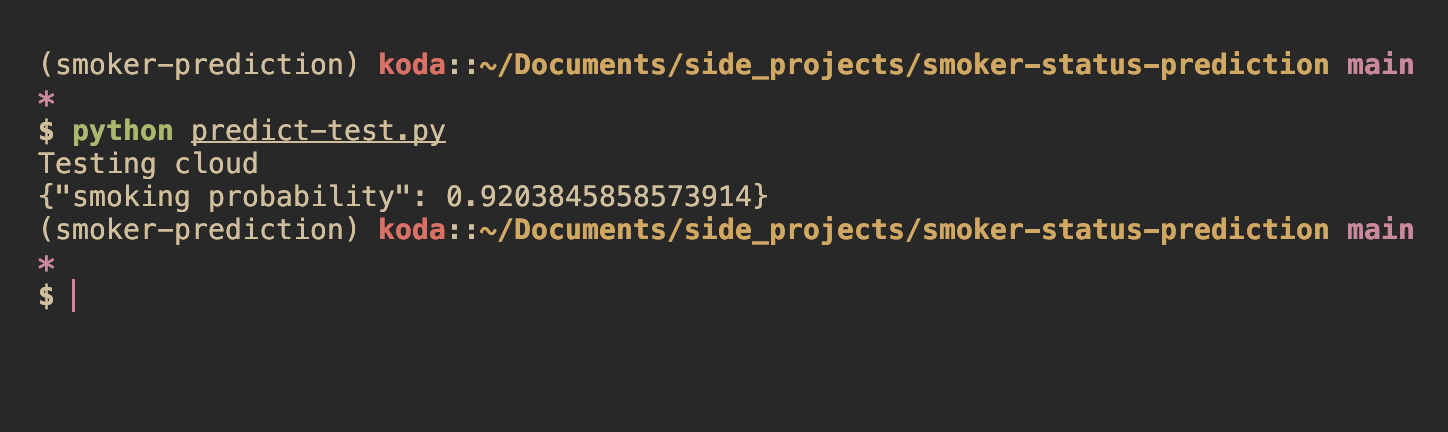
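`predict-test.py` is run with `--local` against the Docker container and without it against the Elastic Beanstalk deployment. The script itself is not shown in this README, so the following is a hypothetical sketch of a client with that behavior: the `/predict` path and the JSON payload format are assumptions, and requests to the cloud URL will fail now that the service is no longer running.

```python
import argparse
import json
import urllib.request
from typing import Any, Dict, List, Optional

# Hypothetical endpoints: the host and port come from this README, the
# "/predict" path is an assumption.
LOCAL_URL = "http://localhost:9696/predict"
CLOUD_URL = "http://smoking-serving-env.eba-rfk3vyqz.us-west-1.elasticbeanstalk.com/predict"


def choose_url(argv: Optional[List[str]] = None) -> str:
    """Pick the local Docker endpoint with --local, otherwise the cloud endpoint."""
    parser = argparse.ArgumentParser(description="Send a test patient to the smoking service.")
    parser.add_argument("--local", action="store_true", help="target the local Docker container")
    args = parser.parse_args(argv)
    return LOCAL_URL if args.local else CLOUD_URL


def predict(patient: Dict[str, float], url: str, timeout: float = 10.0) -> Dict[str, Any]:
    """POST one patient record as JSON and return the decoded JSON response."""
    body = json.dumps(patient).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

A call would look like `predict(patient, choose_url())`, where `patient` holds one patient's features in whatever schema the service expects. Keeping argument parsing separate from the HTTP call makes the URL choice testable without a running server.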

- To test the service running in the cloud, run:

  > **Caution:** The service is no longer running.

  ```bash
  python predict-test.py
  ```

If you want to deploy the model with Elastic Beanstalk yourself, you can follow the steps below.

- Create an AWS IAM user to use for this project.
- Set up access keys.
- Initialize the Elastic Beanstalk environment:

  ```bash
  eb init -p "Docker running on 64bit Amazon Linux 2023" smoking-serving -r <your-region>
  ```

- Test locally:

  ```bash
  eb local run --port 9696
  ```

- Deploy to the cloud:

  ```bash
  eb create smoking-serving-env -i t3.small --timeout 10
  ```

The original dataset cites its source as the Korean Government. It appears that additional data can be downloaded here. Since the competition dataset was synthetically generated, will using additional real-world data sources improve performance on the competition test set?
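One way to probe this question is to compare cross-validated AUC with and without the extra rows while validating only on competition rows, since the competition test set is synthetic. This is a sketch under stated assumptions, not the project's method. The hypothetical `folds_with_extra_data` helper assumes the original rows are appended after the competition rows in one combined index range. It adds all of them to every training split, so they never enter a validation fold.

```python
import random
from typing import List, Sequence, Tuple


def folds_with_extra_data(
    y_comp: Sequence[int], n_extra: int, n_splits: int = 5, seed: int = 0
) -> List[Tuple[List[int], List[int]]]:
    """Stratified folds over competition rows only; extra rows join every training split.

    Indices 0 .. len(y_comp)-1 are competition rows; indices
    len(y_comp) .. len(y_comp)+n_extra-1 are the appended original rows.
    """
    rng = random.Random(seed)
    folds: List[List[int]] = [[] for _ in range(n_splits)]
    offset = 0
    for label in sorted(set(y_comp)):
        idx = [i for i, v in enumerate(y_comp) if v == label]
        rng.shuffle(idx)
        # Round-robin per class keeps the smoker ratio similar in every fold.
        for k, i in enumerate(idx, start=offset):
            folds[k % n_splits].append(i)
        offset += len(idx)
    extra = list(range(len(y_comp), len(y_comp) + n_extra))
    splits = []
    for k in range(n_splits):
        valid = sorted(folds[k])
        held_out = set(valid)
        # Train on the other competition folds plus all of the original rows.
        train = [i for i in range(len(y_comp)) if i not in held_out] + extra
        splits.append((train, valid))
    return splits
```

Running the same model once with `n_extra=0` and once with the original rows included, and comparing the mean validation AUC, gives a direct if noisy answer for this particular dataset.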