Sharing my first Kaggle submission for digit recognition on the MNIST dataset, using what I have learned in the fast.ai machine learning course. This code is based on the fourth lesson of the course. I also explain a lot of my code in the Jupyter notebook itself.

The MNIST dataset is a large collection of handwritten digits. Here is an example picture from the MNIST dataset, with the corresponding digit as the title.
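Each MNIST image is a 28×28 grayscale picture. In the Kaggle Digit Recognizer data, as far as I know, each row of `train.csv` holds a `label` followed by 784 pixel values from 0 to 255. That is why the first layer of the network below takes `28*28` inputs. The following is a minimal NumPy sketch that uses a random fake row instead of the real file. The scaling to [0, 1] is just one simple choice, not necessarily the normalization used in the course.

```python
import numpy as np

# Fake CSV row standing in for one line of the (assumed) Kaggle train.csv layout:
# the first value is the label, followed by 784 pixel intensities in 0..255.
rng = np.random.default_rng(0)
row = np.concatenate([[7], rng.integers(0, 256, size=28 * 28)])

label = int(row[0])
pixels = row[1:].astype(np.float32) / 255.0  # scale intensities to [0, 1]

image = pixels.reshape(28, 28)  # 2-D view, e.g. for plotting with the digit as title
flat = image.reshape(-1)        # 784-long vector, the input nn.Linear(28*28, 200) expects

print(label, image.shape, flat.shape)  # 7 (28, 28) (784,)
```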

from fastai.metrics import *

from fastai.model import *

from fastai.dataset import *

import torch.nn as nnnet = nn.Sequential(

nn.Linear(28*28, 200),

nn.ReLU(),

nn.Linear(200, 200),

nn.ReLU(),

nn.Linear(200, 200),

nn.ReLU(),

nn.Linear(200, 10),

nn.LogSoftmax()

)loss=nn.NLLLoss()

metrics=[accuracy]

opt=optim.SGD(net.parameters(), 1e-2, momentum=0.9, weight_decay=1e-3)

fit(net, md, n_epochs=5, crit=loss, opt=opt, metrics=metrics)epoch trn_loss val_loss accuracy

0 0.206313 0.229348 0.925857

1 0.140446 0.122023 0.963

2 0.096415 0.110355 0.966429

3 0.077491 0.094182 0.972143

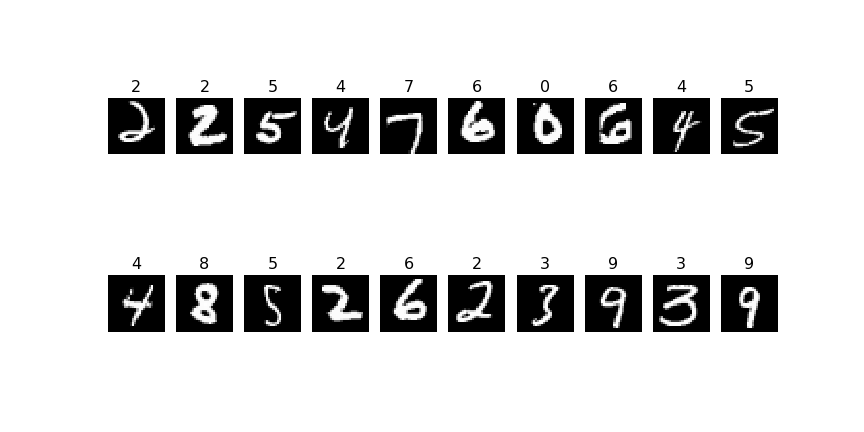

4 0.066027 0.092965 0.971 Some sample predictions from the trained neural net
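The pair LogSoftmax + NLLLoss together amounts to the usual cross-entropy loss. The sketch below is a NumPy-only illustration, not fastai or PyTorch code. It shows the forward pass of the same 784-200-200-200-10 architecture with random, untrained weights and computes the NLL loss on a fake batch. It also counts the weights and biases, which total 239,410. One difference from PyTorch: weights are stored here as (in, out) matrices, while `nn.Linear` stores them as (out, in) and applies the transpose.

```python
import numpy as np

rng = np.random.default_rng(0)

# Layer sizes mirroring the nn.Sequential above: 784 -> 200 -> 200 -> 200 -> 10
sizes = [28 * 28, 200, 200, 200, 10]
# Random (in, out) weights and zero biases, purely for illustration; real ones come from training.
params = [(rng.normal(0, 0.05, (n_in, n_out)), np.zeros(n_out))
          for n_in, n_out in zip(sizes[:-1], sizes[1:])]

def log_softmax(z):
    # Subtract the row maximum for numerical stability before exponentiating.
    z = z - z.max(axis=1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=1, keepdims=True))

def forward(x):
    # Linear + ReLU for the hidden layers, Linear + LogSoftmax for the output layer.
    for i, (W, b) in enumerate(params):
        x = x @ W + b
        if i < len(params) - 1:
            x = np.maximum(x, 0)
    return log_softmax(x)

def nll_loss(log_probs, targets):
    # Mean negative log-probability assigned to the correct class.
    return -log_probs[np.arange(len(targets)), targets].mean()

x = rng.random((4, 28 * 28))  # fake batch of 4 flattened images
y = np.array([3, 1, 4, 1])    # fake labels
log_probs = forward(x)

print(log_probs.shape)                          # (4, 10)
print(sum(W.size + b.size for W, b in params))  # 239410
print(nll_loss(log_probs, y))
```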

```python
import numpy as np
import pandas as pd

# Confusion matrix: rows = predicted digit, columns = correct digit.
# preds: predicted digit per validation image, y_valid: correct labels (both from the notebook)
cm = pd.DataFrame(np.zeros((10, 10)))
for i in range(len(preds)):
    cm.at[preds[i], y_valid[i]] += 1
```

|   | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 708.0 | 0.0 | 4.0 | 2.0 | 1.0 | 3.0 | 3.0 | 1.0 | 0.0 | 4.0 |
| 1 | 0.0 | 770.0 | 0.0 | 2.0 | 2.0 | 0.0 | 2.0 | 1.0 | 2.0 | 0.0 |
| 2 | 0.0 | 1.0 | 650.0 | 7.0 | 0.0 | 1.0 | 0.0 | 5.0 | 1.0 | 1.0 |
| 3 | 0.0 | 0.0 | 5.0 | 694.0 | 0.0 | 2.0 | 0.0 | 0.0 | 2.0 | 0.0 |
| 4 | 0.0 | 0.0 | 0.0 | 0.0 | 673.0 | 0.0 | 2.0 | 0.0 | 0.0 | 8.0 |
| 5 | 0.0 | 0.0 | 1.0 | 8.0 | 0.0 | 575.0 | 1.0 | 1.0 | 3.0 | 3.0 |
| 6 | 6.0 | 2.0 | 1.0 | 0.0 | 4.0 | 5.0 | 703.0 | 0.0 | 1.0 | 0.0 |
| 7 | 0.0 | 2.0 | 2.0 | 3.0 | 0.0 | 0.0 | 0.0 | 703.0 | 0.0 | 4.0 |
| 8 | 1.0 | 5.0 | 7.0 | 8.0 | 6.0 | 6.0 | 0.0 | 3.0 | 678.0 | 4.0 |
| 9 | 1.0 | 1.0 | 1.0 | 5.0 | 19.0 | 8.0 | 0.0 | 5.0 | 0.0 | 657.0 |
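The loop above adds one count per validation image. The sketch below is an alternative, not the code from my notebook: NumPy's `np.add.at` performs the same accumulation without a Python loop. As in the table, rows are predictions and columns are correct labels. The small `preds`/`y_valid` arrays are made up for illustration. The result is a plain NumPy array, which can be wrapped in `pd.DataFrame` if wanted.

```python
import numpy as np

def confusion_matrix(preds, targets, n_classes=10):
    # Rows = predicted digit, columns = correct digit (same layout as the table above).
    cm = np.zeros((n_classes, n_classes), dtype=int)
    # np.add.at is unbuffered, so repeated (pred, target) pairs are all counted.
    np.add.at(cm, (preds, targets), 1)
    return cm

preds = np.array([4, 9, 9, 1, 4])    # made-up predictions
y_valid = np.array([4, 4, 9, 1, 9])  # made-up correct labels
cm = confusion_matrix(preds, y_valid)
print(cm[9, 4], cm[4, 9], cm.trace())  # 1 1 3
```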

In the table above, rows stand for predictions and columns for correct answers. We can see, for example, that it is hard to differentiate between the digits 4 and 9. When the neural net predicted a 9, it was actually a 4 nineteen times. Vice versa, when it predicted a 4, it was actually a 9 eight times. That makes sense, because these two digits look rather similar.
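To find the most frequent mix-ups programmatically instead of by eye, you can zero out the diagonal and sort the remaining cells. This sketch hard-codes the counts from the table above as integers and assumes the same layout (rows are predictions, columns are correct digits). Several cells tie at 8, so the order after the first line is not meaningful.

```python
import numpy as np

# Counts copied from the confusion matrix table: rows = predicted, columns = correct.
cm = np.array([
    [708,   0,   4,   2,   1,   3,   3,   1,   0,   4],
    [  0, 770,   0,   2,   2,   0,   2,   1,   2,   0],
    [  0,   1, 650,   7,   0,   1,   0,   5,   1,   1],
    [  0,   0,   5, 694,   0,   2,   0,   0,   2,   0],
    [  0,   0,   0,   0, 673,   0,   2,   0,   0,   8],
    [  0,   0,   1,   8,   0, 575,   1,   1,   3,   3],
    [  6,   2,   1,   0,   4,   5, 703,   0,   1,   0],
    [  0,   2,   2,   3,   0,   0,   0, 703,   0,   4],
    [  1,   5,   7,   8,   6,   6,   0,   3, 678,   4],
    [  1,   1,   1,   5,  19,   8,   0,   5,   0, 657],
])

errors = cm.copy()
np.fill_diagonal(errors, 0)  # keep only the mistakes

# Flat indices of the five largest off-diagonal cells, biggest first.
flat_idx = np.argsort(errors, axis=None)[::-1][:5]
for pred, true in zip(*np.unravel_index(flat_idx, errors.shape)):
    print(f"predicted {pred}, actually {true}: {errors[pred, true]} times")
```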

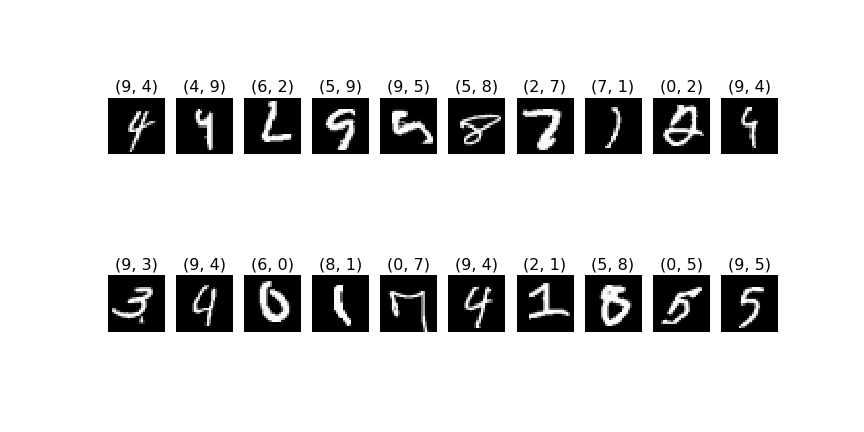

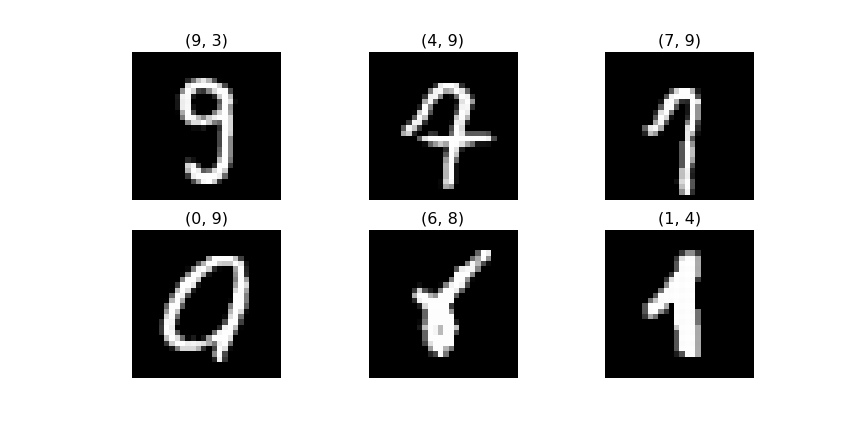

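```python
# Show the first 20 misclassified validation images, titled (predicted, correct).
# x_imgs: validation images as 28x28 arrays; false_pics: indices of wrong predictions (from the notebook)
plots(x_imgs[false_pics[:20]],
      titles=[(i, j) for i, j in zip(preds[false_pics[:20]], y_valid[false_pics[:20]])])
```

The first number in each title is the predicted digit, and the second is the correct one: (predicted, correct).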
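The snippet above assumes that `false_pics` already exists. One plausible definition, assuming `preds` and `y_valid` are NumPy arrays of predicted and correct digits, is the set of indices where the two differ. This sketch uses small made-up arrays and builds the same (predicted, correct) titles.

```python
import numpy as np

preds = np.array([4, 9, 9, 1, 4, 7])    # made-up predictions
y_valid = np.array([4, 4, 9, 1, 9, 7])  # made-up correct labels

# Indices of validation images the net got wrong (assumed definition of false_pics).
false_pics = np.where(preds != y_valid)[0]
# (predicted, correct) pairs, the same titles the plots() call uses.
titles = [(int(p), int(t)) for p, t in zip(preds[false_pics[:20]], y_valid[false_pics[:20]])]
print(false_pics, titles)  # [1 4] [(9, 4), (4, 9)]
```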

So there are quite a few pictures that the neural net clearly got wrong. But when I looked through them to check whether each one is labeled correctly and recognizable at all, I found some exceptions. Although a neural net can perform a lot better than my current one, I doubt it is possible to reach 100% accuracy.

The public accuracy score of this neural net on Kaggle is 0.97628.
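The score comes from uploading a CSV file of predictions. The sketch below assumes the Digit Recognizer's sample submission format: a header `ImageId,Label`, with `ImageId` counting from 1. It uses made-up predictions. `write_submission` is a hypothetical helper name, not a fastai or Kaggle function.

```python
import csv

def write_submission(preds, path):
    # One row per test image: 1-based ImageId and the predicted digit (assumed Kaggle format).
    with open(path, "w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(["ImageId", "Label"])
        for image_id, label in enumerate(preds, start=1):
            writer.writerow([image_id, int(label)])

write_submission([2, 0, 9], "submission.csv")
with open("submission.csv") as f:
    print(f.read())
```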

For a more detailed explanation, have a look at my Jupyter notebook.