Code and Data Repo for the CoNLL 2023 Paper -- Future Lens: Anticipating Subsequent Tokens from a Single Hidden State

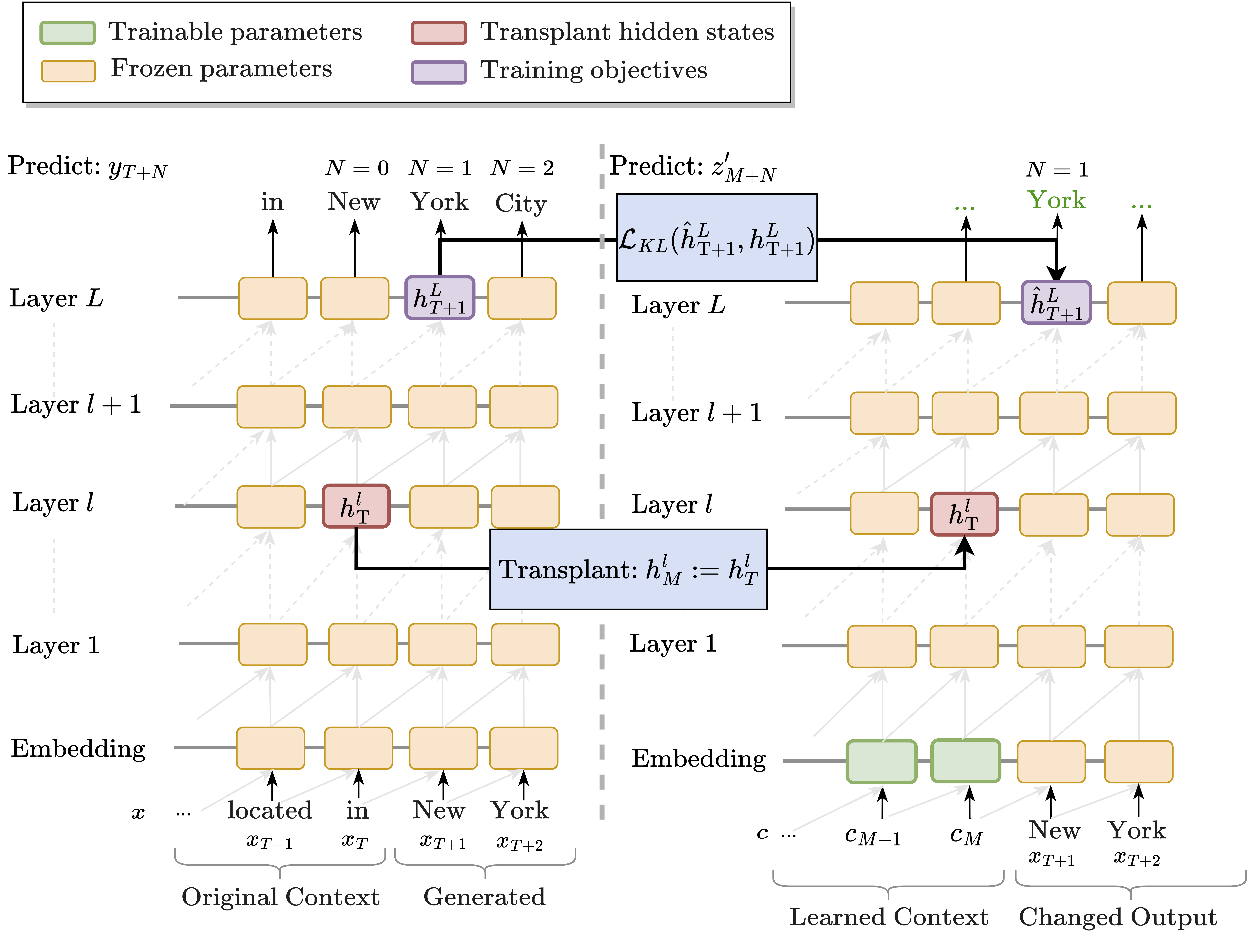

In this repo, we include two distinct ways to reveal the extent to which individual hidden states may directly encode subsequent tokens: 1) Linear Model Approximation and 2) Causal Intervention Methods. Building on the best-performing method, we propose Future Lens as a tool to extract information about future tokens (beyond the immediately subsequent one) from a single hidden token representation.

Run the following command to install the required packages in your virtual environment:

pip install -r scripts/colab-reqs/future-env.txt

To run the linear model approximation, you may run the following command:

python linear_methods/linear_hs.py
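For intuition only, here is a minimal sketch of what a linear approximation between hidden states can look like. It is not the code in `linear_methods/linear_hs.py`: it assumes NumPy is available, uses synthetic vectors in place of real GPT-J hidden states, and the helper names `fit_linear_map` and `apply_linear_map` are hypothetical. It fits a least-squares linear map from a "current" hidden state to a "future" hidden state and reports the held-out error.

```python
import numpy as np


def fit_linear_map(src, tgt):
    """Least-squares fit of tgt ~ [src, 1] @ W, with the bias folded into W."""
    src_aug = np.hstack([src, np.ones((src.shape[0], 1))])
    W, *_ = np.linalg.lstsq(src_aug, tgt, rcond=None)
    return W


def apply_linear_map(W, src):
    """Apply the fitted map (including bias) to new source vectors."""
    src_aug = np.hstack([src, np.ones((src.shape[0], 1))])
    return src_aug @ W


# Synthetic stand-ins for hidden states (assumption: real runs would use
# hidden states collected from the language model instead).
rng = np.random.default_rng(0)
d, n = 8, 200                      # toy hidden size and number of examples
true_A = rng.normal(size=(d, d))   # unknown relation we try to recover
h_t = rng.normal(size=(n, d))      # "current" hidden states
h_future = h_t @ true_A + 0.01 * rng.normal(size=(n, d))  # "future" states

# Fit on the first 150 examples, evaluate on the remaining 50.
W = fit_linear_map(h_t[:150], h_future[:150])
pred = apply_linear_map(W, h_t[150:])
mse = float(np.mean((pred - h_future[150:]) ** 2))
print(f"held-out MSE: {mse:.6f}")
```

With real hidden states, the predicted vector would presumably be passed through the model's decoder head to read out a token; that step is omitted here because it depends on the model.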

To train a soft prompt for GPT-J, you may run the following command:

python causal_methods/train.py

And then test by running the following script:

python causal_methods/test.py

Note: To load the default training set, you can access the file with this link.

We provide an online demo notebook for the Future Lens. You may also run the code locally from:

demo/FutureLensDemonstration.ipynb

Our paper has been accepted to CoNLL 2023!

We release the code and preprint version of the paper!

If you have any questions about the code, please contact Koyena at pal.k@northeastern.edu.