🇬🇪 <GPT2GeoLMHead>

The GPT2GeoLMHead project is a natural language processing (NLP) venture aimed at training a Georgian language model head utilizing the powerful GPT-2 architecture. This initiative involves the utilization of state-of-the-art transformer-based language models to understand and generate coherent and contextually relevant Georgian text.

- The project leverages the Wikimedia/Wikipedia dataset for training the language model.

- The dataset is loaded using the Hugging Face

datasetslibrary, focusing on the Georgian language (ka) split from November 1, 2023.

- A custom dataset,

GeorgianDataset, is created to preprocess and tokenize the Georgian text data using the ElectraTokenizerFast. - The dataset supports dynamic block sizing and includes the necessary special tokens for proper model training.

- The GPT-2 model, pretrained on a diverse range of internet text, is utilized as the base architecture.

- A specific instance of the ElectraTokenizerFast is employed for tokenization in alignment with the model's requirements.

- Special tokens, including

<pad>,<eos>, and<mask>, are added to enhance model understanding and context.

- The training process is orchestrated by the

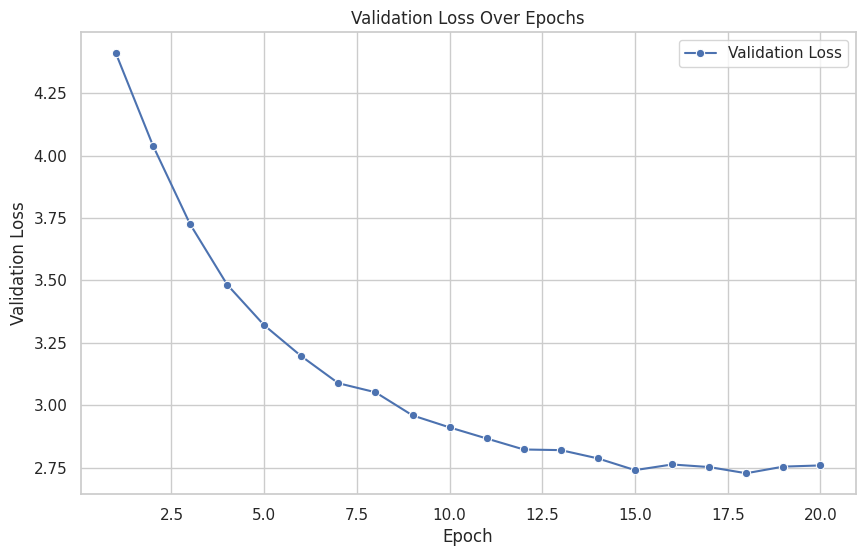

GPT2GeoLMHeadclass, which encapsulates the GPT-2 model, tokenizer, and training logic. - Training hyperparameters, such as learning rate, batch size, and the number of epochs, are configured in a dedicated

Configclass. - The training loop involves both training and validation phases, utilizing PyTorch's DataLoader for efficient data handling.

- CrossEntropyLoss is employed as the loss function, and the AdamW optimizer is used for gradient descent.

- The model includes an inference method, allowing users to generate Georgian text given a prompt.

- Various parameters, such as

num_beamsandtemperature, can be adjusted to influence the diversity and creativity of generated text.

- The project incorporates functionality to save the pretrained GPT-2 model and tokenizer for future use.

-

Initialization:

- Create an instance of

GPT2GeoLMHeadby providing the GPT-2 model and ElectraTokenizerFast. - Configure training parameters and dataset size in the

Configclass.

- Create an instance of

-

Training:

- Call the

trainmethod on theGPT2GeoLMHeadinstance, passing the training and validation datasets.

- Call the

-

Inference:

- Utilize the

inferencemethod to generate Georgian text based on a given prompt.

- Utilize the

-

Model Saving:

- Save the pretrained model and tokenizer using the

save_pretrainedmethod.

- Save the pretrained model and tokenizer using the

The GPT2GeoLMHead project showcases the application of cutting-edge language models for understanding and generating Georgian text. Its modular and object-oriented design facilitates easy extension and integration into various NLP applications. The project stands as a testament to the synergy of transformer architectures and the rich linguistic diversity encapsulated by the Georgian language.