A Snakemake-based modular workflow that facilitates RNA-Seq analyses, with a special focus on the exploration of differential splicing behaviours.

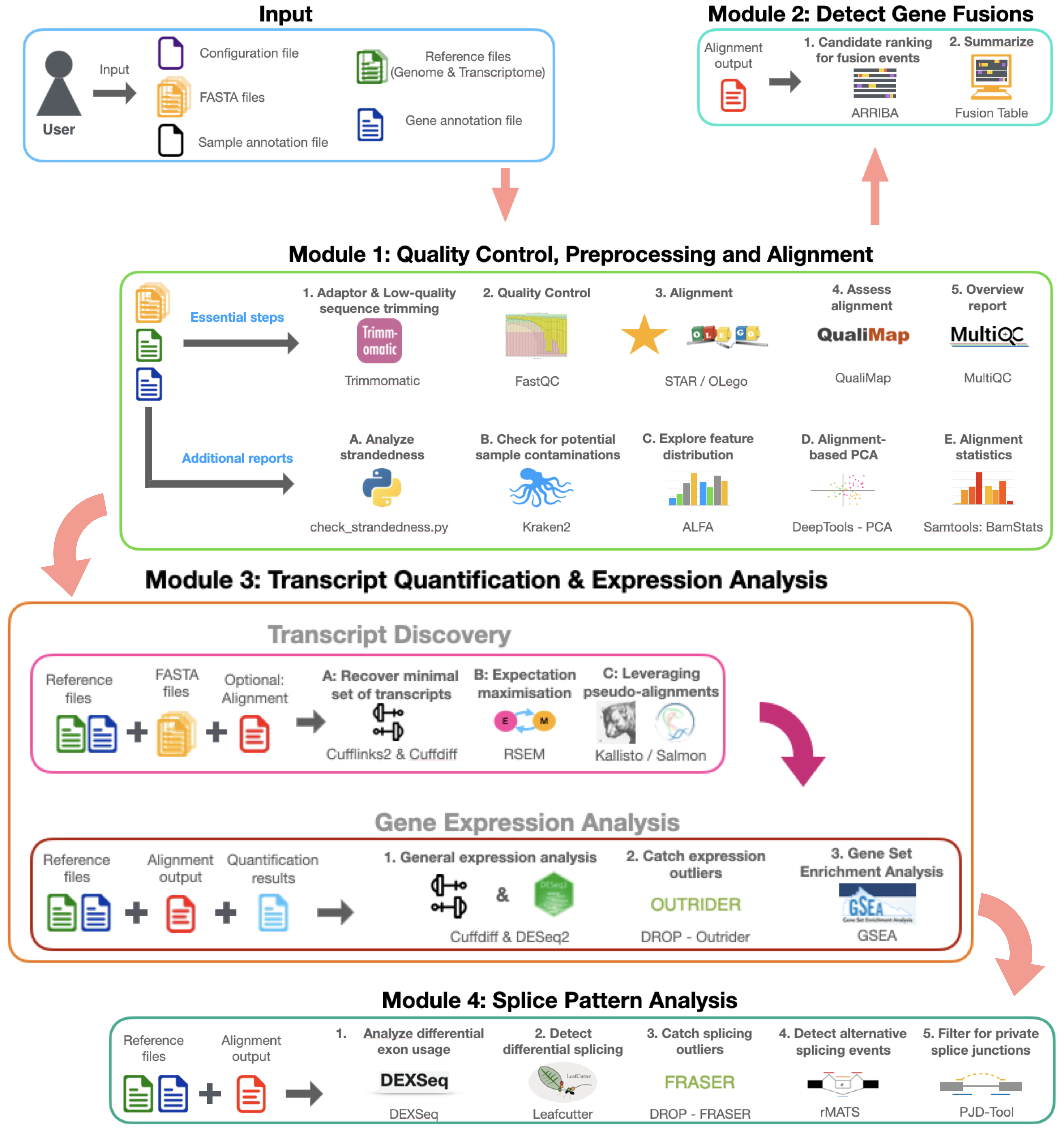

The given parent workflow is a wrapper workflow, which includes the following sub-workflows (called modules):

- Module1: Quality Control, Preprocessing and Alignment

- Module2: Gene Fusion Detection

- Module3: Transcript Quantification & Expression Analysis

- Module4: Splice Pattern Analysis

- Conda: Conda Webpage

- Snakemake: Snakemake Webpage

- Required for PEP:

  - peppy is required and can be installed via Conda: `conda install -c conda-forge peppy`

  - eido is required and can be installed via Conda: `conda install -c conda-forge eido`

The input data for this workflow is provided via a sample sheet (default location: input_data/input_samples.csv). The structure of the sample sheet is defined by the PEP file (pep/pep_schema_config.yaml).

The sample sheet is a tabular file, which consists of the following columns:

| Column | Description | Required |
|---|---|---|
| sample_name | Name/ID of the sample | YES |
| sample_directory | Path to the directory, where the sample data (FASTQ-files) are located. This information is only used if the FASTQ-files are needed. | YES (depending on tool selection) |
| read1 | Name of the FASTQ-file for read1 sequences | YES (depending on tool selection) |
| read2 | Name of the FASTQ-file for read2 sequences | YES (depending on tool selection) |
| control | true or false (if true, the sample is treated as control sample) | YES |
| condition | Name of the condition (e.g. treatment group) | YES |
| protocol | Name of the protocol (e.g. RNAseq-PolyA). This information is not yet used... | NO |
| stranded | No, if library is unstranded, yes if library is stranded, reverse if library is reverse stranded | YES |
| adaptors_file | Path to the file, which contains the adaptors for the sample | YES (depending on tool selection) |
| additional_comment | Additional comment for the sample | NO |

Note: Currently, the entries for the columns protocol and additional_comment are not used.

Note: The entries "read1", "read2" and "adaptors_file" are marked as mandatory, as they are needed for the execution of the alignment workflow. However, if the samples have already been aligned, these columns can either be filled with dummy data (make sure the referenced files exist!), or the PEP file (path: pep/pep_schema_config.yaml) can be edited to make these columns optional.
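The column rules from the table above can be checked before starting a run. The following is a minimal sketch using only the Python standard library; it is not the validation that PEP/eido performs against pep/pep_schema_config.yaml. It assumes a comma-separated sheet and lower-case values for `control` and `stranded`; the function name `check_sample_sheet` and the example rows are hypothetical.

```python
import csv
import io

# Columns marked as required in the sample sheet table.
REQUIRED_COLUMNS = [
    "sample_name", "sample_directory", "read1", "read2",
    "control", "condition", "stranded", "adaptors_file",
]
# Assumed spellings of the allowed values (lower-case is an assumption).
ALLOWED_CONTROL = {"true", "false"}
ALLOWED_STRANDED = {"no", "yes", "reverse"}


def check_sample_sheet(handle):
    """Return a list of human-readable problems found in a sample sheet."""
    reader = csv.DictReader(handle)
    header = reader.fieldnames or []
    problems = [f"missing column: {c}" for c in REQUIRED_COLUMNS if c not in header]
    if problems:
        return problems
    for row_number, row in enumerate(reader, start=2):  # row 1 is the header
        if not (row["sample_name"] or "").strip():
            problems.append(f"row {row_number}: empty sample_name")
        if (row["control"] or "").strip().lower() not in ALLOWED_CONTROL:
            problems.append(f"row {row_number}: control must be true or false")
        if (row["stranded"] or "").strip().lower() not in ALLOWED_STRANDED:
            problems.append(f"row {row_number}: stranded must be no, yes or reverse")
    return problems


# Hypothetical two-sample sheet, used only for illustration.
EXAMPLE_SHEET = """sample_name,sample_directory,read1,read2,control,condition,protocol,stranded,adaptors_file,additional_comment
s1,/data/s1,s1_R1.fastq.gz,s1_R2.fastq.gz,true,healthy,RNAseq-PolyA,no,adaptors.fa,
s2,/data/s2,s2_R1.fastq.gz,s2_R2.fastq.gz,maybe,treated,RNAseq-PolyA,forward,adaptors.fa,
"""

problems = check_sample_sheet(io.StringIO(EXAMPLE_SHEET))
for problem in problems:
    print(problem)
```

In this example the second sample is reported twice, once for its `control` entry and once for its `stranded` entry.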

SnakeSplice supports the execution of the workflow starting with FASTQ files.

In this case, the sample sheet has to be filled with the information about the FASTQ files (see above).

Note: The FASTQ files have to be located in the directory that is specified in the column sample_directory of the sample sheet.
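As a small illustration of this note, the sketch below joins `sample_directory` with the `read1` and `read2` file names of one sample sheet row. That the workflow resolves the paths by plain joining is an assumption; the helper name `fastq_paths` and the example paths are hypothetical.

```python
from pathlib import PurePosixPath


def fastq_paths(row):
    """Join sample_directory with the read1/read2 file names of one row."""
    directory = PurePosixPath(row["sample_directory"])
    return directory / row["read1"], directory / row["read2"]


# Hypothetical sample sheet row.
row = {
    "sample_directory": "/data/run1/sampleA",
    "read1": "sampleA_R1.fastq.gz",
    "read2": "sampleA_R2.fastq.gz",
}
read1_path, read2_path = fastq_paths(row)
print(read1_path)
print(read2_path)
```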

SnakeSplice also supports the execution of the workflow starting with BAM files.

In this case, the location and further information of the BAM-files have to be specified in the respective configuration files (path: config_files/config_moduleX.yaml).

Some tools require reference files, which need to be user-provided.

The locations of these reference files have to be specified in the respective configuration files (path: config_files/config_moduleX.yaml).

We recommend using the same reference files for all samples, as the reference files are not adjusted to the samples.

We recommend an analysis set reference genome. Its advantages over other common forms of reference genomes can be read here.

Such a reference genome can be downloaded from the UCSC Genome Browser.

- Download a suitable FASTA-file of reference genome (e.g. analysis set reference genome for hg19): Example link for hg19 reference genome

- Further annotation files can be downloaded from the UCSC Genome Browser. Example link for hg19 gene annotation file

- Some tools explicitly require ENSEMBL-based annotations: ENSEMBL Downloads

The respective workflow settings can be adjusted via the configuration files, which are placed in the directory config_files.

In this folder is a config_main.yaml-file, which holds the general settings for the workflow.

Additionally, every sub-workflow/module has its own config_module{X}_{module_name}.yaml-file, which lists the settings for the respective sub-workflow.

This configuration file holds the general settings for this master workflow. It consists of 2 parts:

- Module switches (`module_swiches`): Here, the user can switch on/off the sub-workflows/modules that should be executed. Note: Submodule 1 has to be run first and on its own, as its output is used as input for the other submodules. Subsequently, the other modules can be run in (almost) any order.

- Module output directory names (`module_output_dir_names`): Every submodule saves its output in a separate sub-directory of the main output directory `output`. The names of these sub-directories can be adjusted here.
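The rule that submodule 1 has to run on its own can be checked before launching the workflow. The sketch below works on a plain dictionary of switches; the switch names (`run_module1` and so on) and the function `check_module_switches` are hypothetical and do not necessarily match the keys used in config_main.yaml.

```python
def check_module_switches(switches, first_module):
    """Return an error message if the first module is combined with others, else None."""
    active = [name for name, enabled in switches.items() if enabled]
    if first_module in active and len(active) > 1:
        others = ", ".join(name for name in active if name != first_module)
        return f"{first_module} has to be run alone; switch off: {others}"
    return None


# Hypothetical switch names, used only for illustration.
switches = {
    "run_module1": True,
    "run_module2": False,
    "run_module3": True,
    "run_module4": False,
}
message = check_module_switches(switches, "run_module1")
print(message)
```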

Every submodule has its own configuration file, which holds the settings for the respective submodule.

The configuration files are located in the directory config_files and have the following naming scheme:

config_module{X}_{module_name}.yaml, where X is the number of the submodule and module_name is the name of the submodule.
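The naming scheme can be expressed as a simple format string. In this sketch the module name `qc_preproc_alignment` is a hypothetical placeholder; the actual file names in config_files may differ.

```python
def module_config_path(number, module_name, config_dir="config_files"):
    """Build the configuration file path for one submodule."""
    return f"{config_dir}/config_module{number}_{module_name}.yaml"


# "qc_preproc_alignment" is a hypothetical module name.
config_path = module_config_path(1, "qc_preproc_alignment")
print(config_path)
```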

The configuration files are structured in the following way:

- switch variables (`switch_variables`): Here, the user can switch on/off the different steps of the submodule.

- output directories (`output_directories`): Here, the user can adjust the names of the output directories per tool.

- bam files attributes (`bam_files_attributes`): Some tools require additional information about the BAM files, which are not provided in the sample sheet. This information can be specified here.

- tool-specific settings (`tool_specific_settings`): Here, the user can adjust the settings for the different tools, which are used in the submodule.

Since the execution of SnakeSplice is based on Snakemake, the user can configure the execution of SnakeSplice via the command line or via a profile configuration file.

The user can configure the execution of SnakeSplice via the command line.

Details regarding the configuration of Snakemake via the command line can be found here.

A profile configuration file can be used to summarize all desired settings for the snakemake execution.

SnakeSplice comes with two predefined profile configuration files, which can be found in the directory config_files/profiles.

- `profile_config_local.yaml`: A predefined profile configuration file for the execution on a local machine.

- `profile_config_cluster.yaml`: A predefined profile configuration file for the execution on a cluster (using SLURM).

This workflow offers a predefined profile configuration file for the execution on a cluster (using SLURM).

The respective setting options are listed and explained below.

Note: Go to the bottom of this file to find out how to execute Snakemake using this profile-settings file.

| Command line argument | Default entry | Description |
|---|---|---|
| `--use-conda` | True | Enables the use of conda environments (and Snakemake wrappers) |
| `--keep-going` | True | Go on with independent jobs, if one job fails |
| `--latency-wait` | 60 | Wait given seconds if an output file of a job is not present after the job finished. |
| `--rerun-incomplete` | True | Rerun all jobs where the output is incomplete |
| `--printshellcmds` | True | Printout shell commands that will be executed |
| `--jobs` | 50 | Number of jobs / rules to run (maximal) in parallel |
| `--default-resources` | [cpus=1, mem_mb=2048, time_min=60] | Default resources for each job (can be overwritten in the rule definition) |
| `--resources` | [cpus=100, mem_mb=500000] | Resource constraints for the whole workflow |
| `--cluster` | `"sbatch -t {resources.time_min} --mem={resources.mem_mb} -c {resources.cpus} -o logs_slurm/{rule}.%j.out -e logs_slurm/{rule}.%j.out --mail-type=FAIL --mail-user=user@mail.com"` | Cluster command for the execution on a cluster (here: SLURM) |

- Activate Conda-Snakemake environment: `conda activate snakemake`

- Execute workflow (you can adjust the number of cores as desired): `snakemake -s Snakefile --cores 4 --use-conda`

- Run workflow in background (`rm -f` does not fail if no nohup.out exists yet): `rm -f nohup.out && nohup snakemake -s Snakefile --cores 4 --use-conda &`

- Visualize DAG of jobs: `snakemake --dag | dot -Tsvg > dag.svg`

- Dry run (get an overview of the job executions; no real output is generated): `snakemake -n -r --cores 4`
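If the workflow is started from a script instead of by hand, the commands above can be assembled programmatically. The following sketch only builds the argument list from flags that appear in this README and prints it; it does not run Snakemake. The helper name `snakemake_command` is hypothetical.

```python
import shlex


def snakemake_command(cores, dry_run=False, snakefile="Snakefile"):
    """Assemble the Snakemake call as an argument list (nothing is executed)."""
    args = ["snakemake", "-s", snakefile, "--cores", str(cores), "--use-conda"]
    if dry_run:
        args.append("-n")  # dry run: list the jobs without producing output
    return args


run_command = shlex.join(snakemake_command(4))
dry_run_command = shlex.join(snakemake_command(4, dry_run=True))
print(run_command)
print(dry_run_command)
```

The resulting list could be passed to `subprocess.run` once Snakemake is available in the active environment.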

- Adjust settings in profile-settings file (e.g. here in `profiles/profile_cluster/config.yaml`).

- Execute workflow: `mkdir -p logs_slurm && rm nohup.out || true && nohup snakemake --profile profiles/profile_cluster &`
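The `--cluster` entry of the profile contains placeholders such as `{resources.mem_mb}` and `{rule}`, which are filled in for each job, with `--default-resources` supplying values that a rule does not set itself. The sketch below imitates this substitution with Python's `str.format` to show what a submitted command could look like. It is an approximation for illustration, not Snakemake's own implementation; the rule name `star_align` and its resource values are hypothetical.

```python
from types import SimpleNamespace

# Defaults as listed for --default-resources in the profile.
DEFAULT_RESOURCES = {"cpus": 1, "mem_mb": 2048, "time_min": 60}

# Template as listed for --cluster in the profile.
CLUSTER_TEMPLATE = (
    "sbatch -t {resources.time_min} --mem={resources.mem_mb} -c {resources.cpus} "
    "-o logs_slurm/{rule}.%j.out -e logs_slurm/{rule}.%j.out "
    "--mail-type=FAIL --mail-user=user@mail.com"
)


def render_submit_command(rule, rule_resources=None):
    """Fill the template; rule-level resources override the defaults."""
    merged = {**DEFAULT_RESOURCES, **(rule_resources or {})}
    return CLUSTER_TEMPLATE.format(rule=rule, resources=SimpleNamespace(**merged))


# Hypothetical rule that requests more CPUs and memory than the defaults.
command = render_submit_command("star_align", {"cpus": 8, "mem_mb": 32000})
print(command)
```

The `%j` in the log file names is left untouched here, since SLURM itself replaces it with the job ID.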

`sacct -a --format=JobID,User,Group,Start,End,State,AllocNodes,NodeList,ReqMem,MaxVMSize,AllocCPUS,ReqCPUS,CPUTime,Elapsed,MaxRSS,ExitCode -j <job-ID>`

Explanation:

- `-a`: Show jobs for all users

- `--format=JobID...`: Format output

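Stop a running workflow by sending a termination signal to all Snakemake processes: `killall -TERM snakemake`

Show per-node information (hostname, free memory, memory size, availability, CPU count and CPU usage): `sinfo -o "%n %e %m %a %c %C"`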

After the successful execution of SnakeSplice, a self-contained HTML-report can be generated.

The report can be generated by executing the following command:

`snakemake --report report.html`