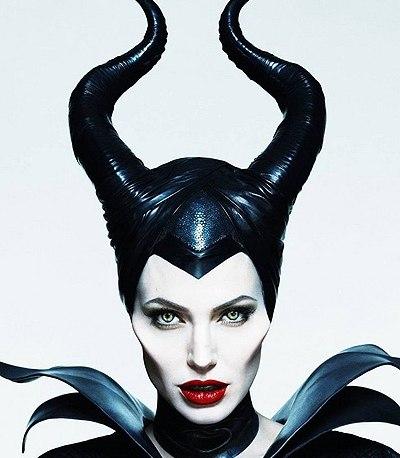

Send your selfie and get a Pepe version of yourself.

- We detect the face in the image with the `dlib` library, then align and reshape it.

- We apply a pix2pix model to generate the output image.
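The detection and cropping step could look roughly like the sketch below. It is not the bot's actual code: the helper names `square_crop_box` and `crop_face`, the 30% margin, and the 256-pixel output size are all assumptions, and the sketch skips landmark-based alignment entirely, cropping only a square around the first face that `dlib` finds.

```python
def square_crop_box(left, top, right, bottom, img_w, img_h, margin=0.3):
    """Return a box around a face rectangle, enlarged by `margin`.

    The box is square before clamping; near the image border it is clipped
    to the image bounds and may end up non-square.
    """
    cx = (left + right) / 2
    cy = (top + bottom) / 2
    half = max(right - left, bottom - top) * (1 + margin) / 2
    x0 = max(0, int(round(cx - half)))
    y0 = max(0, int(round(cy - half)))
    x1 = min(img_w, int(round(cx + half)))
    y1 = min(img_h, int(round(cy + half)))
    return x0, y0, x1, y1


def crop_face(image_path, size=256):
    """Detect the first face in an image and return it as a size x size crop.

    Hypothetical helper: returns None when no face is found.
    """
    # Imported lazily so square_crop_box stays usable without these packages.
    import dlib
    import numpy as np
    from PIL import Image

    image = Image.open(image_path).convert("RGB")
    detector = dlib.get_frontal_face_detector()
    # The second argument upsamples the image once to find smaller faces.
    faces = detector(np.array(image), 1)
    if len(faces) == 0:
        return None

    face = faces[0]
    box = square_crop_box(
        face.left(), face.top(), face.right(), face.bottom(),
        image.width, image.height,
    )
    return image.crop(box).resize((size, size))
```

The cropped image is what would then be passed to the pix2pix generator.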

Using the results of the paper "Differentiable Augmentation for Data-Efficient GAN Training" and the code from its repo, we fine-tune a model on 365 Pepe images.

thispepedoesntexist

Here we use the StyleGAN network blending trick. The aim is to blend a base model that generates people with the fine-tuned model from Step 1. This differs from simply interpolating the weights of the two models, because it lets you control independently which model the low-resolution and high-resolution features come from. The trick allows us to generate faces with a 'Pepe texture'.

We also tried blending the models the other way round, but didn't use the result in further experiments.
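A minimal sketch of the layer-swapping idea, using plain dictionaries in place of real checkpoints. The function names, the layer naming scheme (`synthesis.b64.conv0.weight`), and the swap resolution are illustrative assumptions, not taken from the project's code; real StyleGAN checkpoints may name their layers differently.

```python
import re


def layer_resolution(name):
    """Extract the block resolution from a layer name, or None if absent.

    Assumes names like "synthesis.b64.conv0.weight" (hypothetical scheme).
    """
    match = re.search(r"\bb(\d+)\b", name)
    return int(match.group(1)) if match else None


def blend_state_dicts(low_res_model, high_res_model, swap_resolution):
    """Take layers below `swap_resolution` from one model and the rest from another.

    Layers with no resolution in their name (e.g. the mapping network)
    are kept from `low_res_model`.
    """
    blended = {}
    for name, weight in low_res_model.items():
        resolution = layer_resolution(name)
        if resolution is not None and resolution >= swap_resolution:
            blended[name] = high_res_model[name]
        else:
            blended[name] = weight
    return blended


# Toy example: strings stand in for weight tensors.
base = {
    "mapping.fc0.weight": "base",
    "synthesis.b4.conv1.weight": "base",
    "synthesis.b64.conv0.weight": "base",
}
pepe = {name: "pepe" for name in base}

blended = blend_state_dicts(base, pepe, swap_resolution=32)
```

Here the coarse layers come from the base model and the fine layers from the fine-tuned one, which is presumably the arrangement that yields human face structure with a Pepe texture. Swapping the first two arguments gives the blend in the other direction.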

Now that we have paired images (selfie, styled selfie), we learn this transformation by training a pix2pix model in a supervised manner.
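For reference, the objective from the original pix2pix paper combines a conditional GAN loss with an L1 reconstruction term. This README does not say whether the default settings were kept, so the weighting below is an assumption. Here $x$ is a selfie, $y$ is its styled counterpart, $G$ is the generator, $D$ is the discriminator, and the generator's noise input is omitted for brevity:

$$
\mathcal{L}_{cGAN}(G, D) = \mathbb{E}_{x,y}\left[\log D(x, y)\right] + \mathbb{E}_{x}\left[\log\left(1 - D(x, G(x))\right)\right]
$$

$$
\mathcal{L}_{L1}(G) = \mathbb{E}_{x,y}\left[\lVert y - G(x) \rVert_1\right]
$$

$$
G^{*} = \arg\min_{G} \max_{D} \; \mathcal{L}_{cGAN}(G, D) + \lambda \, \mathcal{L}_{L1}(G)
$$

The paper uses $\lambda = 100$. The L1 term is what makes the training supervised: it pulls each generated image toward its paired target.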

- Pillow

- python-telegram-bot

- numpy

- opencv-python

- dlib

- scipy

- torch

- torchvision

- albumentations

$ python3 custom_bot.py