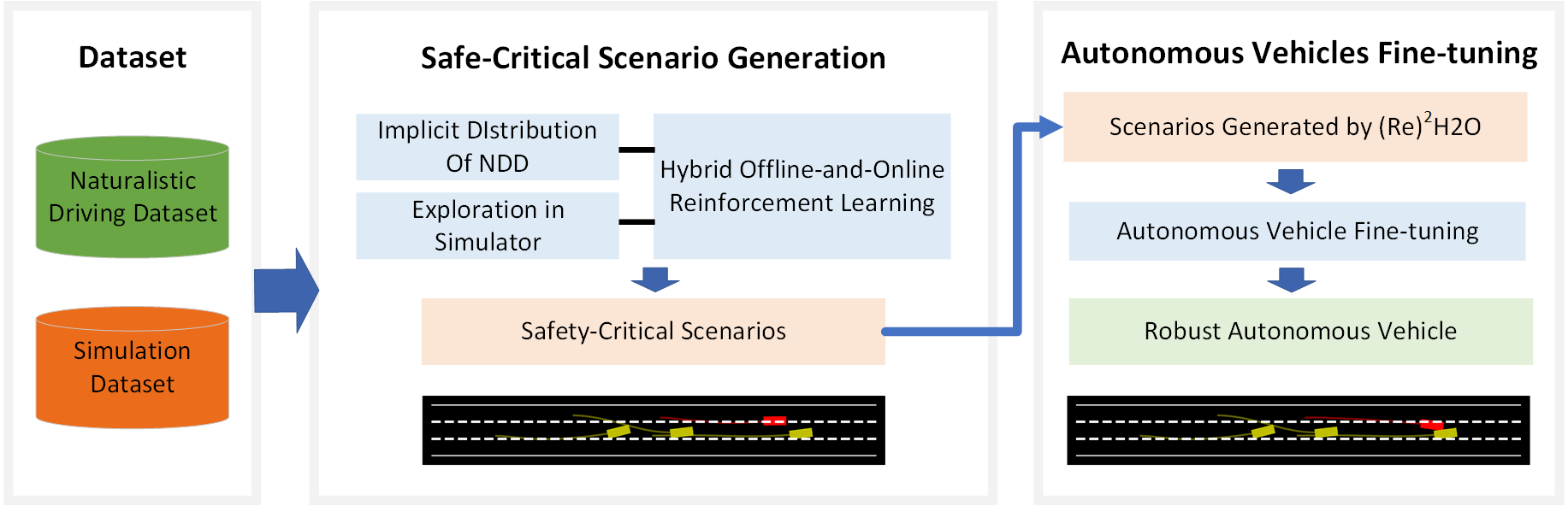

(Re)2H2O (https://arxiv.org/abs/2302.13726) is an approach that combines offline real-world data and online simulation data to generate diverse and challenging scenarios for testing autonomous vehicles (AVs). Using a Reversely Regularized Hybrid Offline-and-Online Reinforcement Learning framework, (Re)2H2O penalizes Q-values on real-world data and rewards Q-values on simulated data so that the generated scenarios are both varied and adversarial. Extensive experiments show that (Re)2H2O generates riskier scenarios than competitive baselines and generalizes to various autonomous driving models. The generated scenarios are also shown to be effective for fine-tuning AVs.
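The reversed regularization described above can be illustrated with a toy critic objective. This is only a sketch under simplifying assumptions: the function names, the mean-difference form of the regularizer, and the weight `beta` are hypothetical and are not taken from the paper or from this repository's code.

```python
from statistics import fmean


def reversed_regularizer(q_real, q_sim, beta=1.0):
    """Toy regularization term (assumed form, not the paper's exact loss).

    Minimizing it pushes Q-values on real-world samples down (penalty)
    and Q-values on simulated samples up (reward).
    """
    return beta * (fmean(q_real) - fmean(q_sim))


def critic_loss(td_errors, q_real, q_sim, beta=1.0):
    """Mean squared TD error plus the toy reversed regularizer."""
    bellman = fmean([e * e for e in td_errors])
    return bellman + reversed_regularizer(q_real, q_sim, beta)


if __name__ == "__main__":
    # Hypothetical batch values, for illustration only.
    print(critic_loss([1.0, -1.0], q_real=[1.0, 3.0], q_sim=[2.0, 6.0], beta=0.5))
```

The sign convention is the point of the sketch: the real-data term enters with a positive weight and the simulated-data term with a negative one, so gradient descent on the loss lowers the former and raises the latter.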

To install the dependencies, run the command:

pip install -r requirements.txt

Add this repo directory to your PYTHONPATH environment variable:

export PYTHONPATH="$PYTHONPATH:$(pwd)"

SUMO (Simulation of Urban MObility) must also be installed for this project.
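Before running anything, it can help to confirm that a SUMO executable is reachable. The helper below is a minimal sketch, not part of this repository: `find_sumo` is a hypothetical name, and it assumes the binary is called `sumo` and that, if it is not on `PATH`, the conventional `SUMO_HOME` environment variable points at the installation.

```python
import os
import shutil


def find_sumo():
    """Return the path to a SUMO executable, or None if none is found."""
    # Prefer a binary that is already on PATH.
    on_path = shutil.which("sumo")
    if on_path:
        return on_path
    # Otherwise fall back to $SUMO_HOME/bin/sumo, if SUMO_HOME is set.
    sumo_home = os.environ.get("SUMO_HOME")
    if sumo_home:
        return shutil.which(os.path.join(sumo_home, "bin", "sumo"))
    return None


if __name__ == "__main__":
    print(find_sumo() or "SUMO not found: install it or set SUMO_HOME")
```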

The traffic data must be downloaded from the highD dataset. Afterward, process the dataset with the following steps:

- Split the highD dataset into traffic flow data containing a specific number of vehicles, where rootpath is the path of the highD dataset, num_agents is the number of vehicles in the traffic flow, and dis_threshold is the maximum distance threshold between vehicles in the initial state:

  python dataset/highD_cut.py rootpath=./highD-dataset num_agents=2 dis_threshold=15

- Process the obtained traffic flow data into a dataset suitable for reinforcement learning algorithms:

  python dataset/dataset.py rootpath_highd="./highD-dataset/data/" rootpath_cut="./highD-dataset/highd_cut/"
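To make the role of dis_threshold in the first step concrete, the sketch below checks whether a group of vehicles is close enough in its initial state. It is an illustration only: the function name, the (x, y) position format, and the reading of the threshold as a bound on every pairwise distance are assumptions, and dataset/highD_cut.py may apply the threshold differently.

```python
import math
from itertools import combinations


def within_threshold(initial_positions, dis_threshold):
    """Return True if every pair of vehicles starts within dis_threshold.

    initial_positions: list of (x, y) tuples, one per vehicle (assumed format).
    """
    return all(
        math.dist(a, b) <= dis_threshold
        for a, b in combinations(initial_positions, 2)
    )


if __name__ == "__main__":
    # Two hypothetical vehicles 5 units apart.
    print(within_threshold([(0.0, 0.0), (3.0, 4.0)], dis_threshold=15))
```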

To train the adv-agents via (Re)2H2O, run:

python main.py --ego_policy="-sumo" --adv_policy="-RL" --n_epochs_ego=0 --n_epochs_ego=1000 --num_agents=2 --realdata_path="../dataset/r3_dis_20_car_3/" --is_save=False

The number of adv-agents is set through the parameter num_agents, and the parameter realdata_path needs to match num_agents. To save the scenarios generated during training and the trained model, set is_save=True.

To train the policy of the adv-agents, adv_policy should be set to "-RL", while ego_policy can be set to any specific policy.

Also, if you want to train the ego-agents, we provide an algorithm based on SAC (Soft Actor-Critic). Run:

python main.py --ego_policy="RL" --adv_policy="sumo" --n_epochs_ego=500 --n_epochs_ego=0 --num_agents=2 --realdata_path="../dataset/r3_dis_20_car_3/" --is_save=False

To evaluate training effectiveness and further enhance model performance, a process for interactive training between ego-agents and adv-agents has been designed. Here is a running example:

python main.py --ego_policy="RL-sumo-RL" --adv_policy="sumo-RL-sumo" --n_epochs_ego=500-500 --n_epochs_ego=1000 --num_agents=2 --realdata_path="../dataset/r3_dis_20_car_3/" --is_save=False

To obtain the learning curves, log in to your personal wandb account with your wandb API key:

export WANDB_API_KEY=YOUR_WANDB_API_KEY

Then set the parameter use_wandb=True to turn on online synchronization.

Generated scenarios can be visualized with the functions in utils/scenarios_reappear.py.
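The hyphen-separated policy strings in the interactive training example (such as "RL-sumo-RL" and "sumo-RL-sumo") can be read as a phase-by-phase schedule. The sketch below shows one possible reading, assuming each token names the policy used in one training phase and that the ego and adv strings are aligned phase by phase. `parse_schedule` is a hypothetical helper; how main.py actually interprets these arguments is not confirmed here.

```python
def parse_schedule(ego_policy: str, adv_policy: str):
    """Pair up ego and adv policies per phase (assumed interpretation)."""
    ego_phases = ego_policy.split("-")
    adv_phases = adv_policy.split("-")
    if len(ego_phases) != len(adv_phases):
        raise ValueError("ego_policy and adv_policy must have the same number of phases")
    return list(zip(ego_phases, adv_phases))


if __name__ == "__main__":
    for i, (ego, adv) in enumerate(parse_schedule("RL-sumo-RL", "sumo-RL-sumo"), 1):
        print(f"phase {i}: ego={ego}, adv={adv}")
```

Under this reading, the example alternates which side is being trained with RL while the other side is driven by SUMO.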

If you use the (Re)2H2O framework or code in your project, please cite the following paper:

@INPROCEEDINGS{niu2023re,
  author={Niu, Haoyi and Ren, Kun and Xu, Yizhou and Yang, Ziyuan and Lin, Yichen and Zhang, Yi and Hu, Jianming},
  booktitle={2023 IEEE Intelligent Vehicles Symposium (IV)},
  title={(Re)2H2O: Autonomous Driving Scenario Generation via Reversely Regularized Hybrid Offline-and-Online Reinforcement Learning},
  year={2023},
  volume={},
  number={},
  pages={1-8},
  doi={10.1109/IV55152.2023.10186559}
}