A project developing privacy-preserving, vertically-distributed learning.

- 🔗 Links vertically partitioned data without exposing membership using Private Set Intersection (PSI)

- 🔒 Trains a model on vertically partitioned data using SplitNNs, so only data holders can access data

Vertically-partitioned data is data in which fields relating to a single record are distributed across multiple datasets. For example, multiple hospitals may have admissions data on the same individuals.
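To make the layout concrete, here is a minimal sketch of two vertically partitioned datasets. The record IDs, field names, and the `combine` helper are all hypothetical and are not part of PyVertical. The join shown is the naive approach: both holders would have to reveal their IDs and fields to each other, so it only illustrates the shape of the data.

```python
# Hypothetical records: two holders store different fields for the same patients.
hospital_a = {
    "patient-001": {"admitted": "2020-01-03", "ward": "cardiology"},
    "patient-002": {"admitted": "2020-02-11", "ward": "oncology"},
}
hospital_b = {
    "patient-002": {"blood_type": "O+"},
    "patient-003": {"blood_type": "A-"},
}


def combine(left, right):
    """Naively join the fields of records whose ID appears in both datasets."""
    shared_ids = sorted(left.keys() & right.keys())
    return {rid: {**left[rid], **right[rid]} for rid in shared_ids}


# Only patient-002 is present in both datasets, so only that record is joined.
combined = combine(hospital_a, hospital_b)
```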

Vertically-partitioned data could be applied to solve vital problems, but data holders can't combine their datasets by simply comparing notes with other data holders unless they want to break user privacy. PyVertical uses PSI to link datasets in a privacy-preserving way. We train SplitNNs on the partitioned data to ensure the data remains separate throughout the entire process.
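The split-network idea can be sketched in plain Python. This is a toy illustration, not PyVertical's implementation and not the `syft` API: the class names, layer sizes, learning rate, and data are all assumptions. The feature holder only ever sends activations, and the label holder only ever sends back a gradient, so neither side sees the other's raw data.

```python
import math
import random


class FeatureSegment:
    """First part of the network; lives with the holder of the input features."""

    def __init__(self, n_in, n_hidden, rng):
        self.weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
                        for _ in range(n_hidden)]

    def forward(self, x):
        self.x = x
        self.hidden = [math.tanh(sum(w * v for w, v in zip(row, x)))
                       for row in self.weights]
        return self.hidden  # only these activations leave the feature holder

    def backward(self, grad_hidden, lr):
        for i, row in enumerate(self.weights):
            grad_pre = grad_hidden[i] * (1.0 - self.hidden[i] ** 2)  # tanh derivative
            for j in range(len(row)):
                row[j] -= lr * grad_pre * self.x[j]


class LabelSegment:
    """Second part of the network; lives with the holder of the labels."""

    def __init__(self, n_hidden, rng):
        self.weights = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]

    def train_step(self, hidden, label, lr):
        logit = sum(w * h for w, h in zip(self.weights, hidden))
        prob = 1.0 / (1.0 + math.exp(-logit))
        loss = -(label * math.log(prob) + (1 - label) * math.log(1.0 - prob))
        error = prob - label  # d(loss)/d(logit) for sigmoid + cross-entropy
        grad_hidden = [error * w for w in self.weights]  # computed before the update
        self.weights = [w - lr * error * h for w, h in zip(self.weights, hidden)]
        return loss, grad_hidden  # only this gradient is sent back


rng = random.Random(0)
feature_holder = FeatureSegment(n_in=2, n_hidden=3, rng=rng)
label_holder = LabelSegment(n_hidden=3, rng=rng)

# Toy, linearly separable data: the label is 1 when the first feature dominates.
features = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [0.1, 0.8]]
labels = [1, 0, 1, 0]

losses = []
for epoch in range(200):
    epoch_loss = 0.0
    for x, y in zip(features, labels):
        hidden = feature_holder.forward(x)            # features -> activations
        loss, grad_hidden = label_holder.train_step(hidden, y, lr=0.5)
        feature_holder.backward(grad_hidden, lr=0.5)  # gradient -> feature holder
        epoch_loss += loss
    losses.append(epoch_loss)
```

In a real deployment the two segments would run on separate machines, and the activations and gradients would travel over a network rather than through local function calls.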

See the changelog for information on the current status of PyVertical.

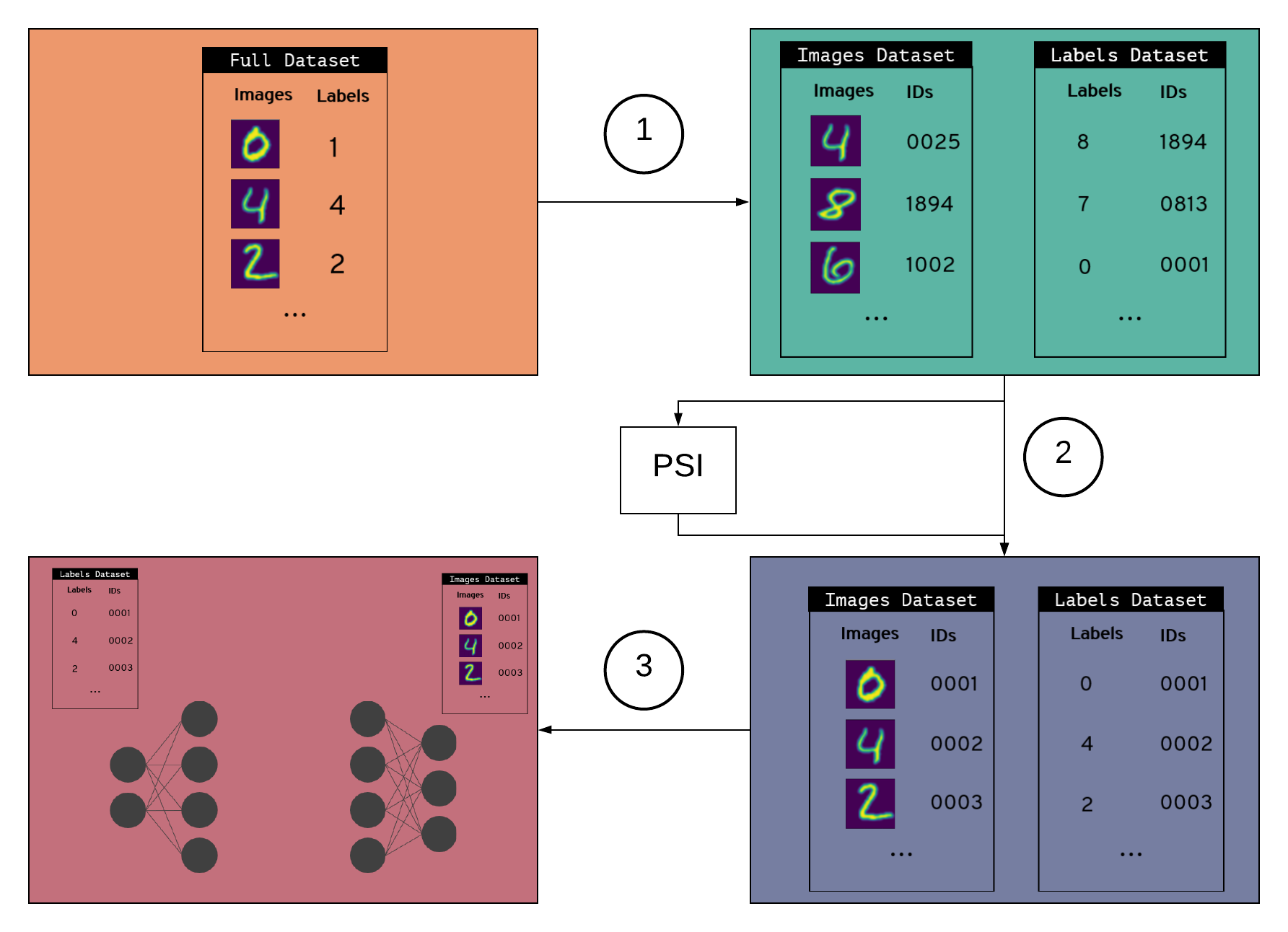

PyVertical process:

- Create partitioned dataset
  - Simulate real-world partitioned dataset by splitting MNIST into a dataset of images and a dataset of labels
  - Give each data point (image + label) a unique ID
  - Randomly shuffle each dataset
  - Randomly remove some elements from each dataset
- Link datasets using PSI
  - Use PSI to link indices in each dataset using unique IDs
  - Reorder datasets using linked indices
- Train a split neural network
  - Hold both datasets in a dataloader
  - Send images to first part of split network
  - Send labels to second part of split network
  - Train the network
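The first two stages of the process above can be sketched as follows. This is a rough illustration under stated assumptions: the function names are hypothetical, tiny lists stand in for MNIST images, and `link` uses a plain set intersection as a stand-in for PSI. A plain intersection is not privacy-preserving; it only shows what the linking step produces.

```python
import random


def make_partitioned_datasets(samples, drop_fraction, rng):
    """Split (image, label) pairs into two ID-keyed datasets, then shuffle and thin each."""
    images = [(f"id-{i}", image) for i, (image, _) in enumerate(samples)]
    labels = [(f"id-{i}", label) for i, (_, label) in enumerate(samples)]
    partitions = []
    for dataset in (images, labels):
        rng.shuffle(dataset)  # each holder stores its records in its own order
        keep = len(dataset) - int(len(dataset) * drop_fraction)
        partitions.append(dataset[:keep])  # each holder is missing some records
    return partitions


def link(images, labels):
    """Stand-in for PSI: find shared IDs and reorder both datasets to match."""
    shared = sorted({i for i, _ in images} & {i for i, _ in labels})
    image_by_id = dict(images)
    label_by_id = dict(labels)
    return [(image_by_id[i], label_by_id[i]) for i in shared]


# Toy stand-in for MNIST: each "image" is a short list, each label a digit.
samples = [([n, n], n % 10) for n in range(20)]
image_holder, label_holder = make_partitioned_datasets(samples, 0.25, random.Random(0))
linked = link(image_holder, label_holder)
```

After linking, every pair in `linked` holds an image and the label that belongs to it, even though each holder shuffled and dropped records independently.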

This project is written in Python. The work is displayed in Jupyter notebooks.

To install the dependencies, we recommend using Conda:

- Clone this repository
- In the command line, navigate to your local copy of the repository
- Run `conda env create -f environment.yml`
  - This creates an environment `pyvertical-dev`
  - Comes with most dependencies you will need
- Activate the environment with `conda activate pyvertical-dev`
- Run `pip install syft[udacity]`
- Run `conda install notebook`

N.b. Installing the dependencies takes several steps to circumvent versioning incompatibility between `syft` and `jupyter`. In the future, all packages will be moved into the `environment.yml`.

In order to use PSI with PyVertical, you need to install bazel to build the necessary Python bindings for the C++ core. After you have installed bazel, run the build script with `.github/workflows/scripts/build-psi.sh`. This should generate a `_psi_bindings.so` file and place it in `src/psi/`.
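If you want to confirm that the build produced the bindings, a small check like the one below may help. The helper name `psi_bindings_built` is hypothetical and not part of PyVertical; it only tests that the file named above exists at the expected path.

```python
from pathlib import Path


def psi_bindings_built(repo_root="."):
    """Return True if _psi_bindings.so exists under src/psi/ in the given repository root."""
    return (Path(repo_root) / "src" / "psi" / "_psi_bindings.so").is_file()
```

Call `psi_bindings_built()` from the root of your local copy of the repository after running the build script.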

You can instead opt to use Docker. To run:

- Build the image with `docker build -t pyvertical:latest .`
- Launch a container with `docker run -it -p 8888:8888 pyvertical:latest`
  - Defaults to launching jupyter lab

Check out `examples/PyVertical Example.ipynb` to see PyVertical in action.

- MVP
  - Simple example on MNIST dataset
  - One data holder has images, the other has labels
- Extension demonstration
  - Apply process to electronic health records (EHR) dataset
  - Dual-headed SplitNN: input data is split amongst several data holders
- Integrate with `syft`

Pull requests are welcome. For major changes, please open an issue first to discuss what you would like to change.

Read the OpenMined contributing guidelines and styleguide for more information.

|   |   |   |   |
|---|---|---|---|
| TTitcombe | Pavlos-p | H4LL | rsandmann |

We use pytest to test the source code. To run the tests manually:

- In the command line, navigate to the root of this repository
- Run `python -m pytest`

CI also checks the code conforms to flake8 standards and black formatting.