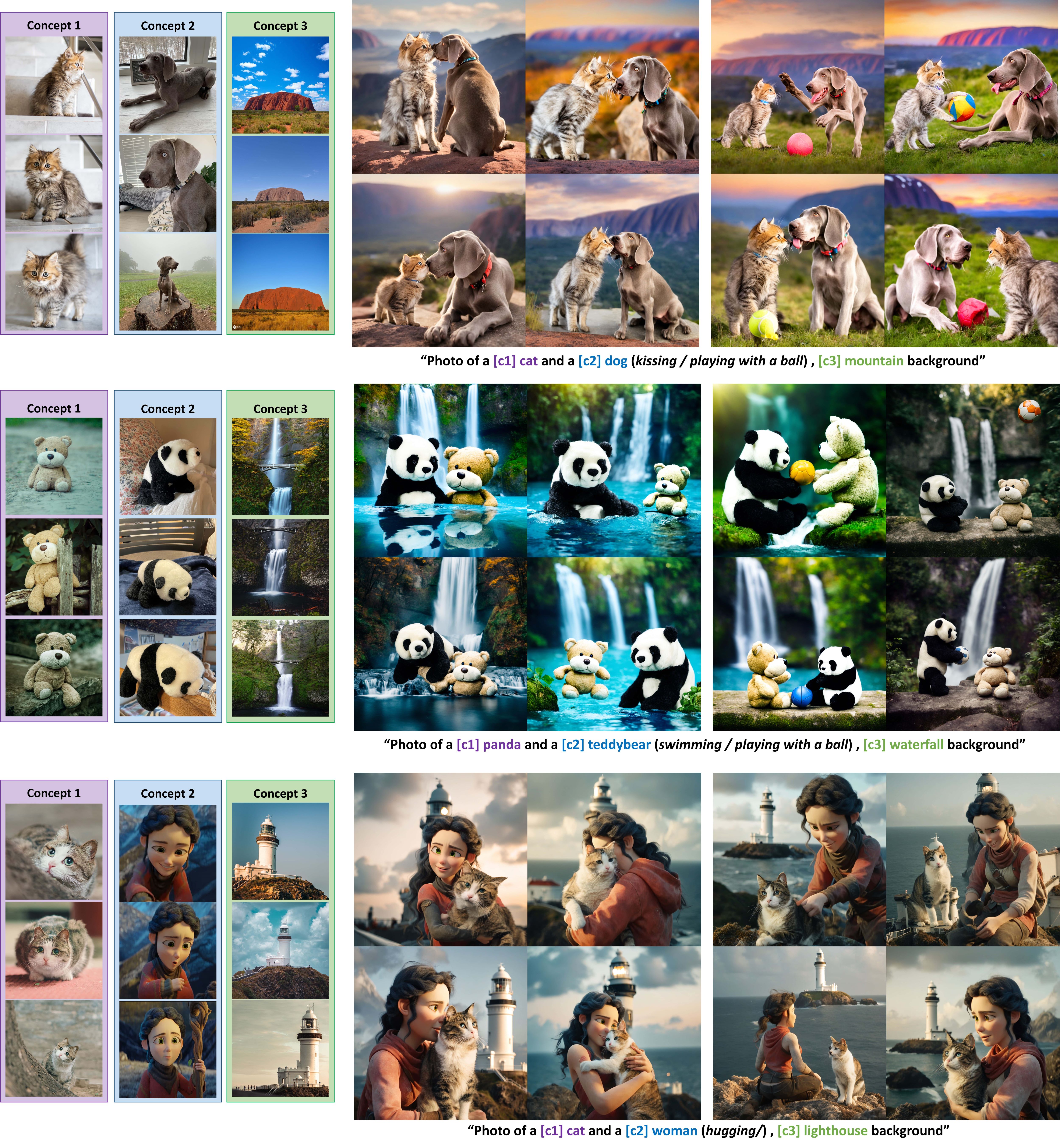

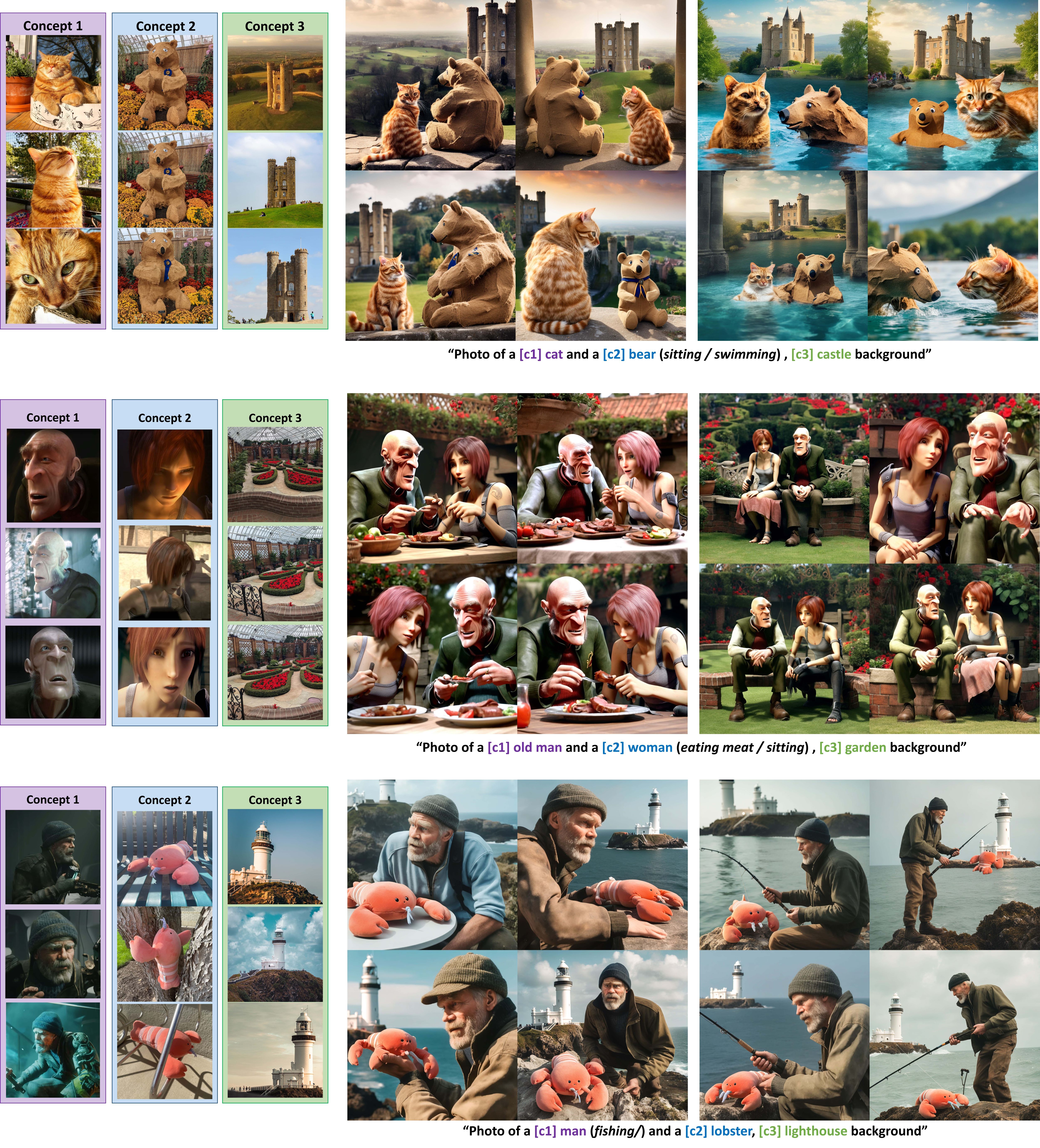

Official source code for "TweedieMix: Improving Multi-Concept Fusion for Diffusion-based Image/Video Generation"

$ conda create -n tweediemix python=3.11.8

$ conda activate tweediemix

$ pip install torch==2.2.2 torchvision==0.17.2 torchaudio==2.2.2 --index-url https://download.pytorch.org/whl/cu118

$ pip install -r requirements.txt

$ git clone https://github.com/KwonGihyun/TweedieMix

$ cd TweedieMix/text_segment

$ git clone https://github.com/IDEA-Research/GroundingDINO.git

$ cd GroundingDINO/

$ pip install -e .

Download and install an xformers wheel that is compatible with your environment from https://download.pytorch.org/whl/xformers/

e.g. pip install xformers-0.0.26+cu118-cp311-cp311-manylinux2014_x86_64.whl
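The wheel filename above encodes a CUDA tag (`cu118`), a CPython tag (`cp311`), and a platform (`x86_64`). As a rough aid for picking a matching wheel, the following sketch prints the corresponding values for the active environment. The helper names `wheel_tags` and `cuda_tag` are illustrative and not part of this repository; the CUDA tag is read from the installed PyTorch build, if any.

```python
import platform
import sys


def wheel_tags():
    """Return the CPython tag (e.g. 'cp311') and machine architecture of this interpreter."""
    py_tag = f"cp{sys.version_info.major}{sys.version_info.minor}"
    return py_tag, platform.machine()


def cuda_tag():
    """Return a tag such as 'cu118' derived from the installed torch build, or None."""
    try:
        import torch
    except ImportError:
        return None
    version = torch.version.cuda  # None for CPU-only builds
    return "cu" + version.replace(".", "") if version else None


if __name__ == "__main__":
    py_tag, machine = wheel_tags()
    print(f"python tag: {py_tag}, machine: {machine}, cuda tag: {cuda_tag()}")
```

Compare the printed values with the tags in the wheel filename before installing.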

If you have problems installing GroundingDINO, please refer to the original repository.
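After the installation steps above, a quick import check can confirm that the main dependencies are visible to the active environment. This is a minimal sketch, not part of the repository: the helper `missing_packages` is hypothetical, and the module name `groundingdino` is assumed to be the import name installed by `pip install -e .` in the GroundingDINO directory.

```python
import importlib.util


def missing_packages(names):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Assumed import names for the packages installed above.
    required = ["torch", "torchvision", "xformers", "groundingdino"]
    missing = missing_packages(required)
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All required packages were found.")
```

The check only locates the packages; it does not verify that their versions are compatible with each other.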

Train a single-concept-aware model using the Custom Diffusion framework:

bash singleconcept_train.sh

Most of the concept datasets can be downloaded from the CustomConcept101 dataset.

We provide both Custom Diffusion (key, query weight fine-tuning) and Low-Rank Adaptation (LoRA).

We also provide several pre-trained weights at LINK.

Sampling multi-concept aware images with personalized checkpoints

We provide scripts for multi-concept sampling, for example:

bash sample_catdog.sh

We also provide several scripts for multi-concept generation with either Custom Diffusion weights or LoRA weights.

For different generation settings, adjust the parameters in the bash file.

After generating a multi-concept image, generate video output using image-to-video (I2V) diffusion.

We provide a script for I2V generation:

python run_video.py

For different generation settings, adjust the parameters in the script file.
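The two stages described above (image sampling, then I2V generation) can be chained from a single entry point. The sketch below is illustrative only: `PIPELINE` and `run_pipeline` are hypothetical names, and it assumes the commands are run from the repository root and that `run_video.py` has already been edited to read the image produced by the sampling script.

```python
import subprocess

# Commands taken from this README, in the order they are meant to run.
PIPELINE = [
    ["bash", "sample_catdog.sh"],  # stage 1: multi-concept image sampling
    ["python", "run_video.py"],    # stage 2: image-to-video generation
]


def run_pipeline(commands, runner=subprocess.run):
    """Run each command in order; check=True stops at the first failing stage."""
    for command in commands:
        runner(command, check=True)
```

Calling `run_pipeline(PIPELINE)` runs both stages; because `check=True` raises `subprocess.CalledProcessError` on a non-zero exit code, the video stage is skipped if sampling fails.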

If you find this paper useful for your research, please consider citing:

@InProceedings{kwon2024tweedie,

title={TweedieMix: Improving Multi-Concept Fusion for Diffusion-based Image/Video Generation},

author={Kwon, Gihyun and Ye, Jong Chul},

booktitle={https://arxiv.org/abs/2410.05591},

year={2024}

}

Please also refer to our previous version, Concept Weaver:

@InProceedings{kwon2024concept,

author = {Kwon, Gihyun and Jenni, Simon and Li, Dingzeyu and Lee, Joon-Young and Ye, Jong Chul and Heilbron, Fabian Caba},

title = {Concept Weaver: Enabling Multi-Concept Fusion in Text-to-Image Models},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2024},

pages = {8880-8889}

}

Our source code is based on Plug-and-Play Diffusion, Custom Diffusion, and LangSAM.