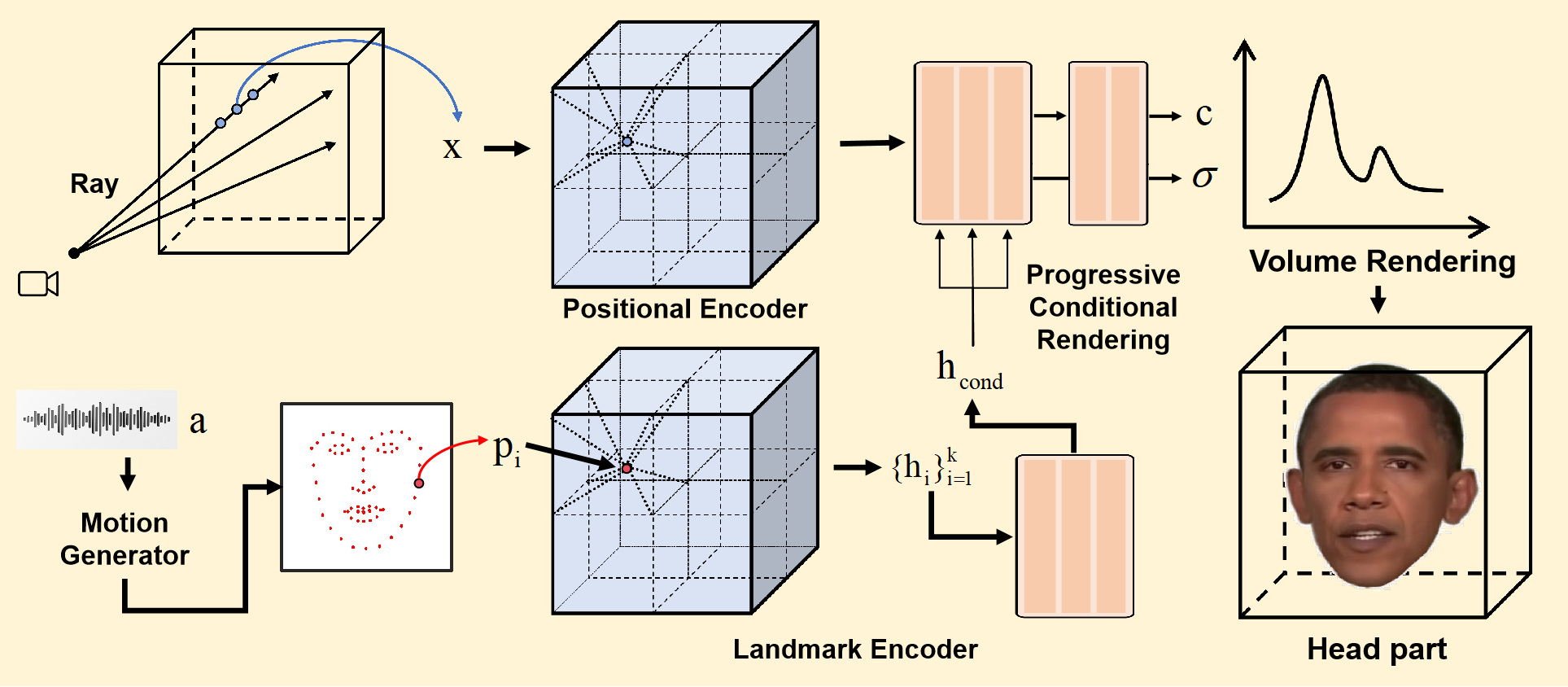

R2-Talker: Realistic Real-Time Talking Head Synthesis with Hash Grid Landmarks Encoding and Progressive Multilayer Conditioning

This is the official repository for the paper: R2-Talker: Realistic Real-Time Talking Head Synthesis with Hash Grid Landmarks Encoding and Progressive Multilayer Conditioning.

- ☐ Add progressive optimization for hash grid

- ☐ Add landmark generator

- ☑ Add landmark encoder

- ☑ Support methods: R2-Talker, RAD-NeRF, Geneface+instant-ngp

| Method | Driving Features | Audio Encoder |
|---|---|---|
| R2-Talker | 3D Facial Landmarks | Hash grid encoder |
| RAD-NeRF | Audio Features | Audio Feature Extractor |
| Geneface+instant-ngp | 3D Facial Landmarks | Audio Feature Extractor |

Install dependency & Build extension (optional)

Tested on Ubuntu 22.04, PyTorch 1.12 and CUDA 11.6.

git clone git@github.com:KylinYee/R2-Talker-code.git

cd R2-Talker-code

# for ubuntu, portaudio is needed for pyaudio to work.

sudo apt install portaudio19-dev

pip install -r requirements.txt

By default, we use `load` to build the extension at runtime.

However, this may be inconvenient sometimes.

Therefore, we also provide the setup.py to build each extension:

# install all extension modules

bash scripts/install_ext.sh

Preparation & Pre-processing Custom Training Video

## install pytorch3d

pip install "git+https://github.com/facebookresearch/pytorch3d.git"

## prepare face-parsing model

wget https://github.com/YudongGuo/AD-NeRF/blob/master/data_util/face_parsing/79999_iter.pth?raw=true -O data_utils/face_parsing/79999_iter.pth

## prepare basel face model

# 1. download `01_MorphableModel.mat` from https://faces.dmi.unibas.ch/bfm/main.php?nav=1-2&id=downloads and put it under `data_utils/face_tracking/3DMM/`

# 2. download other necessary files from AD-NeRF's repository:

wget https://github.com/YudongGuo/AD-NeRF/blob/master/data_util/face_tracking/3DMM/exp_info.npy?raw=true -O data_utils/face_tracking/3DMM/exp_info.npy

wget https://github.com/YudongGuo/AD-NeRF/blob/master/data_util/face_tracking/3DMM/keys_info.npy?raw=true -O data_utils/face_tracking/3DMM/keys_info.npy

wget https://github.com/YudongGuo/AD-NeRF/blob/master/data_util/face_tracking/3DMM/sub_mesh.obj?raw=true -O data_utils/face_tracking/3DMM/sub_mesh.obj

wget https://github.com/YudongGuo/AD-NeRF/blob/master/data_util/face_tracking/3DMM/topology_info.npy?raw=true -O data_utils/face_tracking/3DMM/topology_info.npy

# 3. run convert_BFM.py

cd data_utils/face_tracking

python convert_BFM.py

cd ../..

## prepare ASR model

# if you want to use DeepSpeech as AD-NeRF, you should install tensorflow 1.15 manually.

# else, we also support Wav2Vec in PyTorch.

- Put the training video under `data/<ID>/<ID>.mp4`. The video must be 25 FPS, with all frames containing the talking person. The resolution should be about 512x512, and the duration about 1-5 min.

# an example training video from AD-NeRF

mkdir -p data/obama

wget https://github.com/YudongGuo/AD-NeRF/blob/master/dataset/vids/Obama.mp4?raw=true -O data/obama/obama.mp4
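The video requirements above (25 FPS, roughly 512x512, about 1-5 minutes) can be checked before starting the long pre-processing run. The following is a minimal sketch, not part of this repository: `check_video` is a hypothetical helper, the 25% size tolerance is an assumption, and the metadata is expected to come from a tool of your choice (for example `ffprobe` or OpenCV).

```python
def check_video(fps, width, height, duration_s, size_tolerance=0.25):
    """Return a list of problems; an empty list means the video looks usable.

    Thresholds follow the README text (25 FPS, about 512x512, about 1-5 min).
    The tolerance on the resolution is an assumption, not a project rule.
    """
    problems = []
    if abs(fps - 25.0) > 0.01:
        problems.append(f"fps is {fps}, expected 25")
    target = 512
    for name, value in (("width", width), ("height", height)):
        if abs(value - target) > size_tolerance * target:
            problems.append(f"{name} is {value}, expected about {target}")
    if not 60 <= duration_s <= 300:
        problems.append(f"duration is {duration_s:.0f}s, expected about 60-300s")
    return problems


if __name__ == "__main__":
    # Example metadata values; replace them with those of your own video.
    for issue in check_video(fps=30, width=1920, height=1080, duration_s=20):
        print("warning:", issue)
```

A video that fails the frame-rate check can usually be re-encoded to 25 FPS before it is placed under `data/<ID>/`.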

- Run the script (may take hours depending on the video length):

# run all steps

python data_utils/process.py data/<ID>/<ID>.mp4

# if you want to run a specific step

python data_utils/process.py data/<ID>/<ID>.mp4 --task 1 # extract audio wave

- A 3D facial landmark generator will be added in the future. To process custom data, please refer to GeneFace to obtain `trainval_dataset.npy`, use our `binarizedFile2landmarks.py` to extract the landmarks, and put them in `data/<ID>/`.

- File structure after finishing all steps:

./data/<ID>
├──<ID>.mp4 # original video
├──ori_imgs # original images from video
│  ├──0.jpg
│  ├──0.lms # 2D landmarks
│  ├──...
├──gt_imgs # ground truth images (static background)
│  ├──0.jpg
│  ├──...
├──parsing # semantic segmentation
│  ├──0.png
│  ├──...
├──torso_imgs # inpainted torso images
│  ├──0.png
│  ├──...
├──aud.wav # original audio
├──aud_eo.npy # audio features (wav2vec)
├──aud.npy # audio features (deepspeech)
├──bc.jpg # default background
├──track_params.pt # raw head tracking results
├──transforms_train.json # head poses (train split)
├──transforms_val.json # head poses (test split)
├──aud_idexp_train.npy # head landmarks (train split)
├──aud_idexp_val.npy # head landmarks (test split)
├──aud_idexp.npy # head landmarks
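The layout above can be verified before training starts. The following is a minimal sketch, assuming the names listed in the file structure: `missing_entries` is a hypothetical helper that is not part of this repository. Which entries are strictly required for a given `--method` / `--cond_type` is an assumption, and the audio feature files (`aud_eo.npy`, `aud.npy`) are left out because they depend on the chosen ASR model.

```python
from pathlib import Path

# Names copied from the file structure listed above. Treating all of them
# as required is an assumption made for this sketch.
EXPECTED_FILES = [
    "aud.wav",
    "bc.jpg",
    "track_params.pt",
    "transforms_train.json",
    "transforms_val.json",
    "aud_idexp_train.npy",
    "aud_idexp_val.npy",
]
EXPECTED_DIRS = ["ori_imgs", "gt_imgs", "parsing", "torso_imgs"]


def missing_entries(data_dir):
    """Return the expected files and directories that are absent in data_dir."""
    root = Path(data_dir)
    missing = [name for name in EXPECTED_FILES if not (root / name).is_file()]
    missing += [name + "/" for name in EXPECTED_DIRS if not (root / name).is_dir()]
    return missing


if __name__ == "__main__":
    import sys

    # Usage: python check_layout.py data/<ID>
    for entry in missing_entries(sys.argv[1]):
        print("missing:", entry)
```

An empty result only means the entries exist; it does not validate their contents.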

For your convenience, we provide some processed results of the Obama video here. You can also download more raw videos from GeneFace here.

Quick Start & Detailed Usage

We have prepared relevant materials here.

Please download these materials and put them in a new `pretrained` folder.

- File structure after finishing all steps:

./pretrained
├──r2talker_Obama_idexp_torso.pth # pretrained model
├──test_eo.npy # driving audio features (wav2vec)
├──test_lm3ds.npy # driving audio features (landmarks)
├──test.wav # raw driving audio
├──bc.jpg # default background
├──transforms_val.json # head poses
├──test.mp4 # raw driving video

- Run inference:

# save video to trail_test/results/*.mp4

sh scripts/test_pretrained.sh

The first run will take some time to compile the CUDA extensions.

# step.1 train (head)

# by default, we load data from disk on the fly.

# we can also preload all data to CPU/GPU for faster training, but this is very memory-hungry for large datasets.

# `--preload 0`: load from disk (default, slower).

# `--preload 1`: load to CPU, requires ~70G CPU memory (slightly slower)

# `--preload 2`: load to GPU, requires ~24G GPU memory (fast)

python main.py data/Obama/ --workspace trial_r2talker_Obama_idexp/ -O --iters 200000 --method r2talker --cond_type idexp

# step.2 train (finetune lips for another 50000 steps, run after the above command!)

python main.py data/Obama/ --workspace trial_r2talker_Obama_idexp/ -O --finetune_lips --iters 250000 --method r2talker --cond_type idexp

# step.3 train (torso)

# <head>.pth should be the latest checkpoint in trial_obama

python main.py data/Obama/ --workspace trial_r2talker_Obama_idexp_torso/ -O --torso --iters 200000 --head_ckpt trial_r2talker_Obama_idexp/checkpoints/ngp_ep0035.pth --method r2talker --cond_type idexp

Check the scripts directory for more provided examples.
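Step 3 expects the latest head checkpoint, and its file name changes with the number of epochs trained. The following is a minimal sketch of how that lookup could be automated. `latest_checkpoint` and `torso_command` are hypothetical helpers, not part of this repository, and the `ngp_ep<NNNN>.pth` naming pattern is an assumption inferred from the example path above. The command-line flags are copied from the step 3 command.

```python
import re
from pathlib import Path

# Assumed naming pattern, inferred from the example `ngp_ep0035.pth`.
_CKPT_RE = re.compile(r"ngp_ep(\d+)\.pth$")


def latest_checkpoint(ckpt_dir):
    """Return the checkpoint with the highest epoch number, or None if absent."""
    best, best_epoch = None, -1
    for path in Path(ckpt_dir).glob("ngp_ep*.pth"):
        match = _CKPT_RE.match(path.name)
        if match and int(match.group(1)) > best_epoch:
            best_epoch, best = int(match.group(1)), path
    return best


def torso_command(data_dir, head_workspace, torso_workspace, iters=200000):
    """Build the step 3 (torso) training command as an argument list."""
    ckpt = latest_checkpoint(Path(head_workspace) / "checkpoints")
    if ckpt is None:
        raise FileNotFoundError(f"no head checkpoint found in {head_workspace}")
    return [
        "python", "main.py", str(data_dir),
        "--workspace", str(torso_workspace),
        "-O", "--torso", "--iters", str(iters),
        "--head_ckpt", str(ckpt),
        "--method", "r2talker", "--cond_type", "idexp",
    ]
```

The returned list can be passed to `subprocess.run` or joined with spaces and printed for inspection.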

This code relies heavily on RAD-NeRF, GeneFace, and AD-NeRF. Thanks to the authors of these great projects.

@article{zhiling2023r2talker,

title={R2-Talker: Realistic Real-Time Talking Head Synthesis with Hash Grid Landmarks Encoding and Progressive Multilayer Conditioning},

author={Zhiling Ye and Liangguo Zhang and Dingheng Zeng and Quan Lu and Ning Jiang},

journal={arXiv preprint arXiv:2312.05572},

year={2023}

}