This project is an attempt to provide data lineage visualization for on-premises deployments. It is based on Confluent audit logs. The aim is to visualize producers and consumers the way Stream Lineage does in Confluent Cloud.

Disclaimer: this is an experimental project (a POC) developed in my free time.

    ./start.sh

This script deploys the following stack:

| Service | Component | Port forwarding | Comment |

|---|---|---|---|

| broker | Confluent Server | 19094 | SASL_PLAINTEXT:19094 |

| zookeeper | Zookeeper | 22181 | ZOOKEEPER_CLIENT_PORT = 22181 |

| zookeeper-add-kafka-users | / | / | Used at the beginning to create multiple kafka users |

| schema-registry | Confluent Schema Registry | 8081 | Provide a serving layer for your metadata |

| connect | Kafka Connect | 8083 | elasticsearch, activemq, activemq-sink and datagen connectors are already installed |

| ksqldb-server | Confluent KsqlDB | 8088 | / |

| ksqldb-cli | Ksqldb CLI | / | Used for deploying ksqldb queries |

| control-center | Confluent Control Center | 9021 | A web-based tool for managing and monitoring Apache Kafka®. |

| elasticsearch | Elasticsearch | 9300,9200 | Used in downstream system for persisting an aggregation from a Kafka topic |

| data-lineage-forwarder | Data Lineage Forwarder | / | A Kafka Streams application which route audit logs event into multiple topics (fetch, produce, dlq) |

| data-lineage-api | Data Lineage API | 8080 | A Kafka Streams application which aggregate events and expose a lineage graph API. You can see the swagger contract at http://localhost:8080/swagger-ui.html |

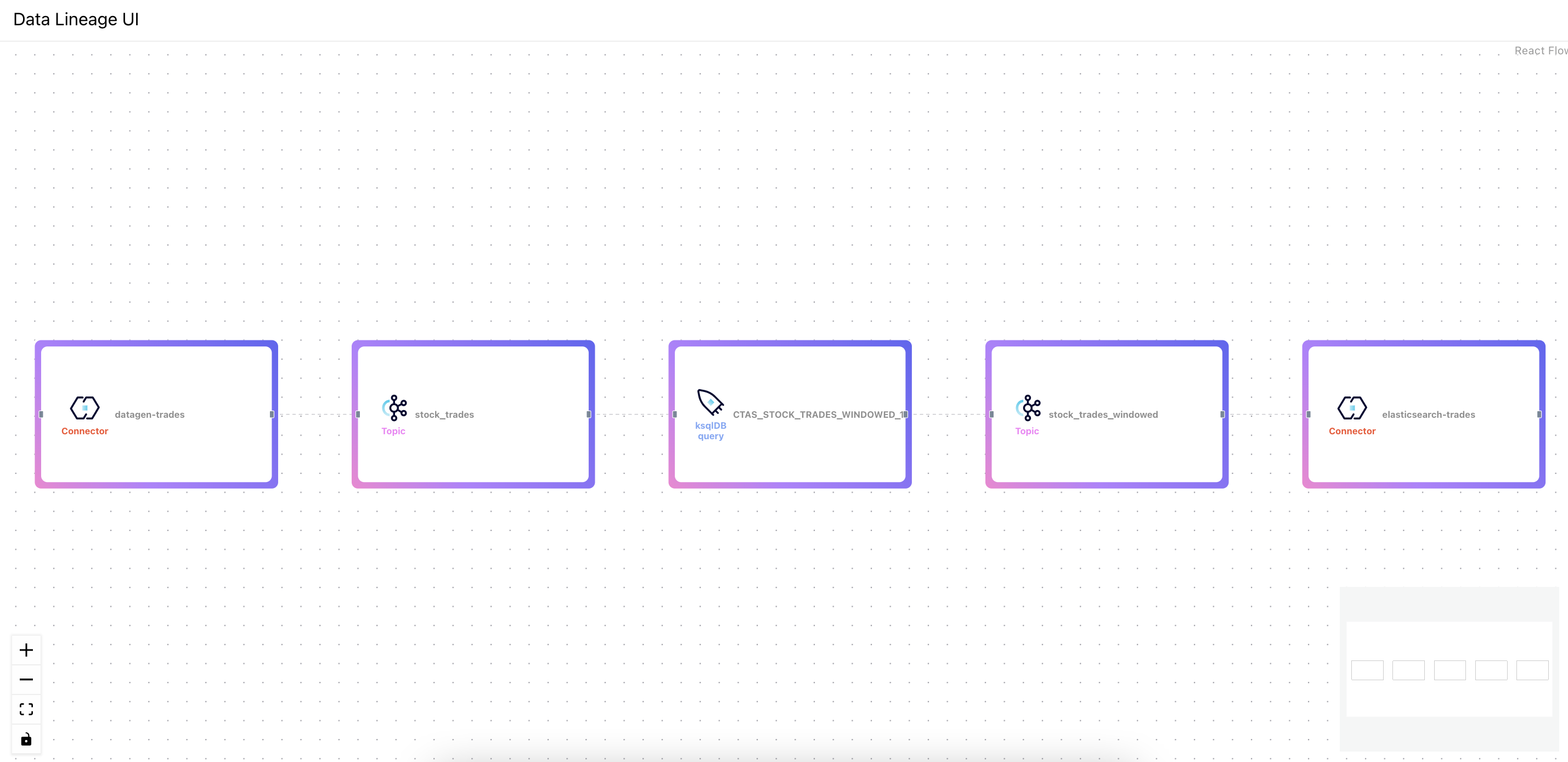

| data-lineage-ui | Data Lineage UI | 80 | A simple React UI which materalize the lineage graph with react-flow package |
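The forwarder and API rows describe a two-step flow: classify each audit log event into a topic (produce, fetch or dlq), then aggregate the classified events into graph edges. The bash sketch below only illustrates that idea; it is not the project's Kafka Streams code. It assumes each event has already been reduced to three fields (`methodName`, principal, topic), and that produce and fetch events carry the method names `kafka.Produce` and `kafka.FetchConsumer`. The functions `route_event`, `lineage_edge` and `build_graph`, the `->` edge format and the sample principals are all hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical sketch, NOT the project's Kafka Streams code.
# Assumes each audit log event was already reduced to: methodName principal topic,
# and that produce/fetch events use the methodNames matched below.

# Pick the target topic (produce, fetch or dlq) for one event.
route_event() {
  case "$1" in
    kafka.Produce) echo "produce" ;;
    kafka.FetchConsumer) echo "fetch" ;;
    *) echo "dlq" ;;  # anything unexpected goes to the dead letter queue
  esac
}

# Turn one routed event into a directed lineage edge.
lineage_edge() {
  local method_name="$1" principal="$2" topic="$3"
  case "$(route_event "$method_name")" in
    produce) echo "$principal -> $topic" ;;  # producer writes into the topic
    fetch) echo "$topic -> $principal" ;;    # consumer reads from the topic
    *) return 1 ;;                           # dlq events yield no edge
  esac
}

# Aggregate events (one "methodName principal topic" per line on stdin) into unique edges.
build_graph() {
  local method principal topic
  while read -r method principal topic; do
    lineage_edge "$method" "$principal" "$topic" || true
  done | LC_ALL=C sort -u  # C locale keeps the ordering deterministic
}
```

For example, piping the lines `kafka.Produce User:datagen trades` (twice) and `kafka.FetchConsumer User:connect trades` into `build_graph` prints two edges, `User:datagen -> trades` and `trades -> User:connect`: repeated events collapse into a single edge, which is the point of aggregating before serving the graph.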

When the script finishes, you should see this line in the logs:

    [...]
    🚀 All the stack is running, feel free to go on http://localhost:80. Enjoy your visualization ! 🎉
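If you would rather script the wait than watch the logs, a minimal sketch is to poll the UI port until it answers. The `wait_for_url` helper below is hypothetical (it does not ship with the project); it assumes `curl` is installed, and the default attempt count and delay are illustrative values, not tuned for this stack.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of the project): poll a URL until it answers.
# Assumes curl is installed; attempts and delay defaults are illustrative.

wait_for_url() {
  local url="$1" attempts="${2:-60}" delay="${3:-5}"
  local i
  for ((i = 1; i <= attempts; i++)); do
    # -s: silent, -f: fail on HTTP errors (>= 400), --max-time: don't hang on a stuck port
    if curl -sf --max-time 5 -o /dev/null "$url"; then
      return 0
    fi
    sleep "$delay"
  done
  return 1  # gave up after all attempts
}
```

A typical call would be `wait_for_url http://localhost:80 && echo "Data Lineage UI is up"`, which returns as soon as the UI responds, or fails after about five minutes with the defaults.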

Open http://localhost to view your data lineage dashboard.

If you delete the Elasticsearch sink connector, you should see the dashboard update immediately:

    curl -X DELETE http://localhost:8083/connectors/elasticsearch-trades

To stop the stack, run:

    ./stop.sh

Planned improvements:

- Manage Kafka Streams applications

- Manage inactive producers

- Add metadata to each node (user, schemas, throughput, etc.)