- Bi-Level Optimization (BLO) originated in economic game theory and was later introduced into the optimization community. BLO handles problems with a hierarchical structure involving two levels of optimization tasks, where one task (the lower level) is nested inside the other (the upper level).

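The standard BLO problem can be formally expressed as

$$\min_{\mathbf{x}\in\mathcal{X},\,\mathbf{y}\in\mathbb{R}^m} F(\mathbf{x},\mathbf{y}) \quad \text{s.t.} \quad \mathbf{y}\in\mathcal{S}(\mathbf{x}) := \arg\min_{\mathbf{y}} f(\mathbf{x},\mathbf{y}),$$

where $F$ and $f$ are the upper-level (leader) and lower-level (follower) objectives, $\mathbf{x}$ is the upper-level variable, and $\mathcal{S}(\mathbf{x})$ is the solution set of the lower-level problem for a fixed $\mathbf{x}$.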
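To make the nested structure concrete, here is a minimal Python sketch on a hypothetical toy problem of our own (it is not taken from the survey): the upper-level objective is $F(x, y) = (y - 1)^2 + 0.25x^2$ and the lower-level objective is $f(x, y) = y^2 - xy$, whose unique minimizer is $y = x/2$. The leader scans a grid of $x$ values and, for every candidate, the follower re-solves its own problem by gradient descent. Grid range, step sizes and function names are illustrative assumptions.

```python
# Brute-force solver for a hypothetical toy BLO instance (illustration only):
#   upper level: F(x, y) = (y - 1)^2 + 0.25 * x^2
#   lower level: f(x, y) = y^2 - x * y, whose unique minimizer is y = x / 2


def upper_objective(x: float, y: float) -> float:
    return (y - 1.0) ** 2 + 0.25 * x ** 2


def lower_grad_y(x: float, y: float) -> float:
    # Partial derivative of f(x, y) = y^2 - x * y with respect to y.
    return 2.0 * y - x


def solve_lower_level(x: float, steps: int = 60, lr: float = 0.25) -> float:
    # Follower: gradient descent on f(x, .) with the leader's decision x fixed.
    y = 0.0
    for _ in range(steps):
        y -= lr * lower_grad_y(x, y)
    return y


def solve_upper_level(x_min: float = -3.0, x_max: float = 3.0, n: int = 601):
    # Leader: evaluate F(x, y(x)) on a grid, re-solving the lower level for every x.
    best = None
    for i in range(n):
        x = x_min + i * (x_max - x_min) / (n - 1)
        y = solve_lower_level(x)
        value = upper_objective(x, y)
        if best is None or value < best[2]:
            best = (x, y, value)
    return best


if __name__ == "__main__":
    x_star, y_star, f_star = solve_upper_level()
    print(f"x = {x_star:.3f}, y = {y_star:.3f}, F = {f_star:.4f}")
```

Substituting $y = x/2$ into $F$ gives $(x/2 - 1)^2 + 0.25x^2$, which is minimized at $x = 1$, so the search should return $x \approx 1$, $y \approx 0.5$. Re-solving the lower level for every upper-level candidate is what makes such naive nested schemes expensive, and it is one motivation for gradient-based BLO methods.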

- In machine learning and computer vision, many complex problems, such as hyper-parameter optimization, multi-task and meta learning, neural architecture search, adversarial learning, and deep reinforcement learning, differ in motivation and mechanism, yet they all contain a series of closely related subproblems.

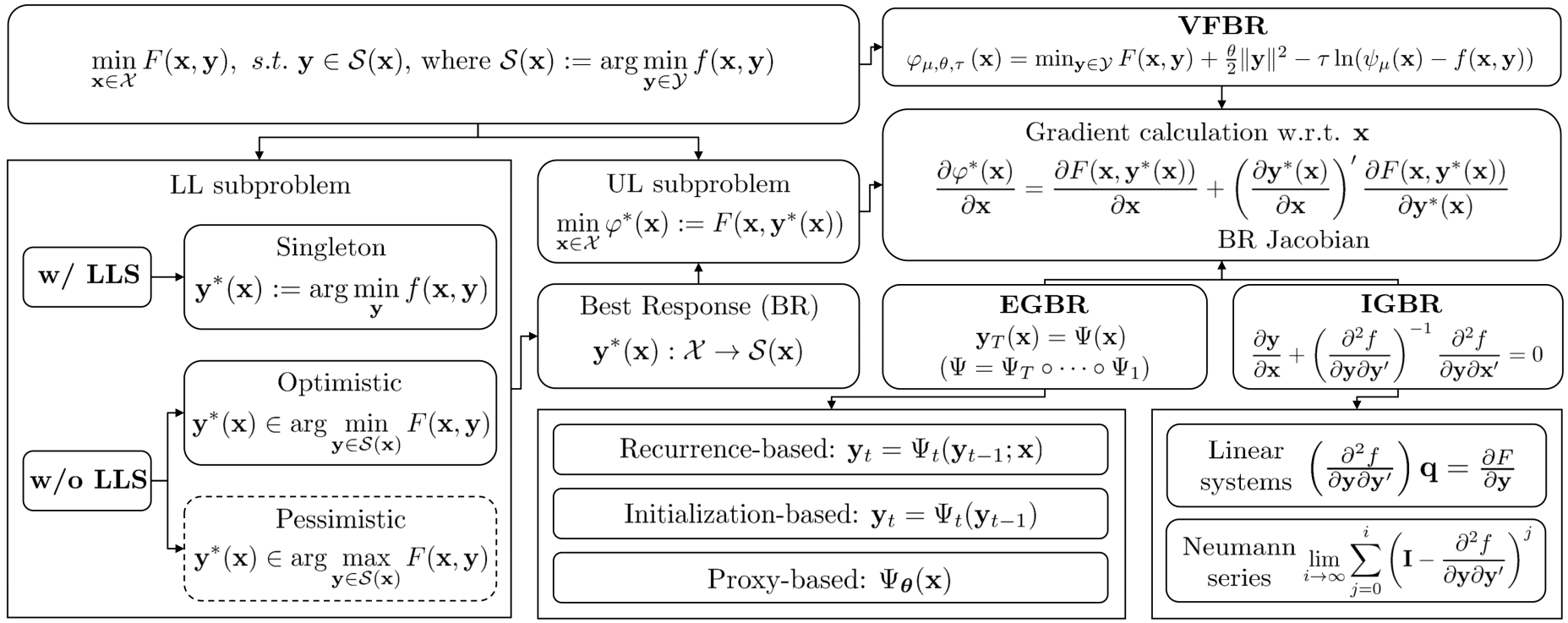
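Hyper-parameter optimization, the first problem in the list above, fits the BLO template directly. The sketch below is a hypothetical example with synthetic data (not one from the survey): the lower level fits ridge-regression weights $w$ on training data for a given regularization weight $\lambda$, and the upper level measures the validation error of those weights. Because the lower level has a closed-form solution, the hypergradient $dL_{val}/d\lambda$ follows from differentiating the optimality condition $(X_{tr}^\top X_{tr} + \lambda I)\,w = X_{tr}^\top y_{tr}$. Data sizes, noise level, step size and function names are our own assumptions.

```python
import numpy as np


def make_data(seed: int = 0, n_train: int = 40, n_val: int = 40, dim: int = 5):
    # Synthetic linear-regression data (hypothetical, for illustration only).
    rng = np.random.default_rng(seed)
    w_true = rng.normal(size=dim)
    X_tr = rng.normal(size=(n_train, dim))
    X_val = rng.normal(size=(n_val, dim))
    y_tr = X_tr @ w_true + 0.5 * rng.normal(size=n_train)
    y_val = X_val @ w_true + 0.5 * rng.normal(size=n_val)
    return X_tr, y_tr, X_val, y_val


def best_response(lam, X_tr, y_tr):
    # Lower level: w(lam) = argmin_w ||X_tr w - y_tr||^2 + lam ||w||^2, in closed form.
    A = X_tr.T @ X_tr + lam * np.eye(X_tr.shape[1])
    return np.linalg.solve(A, X_tr.T @ y_tr), A


def val_loss_and_hypergrad(lam, X_tr, y_tr, X_val, y_val):
    # Upper level: mean squared validation error of the best response w(lam).
    w, A = best_response(lam, X_tr, y_tr)
    residual = X_val @ w - y_val
    loss = float(residual @ residual) / len(y_val)
    grad_w = 2.0 * X_val.T @ residual / len(y_val)
    # Differentiating A(lam) w(lam) = X_tr^T y_tr gives w + A dw/dlam = 0.
    dw_dlam = -np.linalg.solve(A, w)
    return loss, float(grad_w @ dw_dlam)


if __name__ == "__main__":
    data = make_data()
    log_lam = 0.0  # optimize log(lam) so that lam stays positive
    for _ in range(20):
        lam = np.exp(log_lam)
        _, g = val_loss_and_hypergrad(lam, *data)
        log_lam -= 0.5 * lam * g  # chain rule: dL/dlog(lam) = lam * dL/dlam
    final_loss, _ = val_loss_and_hypergrad(np.exp(log_lam), *data)
    print(f"lambda = {np.exp(log_lam):.4f}, validation MSE = {final_loss:.4f}")
```

Optimizing $\log\lambda$ instead of $\lambda$ is a design choice that keeps the regularization weight positive without a projection step; a central finite difference on `val_loss_and_hypergrad` is a quick way to check the analytic hypergradient.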

In our recent survey, "Investigating Bi-Level Optimization for Learning and Vision from a Unified Perspective: A Survey and Beyond", published in IEEE TPAMI, we formulate these complex learning and vision problems uniformly from the perspective of BLO. We also construct a best-response-based single-level reformulation and establish a unified algorithmic framework for understanding and formulating mainstream gradient-based BLO methodologies, covering topics that range from fundamental automatic differentiation schemes to various accelerations, simplifications, and extensions, together with their convergence and complexity properties. We summarize mainstream gradient-based BLO methods and illustrate their intrinsic relationships within this general algorithmic platform. We also discuss the potential of our unified BLO framework for designing new algorithms and point out some promising directions for future research.
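In a best-response-based single-level reformulation, the lower-level problem is replaced by an approximate best response $y_T(x)$, obtained here by running $T$ inner gradient steps, and the leader minimizes $\varphi(x) = F(x, y_T(x))$. The following minimal sketch computes $d\varphi/dx$ by reverse-mode differentiation through the unrolled inner loop, which is what we take the RAD tag in the BOML algorithm list to denote; the optional `K` argument backpropagates through only the last `K` steps, in the spirit of truncated back-propagation (T-RAD). The scalar toy problem ($F(x, y) = (y-1)^2 + 0.25x^2$, $f(x, y) = y^2 - xy$), the step size `ALPHA` and all names are hypothetical; this is our illustration, not code from the survey or from BOML.

```python
from typing import List, Optional

# Hypothetical scalar toy problem (illustration only):
#   F(x, y) = (y - 1)^2 + 0.25 * x^2,   f(x, y) = y^2 - x * y
# Inner dynamics: y_{t+1} = y_t - ALPHA * df/dy(x, y_t) = (1 - 2 ALPHA) y_t + ALPHA x
ALPHA = 0.25  # inner step size (assumed)


def inner_trajectory(x: float, T: int, y0: float = 0.0) -> List[float]:
    # Unrolled lower-level gradient descent; reverse-mode schemes store this trajectory.
    ys = [y0]
    for _ in range(T):
        y = ys[-1]
        ys.append(y - ALPHA * (2.0 * y - x))
    return ys


def hypergradient(x: float, T: int = 30, K: Optional[int] = None) -> float:
    """d/dx F(x, y_T(x)) by reverse-mode differentiation through the inner steps.

    K=None backpropagates through all T steps; an integer K keeps only the
    last K steps (a truncated estimate in the spirit of T-RAD).
    """
    ys = inner_trajectory(x, T)
    y_bar = 2.0 * (ys[-1] - 1.0)  # dF/dy at (x, y_T)
    x_bar = 0.5 * x               # direct dependence: dF/dx at (x, y_T)
    steps = T if K is None else min(K, T)
    for _ in range(steps):
        # Step Jacobians: dy_{t+1}/dx = ALPHA, dy_{t+1}/dy_t = 1 - 2 ALPHA.
        # They are constant for this quadratic f; for a general f they would be
        # evaluated at the stored iterates ys[t].
        x_bar += ALPHA * y_bar
        y_bar *= 1.0 - 2.0 * ALPHA
    return x_bar


if __name__ == "__main__":
    x = 3.0
    for _ in range(100):
        x -= 0.5 * hypergradient(x)  # outer gradient descent on phi(x)
    print(f"x after 100 outer steps: {x:.4f}")
    print("full unroll at x=3:", hypergradient(3.0))
    print("truncated, K=3, at x=3:", hypergradient(3.0, K=3))
```

For this quadratic toy the full unroll gives a hypergradient of about 2.0 at $x = 3$, while truncating to $K = 3$ steps gives about 1.9375, a cheaper but biased estimate. Outer gradient descent with the full hypergradient drives $x$ to the analytic optimum $x = 1$.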

- On this website, we first summarize our related progress and point to existing works for a quick overview of the current state of research. Furthermore, we provide a list of the important papers discussed in the survey, their corresponding code, and additional resources on BLO. We will continue to maintain this website to promote research in the BLO field.

- Risheng Liu, Jiaxin Gao, Jin Zhang, Deyu Meng, Zhouchen Lin. Investigating Bi-Level Optimization for Learning and Vision from a Unified Perspective: A Survey and Beyond. IEEE TPAMI 2021. [Paper] [Project Page]

- Risheng Liu, Zi Li, Xin Fan, Chenying Zhao, Hao Huang, Zhongxuan Luo. Learning Deformable Image Registration from Optimization: Perspective, Modules, Bilevel Training and Beyond. IEEE TPAMI 2021. [Paper]

- Risheng Liu, Long Ma, Jiaao Zhang, Xin Fan, Zhongxuan Luo. Retinex-Inspired Unrolling With Cooperative Prior Architecture Search for Low-Light Image Enhancement. CVPR 2021. [Paper] [Project Page]

- Risheng Liu, Yaohua Liu, Shangzhi Zeng, Jin Zhang. Towards Gradient-based Bilevel Optimization with Non-convex Followers and Beyond. NeurIPS 2021 (Spotlight, Acceptance Rate ≤ 3%). [Paper] [Code]

- Pan Mu, Zhu Liu, Yaohua Liu, Risheng Liu, Xin Fan. Triple-level Model Inferred Collaborative Network Architecture for Video Deraining. IEEE TIP 2021. [Paper] [Code]

- Risheng Liu, Zhu Liu, Jinyuan Liu, Xin Fan. Searching a Hierarchically Aggregated Fusion Architecture for Fast Multi-Modality Image Fusion. ACM MM 2021. [Paper] [Code]

- Dian Jin, Long Ma, Risheng Liu, Xin Fan. Bridging the Gap between Low-Light Scenes: Bilevel Learning for Fast Adaptation. ACM MM 2021. [Paper]

- Risheng Liu, Xuan Liu, Xiaoming Yuan, Shangzhi Zeng, Jin Zhang. A Value Function-based Interior-point Method for Non-convex Bilevel Optimization. ICML 2021. [Paper] [Code]

- Yaohua Liu, Risheng Liu. BOML: A Modularized Bilevel Optimization Library in Python for Meta-learning. ICME 2021. [Paper] [Code]

- Risheng Liu, Pan Mu, Xiaoming Yuan, Shangzhi Zeng, Jin Zhang. A Generic First-Order Algorithmic Framework for Bi-Level Programming Beyond Lower-Level Singleton. ICML 2020. [Paper] [Code]

- Risheng Liu, Pan Mu, Jian Chen, Xin Fan, Zhongxuan Luo. Investigating Task-driven Latent Feasibility for Nonconvex Image Modeling. IEEE TIP 2020. [Code]

- Risheng Liu, Zi Li, Yuxi Zhang, Xin Fan, Zhongxuan Luo. Bi-level Probabilistic Feature Learning for Deformable Image Registration. IJCAI 2020. [Paper] [Code]

We previously released BOML, a modularized TensorFlow-based optimization library that unifies several ML algorithms into a common bilevel optimization framework. We have now integrated more recently proposed algorithms and more compatible applications, and released a PyTorch version.

- Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks (MAML)

- On First-Order Meta-Learning Algorithms (FMAML)

- Meta-SGD: Learning to Learn Quickly for Few-Shot Learning (Meta-SGD)

- Learning to Forget for Meta-Learning (L2F)

- Bilevel Programming for Hyperparameter Optimization and Meta-Learning (RAD)

- Truncated Back-propagation for Bilevel Optimization (T-RAD)

- Gradient-Based Meta-Learning with Learned Layerwise Metric and Subspace (MT-Net)

- Meta-Learning with Warped Gradient Descent (WarpGrad)

- DARTS: Differentiable Architecture Search (OS-RAD)

- A Generic First-Order Algorithmic Framework for Bi-Level Programming Beyond Lower-Level Singleton (BDA)

- Meta-Learning with Implicit Gradients (LS)

- Optimizing Millions of Hyperparameters by Implicit Differentiation (NS)

- Towards Gradient-based Bilevel Optimization with Non-convex Followers and Beyond (IAPTT-GM)
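Two of the methods above, tagged LS and NS, compute hypergradients implicitly from the lower-level optimality condition instead of unrolling the inner loop; we read the tags as "linear system" and "Neumann series". The sketch below contrasts the two on a hypothetical quadratic lower level $f(x, y) = \tfrac12 y^\top H y - x^\top y$ (so $y^*(x) = H^{-1}x$) with upper level $F(x, y) = \tfrac12\lVert y - \text{target}\rVert^2$. LS solves $Hv = \partial F/\partial y$ exactly (`np.linalg.solve` stands in for the iterative solver, such as conjugate gradient, that such methods typically use), and NS approximates $H^{-1}$ by the truncated series $\alpha\sum_{k<K}(I - \alpha H)^k$. Problem size, seed and names are our assumptions; this is an illustration, not BOML's implementation.

```python
import numpy as np


def make_problem(dim: int = 4, seed: int = 0):
    # Hypothetical quadratic lower level f(x, y) = 0.5 y^T H y - x^T y, so y*(x) = H^{-1} x,
    # and upper level F(x, y) = 0.5 ||y - target||^2.
    rng = np.random.default_rng(seed)
    M = rng.normal(size=(dim, dim))
    H = M @ M.T / dim + np.eye(dim)  # symmetric positive definite
    target = rng.normal(size=dim)
    return H, target


def step_size(H):
    # alpha = 1 / lambda_max(H) keeps the eigenvalues of I - alpha H in [0, 1).
    return 1.0 / np.linalg.eigvalsh(H).max()


def lower_solution(H, x, steps: int = 200):
    # Approximate the lower-level solution by gradient descent on f(x, .).
    alpha = step_size(H)
    y = np.zeros_like(x)
    for _ in range(steps):
        y = y - alpha * (H @ y - x)
    return y


def hypergrad_ls(H, target, x):
    # Implicit function theorem: dF/dx = -(d2f/dxdy) [d2f/dy2]^{-1} dF/dy, with no
    # direct dF/dx term; here d2f/dxdy = -I and d2f/dy2 = H.
    # np.linalg.solve stands in for an iterative (e.g. conjugate-gradient) solver.
    y = lower_solution(H, x)
    return np.linalg.solve(H, y - target)


def hypergrad_ns(H, target, x, K: int = 10):
    # Replace H^{-1} g by the truncated Neumann series alpha * sum_{k<K} (I - alpha H)^k g.
    alpha = step_size(H)
    g = lower_solution(H, x) - target  # dF/dy at the approximate lower-level solution
    term = g.copy()
    total = g.copy()
    for _ in range(K - 1):
        term = term - alpha * (H @ term)
        total += term
    return alpha * total


if __name__ == "__main__":
    H, target = make_problem()
    x = np.ones(4)
    exact = np.linalg.solve(H, np.linalg.solve(H, x) - target)
    print("LS error:", np.linalg.norm(hypergrad_ls(H, target, x) - exact))
    for K in (1, 5, 20, 50):
        err = np.linalg.norm(hypergrad_ns(H, target, x, K) - exact)
        print(f"NS error with K={K}:", err)
```

Because the eigenvalues of $I - \alpha H$ lie in $[0, 1)$ for $\alpha = 1/\lambda_{\max}(H)$, the NS error shrinks as the number of terms $K$ grows, while LS matches the analytic hypergradient up to the accuracy of the inner solve. The trade-off is cost: NS needs only Hessian-vector products, whereas an exact linear solve becomes expensive in high dimensions.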

- Luca Franceschi, Michele Donini, Paolo Frasconi, Massimiliano Pontil. Forward and Reverse Gradient-Based Hyperparameter Optimization. ICML 2017.

- Amirreza Shaban, Ching-An Cheng, Nathan Hatch, Byron Boots. Truncated Back-propagation for Bilevel Optimization. AISTATS 2019.

- Hanxiao Liu, Karen Simonyan, Yiming Yang. DARTS: Differentiable Architecture Search. ICLR 2019.

- Chelsea Finn, Pieter Abbeel, Sergey Levine. Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks. ICML 2017.

- Alex Nichol, Joshua Achiam, John Schulman. On First-Order Meta-Learning Algorithms. arXiv 2018.

- Sebastian Flennerhag, Andrei A. Rusu, Razvan Pascanu, Francesco Visin, Hujun Yin, Raia Hadsell. Meta-Learning with Warped Gradient Descent. ICLR 2020.

- Eunbyung Park, Junier B. Oliva. Meta-Curvature. NeurIPS 2019.

- Yoonho Lee, Seungjin Choi. Meta-Learning with Adaptive Layerwise Metric and Subspace. arXiv 2018.

- Matthew MacKay, Paul Vicol, Jon Lorraine, David Duvenaud, Roger Grosse. Self-tuning Networks: Bilevel Optimization of Hyperparameters Using Structured Best-response Functions. ICLR 2019.

- Jonathan Lorraine, David Duvenaud. Stochastic Hyperparameter Optimization through Hypernetworks. arXiv 2018.

- Fabian Pedregosa. Hyperparameter Optimization with Approximate Gradient. ICML 2016.

- Aravind Rajeswaran, Chelsea Finn, Sham Kakade, Sergey Levine. Meta-Learning with Implicit Gradients. NeurIPS 2019.

- Jonathan Lorraine, Paul Vicol, David Duvenaud. Optimizing Millions of Hyperparameters by Implicit Differentiation. AISTATS 2020.

- Dougal Maclaurin, David Duvenaud, Ryan P. Adams. Gradient-Based Hyperparameter Optimization Through Reversible Learning. ICML 2015.

- Takayuki Okuno, Akiko Takeda, Akihiro Kawana. Hyperparameter Learning via Bilevel Nonsmooth Optimization. arXiv 2018.

- Ankur Sinha, Tanmay Khandait, Raja Mohanty. A Gradient-based Bilevel Optimization Approach for Tuning Hyperparameters in Machine Learning. arXiv 2020.

- Luca Franceschi, Paolo Frasconi, Michele Donini, Massimiliano Pontil. A Bridge Between Hyperparameter Optimization and Learning-to-learn. NeurIPS 2017.

- Luca Franceschi, Paolo Frasconi, Saverio Salzo, Riccardo Grazzi, Massimiliano Pontil. Bilevel Programming for Hyperparameter Optimization and Meta-learning. ICML 2018.

- Luca Bertinetto, João F. Henriques, Philip H.S. Torr, Andrea Vedaldi. Meta-learning with Differentiable Closed-form Solvers. ICLR 2019.

- Alex Nichol, John Schulman. Reptile: A Scalable Metalearning Algorithm.

- Francesco Alesiani, Shujian Yu, Ammar Shaker, Wenzhe Yin. Towards Interpretable Multi-Task Learning Using Bilevel Programming. ECML PKDD 2020.

- Yibo Hu, Xiang Wu, Ran He. TF-NAS: Rethinking Three Search Freedoms of Latency-constrained Differentiable Neural Architecture Search. ECCV 2020.

- Dongze Lian, Yin Zheng, Yintao Xu, Yanxiong Lu, Leyu Lin, Peilin Zhao, Junzhou Huang, Shenghua Gao. Towards Fast Adaptation of Neural Architectures with Meta-learning. ICLR 2019.

- Yuhui Xu, Lingxi Xie, Xiaopeng Zhang, Xin Chen, Guo-Jun Qi, Qi Tian, Hongkai Xiong. PC-DARTS: Partial Channel Connections for Memory-Efficient Architecture Search. ICLR 2020.

- Yi Li, Lingxiao Song, Xiang Wu, Ran He, Tieniu Tan. Learning a Bi-level Adversarial Network with Global and Local Perception for Makeup-invariant Face Verification. Pattern Recognition 2019.

- Haoming Jiang, Zhehui Chen, Yuyang Shi, Bo Dai, Tuo Zhao. Learning to defense by learning to attack. ICLR 2019.

- Yuesong Tian, Li Shen, Li Shen, Guinan Su, Zhifeng Li, Wei Liu. AlphaGAN: Fully Differentiable Architecture Search for Generative Adversarial Networks. CVPR 2020.

- Haifeng Zhang, Weizhe Chen, Zeren Huang, Minne Li, Yaodong Yang, Weinan Zhang, Jun Wang. Bi-level Actor-critic for Multi-agent Coordination. AAAI 2020.

- David Pfau, Oriol Vinyals. Connecting Generative Adversarial Networks and Actor-critic Methods. NeurIPS 2016.

- Zhuoran Yang, Yongxin Chen, Mingyi Hong, Zhaoran Wang. Provably Global Convergence of Actor-critic: A Case for Linear Quadratic Regulator with Ergodic Cost. NeurIPS 2019.

- Zhangyu Chen, Dong Liu, Xiaofei Wu, Xiaochuan Xu. Research on Distributed Renewable Energy Transaction Decision-making based on Multi-Agent Bilevel Cooperative Reinforcement Learning. CIRED 2019.

- Ying Liu, Wenhong Cai, Xiaohui Yuan, Jinhai Xiang. [GL-GAN: Adaptive Global and Local Bilevel Optimization Model of Image Generation](https://arxiv.org/abs/2008.02436). arXiv 2020.

- Jiachen Yang, Igor Borovikov, Hongyuan Zha. Hierarchical Cooperative Multi-agent Reinforcement Learning with Skill Discovery. AAMAS 2020.

- Xuechun Wang, Hongkun Chen, Jun Wu, Yurong Ding, Qinghui Lou, Shuwei Liu. Bi-level Multi-agents Interactive Decision-making Model in Regional Integrated Energy System. Energy Internet and Energy System Integration 2019.

- Faraz Torabi, Garrett Warnell, Peter Stone. Generative Adversarial Imitation from Observation. arXiv 2018.

- Yunzhu Li, Jiaming Song, Stefano Ermon. InfoGAIL: Interpretable Imitation Learning from Visual Demonstrations. NeurIPS 2017.

- Sanjeev Arora, Simon S. Du, Sham Kakade, Yuping Luo, Nikunj Saunshi. Provable Representation Learning for Imitation Learning via Bi-level Optimization. arXiv 2020.

- Basura Fernando, Stephen Gould. Learning End-to-end Video Classification with Rank-pooling. ICML 2016.

If this paper is helpful for your research, please cite our paper:

@article{liu2021investigating,

title={Investigating bi-level optimization for learning and vision from a unified perspective: A survey and beyond},

author={Liu, Risheng and Gao, Jiaxin and Zhang, Jin and Meng, Deyu and Lin, Zhouchen},

journal={arXiv preprint arXiv:2101.11517},

year={2021}

}