d3rlpy is an out-of-the-box library for data-driven deep reinforcement learning.

from d3rlpy.dataset import MDPDataset

from d3rlpy.algos import CQL

# MDPDataset takes arrays of state transitions

dataset = MDPDataset(observations, actions, rewards, terminals)

# train data-driven deep RL

cql = CQL()

cql.fit(dataset.episodes)

# ready to control

actions = cql.predict(x)

Documentation: https://d3rlpy.readthedocs.io

d3rlpy is designed for data-driven deep reinforcement learning, where an algorithm learns a good policy from a given dataset alone. This suits tasks where online interaction is not feasible. d3rlpy also supports the conventional online training paradigm, so it fits either setting.

d3rlpy provides state-of-the-art algorithms through scikit-learn style APIs without compromising flexibility: detailed configurations remain available for professional users. Moreover, d3rlpy is not just designed like scikit-learn, but is also fully compatible with scikit-learn utilities.

d3rlpy provides further tweaks to improve the performance of state-of-the-art algorithms, potentially beyond their original papers. As a result, d3rlpy lets every user achieve professional-level performance in just a few lines of code.

$ pip install d3rlpy

| algorithm | discrete control | continuous control | data-driven RL? |
|---|---|---|---|
| Behavior Cloning (supervised learning) | ✅ | ✅ | |
| Deep Q-Network (DQN) | ✅ | ⛔ | |
| Double DQN | ✅ | ⛔ | |
| Deep Deterministic Policy Gradients (DDPG) | ⛔ | ✅ | |
| Twin Delayed Deep Deterministic Policy Gradients (TD3) | ⛔ | ✅ | |
| Soft Actor-Critic (SAC) | ✅ | ✅ | |
| Random Ensemble Mixture (REM) | 🚧 | ⛔ | ✅ |
| Batch Constrained Q-learning (BCQ) | ✅ | ✅ | ✅ |
| Bootstrapping Error Accumulation Reduction (BEAR) | ⛔ | ✅ | ✅ |
| Advantage-Weighted Regression (AWR) | ✅ | ✅ | ✅ |
| Advantage-weighted Behavior Model (ABM) | 🚧 | 🚧 | ✅ |
| Conservative Q-Learning (CQL) (recommended) | ✅ | ✅ | ✅ |
| Advantage Weighted Actor-Critic (AWAC) | ⛔ | ✅ | ✅ |

- standard Q function

- Quantile Regression

- Implicit Quantile Network

- Fully parametrized Quantile Function (experimental)
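To make the quantile regression option more concrete, here is a minimal pure-Python sketch of the quantile Huber loss commonly used in quantile-regression Q-learning. It is an illustration under stated assumptions, not d3rlpy's implementation: the function names `huber` and `quantile_huber_loss` are hypothetical, the quantile fractions are assumed to be evenly spaced midpoints, and `kappa` is the Huber threshold.

```python
def huber(u, kappa=1.0):
    """Huber loss: quadratic near zero, linear beyond kappa."""
    a = abs(u)
    return 0.5 * u * u if a <= kappa else kappa * (a - 0.5 * kappa)


def quantile_huber_loss(quantile_values, target_samples, kappa=1.0):
    """Sum over predicted quantiles, mean over target samples.

    Assumption: quantile fractions are the midpoints (i + 0.5) / N.
    """
    n = len(quantile_values)
    taus = [(i + 0.5) / n for i in range(n)]
    total = 0.0
    for tau, theta in zip(taus, quantile_values):
        for z in target_samples:
            u = z - theta
            # Asymmetric weight: errors on each side of the quantile
            # are penalized by tau or (1 - tau).
            weight = abs(tau - (1.0 if u < 0 else 0.0))
            total += weight * huber(u, kappa) / kappa
    return total / len(target_samples)
```

The asymmetric weight is what pushes each output toward its own quantile of the return distribution instead of toward the mean.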

Basically, all features are available with every algorithm.

- evaluation metrics in a scikit-learn scorer function style

- embedded preprocessors

- export greedy-policy as TorchScript or ONNX

- ensemble Q function with bootstrapping

- delayed policy updates

- parallel cross-validation with multiple GPUs

- online training

- data augmentation

- model-based algorithm

- user-defined custom network
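As an illustration of delayed policy updates, the sketch below updates the critic at every step and the actor only every `update_actor_interval` steps. The parameter name is borrowed from the CQL example later in this README; the function `training_schedule` is hypothetical and only records which networks would be updated, so treat it as a sketch rather than the library's training loop.

```python
def training_schedule(n_steps, update_actor_interval=2):
    """Return, per step, the names of the networks that would be updated."""
    schedule = []
    for step in range(1, n_steps + 1):
        updated = ["critic"]  # the critic is updated at every step
        if step % update_actor_interval == 0:
            updated.append("actor")  # the actor only at every k-th step
        schedule.append(updated)
    return schedule
```

Updating the actor less often lets the value estimates settle before the policy is moved toward them.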

This library is designed to feel as if it were born from scikit-learn. You can fully utilize scikit-learn's utilities to increase your productivity.

from sklearn.model_selection import train_test_split

from d3rlpy.metrics.scorer import td_error_scorer

train_episodes, test_episodes = train_test_split(dataset)

cql.fit(train_episodes,

eval_episodes=test_episodes,

scorers={'td_error': td_error_scorer})

You can naturally perform cross-validation.

from sklearn.model_selection import cross_validate

scores = cross_validate(cql, dataset, scoring={'td_error': td_error_scorer})

And more.

from sklearn.model_selection import GridSearchCV

gscv = GridSearchCV(estimator=cql,

param_grid={'actor_learning_rate': [3e-3, 3e-4, 3e-5]},

scoring={'td_error': td_error_scorer},

refit=False)

gscv.fit(train_episodes)

d3rlpy introduces MDPDataset, a convenient data structure for reinforcement learning.

MDPDataset splits sequential data into transitions, each of which holds a tuple of data observed at t and t+1. This is the form usually used for training.
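The splitting described above can be sketched in plain Python. This is a simplified illustration, not d3rlpy's implementation: the `Transition` dataclass and both helper functions are hypothetical, and the sketch assumes an episode ends at each step whose terminal flag is set. The field names follow the attribute names used in this README.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Transition:
    observation: Sequence[float]
    action: int
    next_reward: float
    next_observation: Sequence[float]
    terminal: bool


def split_into_episodes(terminals):
    """Return (start, end) index pairs; each episode ends at a terminal flag."""
    episodes, start = [], 0
    for i, done in enumerate(terminals):
        if done:
            episodes.append((start, i + 1))
            start = i + 1
    if start < len(terminals):  # trailing steps without a terminal flag
        episodes.append((start, len(terminals)))
    return episodes


def build_transitions(observations, actions, rewards, terminals):
    """Pair each step t with step t+1 inside every episode."""
    transitions = []
    for start, end in split_into_episodes(terminals):
        for t in range(start, end - 1):
            transitions.append(Transition(
                observation=observations[t],
                action=actions[t],
                next_reward=rewards[t + 1],
                next_observation=observations[t + 1],
                terminal=bool(terminals[t + 1]),
            ))
    return transitions
```

Pairs are never formed across an episode boundary, so the last step of one episode is never treated as the predecessor of the first step of the next.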

import numpy as np

from d3rlpy.dataset import MDPDataset

# offline data

observations = np.random.random((100000, 100)).astype('f4') # 100-dim feature observations

actions = np.random.random((100000, 4)) # 4-dim continuous actions

rewards = np.random.random(100000)

terminals = np.random.randint(2, size=100000)

# builds MDPDataset from offline data

dataset = MDPDataset(observations, actions, rewards, terminals)

# splits offline data into episodes

dataset.episodes[0].observations

# splits episodes into transitions

dataset.episodes[0].transitions[0].observation

dataset.episodes[0].transitions[0].action

dataset.episodes[0].transitions[0].next_reward

dataset.episodes[0].transitions[0].next_observation

dataset.episodes[0].transitions[0].terminal

TransitionMiniBatch is also a convenient class for making a mini-batch of sampled transitions. Memory copies in TransitionMiniBatch are implemented in Cython, which keeps batching fast.

from random import sample

from d3rlpy.dataset import TransitionMiniBatch

transitions = sample(dataset.episodes[0].transitions, 100)

# fast batching with memory-efficient copies

batch = TransitionMiniBatch(transitions)

batch.observations.shape == (100, 100)

One more interesting feature of the dataset structure is that each transition holds pointers to its next and previous transitions. This enables JIT frame stacking, as several works do with Atari tasks, and it is also implemented in Cython to reduce bottlenecks.
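The idea of stacking frames by following previous-transition pointers can be sketched as below. This is a hedged pure-Python illustration, not the Cython implementation: `Node`, `link_episode`, and `stack_frames` are hypothetical names, and zero-padding at the start of an episode is an assumption of this sketch.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    # Hypothetical stand-in for a transition: one frame plus a backward link.
    frame: List[int]
    prev: Optional["Node"] = None


def link_episode(frames):
    """Chain frames of one episode so each node points to its predecessor."""
    nodes, prev = [], None
    for frame in frames:
        node = Node(frame=frame, prev=prev)
        nodes.append(node)
        prev = node
    return nodes


def stack_frames(node, n_frames):
    """Return n_frames frames ending at node, oldest first.

    Missing predecessors at the episode start are zero-padded (assumption).
    """
    stacked, cur = [], node
    for _ in range(n_frames):
        if cur is None:
            stacked.append([0] * len(node.frame))
        else:
            stacked.append(cur.frame)
            cur = cur.prev
    stacked.reverse()
    return stacked
```

Because the stack is built on demand from the links, only single frames need to be stored, which is where the memory saving comes from.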

observations = np.random.randint(256, size=(100000, 1, 84, 84), dtype=np.uint8) # 1x84x84 pixel images

actions = np.random.randint(4, size=100000) # discrete actions with 4 options

rewards = np.random.random(100000)

terminals = np.random.randint(2, size=100000)

# builds MDPDataset from offline data

dataset = MDPDataset(observations, actions, rewards, terminals, discrete_action=True)

# samples transitions

transitions = sample(dataset.episodes[0].transitions, 32)

# makes mini-batch with frame stacking

batch = TransitionMiniBatch(transitions, n_frames=4)

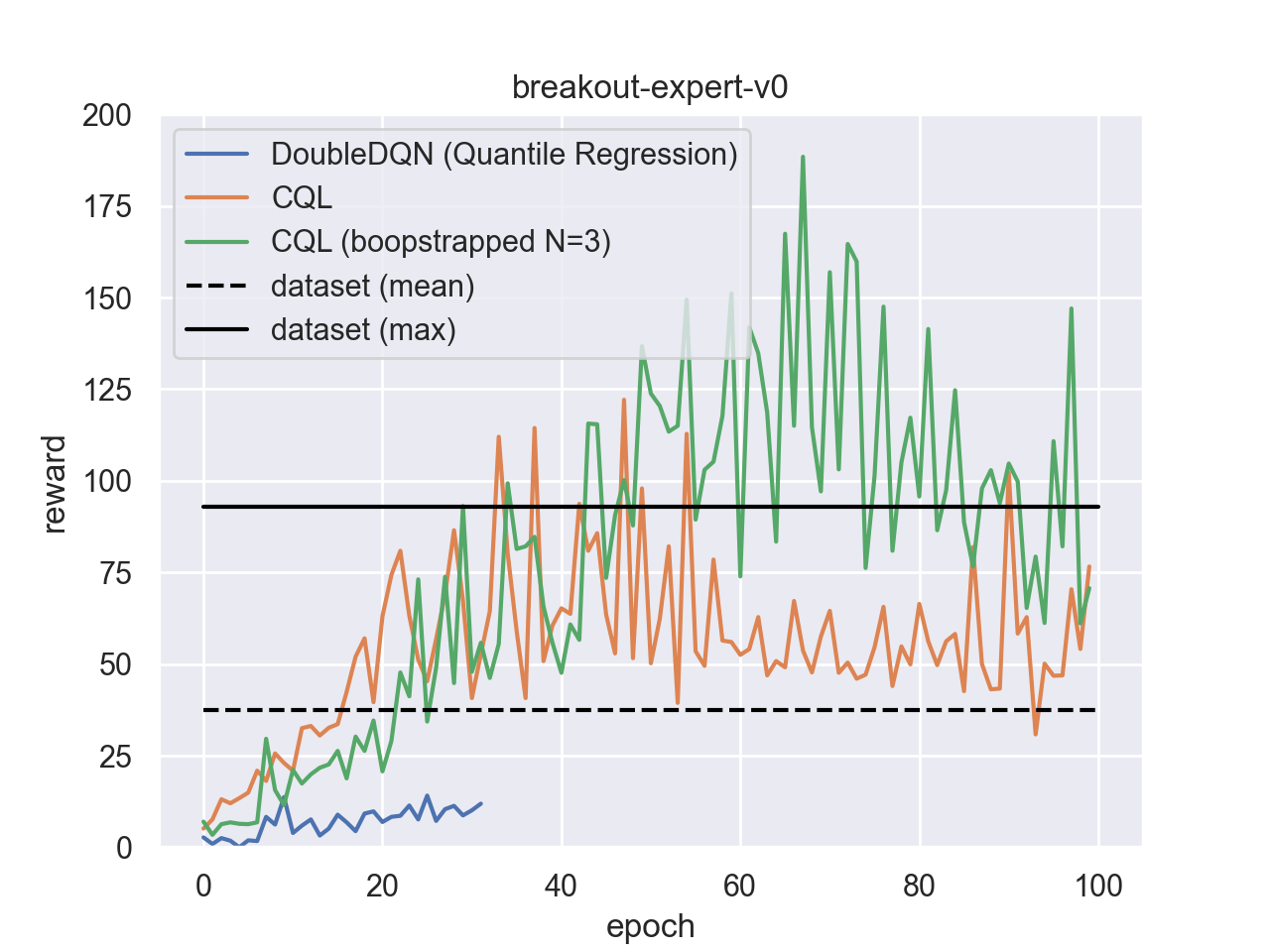

batch.observations.shape == (32, 4, 84, 84)from d3rlpy.datasets import get_atari

from d3rlpy.algos import DiscreteCQL

from d3rlpy.metrics.scorer import evaluate_on_environment

from d3rlpy.metrics.scorer import discounted_sum_of_advantage_scorer

from sklearn.model_selection import train_test_split

# get data-driven RL dataset

dataset, env = get_atari('breakout-expert-v0')

# split dataset

train_episodes, test_episodes = train_test_split(dataset, test_size=0.2)

# setup algorithm

cql = DiscreteCQL(n_frames=4,

n_critics=3,

bootstrap=True,

q_func_type='qr',

scaler='pixel',

use_gpu=True)

# start training

cql.fit(train_episodes,

eval_episodes=test_episodes,

n_epochs=100,

scorers={

'environment': evaluate_on_environment(env),

'advantage': discounted_sum_of_advantage_scorer

})

| performance | demo |
|---|---|
| | |

See more Atari datasets at d4rl-atari.

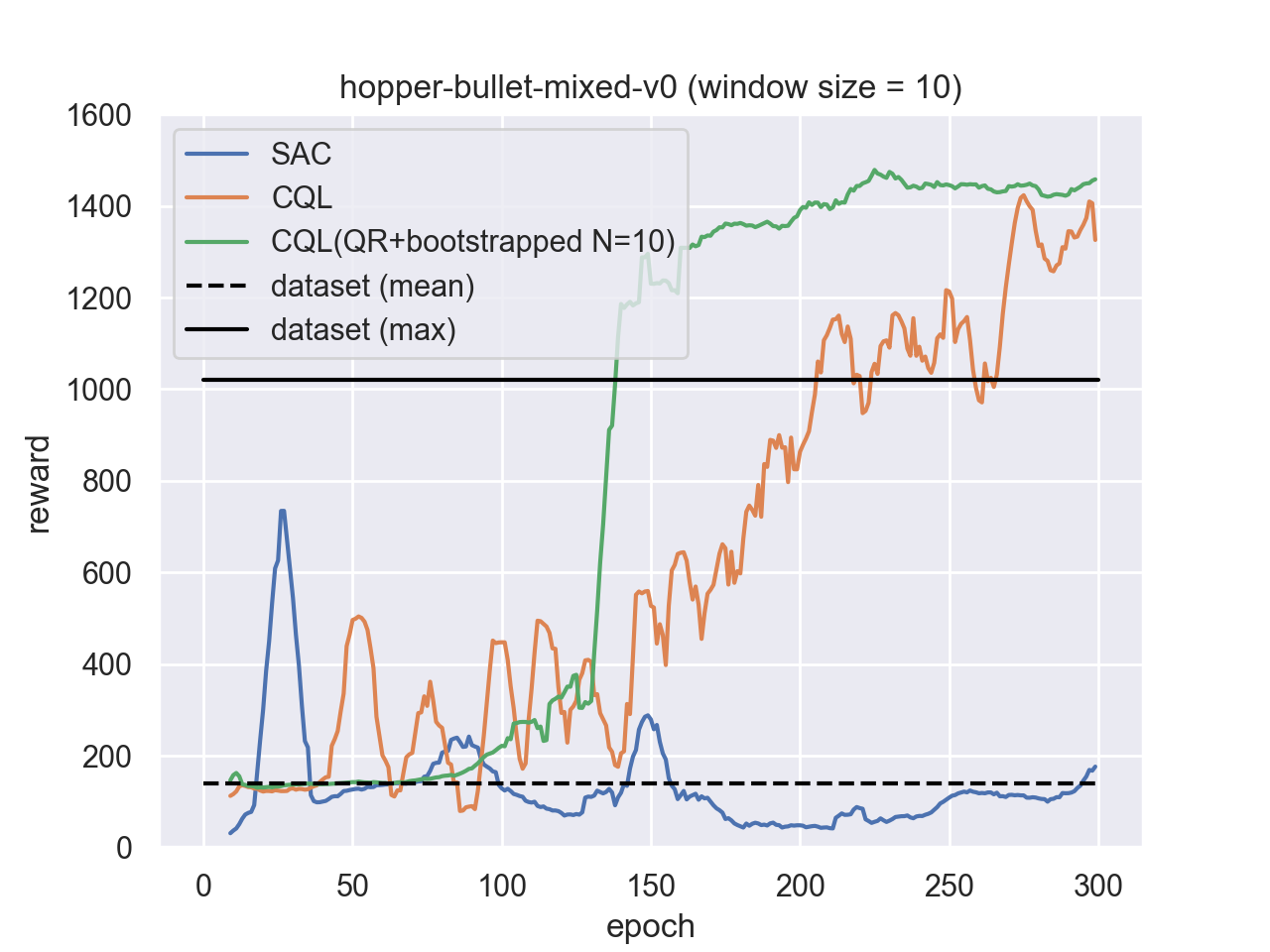

from d3rlpy.datasets import get_pybullet

from d3rlpy.algos import CQL

from d3rlpy.metrics.scorer import evaluate_on_environment

from d3rlpy.metrics.scorer import discounted_sum_of_advantage_scorer

from sklearn.model_selection import train_test_split

# get data-driven RL dataset

dataset, env = get_pybullet('hopper-bullet-mixed-v0')

# split dataset

train_episodes, test_episodes = train_test_split(dataset, test_size=0.2)

# setup algorithm

cql = CQL(actor_learning_rate=1e-3,

critic_learning_rate=1e-3,

temp_learning_rate=1e-3,

alpha_learning_rate=1e-3,

n_critics=10,

bootstrap=True,

update_actor_interval=2,

q_func_type='qr',

use_gpu=True)

# start training

cql.fit(train_episodes,

eval_episodes=test_episodes,

n_epochs=300,

scorers={

'environment': evaluate_on_environment(env),

'advantage': discounted_sum_of_advantage_scorer

})

| performance | demo |
|---|---|
| | |

See more PyBullet datasets at d4rl-pybullet.

import gym

import pybullet_envs  # registers the Bullet environments with gym

from d3rlpy.algos import SAC

from d3rlpy.online.buffers import ReplayBuffer

# setup environment

env = gym.make('HopperBulletEnv-v0')

eval_env = gym.make('HopperBulletEnv-v0')

# setup algorithm

sac = SAC(use_gpu=True)

# setup replay buffer

buffer = ReplayBuffer(maxlen=1000000, env=env)

# start training

sac.fit_online(env, buffer, n_epochs=100, eval_env=eval_env)

Try a cartpole example on Google Colaboratory!
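The replay buffer in the online example above can be thought of as a bounded first-in, first-out store of experience. The sketch below illustrates that idea only: `SimpleReplayBuffer` is a hypothetical class, not d3rlpy's `ReplayBuffer`, and its method names are assumptions made for this example.

```python
import random
from collections import deque


class SimpleReplayBuffer:
    """Bounded FIFO buffer (hypothetical stand-in for illustration)."""

    def __init__(self, maxlen):
        # deque drops the oldest item automatically once maxlen is reached
        self._storage = deque(maxlen=maxlen)

    def append(self, observation, action, reward, terminal):
        self._storage.append((observation, action, reward, terminal))

    def sample(self, batch_size, rng=random):
        """Draw batch_size distinct stored steps uniformly at random."""
        return rng.sample(list(self._storage), batch_size)

    def __len__(self):
        return len(self._storage)
```

With `maxlen=1000000`, as in the example above, the oldest experience would be discarded once a million steps have been collected.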

This library is fully formatted with yapf. You can format all scripts as follows:

$ ./scripts/format

Unit tests are provided for as much of the code as possible. This repository uses pytest-cov rather than plain pytest. You can run all tests as follows:

$ ./scripts/test

If you pass the -p option, the performance tests with toy tasks are also run (this will take minutes).

$ ./scripts/test -p

This work is supported by the Information-technology Promotion Agency, Japan (IPA), under the Exploratory IT Human Resources Project (MITOU Program) in fiscal year 2020.