Training a NN on the Iris Data Set

%matplotlib inline

import matplotlib

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

from sklearn.preprocessing import StandardScaler

from sklearn.neural_network import MLPClassifier

from sklearn.metrics import classification_report, confusion_matrix

df = pd.read_csv('data.csv', header=None)

df

| | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| 0 | 5.1 | 3.5 | 1.4 | 0.2 | Iris-setosa |
| 1 | 4.9 | 3.0 | 1.4 | 0.2 | Iris-setosa |
| 2 | 4.7 | 3.2 | 1.3 | 0.2 | Iris-setosa |
| 3 | 4.6 | 3.1 | 1.5 | 0.2 | Iris-setosa |
| 4 | 5.0 | 3.6 | 1.4 | 0.2 | Iris-setosa |
| 5 | 5.4 | 3.9 | 1.7 | 0.4 | Iris-setosa |
| 6 | 4.6 | 3.4 | 1.4 | 0.3 | Iris-setosa |
| 7 | 5.0 | 3.4 | 1.5 | 0.2 | Iris-setosa |
| 8 | 4.4 | 2.9 | 1.4 | 0.2 | Iris-setosa |
| 9 | 4.9 | 3.1 | 1.5 | 0.1 | Iris-setosa |
| 10 | 5.4 | 3.7 | 1.5 | 0.2 | Iris-setosa |
| 11 | 4.8 | 3.4 | 1.6 | 0.2 | Iris-setosa |
| 12 | 4.8 | 3.0 | 1.4 | 0.1 | Iris-setosa |
| 13 | 4.3 | 3.0 | 1.1 | 0.1 | Iris-setosa |
| 14 | 5.8 | 4.0 | 1.2 | 0.2 | Iris-setosa |
| 15 | 5.7 | 4.4 | 1.5 | 0.4 | Iris-setosa |
| 16 | 5.4 | 3.9 | 1.3 | 0.4 | Iris-setosa |
| 17 | 5.1 | 3.5 | 1.4 | 0.3 | Iris-setosa |
| 18 | 5.7 | 3.8 | 1.7 | 0.3 | Iris-setosa |
| 19 | 5.1 | 3.8 | 1.5 | 0.3 | Iris-setosa |
| 20 | 5.4 | 3.4 | 1.7 | 0.2 | Iris-setosa |
| 21 | 5.1 | 3.7 | 1.5 | 0.4 | Iris-setosa |
| 22 | 4.6 | 3.6 | 1.0 | 0.2 | Iris-setosa |
| 23 | 5.1 | 3.3 | 1.7 | 0.5 | Iris-setosa |
| 24 | 4.8 | 3.4 | 1.9 | 0.2 | Iris-setosa |
| 25 | 5.0 | 3.0 | 1.6 | 0.2 | Iris-setosa |
| 26 | 5.0 | 3.4 | 1.6 | 0.4 | Iris-setosa |
| 27 | 5.2 | 3.5 | 1.5 | 0.2 | Iris-setosa |
| 28 | 5.2 | 3.4 | 1.4 | 0.2 | Iris-setosa |
| 29 | 4.7 | 3.2 | 1.6 | 0.2 | Iris-setosa |
| ... | ... | ... | ... | ... | ... |
| 120 | 6.9 | 3.2 | 5.7 | 2.3 | Iris-virginica |
| 121 | 5.6 | 2.8 | 4.9 | 2.0 | Iris-virginica |
| 122 | 7.7 | 2.8 | 6.7 | 2.0 | Iris-virginica |
| 123 | 6.3 | 2.7 | 4.9 | 1.8 | Iris-virginica |
| 124 | 6.7 | 3.3 | 5.7 | 2.1 | Iris-virginica |
| 125 | 7.2 | 3.2 | 6.0 | 1.8 | Iris-virginica |
| 126 | 6.2 | 2.8 | 4.8 | 1.8 | Iris-virginica |
| 127 | 6.1 | 3.0 | 4.9 | 1.8 | Iris-virginica |
| 128 | 6.4 | 2.8 | 5.6 | 2.1 | Iris-virginica |
| 129 | 7.2 | 3.0 | 5.8 | 1.6 | Iris-virginica |
| 130 | 7.4 | 2.8 | 6.1 | 1.9 | Iris-virginica |
| 131 | 7.9 | 3.8 | 6.4 | 2.0 | Iris-virginica |
| 132 | 6.4 | 2.8 | 5.6 | 2.2 | Iris-virginica |
| 133 | 6.3 | 2.8 | 5.1 | 1.5 | Iris-virginica |
| 134 | 6.1 | 2.6 | 5.6 | 1.4 | Iris-virginica |
| 135 | 7.7 | 3.0 | 6.1 | 2.3 | Iris-virginica |
| 136 | 6.3 | 3.4 | 5.6 | 2.4 | Iris-virginica |
| 137 | 6.4 | 3.1 | 5.5 | 1.8 | Iris-virginica |
| 138 | 6.0 | 3.0 | 4.8 | 1.8 | Iris-virginica |
| 139 | 6.9 | 3.1 | 5.4 | 2.1 | Iris-virginica |
| 140 | 6.7 | 3.1 | 5.6 | 2.4 | Iris-virginica |
| 141 | 6.9 | 3.1 | 5.1 | 2.3 | Iris-virginica |
| 142 | 5.8 | 2.7 | 5.1 | 1.9 | Iris-virginica |
| 143 | 6.8 | 3.2 | 5.9 | 2.3 | Iris-virginica |
| 144 | 6.7 | 3.3 | 5.7 | 2.5 | Iris-virginica |
| 145 | 6.7 | 3.0 | 5.2 | 2.3 | Iris-virginica |
| 146 | 6.3 | 2.5 | 5.0 | 1.9 | Iris-virginica |
| 147 | 6.5 | 3.0 | 5.2 | 2.0 | Iris-virginica |
| 148 | 6.2 | 3.4 | 5.4 | 2.3 | Iris-virginica |
| 149 | 5.9 | 3.0 | 5.1 | 1.8 | Iris-virginica |

150 rows × 5 columns
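Before plotting or training, it can help to confirm how many rows each class has. The following is only a minimal sketch: it assumes scikit-learn's bundled copy of Iris as a stand-in for `data.csv` (so it runs on its own), and `df_demo` and `class_counts` are illustrative names that do not appear in the notebook.

```python
import pandas as pd
from sklearn.datasets import load_iris

# Assumption: rebuild a frame shaped like the one above (numeric columns 0-3
# plus a label column 4) from scikit-learn's bundled Iris data, so the
# snippet does not depend on data.csv being present.
iris = load_iris()
df_demo = pd.DataFrame(iris.data)
df_demo[4] = ["Iris-" + iris.target_names[t] for t in iris.target]

# Rows per class; balanced classes make plain accuracy easier to interpret.
class_counts = df_demo[4].value_counts()
print(class_counts)

# Mean of each measurement, grouped by species.
print(df_demo.groupby(4).mean())
```

With the notebook's own frame, the same check would be `df[4].value_counts()`.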

y_data = df.iloc[:, -1]

types_separated = [df.loc[df[4] == i] for i in y_data.unique()]

setosa = types_separated[0]

versicolor = types_separated[1]

virginica = types_separated[2]

print('sepal sizes')

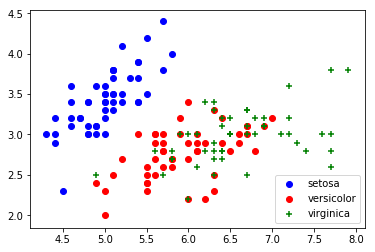

fig = plt.figure()

ax1 = fig.add_subplot(111)

ax1.scatter(setosa[0], setosa[1], c='b', marker="o", label='setosa')

ax1.scatter(versicolor[0], versicolor[1], c='r', marker="o", label='versicolor')

ax1.scatter(virginica[0], virginica[1], c='g', marker="+", label='virginica')

plt.legend(loc='lower right');

plt.show()

sepal sizes

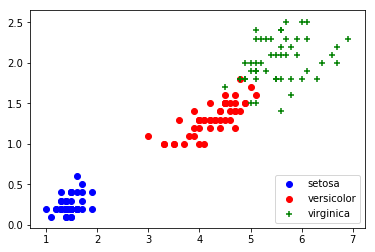

print('petal sizes')

fig = plt.figure()

ax1 = fig.add_subplot(111)

ax1.scatter(setosa[2], setosa[3], c='b', marker="o", label='setosa')

ax1.scatter(versicolor[2], versicolor[3], c='r', marker="o", label='versicolor')

ax1.scatter(virginica[2], virginica[3], c='g', marker="+", label='virginica')

plt.legend(loc='lower right');

plt.show()

petal sizes
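The two plotting cells above differ only in which columns they read, so they could be folded into one helper. This is a rough sketch rather than the notebook's own code: `scatter_by_species`, `STYLES`, and `df_demo` are hypothetical names, and the data comes from scikit-learn's bundled Iris copy as a stand-in for `data.csv`.

```python
import matplotlib.pyplot as plt
import pandas as pd
from sklearn.datasets import load_iris

# Assumption: stand-in for the notebook's df, built from scikit-learn's Iris copy.
iris = load_iris()
df_demo = pd.DataFrame(iris.data)
df_demo[4] = ["Iris-" + iris.target_names[t] for t in iris.target]

# Hypothetical style table: colour and marker per species, as in the cells above.
STYLES = {
    "Iris-setosa": ("b", "o"),
    "Iris-versicolor": ("r", "o"),
    "Iris-virginica": ("g", "+"),
}


def scatter_by_species(frame, x_col, y_col, title, ax):
    """Draw one scatter series per species on the given axes."""
    for species, group in frame.groupby(4):
        colour, marker = STYLES[species]
        ax.scatter(group[x_col], group[y_col], c=colour, marker=marker,
                   label=species.replace("Iris-", ""))
    ax.set_title(title)
    ax.legend(loc="lower right")
    return ax


# Sepal measurements (columns 0 and 1) and petal measurements (2 and 3) side by side.
fig, (ax_sepal, ax_petal) = plt.subplots(1, 2, figsize=(10, 4))
scatter_by_species(df_demo, 0, 1, "sepal sizes", ax_sepal)
scatter_by_species(df_demo, 2, 3, "petal sizes", ax_petal)
```

In a notebook running `%matplotlib inline`, the figure renders when the cell finishes; elsewhere a call to `plt.show()` would be needed.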

df = df.sample(frac=1).reset_index(drop=True)

TRAINING_SET_SIZE = 0.8

TRAIN_SET_ENDING = int(len(df)*TRAINING_SET_SIZE)

(Not going to use cross-validation; I just want a quick-and-dirty test.)

train_data = df.iloc[:TRAIN_SET_ENDING, :]

test_data = df.iloc[TRAIN_SET_ENDING:, :].reset_index(drop=True)

X_train = train_data.iloc[:, :-1]

y_train = train_data.iloc[:, -1]

X_test = test_data.iloc[:, :-1]

y_test = test_data.iloc[:, -1]

scaler = StandardScaler()

scaler.fit(X_train)

StandardScaler(copy=True, with_mean=True, with_std=True)

X_train = scaler.transform(X_train)

X_test = scaler.transform(X_test)

mlp = MLPClassifier(hidden_layer_sizes=15, max_iter=10000)

mlp.fit(X_train, y_train)

MLPClassifier(activation='relu', alpha=0.0001, batch_size='auto', beta_1=0.9,

beta_2=0.999, early_stopping=False, epsilon=1e-08,

hidden_layer_sizes=15, learning_rate='constant',

learning_rate_init=0.001, max_iter=10000, momentum=0.9,

n_iter_no_change=10, nesterovs_momentum=True, power_t=0.5,

random_state=None, shuffle=True, solver='adam', tol=0.0001,

validation_fraction=0.1, verbose=False, warm_start=False)
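The manual shuffle, slice, and scale steps above can also be expressed with scikit-learn helpers. The sketch below is one possible arrangement, not the notebook's method: `train_test_split`, `make_pipeline`, the `stratify` option, and `random_state=0` are all additions, and the bundled Iris data stands in for `data.csv`.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Assumption: scikit-learn's bundled Iris data stands in for data.csv.
X, y = load_iris(return_X_y=True)

# Shuffle and split 80/20 in one call. stratify keeps the class proportions
# the same in both parts; random_state (an arbitrary value) makes it repeatable.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, train_size=0.8, stratify=y, random_state=0
)

# The pipeline fits the scaler on the training rows only, then trains the MLP
# on the scaled features. The same scaling is reused at prediction time.
model = make_pipeline(
    StandardScaler(),
    MLPClassifier(hidden_layer_sizes=(15,), max_iter=10000, random_state=0),
)
model.fit(X_train, y_train)

accuracy = model.score(X_test, y_test)
print(f"hold-out accuracy: {accuracy:.2f}")
```

Wrapping the scaler and the classifier together means the test rows never influence the scaling statistics, which is the same property the separate `scaler.fit(X_train)` call provides above.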

predictions = mlp.predict(X_test)

print(confusion_matrix(y_test, predictions))

print(classification_report(y_test, predictions))

[[11  0  0]
 [ 0 10  0]
 [ 0  0  9]]

| | precision | recall | f1-score | support |
|---|---|---|---|---|
| Iris-setosa | 1.00 | 1.00 | 1.00 | 11 |
| Iris-versicolor | 1.00 | 1.00 | 1.00 | 10 |
| Iris-virginica | 1.00 | 1.00 | 1.00 | 9 |
| micro avg | 1.00 | 1.00 | 1.00 | 30 |
| macro avg | 1.00 | 1.00 | 1.00 | 30 |
| weighted avg | 1.00 | 1.00 | 1.00 | 30 |
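A perfect score on 30 held-out rows comes from a single random split, so it may vary from run to run. For comparison, here is a minimal sketch of the cross-validation that the quick test above skips. It assumes 5 stratified folds, a fixed `random_state`, and the bundled Iris data in place of `data.csv`; none of these choices come from the notebook.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Assumption: scikit-learn's bundled Iris data stands in for data.csv.
X, y = load_iris(return_X_y=True)

# Scaler and classifier in one estimator, so scaling is refit inside each fold.
model = make_pipeline(
    StandardScaler(),
    MLPClassifier(hidden_layer_sizes=(15,), max_iter=10000, random_state=0),
)

# Five shuffled folds that each keep the class proportions of the full data.
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)
scores = cross_val_score(model, X, y, cv=cv)

print("accuracy per fold:", scores)
print(f"mean {scores.mean():.3f}, std {scores.std():.3f}")
```

The spread across folds gives a rough sense of how much a single 80/20 split can move the reported accuracy.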