FedERA is a highly dynamic and customizable federated learning framework. It implements several functionalities on top of different federated learning algorithms in a plug-and-play architecture, so it can be adapted to many use cases.

FedERA has been extensively tested on and works with the following devices:

- Intel CPUs

- Nvidia GPUs

- Nvidia Jetson

- Raspberry Pi

- Intel NUC

With FedERA, the server and clients can run on separate devices or on a single device, for example in different terminals or through multiprocessing.

- Install the latest version from source code:

$ git clone https://github.com/anupamkliv/FedERA.git

$ cd FedERA

$ pip install -r requirements.txt

Website documentation is available for FedERA. Please visit the FedERA Documentation for more details.

Start the server:

python -m federa.server.start_server \
 --algorithm fedavg \
 --clients 2 \
 --rounds 10 \
 --epochs 10 \
 --batch_size 10 \
 --lr 0.01 \
 --dataset mnist

Start each client (in a separate terminal or on a separate device):

python -m federa.client.start_client \
 --ip localhost:8214 \
 --device cpu
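As a rough illustration of running everything on a single device, the sketch below launches one server and several clients as child processes using the commands shown above. The helper names (`server_command`, `client_command`, `launch`) and the startup delay are assumptions made for this example; they are not part of FedERA.

```python
import subprocess
import sys
import time


def server_command(clients=2, rounds=10, algorithm="fedavg"):
    """Build the server launch command from flags shown in this README."""
    return [
        sys.executable, "-m", "federa.server.start_server",
        "--algorithm", algorithm,
        "--clients", str(clients),
        "--rounds", str(rounds),
    ]


def client_command(ip="localhost:8214", device="cpu"):
    """Build the client launch command from flags shown in this README."""
    return [
        sys.executable, "-m", "federa.client.start_client",
        "--ip", ip,
        "--device", device,
    ]


def launch(num_clients=2, startup_delay=5.0):
    """Start one server and num_clients clients as child processes."""
    server = subprocess.Popen(server_command(clients=num_clients))
    # Assumed grace period so the server is listening before clients connect.
    time.sleep(startup_delay)
    clients = [subprocess.Popen(client_command()) for _ in range(num_clients)]
    for proc in clients:
        proc.wait()
    server.wait()
```

Calling `launch()` from the repository root, after installing the requirements, would start the processes; building the commands as lists avoids shell quoting issues.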

Server arguments:

| Argument | Description | Default |
|---|---|---|
| algorithm | specifies the aggregation algorithm | fedavg |
| clients | specifies the number of clients selected per round | 2 |
| fraction | specifies the fraction of clients selected | 1 |
| rounds | specifies the total number of rounds | 2 |
| model_path | specifies the initial server model path | initial_model.pt |
| epochs | specifies the client epochs per round | 1 |
| accept_conn | specifies whether connections are accepted after FL begins | 1 |
| verify | specifies whether the verification module runs before rounds | 0 |
| threshold | specifies the minimum verification score | 0 |
| timeout | specifies the client training time limit per round | None |
| resize_size | specifies the dataset resize dimension | 32 |
| batch_size | specifies the dataset batch size | 32 |
| net | specifies the network architecture | LeNet |
| dataset | specifies the dataset name | FashionMNIST |
| niid | specifies the data distribution among clients | 1 |
| carbon | specifies whether carbon emissions are tracked on the client side | 0 |
| encryption | specifies whether to use SSL encryption | 0 |
| server_key | specifies the path to the server key certificate | server-key.pem |
| server_cert | specifies the path to the server certificate | server.pem |

Client arguments:

| Argument | Description | Default |
|---|---|---|
| server_ip | specifies the server IP address | localhost:8214 |
| device | specifies the device | cpu |
| encryption | specifies whether to use SSL encryption | 0 |
| ca | specifies the path to the CA certificate | ca.pem |
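To make the documented defaults concrete, here is a minimal `argparse` sketch that mirrors a subset of the server arguments listed above. It is not FedERA's actual parser: the function name `build_server_parser` and the argument types are assumptions; only the argument names and default values come from the table.

```python
import argparse


def build_server_parser():
    """Hypothetical parser mirroring a subset of the documented server options."""
    parser = argparse.ArgumentParser(description="Sketch of server options")
    parser.add_argument("--algorithm", type=str, default="fedavg")
    parser.add_argument("--clients", type=int, default=2)
    parser.add_argument("--fraction", type=float, default=1.0)
    parser.add_argument("--rounds", type=int, default=2)
    parser.add_argument("--epochs", type=int, default=1)
    parser.add_argument("--batch_size", type=int, default=32)
    # The table lists None as the default, i.e. no time limit.
    parser.add_argument("--timeout", type=int, default=None)
    parser.add_argument("--net", type=str, default="LeNet")
    parser.add_argument("--dataset", type=str, default="FashionMNIST")
    return parser


# Options that are not passed keep the defaults from the table.
args = build_server_parser().parse_args(["--rounds", "10", "--dataset", "mnist"])
```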

File structure of FedERA. This overview may help users navigate the repository.

FedERA
├── federa
│   ├── client
│   │   ├── src
│   │   │   ├── client_lib
│   │   │   ├── client
│   │   │   ├── ClientConnection_pb2_grpc
│   │   │   ├── ClientConnection_pb2
│   │   │   ├── data_utils
│   │   │   ├── distribution
│   │   │   ├── get_data
│   │   │   ├── net_lib
│   │   │   └── net
│   │   └── start_client
│   └── server
│       ├── src
│       │   ├── algorithms
│       │   ├── server_evaluate
│       │   ├── client_connection_servicer
│       │   ├── client_manager
│       │   ├── client_wrapper
│       │   ├── ClientConnection_pb2_grpc
│       │   ├── ClientConnection_pb2
│       │   ├── server_lib
│       │   ├── server
│       │   └── verification
│       └── start_server
└── test
    ├── misc
    ├── benchtest
    │   ├── test_algorithms
    │   ├── test_datasets
    │   ├── test_models
    │   ├── test_modules
    │   ├── test_results
    │   └── test_scalability
    └── unittest
        └── test_algorithms

- Module 1: Verification module docs

- Module 2: Timeout module docs

- Module 3: Intermediate client connections module docs

- Module 4: Carbon emission tracking module docs

Various tests are available in the test directory. To run a test, run the corresponding command from the root directory:

python -m test.benchtest.test_algorithms

python -m test.benchtest.test_datasets

python -m test.benchtest.test_models

python -m test.benchtest.test_modules

python -m test.benchtest.test_scalability

The following federated learning algorithms are implemented in this framework:

| Method | Paper | Publication |
|---|---|---|
| FedAvg | Communication-Efficient Learning of Deep Networks from Decentralized Data | AISTATS'2017 |
| FedDyn | Federated Learning Based on Dynamic Regularization | ICLR'2021 |
| Scaffold | SCAFFOLD: Stochastic Controlled Averaging for Federated Learning | ICML'2020 |
| Personalized FedAvg | Improving Federated Learning Personalization via Model Agnostic Meta Learning | Pre-print |
| FedAdagrad | Adaptive Federated Optimization | ICLR'2021 |
| FedAdam | Adaptive Federated Optimization | ICLR'2021 |
| FedYogi | Adaptive Federated Optimization | ICLR'2021 |
| Mime | Mime: Mimicking Centralized Stochastic Algorithms in Federated Learning | Pre-print |
| Mimelite | Mime: Mimicking Centralized Stochastic Algorithms in Federated Learning | Pre-print |
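As a point of reference for the simplest entry in the table, the following is a minimal sketch of the FedAvg aggregation step: the server averages client parameters, weighted by each client's number of local samples. It uses plain Python lists to stay self-contained; the function name `fedavg` and the dictionary layout are assumptions for illustration and do not reflect FedERA's internal implementation.

```python
def fedavg(client_weights, client_sizes):
    """Weighted average of client parameters (FedAvg aggregation step).

    client_weights: list of dicts mapping parameter name -> list of floats
    client_sizes:   number of local training samples held by each client
    """
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("clients must hold at least one sample in total")
    averaged = {}
    for name in client_weights[0]:
        length = len(client_weights[0][name])
        averaged[name] = [
            sum(w[name][i] * n for w, n in zip(client_weights, client_sizes)) / total
            for i in range(length)
        ]
    return averaged


# Two clients; the second holds three times as much data as the first.
global_weights = fedavg(
    [{"w": [1.0, 2.0]}, {"w": [3.0, 6.0]}],
    [1, 3],
)
```

Clients with more data pull the global model further toward their local parameters, which is the weighting described in the FedAvg paper.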

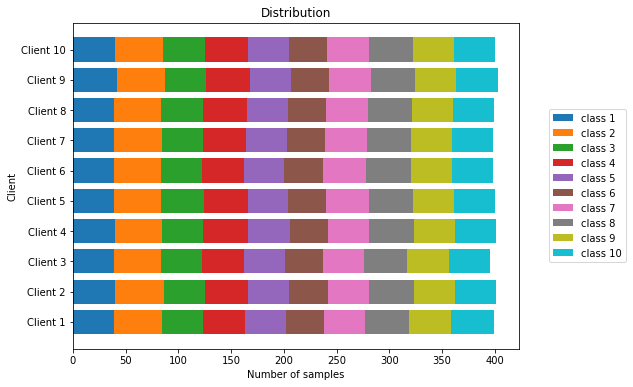

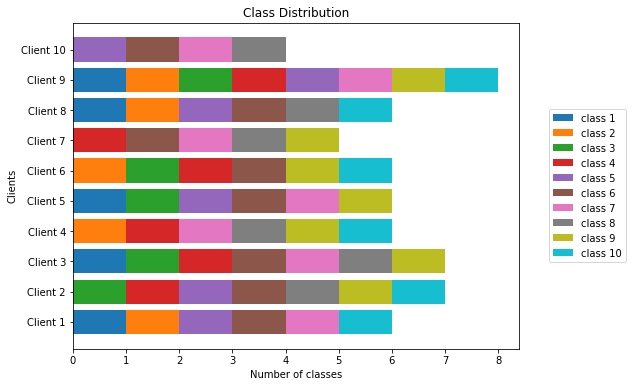

In real-world deployments, FL must handle various data distribution scenarios, both IID and non-IID. Although datasets and partition schemes exist for published benchmarks, partitioning datasets for a specific research problem and keeping the partition results consistent during simulation can still be messy and difficult.

We provide multiple non-IID data partition schemes. Here we show the data partition visualizations of several commonly used datasets as examples.

Each client has the same number of samples and the same distribution over classes.
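To illustrate the difference between a balanced IID split and a label-skewed non-IID split, here is a small sketch in plain Python. The two functions are hypothetical examples, not FedERA's partition schemes: `iid_partition` deals shuffled indices out evenly, so class proportions are only approximately equal, and `shard_partition` follows the label-sorted shard idea from the FedAvg paper.

```python
import random


def iid_partition(labels, num_clients, seed=0):
    """Shuffle sample indices and give every client an equal-sized slice."""
    indices = list(range(len(labels)))
    random.Random(seed).shuffle(indices)
    per_client = len(indices) // num_clients
    return [
        indices[c * per_client:(c + 1) * per_client]
        for c in range(num_clients)
    ]


def shard_partition(labels, num_clients, shards_per_client=2, seed=0):
    """Sort by label, cut into shards, and give each client a few shards."""
    order = sorted(range(len(labels)), key=lambda i: labels[i])
    num_shards = num_clients * shards_per_client
    shard_size = len(order) // num_shards
    shards = [
        order[s * shard_size:(s + 1) * shard_size]
        for s in range(num_shards)
    ]
    random.Random(seed).shuffle(shards)
    return [
        [i for shard in shards[c * shards_per_client:(c + 1) * shards_per_client]
         for i in shard]
        for c in range(num_clients)
    ]


# Toy dataset: 1,000 samples spread evenly over 10 classes.
labels = [i % 10 for i in range(1000)]
iid_parts = iid_partition(labels, num_clients=10)
niid_parts = shard_partition(labels, num_clients=10)
```

With this toy dataset, every client receives 100 samples in both schemes, but under the shard scheme each client sees at most two classes.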

| Dataset | Training samples | Test samples | Classes |
|---|---|---|---|
| MNIST | 60,000 | 10,000 | 10 |
| FashionMNIST | 60,000 | 10,000 | 10 |
| CIFAR-10 | 50,000 | 10,000 | 10 |
| CIFAR-100 | 50,000 | 10,000 | 100 |

We also provide a simple way to add your own dataset to the framework. See the documentation for details.

FedERA supports the following deep learning models. The standard architectures are loaded from torchvision.models (LeNet is not part of torchvision.models):

- LeNet

- ResNet18

- ResNet50

- VGG16

- AlexNet

We also provide a simple way to add your own models to the framework. See the documentation for details.

FedERA uses the CodeCarbon [1] package to estimate the carbon emissions generated by clients during training. CodeCarbon is a Python package that estimates the carbon emissions associated with running software code.
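A minimal sketch of how a training routine could be wrapped with CodeCarbon is shown below. It relies on CodeCarbon's `EmissionsTracker` with its `start()` and `stop()` methods, where `stop()` returns the estimated emissions in kg of CO2-equivalent. The wrapper `run_with_emissions_tracking` and its `tracker` parameter are assumptions for this example and do not describe how FedERA integrates the package.

```python
def run_with_emissions_tracking(train_fn, tracker=None):
    """Run train_fn while a tracker estimates the emissions it causes.

    train_fn: zero-argument callable that performs the local training
    tracker:  any object with start() and stop(); defaults to CodeCarbon's
              EmissionsTracker (requires `pip install codecarbon`)
    """
    if tracker is None:
        # Imported lazily so the helper can be defined without CodeCarbon.
        from codecarbon import EmissionsTracker
        tracker = EmissionsTracker()
    tracker.start()
    try:
        result = train_fn()
    finally:
        # stop() is always called, even if training raises an exception.
        emissions_kg = tracker.stop()
    return result, emissions_kg
```

A client could call `run_with_emissions_tracking(lambda: train_one_round(model))`, where `train_one_round` is a placeholder for the local training function, and report the returned estimate alongside its model update.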

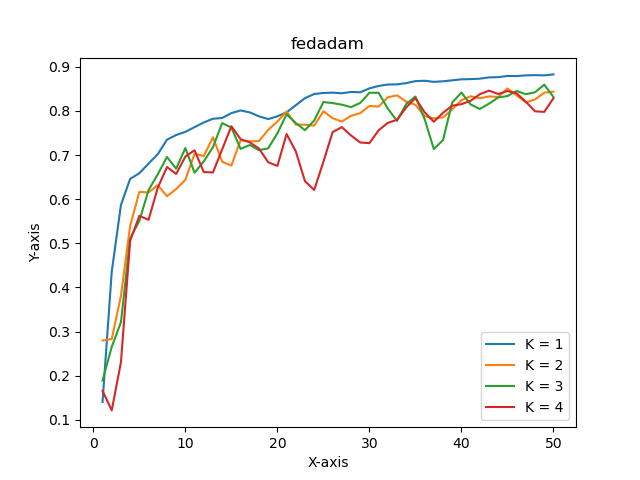

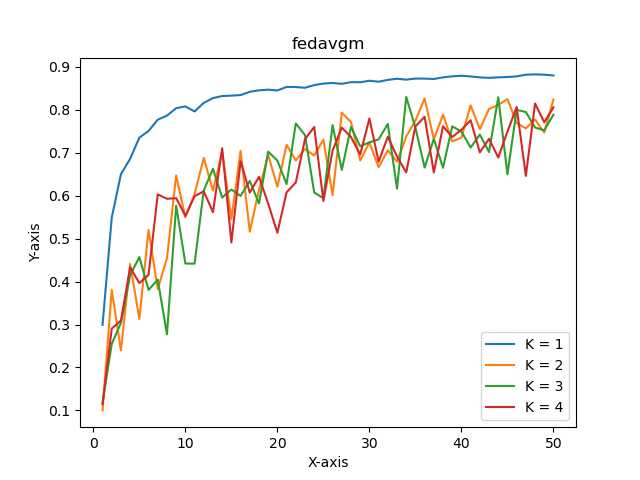

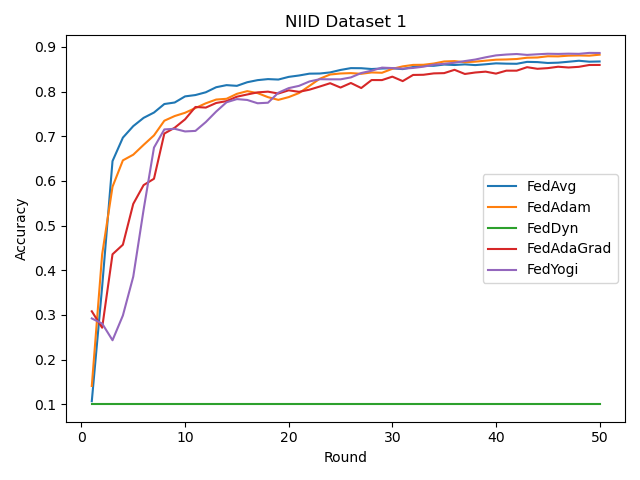

The accuracy.py file defines the functions needed to plot all the graphs shown in this section.

| Dataset | MNIST | | | CIFAR-10 | | | CIFAR-100 | | |
|---|---|---|---|---|---|---|---|---|---|
| Algorithm | K=1 | K=2 | K=3 | K=1 | K=2 | K=3 | K=1 | K=2 | K=3 |
| FedAvg | | | | | | | | | |
| FedAvgM | | | | | | | | | |
| FedAdam | | | | | | | | | |
| FedAdagrad | | | | | | | | | |
| FedYogi | | | | | | | | | |
| Mime | | | | | | | | | |
| Mimelite | | | | | | | | | |
| FedDyn | | | | | | | | | |
| Scaffold | | | | | | | | | |
| Personalized-FedAvg | | | | | | | | | |

Project Investigator: Prof. (abc@edu).

For technical issues related to FedERA development, please contact our development team through Github issues or email: