This is the second project of the Intel Edge AI for IoT Developers Nanodegree. In this project we are given three scenarios:

- Manufacturing

- Retail

- Transportation

These scenarios come from three different industry sectors and mimic the requirements of real clients. Our job is to analyze the constraints, propose a hardware solution, and build and test the application on the Intel DevCloud. Specifically, the goal is to build an application that reduces congestion in queuing systems where the queues are not managed efficiently: one queue may be overloaded while another is empty. Along with the scenarios, the client provides sample video footage that can be used to test the application's performance.

The application uses Intel's OpenVINO API and the person detection model from the Open Model Zoo to count the number of people in each queue, so that people can be directed to the least congested queue. For each scenario we need to find a solution that satisfies the client's requirements, such as limits on the hardware budget and on the hardware's power consumption. The work involves the following steps:

- Propose a possible hardware solution

- Build out the application and test its performance on the DevCloud using multiple hardware types

- Compare the performance to see which hardware performed best

- Validate the proposal based on the test results
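
The counting step described above can be sketched as follows. This is a minimal illustration, not the project's actual code: it assumes each detection is a 7-value row `[image_id, label, confidence, x_min, y_min, x_max, y_max]` with coordinates normalized to `[0, 1]`, and that each queue is a rectangular region. The names `count_people_per_queue`, `least_congested`, and the queue regions are hypothetical.

```python
from typing import Dict, Sequence, Tuple

# (x_min, y_min, x_max, y_max), normalized to [0, 1] (assumed layout)
Box = Tuple[float, float, float, float]


def count_people_per_queue(
    detections: Sequence[Sequence[float]],
    queues: Dict[str, Box],
    threshold: float = 0.5,
) -> Dict[str, int]:
    """Count detections whose box centre falls inside each queue region."""
    counts = {name: 0 for name in queues}
    for det in detections:
        if det[2] < threshold:  # det[2] is the confidence score
            continue
        cx = (det[3] + det[5]) / 2  # horizontal centre of the box
        cy = (det[4] + det[6]) / 2  # vertical centre of the box
        for name, (qx0, qy0, qx1, qy1) in queues.items():
            if qx0 <= cx <= qx1 and qy0 <= cy <= qy1:
                counts[name] += 1
                break  # assign each person to at most one queue
    return counts


def least_congested(counts: Dict[str, int]) -> str:
    """Return the name of the queue with the fewest people."""
    return min(counts, key=counts.get)


# Hypothetical queue regions and detections, for illustration only.
queues = {"queue_1": (0.0, 0.0, 0.5, 1.0), "queue_2": (0.5, 0.0, 1.0, 1.0)}
detections = [
    [0, 1, 0.9, 0.1, 0.2, 0.3, 0.8],  # centre in queue_1
    [0, 1, 0.8, 0.2, 0.1, 0.4, 0.9],  # centre in queue_1
    [0, 1, 0.7, 0.6, 0.2, 0.8, 0.8],  # centre in queue_2
    [0, 1, 0.2, 0.6, 0.2, 0.9, 0.8],  # below threshold, ignored
]
counts = count_people_per_queue(detections, queues)
print(counts, "->", least_congested(counts))
```

Using the box centre rather than any overlap is one possible design choice; it keeps a person standing on the border between two regions from being counted twice.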

The model used for all the scenarios is person-detection-retail-0013, which can be found in the Open Model Zoo. The details of the model used for this project can be found here. All the code in the project ran on the Intel DevCloud, so nothing needs to be set up locally.

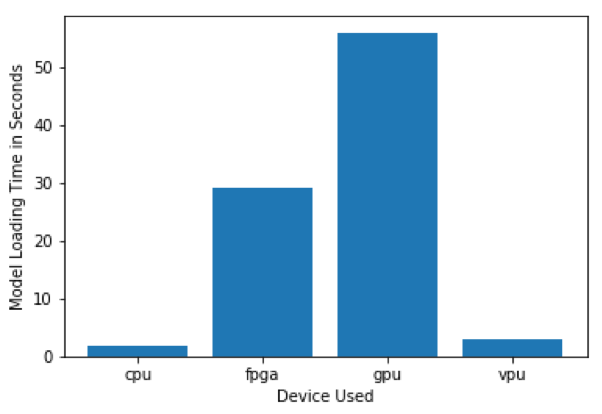

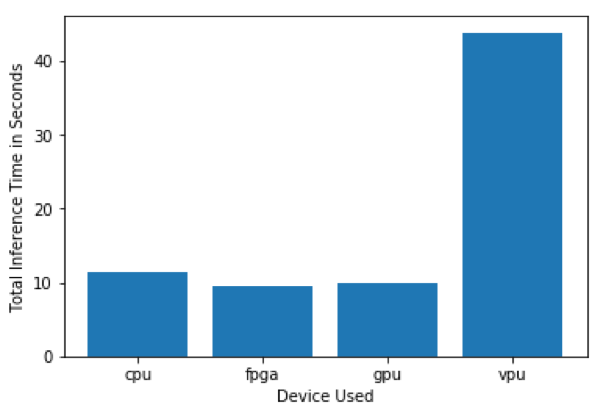

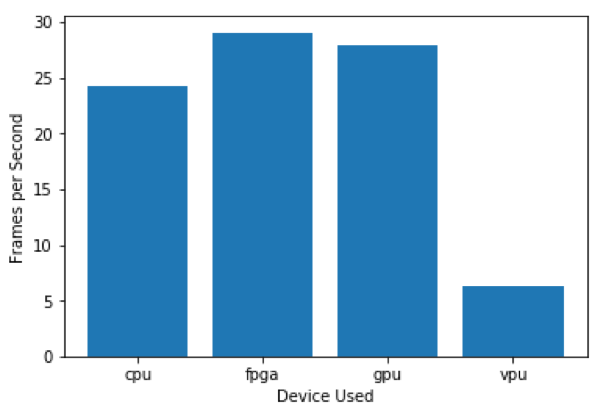

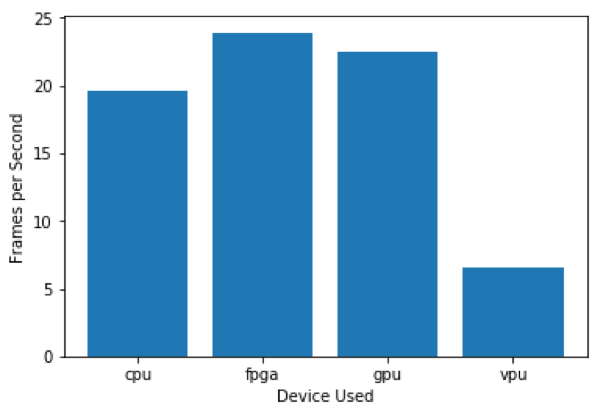

All the scenarios are benchmarked on three metrics across different hardware: model load time, inference time, and frames per second.
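
One way to collect these three metrics is sketched below. It is a hedged illustration rather than the project's benchmarking code: `benchmark` and `fake_load_model` are hypothetical names, and the stand-in model just sleeps so the example runs without OpenVINO. In a real run, the loader would load the network onto the target device and return a function that performs inference on one frame.

```python
import time
from typing import Callable, Dict, Iterable


def benchmark(load_model: Callable[[], Callable], frames: Iterable) -> Dict[str, float]:
    """Measure model load time, total inference time, and frames per second."""
    start = time.perf_counter()
    infer = load_model()  # expected to return a per-frame inference callable
    model_load_time = time.perf_counter() - start

    frame_count = 0
    start = time.perf_counter()
    for frame in frames:
        infer(frame)
        frame_count += 1
    inference_time = time.perf_counter() - start

    fps = frame_count / inference_time if inference_time > 0 else float("inf")
    return {
        "model_load_time_s": model_load_time,
        "inference_time_s": inference_time,
        "frames": frame_count,
        "fps": fps,
    }


def fake_load_model() -> Callable:
    """Stand-in loader (assumption): pretends loading and inference take time."""
    time.sleep(0.01)
    return lambda frame: time.sleep(0.001)


result = benchmark(fake_load_model, range(5))
print(result)
```

Timing the load and the inference loop separately matters because a device can be slow to load a model yet fast at inference, or the reverse.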

Which hardware might be most appropriate for the manufacturing scenario? (CPU/IGPU/VPU/FPGA)

Proposed Hardware: FPGA

| Requirements observed | How does the chosen hardware meet this requirement? |
|---|---|
| Client requirement is to do image processing 5 times per second on a video feed of 30-35 FPS | FPGAs provide lower latency than the other devices |
| The hardware should be easy to reprogram and optimize for other tasks, such as finding flaws in the semiconductor chips | FPGAs are ideal for this scenario because they are field programmable and therefore very flexible |
| It should last for at least 5-10 years | FPGAs that use devices from Intel’s IoT group have a guaranteed availability of 10 years, from start of production |
| Budget is not a constraint since the revenue is good | FPGAs cost at least $1000 |

| Maximum number of people in the queue | 5 |
|---|---|
| Model precision chosen (FP32, FP16, or INT8) | FP16 |
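
The first requirement (5 inferences per second on a 30-35 FPS feed) implies a frame stride and a per-inference time budget. The arithmetic can be sketched as below; `frame_stride` and `latency_budget_ms` are hypothetical helper names, and the sketch assumes inference runs on evenly spaced frames.

```python
def frame_stride(feed_fps: float, inferences_per_second: float) -> int:
    """Largest whole-frame stride that still meets the target inference rate."""
    return max(1, int(feed_fps // inferences_per_second))


def latency_budget_ms(inferences_per_second: float) -> float:
    """Maximum time one inference may take to keep up with the target rate."""
    return 1000.0 / inferences_per_second


for feed_fps in (30, 35):
    stride = frame_stride(feed_fps, 5)
    print(f"{feed_fps} FPS feed: run inference on every {stride}th frame")

print(f"Per-inference budget: {latency_budget_ms(5):.0f} ms")
```

So the device needs to process roughly every 6th or 7th frame and finish each inference within 200 ms.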

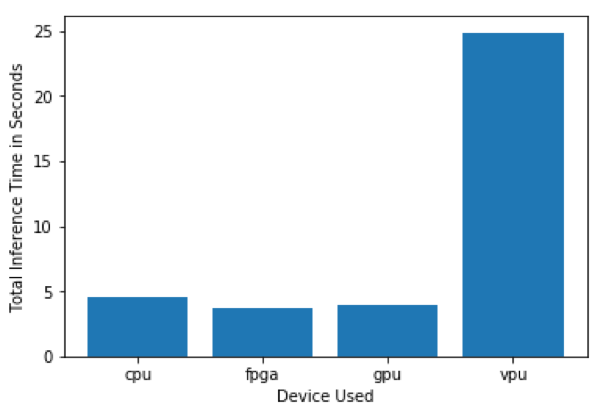

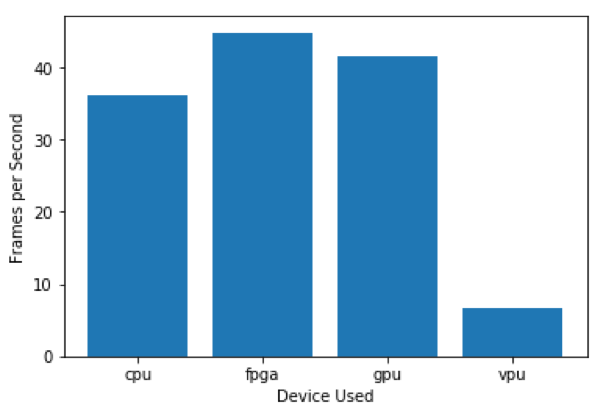


- Model load time

- Inference time

- Frames per second

Given the requirements listed above, the FPGA indeed proves to be the best hardware for this scenario, since it offers the highest frame rate and the lowest inference time.

Which hardware might be most appropriate for the retail scenario? (CPU/IGPU/VPU/FPGA)

Proposed Hardware: Integrated GPU (IGPU) that comes with the client's Core i7 systems

| Requirements observed | How does the chosen hardware meet this requirement? |
|---|---|
| Limited budget | No need to buy new hardware; use the IGPU in the systems at the checkout counters |
| Latency should not be an issue, since even on weekdays the average wait time for customers is around 230 seconds | IGPU latency is comparatively higher than that of devices like VPUs |
| Client also wants to save as much as possible on the electricity bill | IGPUs offer configurable power consumption options |

| Maximum number of people in the queue | 5 (during peak hours) |
|---|---|
| Model precision chosen (FP32, FP16, or INT8) | FP16 (the IGPU's execution units are optimized for FP16) |
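
To make the precision choice concrete, the sketch below shows what FP16 means for a single value: half the storage of FP32, at the cost of a small rounding error. It uses only Python's standard `struct` module; the helper name `to_fp16` and the sample weight are illustrative assumptions, and the example says nothing about actual IGPU throughput.

```python
import struct


def to_fp16(value: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


fp16_bytes = struct.calcsize("<e")  # bytes per half-precision value
fp32_bytes = struct.calcsize("<f")  # bytes per single-precision value

weight = 0.1  # arbitrary sample value
rounding_error = abs(to_fp16(weight) - weight)

print(f"FP16: {fp16_bytes} bytes, FP32: {fp32_bytes} bytes per value")
print(f"Rounding error for {weight} in FP16: {rounding_error:.2e}")
```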

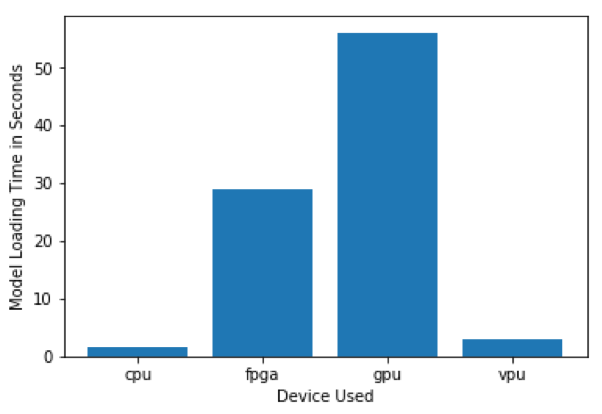

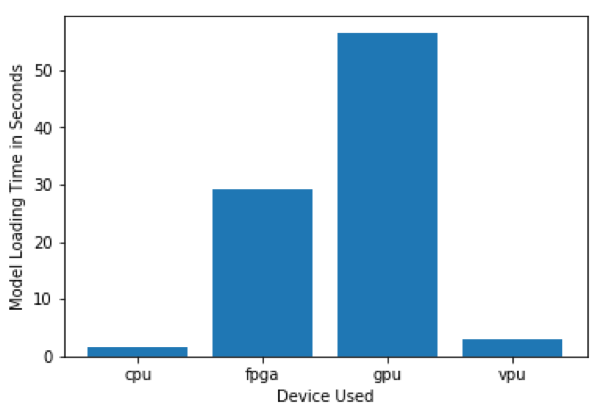

- Model load time

- Inference time

- Frames per second

The client's main requirements were a limited budget and saving as much as possible on electricity bills. The client already has modern systems equipped with Core i7 processors. Although the integrated GPU has the highest model load time, it is still the best fit for this scenario, as it offers low latency and high frames per second at no additional cost.

Which hardware might be most appropriate for the transportation scenario? (CPU/IGPU/VPU/FPGA)

Proposed Hardware: VPU (Intel Neural Compute Stick 2)

| Requirements observed | How does the chosen hardware meet this requirement? |
|---|---|
| Client has a maximum budget of $300 per system and she would like to save as much as possible | VPUs are small, low-cost devices which can accelerate the performance of pre-existing CPUs |
| Save as much as possible on power requirements | VPUs are very low-power devices |

| Maximum number of people in the queue | 15 (during rush hours) |
|---|---|
| Model precision chosen (FP32, FP16, or INT8) | FP16 |

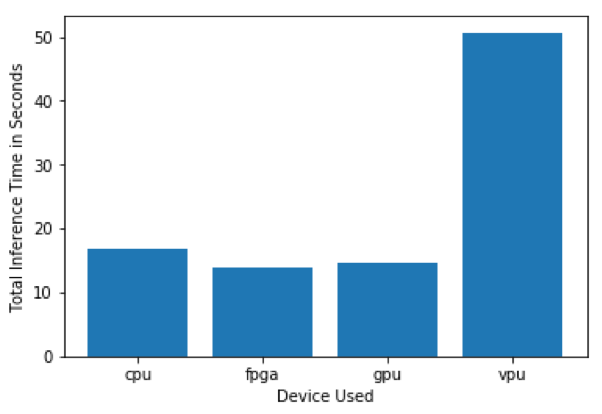

- Model load time

- Inference time

- Frames per second

Although VPUs have the slowest inference time, the qualities that make them attractive are low cost, low power consumption, and a very fast model load time. These matter most to the client, so our hardware recommendation is the Intel Neural Compute Stick 2.
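
The reasoning used across the scenarios (filter by the client's hard constraints, then prefer the cheapest option to run) can be sketched as a small helper. Everything here is an illustrative assumption: the `Candidate` fields, the `pick_hardware` function, and especially the numbers, which are placeholders and not measurements from this project.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Candidate:
    name: str
    cost_usd: float
    power_watts: float
    fps: float


def pick_hardware(
    candidates: Iterable[Candidate], max_cost_usd: float, min_fps: float
) -> Optional[Candidate]:
    """Keep devices within budget that are fast enough, then prefer low power."""
    feasible = [
        c for c in candidates if c.cost_usd <= max_cost_usd and c.fps >= min_fps
    ]
    if not feasible:
        return None
    # Lowest power draw wins; cost breaks ties.
    return min(feasible, key=lambda c: (c.power_watts, c.cost_usd))


# Placeholder devices with made-up numbers, for illustration only.
candidates = [
    Candidate("device_a", cost_usd=1000, power_watts=30, fps=20),
    Candidate("device_b", cost_usd=100, power_watts=2, fps=3),
    Candidate("device_c", cost_usd=0, power_watts=15, fps=10),
]
choice = pick_hardware(candidates, max_cost_usd=300, min_fps=2)
print(choice.name if choice else "no feasible hardware")
```

Replacing the placeholder values with measured results and real prices would turn this into a quick check of each proposal against the client's constraints.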