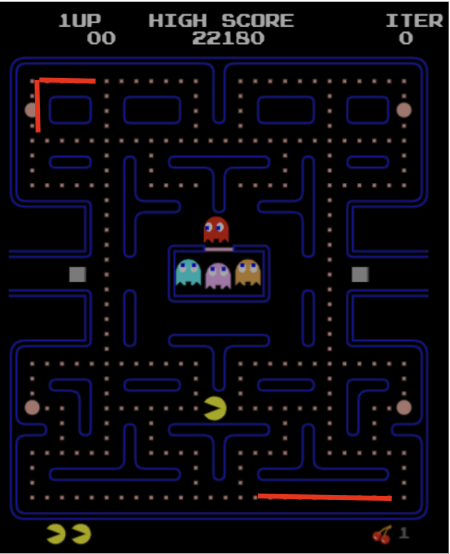

Implemented three different agents, utility, simply-rule-based and learning agents for the game pac-man.

Check out the Demo

clone the repo and open index.html using browser. All codes are in Vanilla JS

The PacMan game is forked form:

Only two rules

- if no ghost are approaching then seeking pellet

- else run evasion algorithm

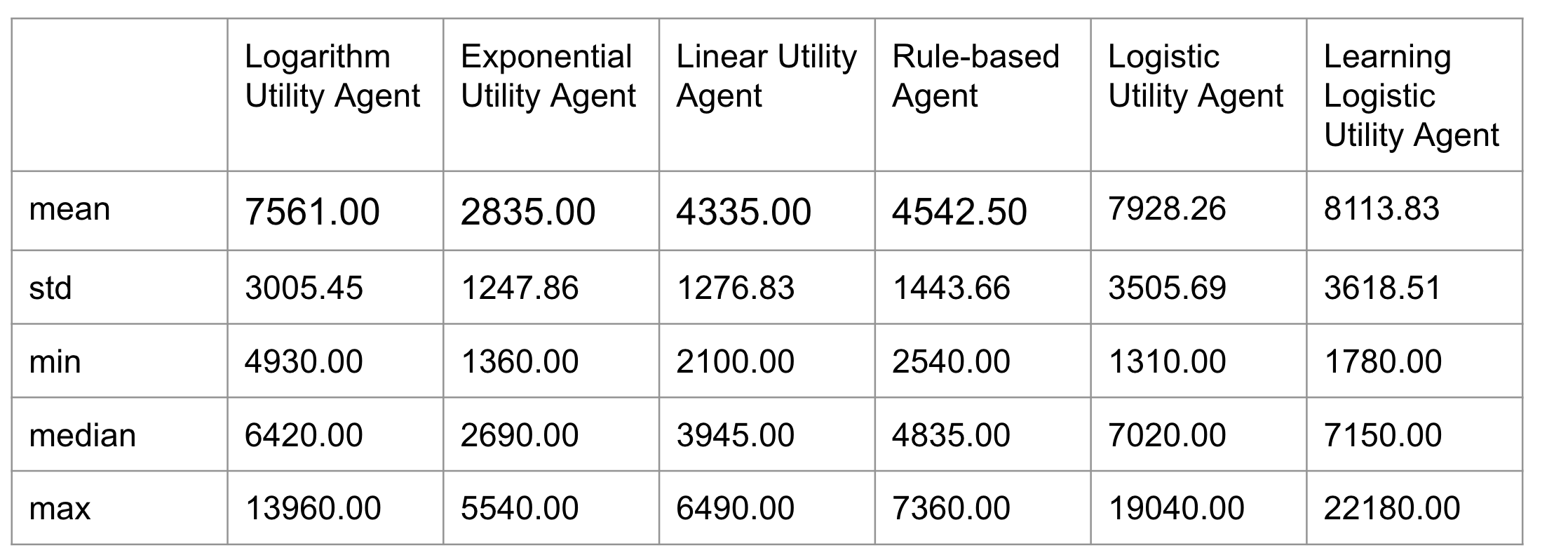

Two strategies were considered. Chose the second approach due to better performance

- Find a random pellet

- Within the food pellets list, return a random pellet to seek

- Pro: faster, since we just had to sample a random index in the array

- Con: Often made pacman seek a pellet in a far away corner, reducing the efficiency

- Mean 2203 Max 5480

- Find the nearest pellet

- We utilize the manhattan distance from pacman to a tile to get the distance to food

- Return the pellet with minimum distance

- Pro: Have pacman be more efficient since he seeks the closest pellet

- Con: O(n) time where n is the number of pellets

- Mean 3936 Max: 7720

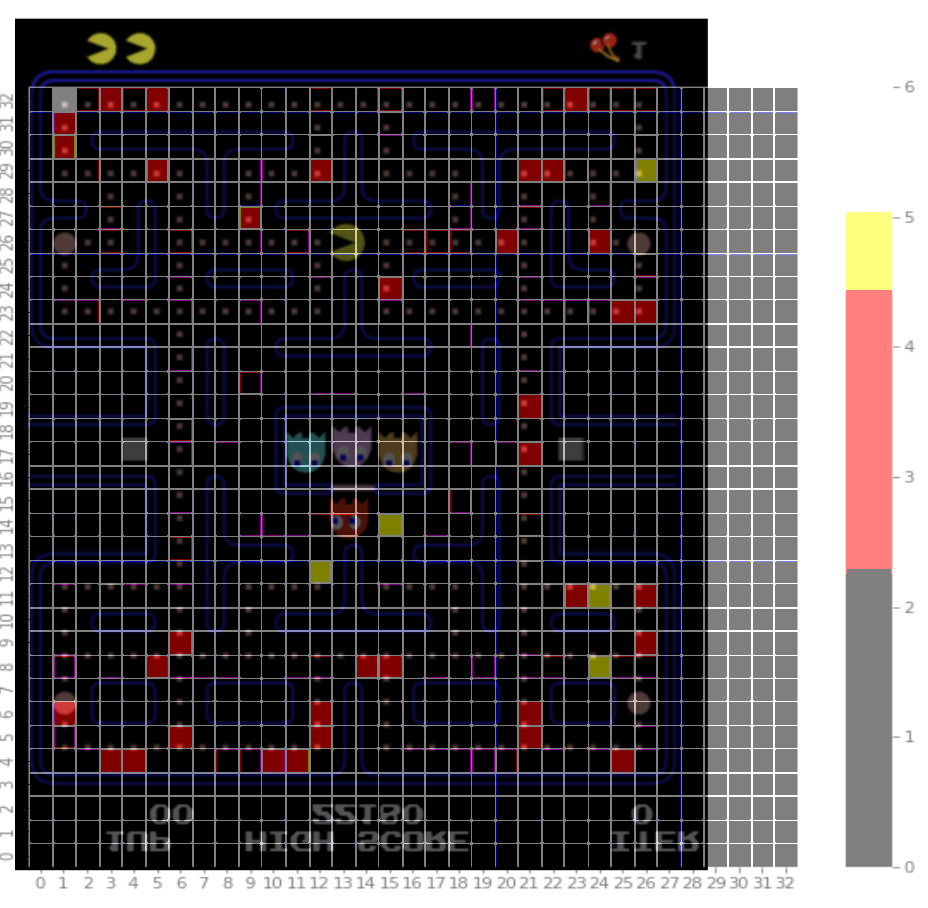

Used BFS to find the safest tile within x radius of pacman

The safest tile is the tile which has the max minimum distance between all ghost

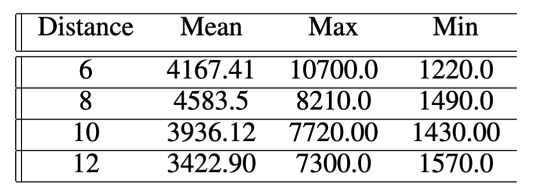

The result shows as below

Why we use 8 as the minimum distance

The corner and long aisle is the easiest place for pacman to be flanked (And the length of those is around 8 )

Increasing the range for evasion can improve prediction result, but Pac-Man will spend more time escaping instead of seeking pellets.

- Generate a utility score for each available tiles adjacent to PacMan

-

$u = \text{tile value} + \text{safety value}$ $\text{tile value} = -k * \text{distance to pellets}$ -

$k$ is a constant value for weighting the distance value -

$\text{safety value} = max(f(\text{distance to ghost}))$ or$average(f\text{distance to ghost})$

- PacMan always move to the tile with max utilty

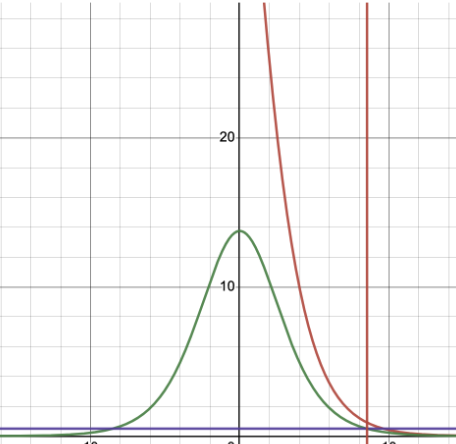

- I experimented different function for

$f$ - Linear scaled

- exponential scaled

- logarithmic scaled

- Logistic scaled

When two tiles have same utility pacman man will oscillate between them as show above.

- It is not a problem when equal utility is caused by safety_value.

- It is a problem when equal utility is caused by tile_value

To mitigate I tried two methods

- Give a penalty if pacman move backward,

- Give a preference [0.1,0.2,0.3,0.4] for each direction, the preference will be shuffled when pacman move to a new tile.

I conclude the going back penalty and direction preference will not mess up the utility function as long as these value no larger than the derivative of tile value function.

However preference shuffle will be a better solution

Three goals

- Don’t bump into ghost in order to pick up pellets

- Can seek pellet even if it means we get closer to a ghost

- Agent will collide with ghost if ghost is scared

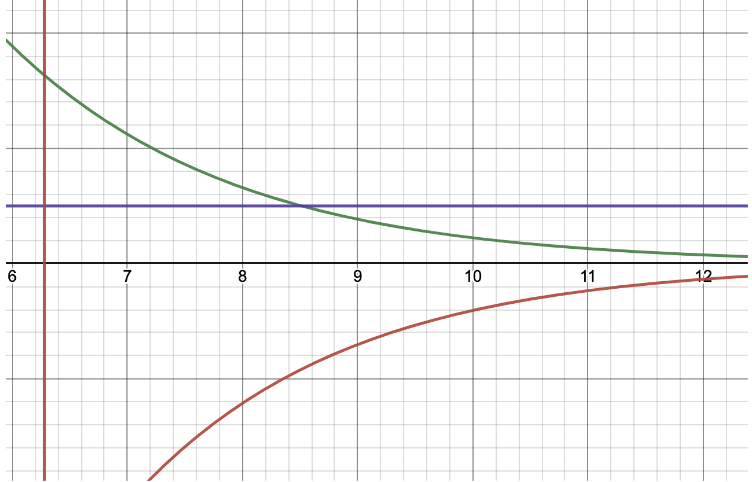

I will only show the analysis why I picked logistic function

- Green derivative of utility function

- Red is utility function

- Purple is Y = 0.5, derivative of tile_value.

- Safe_distance where purple and green intercepted

- X < safe_distance risk averse.

- X >= safe_distance can be made as risk neutral by ignoring safety value below certain threshold, in this case 0.496

- Obviously goal 1 and goal 2 can be achieved.

and when ghost are in flee mode(PacMan obtain power pellet)

by simply negate the utilty function, pacman will prioritize to pursued ghost when within the safe distance.

here is the demo

I took a very naive approach on learning agent.

- Based on Logistic Utility Agent I implemented an learning agent.

- Because the nature of vanilla javascript this is very rudimentary approach.

- Run 200 iteration and log every location pacman died.

- Add dead_count / (1+ghost_distance) to that tile to indicate those tiles are dangerous