ZEGOCLOUD's easy example is a simple wrapper around our RTC product. You can refer to the sample code for quick integration.

- Xcode 12 or later

- CocoaPods

- An iOS device or Simulator that is running on iOS 13.0 or later and supports audio and video. We recommend you use a real device.

- Create a project in ZEGOCLOUD Admin Console. For details, see ZEGO Admin Console - Project management.

- Clone the easy example GitHub repository.

- Open Terminal, navigate to the `ZegoEasyExample` folder where the `Podfile` is located, and run the `pod repo update` command.

- Run the `pod install` command to install all dependencies that are needed.

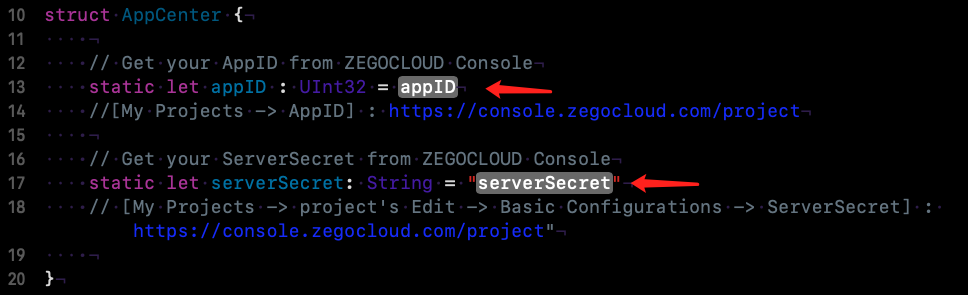

Set appID and serverSecret to the values for your own account, which you can obtain in the ZEGO Admin Console.
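As a rough illustration of what this configuration step involves, the sketch below shows one possible shape for the values in AppCenter.swift. The property types and the `isConfigured` helper are assumptions made for illustration; check the file in the repository for the actual declarations.

```swift
// Hypothetical shape of AppCenter.swift; the real file in the repository may differ.
struct AppCenter {
    // Placeholder values: replace them with the AppID and ServerSecret
    // of your own project from the ZEGO Admin Console.
    static let appID: UInt32 = 0          // assumed type
    static let serverSecret: String = ""  // assumed type

    // Hypothetical helper: true once both placeholders have been replaced.
    static var isConfigured: Bool {
        appID != 0 && !serverSecret.isEmpty
    }
}
```

A check like `AppCenter.isConfigured` at launch can catch a forgotten placeholder before the first join attempt fails.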

- Connect the iOS device to your computer.

- Open Xcode, click Any iOS Device in the upper-left corner, and select the iOS device you are using.

- Click the Build button in the upper-left corner to run the sample code and experience the Live Audio Room service.

1. Add the ZegoExpressEngine and ZegoToken SDKs to your project's Podfile.

2. Run the pod install command to install all dependencies that are needed.

target 'Your_Project_Name' do

# Comment the next line if you don't want to use dynamic frameworks

use_frameworks!

# Pods for ZegoEasyExample

pod 'ZegoExpressEngine'

pod ‘ZegoToken’

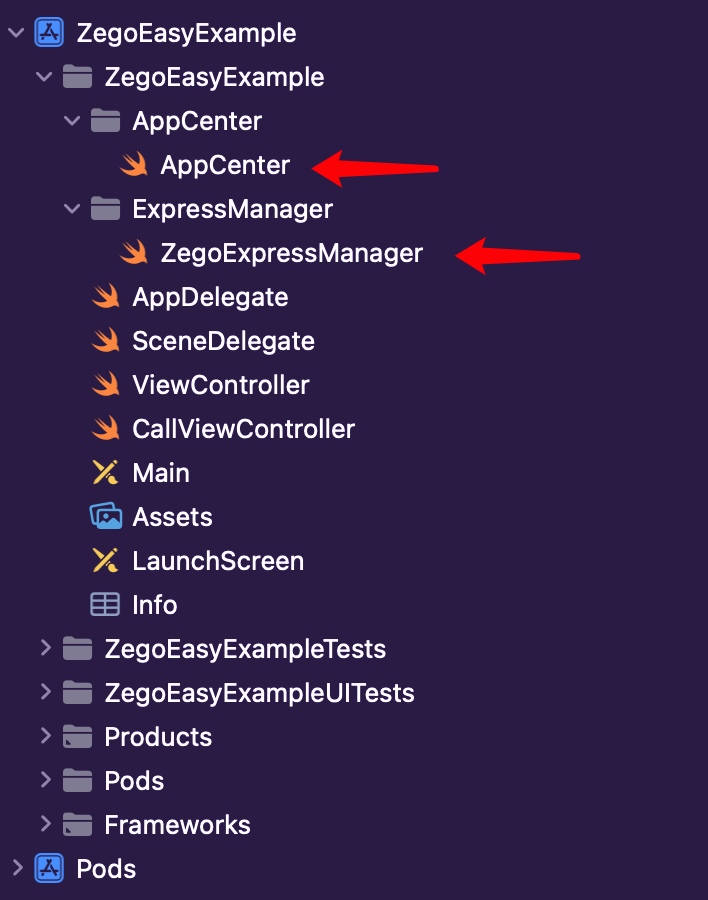

endCopy the AppCenter.swift and ZegoExpressManager.swift files to your project

The calling sequence of the SDK interface is as follows: createEngine --> joinRoom --> setLocalVideoView/setRemoteVideoView --> leaveRoom
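The ordering above can be pictured as a small state machine. The following is a minimal sketch that does not touch the real SDK: `SessionState` and `CallSequence` are hypothetical names, and the rule that video views are set only after joining simply mirrors the sequence stated above.

```swift
// Hypothetical model of the call order; it does not call the real SDK.
enum SessionState { case idle, engineCreated, inRoom }

struct CallSequence {
    private(set) var state: SessionState = .idle

    // Each mutating method returns false and leaves the state unchanged
    // when it is called out of order.
    @discardableResult
    mutating func createEngine() -> Bool {
        guard state == .idle else { return false }
        state = .engineCreated
        return true
    }

    @discardableResult
    mutating func joinRoom() -> Bool {
        guard state == .engineCreated else { return false }
        state = .inRoom
        return true
    }

    // Following the sequence above, video views are set after joining.
    func canSetVideoView() -> Bool { state == .inRoom }

    @discardableResult
    mutating func leaveRoom() -> Bool {
        guard state == .inRoom else { return false }
        state = .engineCreated
        return true
    }
}
```

In this model, leaving the room returns to the engine-created state, so the same engine can be used to join again.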

Before using any SDK function, you need to create the engine first. We recommend creating it when the application starts. The sample code is as follows:

    func application(_ application: UIApplication, didFinishLaunchingWithOptions launchOptions: [UIApplication.LaunchOptionsKey: Any]?) -> Bool {
        // create engine
        ZegoExpressManager.shared.createEngine(appID: AppCenter.appID)
        return true
    }

To start audio and video communication, first call the joinRoom interface. Depending on your business scenario, you can configure different audio and video behavior through options, such as:

- Call scene: [.autoPlayVideo, .autoPlayAudio, .publishLocalAudio, .publishLocalVideo]

- Live scene - host: [.autoPlayVideo, .autoPlayAudio, .publishLocalAudio, .publishLocalVideo]

- Live scene - audience: [.autoPlayVideo, .autoPlayAudio]

- Chat room - host: [.autoPlayAudio, .publishLocalAudio]

- Chat room - audience: [.autoPlayAudio]
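One way to keep these combinations in a single place is a small preset table. The sketch below is self-contained and uses a hypothetical `MediaOptions` type as a stand-in for the sample's ZegoMediaOptions (the raw values are arbitrary) and a hypothetical `Scenario` enum with one case per bullet above.

```swift
// Hypothetical stand-in for the sample's ZegoMediaOptions; raw values are arbitrary.
struct MediaOptions: OptionSet {
    let rawValue: Int
    static let autoPlayAudio     = MediaOptions(rawValue: 1 << 0)
    static let autoPlayVideo     = MediaOptions(rawValue: 1 << 1)
    static let publishLocalAudio = MediaOptions(rawValue: 1 << 2)
    static let publishLocalVideo = MediaOptions(rawValue: 1 << 3)
}

// Hypothetical scenario names, one per bullet in the list above.
enum Scenario {
    case call, liveHost, liveAudience, chatRoomHost, chatRoomAudience

    // Option set for each scenario, copied from the list above.
    var options: MediaOptions {
        switch self {
        case .call, .liveHost:
            return [.autoPlayVideo, .autoPlayAudio, .publishLocalAudio, .publishLocalVideo]
        case .liveAudience:
            return [.autoPlayVideo, .autoPlayAudio]
        case .chatRoomHost:
            return [.autoPlayAudio, .publishLocalAudio]
        case .chatRoomAudience:
            return [.autoPlayAudio]
        }
    }
}
```

With the real SDK type, the same idea would let a join call pass a scenario's preset instead of repeating the option list at each call site.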

The following sample code is an example of a call scenario:

    @IBAction func pressJoinRoom(_ sender: UIButton) {
        // join room
        let roomID = "111"
        let user = ZegoUser(userID: "id\(Int(arc4random()))", userName: "Tim")
        let token = generateToken(userID: user.userID)
        let option: ZegoMediaOptions = [.autoPlayVideo, .autoPlayAudio, .publishLocalAudio, .publishLocalVideo]
        ZegoExpressManager.shared.joinRoom(roomID: roomID, user: user, token: token, options: option)
        presentVideoVC()
    }

If your project uses video communication, you need to set the views that display the video: call setLocalVideoView for the local video and setRemoteVideoView for the remote video.

setLocalVideoView:

    override func viewDidLoad() {
        super.viewDidLoad()
        self.view.backgroundColor = UIColor.white
        // set video view
        ZegoExpressManager.shared.setLocalVideoView(renderView: localVideoView)
    }

setRemoteVideoView:

    func onRoomUserUpdate(udpateType: ZegoUpdateType, userList: [String], roomID: String) {
        for userID in userList {
            // set video view
            ZegoExpressManager.shared.setRemoteVideoView(userID: userID, renderView: remoteVideoView)
        }
    }

To leave the room, call the leaveRoom interface.

    @IBAction func pressLeaveRoomButton(_ sender: Any) {
        ZegoExpressManager.shared.leaveRoom()
        self.dismiss(animated: true, completion: nil)
    }