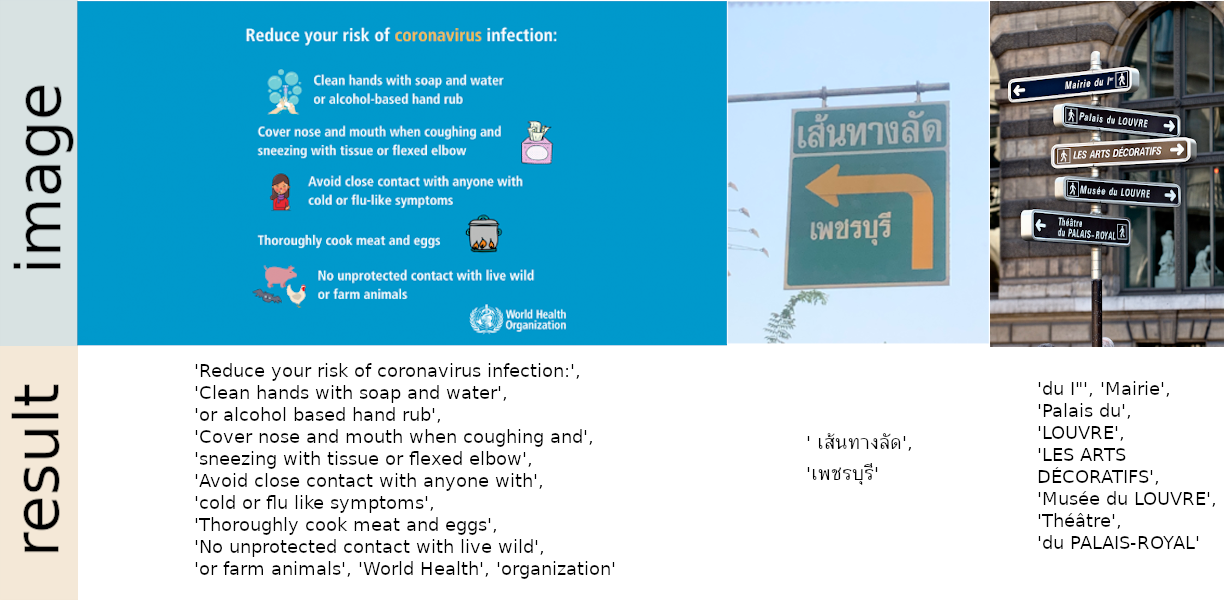

Ready-to-use OCR with 40+ languages supported including Chinese, Japanese, Korean and Thai.

We are currently supporting following 42 languages.

Afrikaans (af), Azerbaijani (az), Bosnian (bs), Simplified Chinese (ch_sim), Traditional Chinese (ch_tra), Czech (cs), Welsh (cy), Danish (da), German (de), English (en), Spanish (es), Estonian (et), French (fr), Irish (ga), Croatian (hr), Hungarian (hu), Indonesian (id), Icelandic (is), Italian (it), Japanese (ja), Korean (ko), Kurdish (ku), Latin (la), Lithuanian (lt), Latvian (lv), Maori (mi), Malay (ms), Maltese (mt), Dutch (nl), Norwegian (no), Polish (pl), Portuguese (pt),Romanian (ro), Slovak (sk), Slovenian (sl), Albanian (sq), Swedish (sv),Swahili (sw), Thai (th), Tagalog (tl), Turkish (tr), Uzbek (uz), Vietnamese (vi)

List of characters is in folder easyocr/character. If you are native speaker of any language and think we should add or remove any character, please create an issue and/or pull request (like this one).

Install using pip for stable release,

pip install easyocrFor latest development release,

pip install git+git://github.com/jaidedai/easyocr.gitNote: for Windows, please install torch and torchvision first by following official instruction here https://pytorch.org

import easyocr

reader = easyocr.Reader(['th','en'])

reader.readtext('test.jpg')Model weight for chosen language will be automatically downloaded or you can download it manually from the following links and put it in 'model' folder

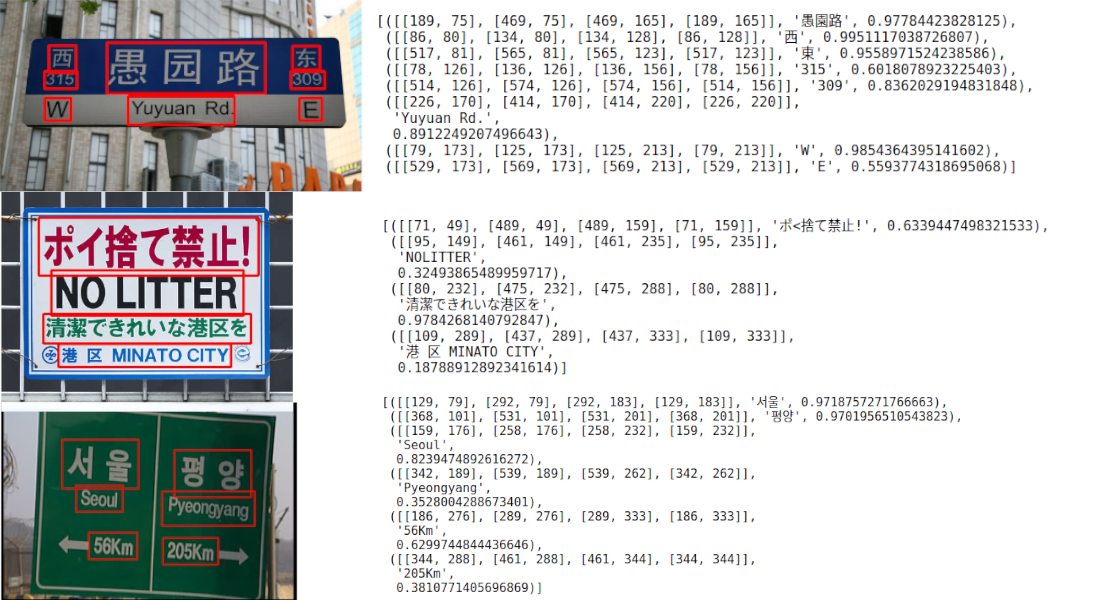

Output will be in list format, each item represents bounding box, text and confident level, respectively.

[([[1344, 439], [2168, 439], [2168, 580], [1344, 580]], 'ใจเด็ด', 0.4542357623577118),

([[1333, 562], [2169, 562], [2169, 709], [1333, 709]], 'Project', 0.9557611346244812)]In case you do not have GPU or your GPU has low memory, you can run it in CPU mode by adding gpu = False

reader = easyocr.Reader(['th','en'], gpu = False)There are optional arguments for readtext function, decoder can be 'greedy'(default), 'beamsearch', or 'wordbeamsearch'. For 'beamsearch' and 'wordbeamsearch', you can also set beamWidth (default=5). Bigger number will be slower but can be more accurate. For multiprocessing, you can set workers and batch_size. Current version converts image into grey scale for recognition model, so contrast can be an issue. You can try playing with contrast_ths, adjust_contrast and filter_ths.

- Language packs: Russian-based languages + Arabic + etc.

- Language model for better decoding

- Better documentation and api

This project is based on researches/codes from several papers/open-source repositories.

Detection part is using CRAFT algorithm from this official repository and their paper.

Recognition model is CRNN (paper). It is composed of 3 main components, feature extraction (we are currently using Resnet), sequence labeling (LSTM) and decoding (CTC). Training pipeline for recognition part is a modified version from this repository.

Beam search code is based on this repository and his blog.

And good read about CTC from distill.pub here.