In this lab, you'll explore applying several types of kernels on some more visual data. At the end of the lab, you'll then apply your knowledge of SVMs to a real-world dataset!

In this lab you will:

- Create and evaluate a non-linear SVM model in scikit-learn using real-world data

- Interpret the prediction results of an SVM model by creating visualizations

To start, reexamine the final datasets from the previous lab:

from sklearn.datasets import make_blobs

from sklearn.datasets import make_moons

import matplotlib.pyplot as plt

%matplotlib inline

from sklearn import svm

from sklearn.model_selection import train_test_split

import numpy as np

plt.figure(figsize=(10, 4))

plt.subplot(121)

plt.title('Four Blobs')

X_3, y_3 = make_blobs(n_samples=100, n_features=2, centers=4, cluster_std=1.6, random_state=123)

plt.scatter(X_3[:, 0], X_3[:, 1], c=y_3, s=25)

plt.subplot(122)

plt.title('Two Moons with Substantial Overlap')

X_4, y_4 = make_moons(n_samples=100, shuffle=False , noise=0.3, random_state=123)

plt.scatter(X_4[:, 0], X_4[:, 1], c=y_4, s=25)

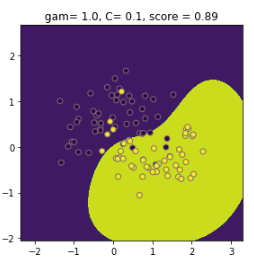

plt.show()Recall how a radial basis function kernel has 2 hyperparameters: C and gamma. To further investigate tuning, you'll generate 9 subplots with varying parameter values and plot the resulting decision boundaries. Take a look at this example from scikit-learn for inspiration. Each of the 9 plots should look like this:

Note that the score represents the percentage of correctly classified instances according to the model.

C_range = np.array([0.1, 1, 10])

gamma_range = np.array([0.1, 1, 100])

param_grid = dict(gamma=gamma_range, C=C_range)

details = []

# Create a loop that builds a model for each of the 9 combinations# Prepare your data for plotting# Plot the prediction results in 9 subplots Repeat what you did before but now, use decision_function() instead of predict(). What do you see?

# Plot the decision function results in 9 subplotsRecall that the polynomial kernel has 3 hyperparameters:

-

$\gamma$ , which can be specified using parametergamma -

$r$ , which can be specified using parametercoef0 -

$d$ , which can be specified using parameterdegree

Build 8 different plots using all the possible combinations:

-

$r= 0.1$ and$2$ -

$\gamma= 0.1$ and$1$ -

$d= 3$ and$4$

Note that decision_function() cannot be used on a classifier with more than two classes, so simply use predict() again.

r_range = np.array([0.1, 2])

gamma_range = np.array([0.1, 1])

d_range = np.array([3, 4])

param_grid = dict(gamma=gamma_range, degree=d_range, coef0=r_range)

details = []

# Create a loop that builds a model for each of the 8 combinations# Prepare your data for plotting# Plot the prediction results in 8 subplots Build a support vector machine using the Sigmoid kernel.

Recall that the sigmoid kernel has 2 hyperparameters:

-

$\gamma$ , which can be specified using parametergamma -

$r$ , which can be specified using parametercoef0

Look at 9 solutions using the following values for

-

$\gamma$ = 0.001, 0.01, and 0.1 -

$r$ = 0.01, 1, and 10

# Create a loop that builds a model for each of the 9 combinations# Prepare your data for plotting# Plot the prediction results in 9 subplots - The polynomial kernel is very sensitive to the hyperparameter settings. Especially when setting a "wrong" gamma - this can have a dramatic effect on the model performance

- Our experiments with the Polynomial kernel were more successful

Explore the same parameters you did before when exploring polynomial kernel

- Perform a train-test split. Assign 33% of the data to the test set and set the

random_stateto 123 - Train 8 models using the training set for each combination of different parameters

- Plot the results as above, both for the training and test sets

- Make some notes for yourself on training vs test performance and select an appropriate model based on these results

# Perform a train-test split, then create a loop that builds a model for each of the 8 combinations

X_train, X_test, y_train, y_test = None

# Create a loop that builds a model for each of the 8 combinations

r_range = np.array([0.1, 2])

gamma_range = np.array([0.1, 1])

d_range = np.array([3, 4])# Prepare your data for plotting# Plot the prediction results in 8 subplots on the training set # Now plot the prediction results for the test setUntil now, you've only explored datasets with two features to make it easy to visualize the decision boundary. Remember that you can use Support Vector Machines on a wide range of classification datasets, with more than two features. While you will no longer be able to visually represent decision boundaries (at least not if you have more than three feature spaces), you'll still be able to make predictions.

To do this, you'll use the salaries dataset again (in 'salaries_final.csv').

This dataset has six predictors:

-

Age: continuous -

Education: Categorical - Bachelors, Some-college, 11th, HS-grad, Prof-school, Assoc-acdm, Assoc-voc, 9th, 7th-8th, 12th, Masters, 1st-4th, 10th, Doctorate, 5th-6th, Preschool -

Occupation: Categorical - Tech-support, Craft-repair, Other-service, Sales, Exec-managerial, Prof-specialty, Handlers-cleaners, Machine-op-inspct, Adm-clerical, Farming-fishing, Transport-moving, Priv-house-serv, Protective-serv, Armed-Forces -

Relationship: Categorical - Wife, Own-child, Husband, Not-in-family, Other-relative, Unmarried -

Race: Categorical - White, Asian-Pac-Islander, Amer-Indian-Eskimo, Other, Black -

Sex: Categorical - Female, Male

Simply run the code below to import and preview the dataset.

import statsmodels as sm

from sklearn.preprocessing import StandardScaler

from sklearn.linear_model import LogisticRegression

import pandas as pd

salaries = pd.read_csv('salaries_final.csv', index_col=0)

salaries.head()The following cell creates dummy variables for all categorical columns and splits the data into target and predictor variables.

# Create dummy variables and

# Split data into target and predictor variables

target = pd.get_dummies(salaries['Target'], drop_first=True)

xcols = salaries.columns[:-1]

data = pd.get_dummies(salaries[xcols], drop_first=True)Now build a simple linear SVM using this data. Note that using SVC, some slack is automatically allowed, so the data doesn't have to perfectly linearly separable.

- Create a train-test split of 75-25. Set the

random_stateto 123 - Standardize the data

- Fit an SVM model, making sure that you set "probability = True"

- After you run the model, calculate the classification accuracy score on both the test set

# Split the data into a train and test set

X_train, X_test, y_train, y_test = None# Standardize the dataWarning: It takes quite a while to build the model! The score is slightly better than the best result obtained using decision trees, but at the cost of computational resources. Changing kernels can make computation times even longer.

# Fit SVM model

# ⏰ This cell may take several minutes to run# Calculate the classification accuracy scoreGreat, you've got plenty of practice with Support Vector Machines! In this lab, you explored kernels and applying SVMs to real-life data!