This repository contains the PyTorch implementation of Selective Classification used in the following papers:

- NeurIPS'2020 paper Self-Adaptive Training: beyond Empirical Risk Minimization,

- Journal version Self-Adaptive Training: Bridging the Supervised and Self-Supervised Learning.

Self-adaptive training significantly improves the generalization of deep networks under noise and enhances self-supervised representation learning. It also advances the state of the art in learning with noisy labels, adversarial training, and linear evaluation of the learned representations.

- 2021.10: We have released the code for Selective Classification.

- 2021.01: We have released the journal version of Self-Adaptive Training, a unified algorithm for both supervised and self-supervised learning. Code for self-supervised learning will be available soon.

- 2020.09: Our work has been accepted at NeurIPS'2020.

- Python >= 3.6

- PyTorch >= 1.0

- CUDA

- NumPy

`main.py` contains the training and evaluation functions for the standard training setting.

- Training and evaluation using the default parameters

  We provide our training script in `train.sh`. For example, the following command trains the default model (i.e., VGG16-BN) on the CIFAR10 dataset:

  `$ bash train.sh`

- Additional arguments

  - `arch`: the architecture of the backbone model, e.g., `vgg16_bn`
  - `dataset`: the training dataset, e.g., `cifar10`
  - `loss`: the loss function for training, e.g., `sat`
  - `sat-momentum`: the momentum term of our approach
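The `sat-momentum` argument controls how quickly the training targets follow the model's own predictions. As a rough, framework-agnostic illustration, assuming the formulation we understand from the NeurIPS'2020 paper (per-sample soft targets updated by an exponential moving average, plus an extra (c+1)-th "abstain" output that receives the probability mass not assigned to the labelled class), the NumPy sketch below computes one loss evaluation. The function name `sat_selective_loss`, the per-sample target array, and normalising over the c real classes before the update are illustrative assumptions, not the code in this repository.

```python
import numpy as np


def log_softmax(logits):
    # Row-wise log-softmax, shifted by the row max for numerical stability.
    z = logits - logits.max(axis=1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=1, keepdims=True))


def sat_selective_loss(logits, labels, targets, momentum):
    """One SAT-style loss evaluation for selective classification (hypothetical sketch).

    logits:   (n, c+1) network outputs; the last column is the extra "abstain" class.
    labels:   (n,) integer class labels in [0, c).
    targets:  (n, c) running soft targets, initialised to one-hot labels.
    momentum: plays the role of the `sat-momentum` argument (weight kept on old targets).
    Returns (mean loss, updated targets).
    """
    logits = np.asarray(logits, dtype=float)
    n, c = targets.shape
    rows = np.arange(n)
    # Model confidence over the c real classes only (abstain logit excluded).
    # In an autograd framework these probabilities would be detached.
    probs = np.exp(log_softmax(logits[:, :c]))
    # Exponential moving average of the model's predictions.
    targets = momentum * targets + (1.0 - momentum) * probs
    # Soft label: t_y on the labelled class, 1 - t_y on the abstain class.
    soft = np.zeros(logits.shape)
    soft[rows, labels] = targets[rows, labels]
    soft[:, -1] = 1.0 - targets[rows, labels]
    # Cross-entropy against the soft label over all c+1 outputs:
    # -[t_y * log p_y + (1 - t_y) * log p_abstain], averaged over the batch.
    loss = -(soft * log_softmax(logits)).sum(axis=1).mean()
    return loss, targets


# Usage: 3 samples, c = 3 real classes, so the network has c + 1 = 4 outputs.
labels = np.array([0, 2, 1])
targets = np.eye(3)[labels]  # one-hot initialisation
logits = np.random.default_rng(0).normal(size=(3, 4))
loss, targets = sat_selective_loss(logits, labels, targets, momentum=0.9)
```

Setting `momentum=1.0` keeps the one-hot targets fixed, so the loss reduces to plain cross-entropy on the labelled class over the c+1 outputs; smaller values let the targets drift towards the model's predictions, shifting weight onto the abstain output for samples the model finds hard.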

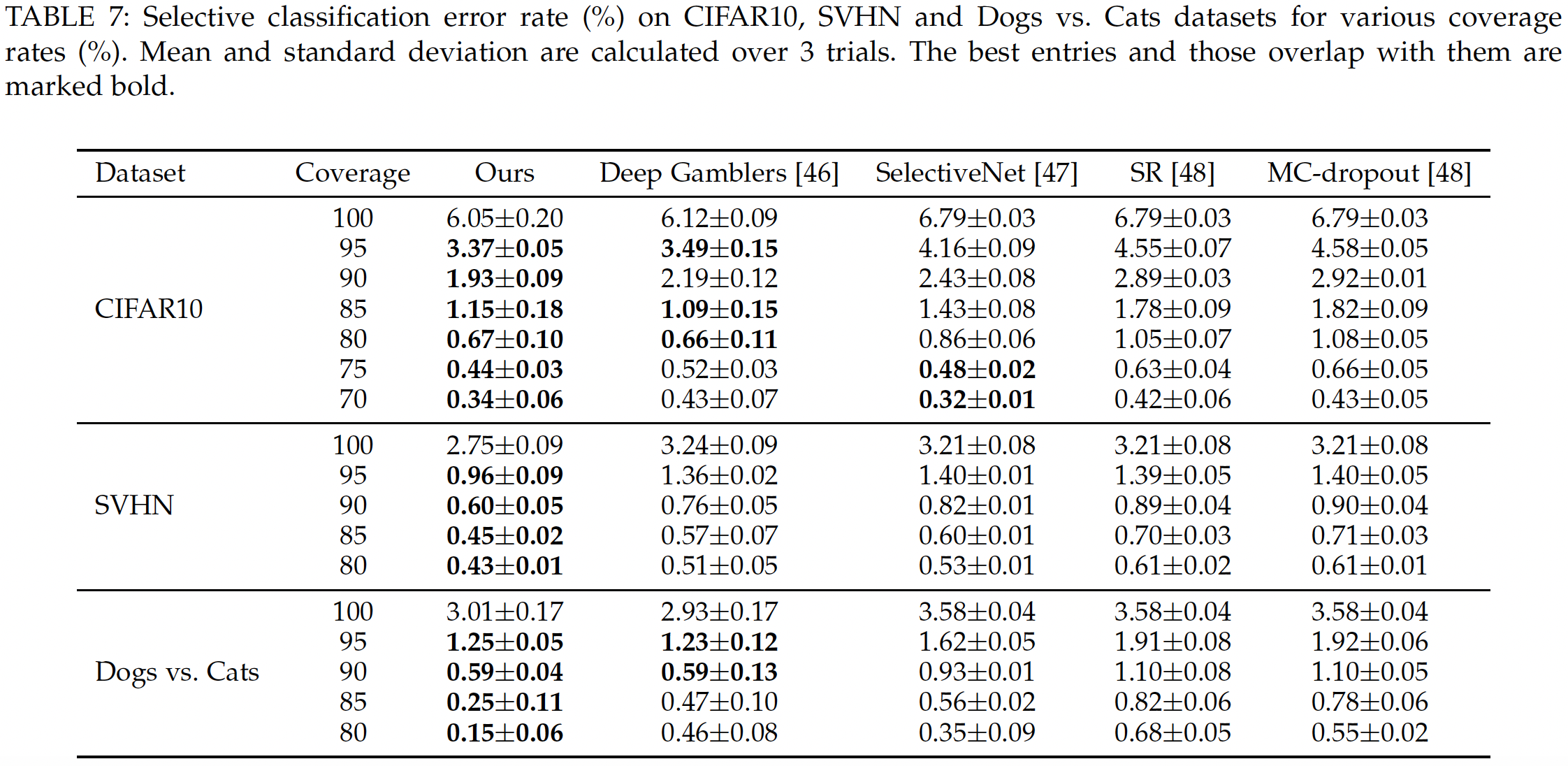

Self-Adaptive Training vs. prior state-of-the-art methods on selective classification at various coverage rates.
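The figure compares methods at several coverage rates, i.e., the fraction of test samples the model does not reject. As a hedged illustration of this metric (not this repository's evaluation code), the sketch below assumes the last network output is an "abstain" class, uses its softmax probability as the abstention score, keeps the `coverage` fraction of samples with the lowest scores, and reports the error on the kept samples. The helper names `predict_with_abstention` and `selective_error` are hypothetical.

```python
import numpy as np


def predict_with_abstention(logits):
    """Split (n, c+1) logits into a class prediction and an abstention score.

    Assumes the last column is the extra "abstain" output.
    """
    z = logits - logits.max(axis=1, keepdims=True)  # stabilise the softmax
    probs = np.exp(z) / np.exp(z).sum(axis=1, keepdims=True)
    preds = probs[:, :-1].argmax(axis=1)  # best of the c real classes
    abstain_scores = probs[:, -1]  # higher = more inclined to abstain
    return preds, abstain_scores


def selective_error(abstain_scores, correct, coverage):
    """Error rate on the `coverage` fraction of samples with the lowest abstention scores."""
    n = len(abstain_scores)
    k = max(1, int(round(coverage * n)))  # number of samples kept
    keep = np.argsort(abstain_scores, kind="stable")[:k]  # ties broken by sample order
    return 1.0 - np.asarray(correct, dtype=float)[keep].mean()


# Usage: 4 test samples; the second one is both misclassified and the least confident.
scores = np.array([0.1, 0.9, 0.2, 0.8])
correct = np.array([True, False, True, True])
for cov in (1.0, 0.75, 0.5):
    print(cov, selective_error(scores, correct, cov))
```

Sweeping `coverage` from 1.0 downwards traces out a risk-coverage curve; a good abstention score rejects the misclassified samples first, so the error drops quickly as coverage decreases.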

For technical details, please check the conference version or the journal version of our paper.

    @inproceedings{huang2020self,
      title={Self-Adaptive Training: beyond Empirical Risk Minimization},
      author={Huang, Lang and Zhang, Chao and Zhang, Hongyang},
      booktitle={Advances in Neural Information Processing Systems},
      volume={33},
      year={2020}
    }

    @article{huang2021self,
      title={Self-Adaptive Training: Bridging the Supervised and Self-Supervised Learning},
      author={Huang, Lang and Zhang, Chao and Zhang, Hongyang},
      journal={arXiv preprint arXiv:2101.08732},
      year={2021}
    }

This code is based on:

We thank the authors for sharing their code.

If you have any questions about this code, feel free to open an issue or contact laynehuang@pku.edu.cn.