As described in the Azure ML documentation, you can use the azureml-ai-monitoring Python package to collect real-time inference data received and produced by a machine learning model deployed to an Azure ML managed online endpoint.

This repo provides all the required resources to deploy and test a Data Collector solution end-to-end.

Successful deployment depends on the following three files, borrowed from the original Azure ML examples repo: the inference model, the environment configuration and the scoring script.

sklearn_regression_model.pkl is a sample scikit-learn regression model in pickle format. We'll reuse it as is.

conda.yaml is our Conda file, which defines the runtime environment for our machine learning model. It has been modified to include the following Azure ML monitoring Python package.

azureml-ai-monitoring

score_datacollector.py is a Python script used by the managed online endpoint to pass data to our inference model and retrieve its predictions. This script was updated to enable data collection operations.

The Collector and BasicCorrelationContext classes are imported, along with the pandas package. Including pandas is crucial because, at the time of writing, Data Collector could directly log only DataFrames.

from azureml.ai.monitoring import Collector

from azureml.ai.monitoring.context import BasicCorrelationContext

import pandas as pd

The init function initialises the global Data Collector variables.

global inputs_collector, outputs_collector, artificial_context

inputs_collector = Collector(name='model_inputs')

outputs_collector = Collector(name='model_outputs')

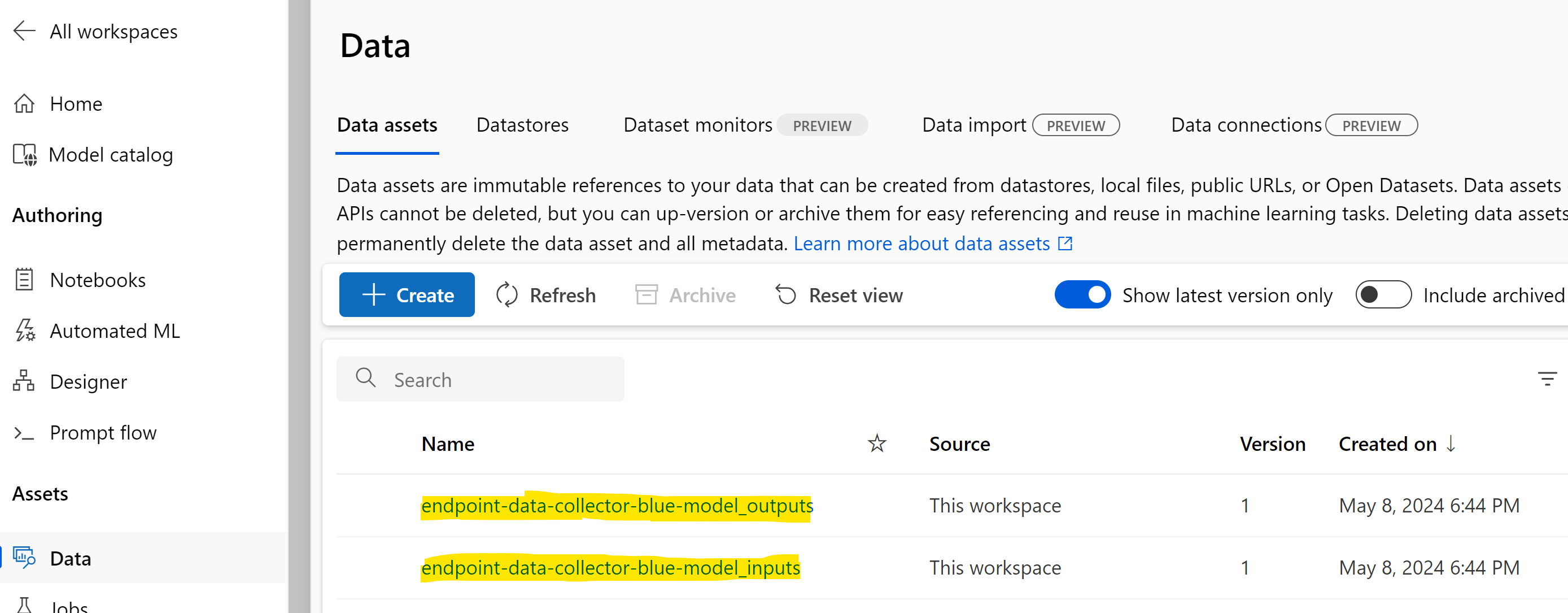

artificial_context = BasicCorrelationContext(id='Laziz_Demo')"model_inputs" and "model_outputs" are reserved Data Collector names, used to auto-register relevant Azure ML data assets.

The run function contains two data processing blocks. First, we convert the input inference data into a pandas DataFrame to log it along with our correlation context.

input_df = pd.DataFrame(data)

context = inputs_collector.collect(input_df, artificial_context)

The same operation is then performed with the model's prediction to log it in the Data Collector's output.

output_df = pd.DataFrame(result)

outputs_collector.collect(output_df, context)

To deploy and test Data Collector, you can execute the cells in the provided Jupyter notebook.
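Before deploying, the init/run flow of the scoring script can be rehearsed locally. The sketch below does not use the real library: `FakeCollector` and `FakeContext` are hypothetical stand-ins that mimic only the call shape shown in the scoring script (collect returns the context it logged under), plain lists of dicts stand in for DataFrames, and the "model" is a placeholder sum. It only illustrates how one correlation context ties the logged inputs to the logged outputs.

```python
import uuid


class FakeContext:
    """Hypothetical stand-in for BasicCorrelationContext: it only carries an id."""

    def __init__(self, id=None):
        self.id = id or str(uuid.uuid4())


class FakeCollector:
    """Hypothetical stand-in for Collector: keeps records in memory."""

    def __init__(self, name):
        self.name = name
        self.records = []

    def collect(self, data, correlation_context=None):
        # Reuse the caller's context, or create a new one, then hand it back
        context = correlation_context or FakeContext()
        self.records.append({"correlationid": context.id, "data": data})
        return context


def init():
    global inputs_collector, outputs_collector, artificial_context
    inputs_collector = FakeCollector(name="model_inputs")
    outputs_collector = FakeCollector(name="model_outputs")
    artificial_context = FakeContext(id="Laziz_Demo")


def run(data):
    # Log the inputs under the demo context and keep the returned context
    context = inputs_collector.collect(data, artificial_context)
    # Placeholder "model": sums the feature values of each row
    result = [{"prediction": sum(row.values())} for row in data]
    # Log the outputs under the same context so both sides can be paired later
    outputs_collector.collect(result, context)
    return result


init()
run([{"x1": 1.0, "x2": 2.0}])
```

After the call, both in-memory collectors hold one record carrying the same correlation id, which is the property the real input and output collections rely on when their rows are matched.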

You need to set the values of your Azure subscription, resource group and Azure ML workspace name.

subscription_id = "<YOUR_AZURE_SUBSCRIPTION>"

resource_group_name = "<YOUR_AZURE_ML_RESOURCE_GROUP>"

workspace_name = "<YOUR_AZURE_ML_WORKSPACE>"

You may upload a local model for initial testing (Option 1).

model = Model(path="./model/sklearn_regression_model.pkl")

However, the recommended and more robust option is to register the model in your Azure ML workspace (Option 2), as it provides better management control, avoids re-uploading the model and makes the test results easier to reproduce.

file_model = Model(

path="./model/",

type=AssetTypes.CUSTOM_MODEL,

name="scikit-model",

description="SciKit model created from local file",

)

ml_client.models.create_or_update(file_model)

Ensure that you refer to the correct versions of your registered inference model and environment.

model = "scikit-model:1"

env = "azureml:scikit-env:2"

You need to explicitly enable both the input and output collectors referenced in the scoring script.

collections = {

'model_inputs': DeploymentCollection(

enabled="true",

),

'model_outputs': DeploymentCollection(

enabled="true",

)

}

data_collector = DataCollector(collections=collections)

These values can then be passed to the data_collector parameter of ManagedOnlineDeployment.

deployment = ManagedOnlineDeployment(

...

data_collector=data_collector

)

Once the inference model is deployed to the managed online endpoint, you can test data logging with the provided sample-request.json file.

ml_client.online_endpoints.invoke(

endpoint_name=endpoint_name,

deployment_name="blue",

request_file="./sample-request.json",

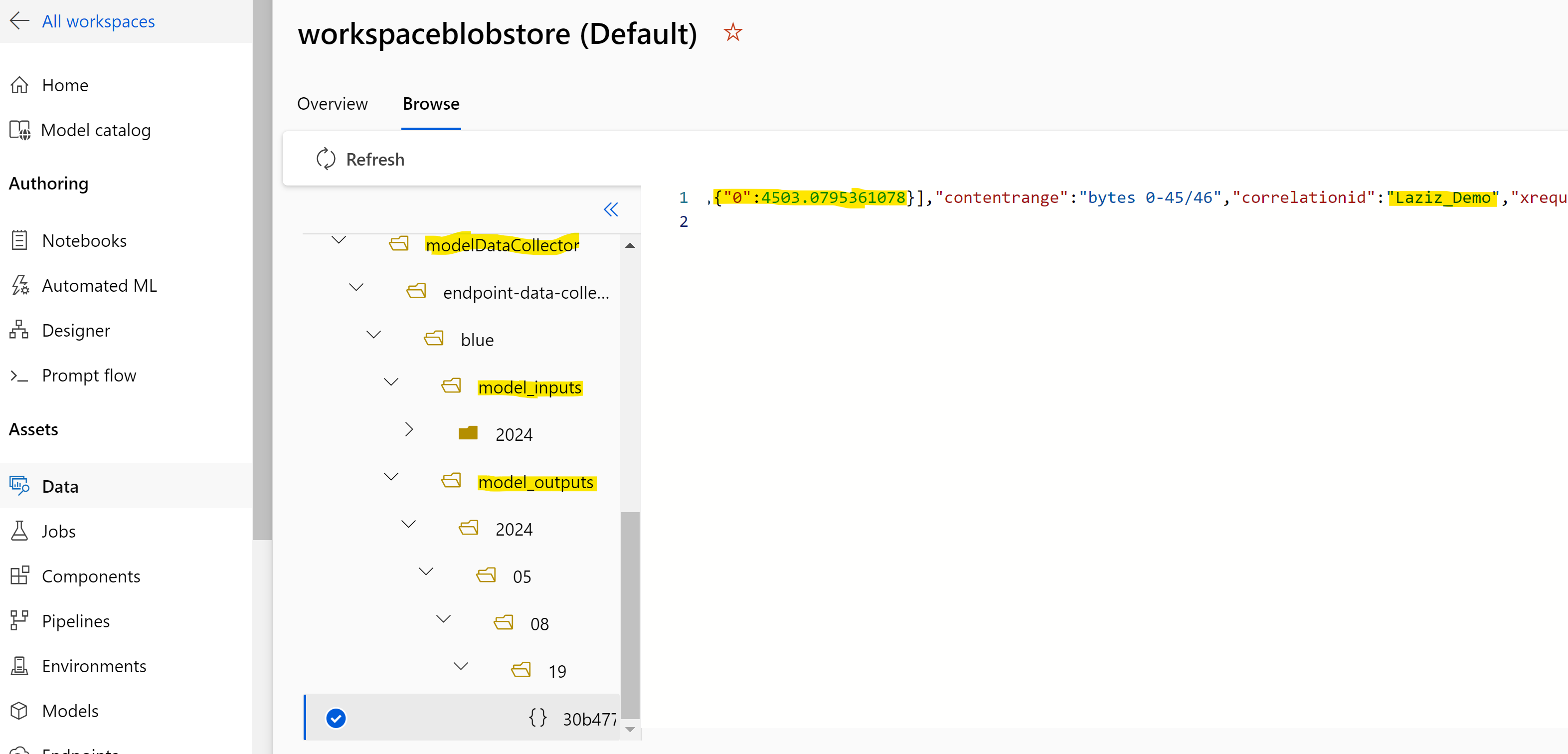

)Logged inference data can be found in workspaceblobstore (Default), unless you define custom paths for the input and output data collectors in Step 2.4 above.
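As a rough guide to where to look, the sketch below builds the folder prefix under which one hour of a collection's data is expected to land. It assumes the default layout described in the Azure ML data collection documentation (`modelDataCollector/{endpoint_name}/{deployment_name}/{collection_name}/{yyyy}/{MM}/{dd}/{HH}/`) and that the date parts are in UTC. The helper name `default_collection_prefix` and the endpoint name `my-endpoint` are hypothetical, so verify the actual path in your own workspace.

```python
from datetime import datetime, timezone


def default_collection_prefix(endpoint_name, deployment_name, collection_name, when):
    """Build the assumed default blob folder for one hour of collected data.

    `when` must be a timezone-aware datetime; it is converted to UTC
    (an assumption about how the hourly folders are named).
    """
    when_utc = when.astimezone(timezone.utc)
    return (
        "azureml://datastores/workspaceblobstore/paths/modelDataCollector/"
        f"{endpoint_name}/{deployment_name}/{collection_name}/"
        f"{when_utc:%Y/%m/%d/%H}/"
    )


# Example: where the inputs logged by the "blue" deployment would be expected
prefix = default_collection_prefix(
    endpoint_name="my-endpoint",  # hypothetical endpoint name
    deployment_name="blue",
    collection_name="model_inputs",
    when=datetime(2024, 1, 5, 9, 30, tzinfo=timezone.utc),
)
print(prefix)
```

The same helper called with `model_outputs` gives the matching folder for the predictions, which makes it easier to compare both collections for the same hour.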