Official Implementation for Agile But Safe: Learning Collision-Free High-Speed Legged Locomotion.

Robotics: Science and Systems (RSS) 2024

Tairan He*, Chong Zhang*, Wenli Xiao, Guanqi He, Changliu Liu, Guanya Shi

This codebase is released under the CC BY-NC 4.0 license, with licenses inherited from Legged Gym and RSL RL (ETH Zurich, Nikita Rudin, and NVIDIA CORPORATION & AFFILIATES). You may not use the material for commercial purposes, e.g., to make demos to advertise your commercial products.

Please read through the whole README.md before cloning the repo.

Note: Before running our code, it's highly recommended to first play with RSL's Legged Gym version to get a basic understanding of the Isaac-LeggedGym-RslRL framework.


Create the environment and install torch:

```bash
conda create -n xxx python=3.8  # or use virtual environment/docker

pip3 install torch torchvision torchaudio --extra-index-url https://download.pytorch.org/whl/cu116
# used version during this work: torch==2.0.1 torchvision==0.15.2 torchaudio==2.0.2
# for older cuda ver:
pip3 install torch==1.10.0+cu113 torchvision==0.11.1+cu113 torchaudio==0.10.0+cu113 -f https://download.pytorch.org/whl/cu113/torch_stable.html
```

Install Isaac Gym Preview 4 from https://developer.nvidia.com/isaac-gym.

Unzip the files to a folder, then install with pip:

```bash
cd isaacgym/python && pip install -e .
```

Check that it is correctly installed by running an example:

```bash
cd examples && python 1080_balls_of_solitude.py
```


Clone this codebase and install our `rsl_rl` in the training folder:

```bash
pip install -e rsl_rl
```


Install our `legged_gym`:

```bash
pip install -e legged_gym
```

Ensure you have installed the following packages:

- pip install numpy==1.21 (must be >1.20 and <1.24)

- pip install tensorboard

- pip install setuptools==59.5.0
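Before moving on to training, a quick sanity check can catch a missing package or an out-of-range numpy. The sketch below is an illustration, not part of the repo: the `REQUIRED` list is an assumption based on the steps above, and the numpy check reads the ">1.20, <1.24" constraint at the minor-release level (1.21.x to 1.23.x). It only locates packages with `importlib.util.find_spec`, so nothing (including `isaacgym`) is actually imported.

```python
import importlib.util
from importlib.metadata import PackageNotFoundError, version

# Assumed top-level import names after following the steps above.
REQUIRED = ["isaacgym", "rsl_rl", "legged_gym", "numpy", "tensorboard"]


def parse_version(text):
    """'1.21.6' -> (1, 21, 6); stops at the first piece without leading digits."""
    parts = []
    for piece in text.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break  # drop suffixes such as 'rc1'
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def numpy_version_ok(text):
    """Constraint from this README: numpy > 1.20 and < 1.24 (1.21.x to 1.23.x)."""
    return (1, 20) < parse_version(text)[:2] < (1, 24)


def missing_packages(names):
    """Return the names that cannot be located on the import path."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    print("missing:", missing_packages(REQUIRED) or "none")
    try:
        installed = version("numpy")
        status = "ok" if numpy_version_ok(installed) else "OUT OF RANGE"
        print("numpy", installed, status)
    except PackageNotFoundError:
        print("numpy is not installed")
```

Run it inside the conda environment you created; any name listed under "missing" points back to the corresponding install step.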


Try training.

You can use `--headless` to disable the GUI; press `v` to pause/resume the GUI.

For Go1, in `legged_gym/legged_gym`:

```bash
# agile policy
python scripts/train.py --task=go1_pos_rough --max_iterations=4000
# agile policy, Lagrangian version
python scripts/train.py --task=go1_pos_rough_ppo_lagrangian --max_iterations=4000
# recovery policy
python scripts/train.py --task=go1_rec_rough --max_iterations=1000
```
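If you want to queue the agile and recovery trainings back to back on a headless machine, a small wrapper can build the same commands. This is a hypothetical helper, not a script shipped with the repo; it only uses the `--task`, `--max_iterations`, and `--headless` flags shown above and defaults to a dry run that just prints the commands.

```python
import shlex
import subprocess
import sys

# (task, max_iterations) pairs taken from the commands above.
# Swap in "go1_pos_rough_ppo_lagrangian" to train the Lagrangian agile variant.
RUNS = [
    ("go1_pos_rough", 4000),  # agile policy
    ("go1_rec_rough", 1000),  # recovery policy
]


def train_command(task, max_iterations, headless=True):
    """Build the argv list for one scripts/train.py invocation."""
    cmd = [sys.executable, "scripts/train.py",
           f"--task={task}", f"--max_iterations={max_iterations}"]
    if headless:
        cmd.append("--headless")  # disable the GUI
    return cmd


def run_all(runs, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for task, iterations in runs:
        cmd = train_command(task, iterations)
        print(shlex.join(cmd))
        if not dry_run:
            # check=True stops the queue as soon as one run fails.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Run from legged_gym/legged_gym; pass dry_run=False to actually train.
    run_all(RUNS, dry_run=True)
```

Running the trainings sequentially, rather than in parallel, avoids two Isaac Gym processes competing for the same GPU.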

Play the trained policy:

```bash
python scripts/play.py --task=go1_pos_rough
python scripts/play.py --task=go1_rec_rough
```


Use the testbed, and train/test the Reach-Avoid (RA) network:

```bash
# try the testbed
python scripts/testbed.py --task=go1_pos_rough [--load_run=xxx] --num_envs=1
# train RA (be patient, it will take time to converge)
# make sure you have exported at least one policy with play.py so the exported folder exists
python scripts/testbed.py --task=go1_pos_rough --num_envs=1000 --headless --trainRA
# test RA (only after you have trained one RA)
python scripts/testbed.py --task=go1_pos_rough --num_envs=1 --testRA
# evaluate
python scripts/testbed.py --task=go1_pos_rough --num_envs=1000 --headless [--load_run=xxx] [--testRA]
```

Sample a dataset for ray-prediction network training:

```bash
python scripts/camrec.py --task=go1_pos_rough --num_envs=3
```

- Tips 1: You can edit the `shift` value in Line 93 and the `log_root` in Line 87 to collect different dataset files in parallel (you can then merge them by simply moving the files), and manually change the obstacles in `env_cfg.asset.object_files` in Line 63.

- Tips 2: After collecting the data, there is template code in `train_depth_resnet.py` to train the ray-prediction network, but you are highly encouraged to use whatever you like for training CV models!

- Tips 3: You can change the camera configs (resolution, position, FOV, and depth range) in the config file, Line 151.
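Tips 1 says parallel collections can be merged by moving files. A minimal sketch of that merge is below, assuming each parallel run wrote plain files into its own `log_root` folder; the folder and file names are hypothetical, since the actual layout written by `camrec.py` is not described here. Name clashes get the source folder name as a prefix instead of silently overwriting.

```python
import shutil
from pathlib import Path


def merge_datasets(source_dirs, target_dir):
    """Move every file from each source folder into target_dir.

    On a name clash, the file is renamed to '<source folder>_<file name>'
    so two runs that both wrote e.g. 'data_0.pt' are both kept.
    Returns the list of destination paths.
    """
    target = Path(target_dir)
    target.mkdir(parents=True, exist_ok=True)
    moved = []
    for src in map(Path, source_dirs):
        for path in sorted(src.iterdir()):
            if not path.is_file():
                continue  # leave sub-folders untouched
            dest = target / path.name
            if dest.exists():
                dest = target / f"{src.name}_{path.name}"
            if dest.exists():
                raise FileExistsError(f"refusing to overwrite {dest}")
            shutil.move(str(path), str(dest))
            moved.append(dest)
    return moved
```

For example, `merge_datasets(["<log_root_a>", "<log_root_b>"], "<merged_dir>")` with the `log_root` values you used for each run.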


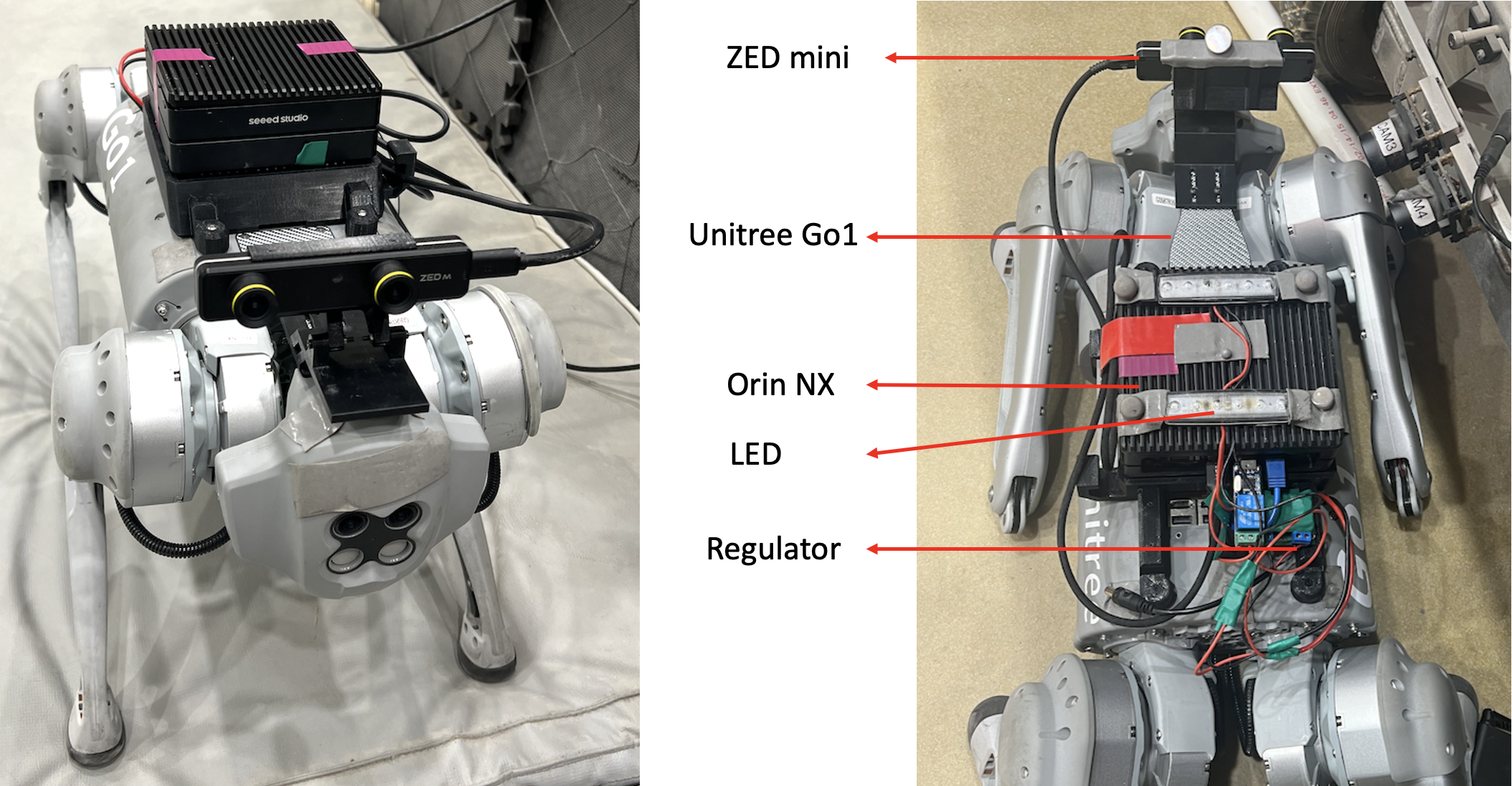

- Robot: Unitree Go1 EDU

- Perception: ZED mini Camera

- Onboard Compute: Orin NX (16GB)

- LED: PSEQT LED Lights

- Power Regulator: Pololu 12V, 15A Step-Down Voltage Regulator D24V150F12

- Orin NX mount: STL-PCMount_v2

- ZED mini mount: STL-CameraSeat and STL-ZEDMountv1

- Unitree Go1 SDK

- ZED SDK

- ROS Noetic

- PyTorch in a Python 3 environment


Low-level control mode for Unitree Go1: press L2+A, then L2+B, then L1+L2+Start.

Network configuration for Orin NX:

- IP: 192.168.123.15

- Netmask: 255.255.255.0
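The netmask 255.255.255.0 is a /24 prefix, so the Orin NX can talk directly to any host whose address starts with 192.168.123. The sketch below checks that with Python's standard `ipaddress` module; the peer address 192.168.123.161 is only an example chosen for illustration, not a documented Go1 address.

```python
import ipaddress

# Static address and netmask for the Orin NX, as listed above.
ORIN_NX = ipaddress.ip_interface("192.168.123.15/255.255.255.0")


def same_subnet(interface, peer):
    """True if `peer` lies in the same subnet as `interface` (no router needed)."""
    return ipaddress.ip_address(peer) in interface.network


if __name__ == "__main__":
    print(ORIN_NX.with_prefixlen)  # 192.168.123.15/24
    print(ORIN_NX.network)         # 192.168.123.0/24
    print(same_subnet(ORIN_NX, "192.168.123.161"))  # example peer address
```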


Convert the `.pt` files of the agile/recovery policies and the RA value network to `.onnx` files using `src/abs_src/onnx_model_converter.py`.

Modify the paths of the (`.onnx` or `.pt`) models in `publisher_depthimg_linvel.py` and `depth_obstacle_depth_goal_ros.py`.

- `roscore`: Activate the ROS Noetic environment

- `cd src/abs_src/`: Enter the ABS scripts folder

- `python publisher_depthimg_linvel.py`: Publish ray-prediction results and odometry results for navigation goals

- `python led_control_ros.py`: Control the two LED lights based on RA values

- `python depth_obstacle_depth_goal_ros.py`: Activate the Go1 using the agile policy and the recovery policy

- B: Emergency stop

- Default: Go1 running ABS based on the goal command

- L2: Turn left

- R2: Turn right

- Down: Back

- Up: Stand

- A: Turn around

- X: Back to initial position
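The deployment scripts above run both the agile and the recovery policy and drive the LEDs from RA values. To make the idea of RA-based gating concrete, here is a minimal sketch of a single decision step. Everything in it is an assumption for illustration: the threshold value, the convention that larger RA values mean higher predicted risk, and the LED state names; none of it is taken from `depth_obstacle_depth_goal_ros.py` or `led_control_ros.py`.

```python
from dataclasses import dataclass

# HYPOTHETICAL threshold: the real value and sign convention used by the
# deployment scripts are not documented in this README.
RA_THRESHOLD = 0.0


@dataclass(frozen=True)
class SafetyDecision:
    policy: str  # which policy to run: "agile" or "recovery"
    led: str     # hypothetical LED state name, not the real LED interface


def decide(ra_value: float, threshold: float = RA_THRESHOLD) -> SafetyDecision:
    """Pick a policy and an LED state from one RA value.

    Assumption: larger RA values mean higher predicted collision risk, so any
    value strictly above `threshold` hands control to the recovery policy.
    """
    if ra_value > threshold:
        return SafetyDecision(policy="recovery", led="warning")
    return SafetyDecision(policy="agile", led="normal")


if __name__ == "__main__":
    for value in (-0.4, 0.0, 0.2):
        print(value, decide(value))
```

Keeping the rule as a pure function of the RA value makes it easy to test offline before wiring it into a ROS callback.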

- Deployment and Ray-Prediction: Tairan He, tairanh@andrew.cmu.edu

- Policy Learning in Sim: Chong Zhang, chozhang@ethz.ch

- PPO-Lagrangian Implementation: Wenli Xiao, randyxiao64@gmail.com

You can open an issue if you encounter any bugs, except in the following cases:

- If you cannot run the vanilla RSL Legged Gym, please first go to the vanilla Legged Gym repo for help.

- There can be CUDA-related errors when there are too many parallel environments on certain PC+GPU+driver combinations: we cannot solve this, but you can try reducing num_envs.

- Our codebase is only for our hardware system showcased above. We are happy to make it serve as a reference for the community, but we won't tune it for your own robots.

If our work does help you, please consider citing us and the following works:

```bibtex
@inproceedings{AgileButSafe,
  author = {He, Tairan and Zhang, Chong and Xiao, Wenli and He, Guanqi and Liu, Changliu and Shi, Guanya},
  title = {Agile But Safe: Learning Collision-Free High-Speed Legged Locomotion},
  booktitle = {Robotics: Science and Systems (RSS)},
  year = {2024},
}
```

We used code from Legged Gym and RSL RL, based on the paper:

- Rudin, Nikita, et al. "Learning to walk in minutes using massively parallel deep reinforcement learning." CoRL 2022.

Previous works that heavily inspired the policy training designs:

- Rudin, Nikita, et al. "Advanced skills by learning locomotion and local navigation end-to-end." 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2022.

- Zhang, Chong, et al. "Learning Agile Locomotion on Risky Terrains." arXiv preprint arXiv:2311.10484 (2023).

- Zhang, Chong, et al. "Resilient Legged Local Navigation: Learning to Traverse with Compromised Perception End-to-End." ICRA 2024.

Previous works that heavily inspired the RA value design:

- Hsu, Kai-Chieh, et al. "Safety and liveness guarantees through reach-avoid reinforcement learning." RSS 2021.

Previous works that heavily inspired the perception design:

- Hoeller, David, et al. "Anymal parkour: Learning agile navigation for quadrupedal robots." Science Robotics 9.88 (2024): eadi7566.

- Acero, F., K. Yuan, and Z. Li. "Learning Perceptual Locomotion on Uneven Terrains using Sparse Visual Observations." IEEE Robotics and Automation Letters 7.4 (2022): 8611-8618.