Fix the Noise: Disentangling Source Feature for Controllable Domain Translation


Dongyeun Lee, Jae Young Lee, Doyeon Kim, Jaehyun Choi, Jaejun Yoo, Junmo Kim

https://arxiv.org/abs/2303.11545

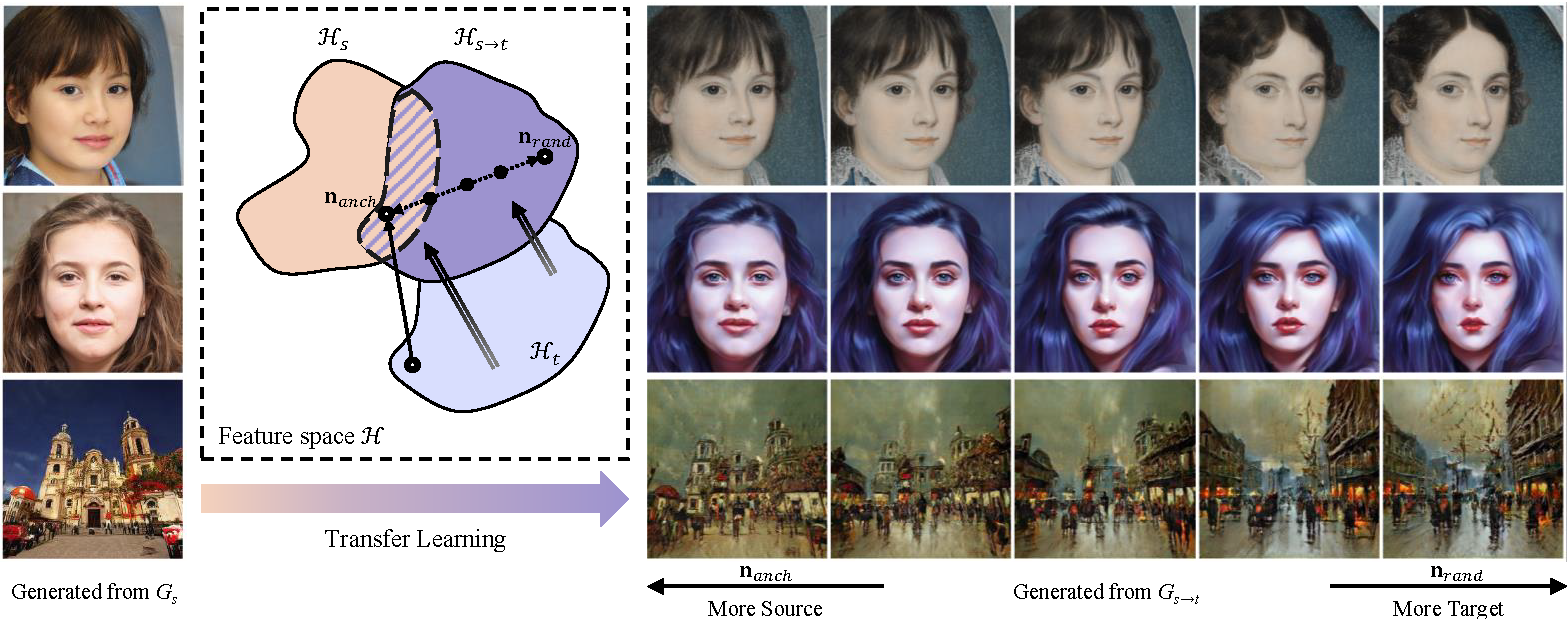

Abstract: Recent studies show strong generative performance in domain translation, especially when transfer learning techniques are applied to an unconditional generator. However, controlling the balance between features of different domains with a single model is still challenging. Existing methods often require additional models, which is computationally demanding and leads to unsatisfactory visual quality. In addition, they support only a restricted number of control steps, which prevents a smooth transition. In this paper, we propose a new approach for high-quality domain translation with better controllability. The key idea is to preserve source features within a disentangled subspace of a target feature space. This allows our method to smoothly control the degree to which it preserves source features while generating images from an entirely new domain using only a single model. Our extensive experiments show that the proposed method can produce more consistent and realistic images than previous works and maintain precise controllability over different levels of transformation.


2023-05-09: Added several useful scripts for inference. For detailed usage, refer to Inference.

Our code is largely based on the official implementation of stylegan2-ada-pytorch. Please refer to the requirements for details.

- Python libraries:

pip install click requests tqdm pyspng ninja imageio-ffmpeg==0.4.3 lpips

- Docker users:

docker build --tag sg2ada:latest .

docker run --gpus all --shm-size 64g -it -v /etc/localtime:/etc/localtime:ro -v /mnt:/mnt -v /data:/data --name sg2ada sg2ada /bin/bash

You can download the pre-trained checkpoints used in our paper:

| Setting | Resolution | Config | Description |
|---|---|---|---|
| FFHQ → MetFaces | 256x256 | paper256 | Initialized from the official FFHQ 256 pre-trained model of stylegan2-ada-pytorch (PyTorch). |
| FFHQ → AAHQ | 256x256 | paper256 | Initialized from the official FFHQ 256 pre-trained model of stylegan2-ada-pytorch (PyTorch). |
| Church → Cityscape | 256x256 | stylegan2 | Initialized from the official LSUN Church config-f pre-trained model of stylegan2 (TensorFlow). |
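The generation commands in this README load the checkpoints from a local pretrained/ folder. A minimal sketch for checking that they are in place before running inference is shown below; the function name check_checkpoints is hypothetical, and the file names are simply the ones used in those generation commands.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report which released checkpoints are present.
# Assumes the .pkl files were saved under ./pretrained/ (or the folder
# passed as $1) with the file names used in this README's commands.
check_checkpoints() {
  local dir="${1:-pretrained}" name missing=0
  for name in \
    metfaces-fm0.05-001612.pkl \
    aahq-fm0.05-010886.pkl \
    wikiart-fm0.05-004032.pkl; do
    if [ -f "$dir/$name" ]; then
      echo "found   $dir/$name"
    else
      echo "missing $dir/$name"
      missing=$((missing + 1))
    fi
  done
  # Return non-zero if anything is missing, so the check can gate a script.
  [ "$missing" -eq 0 ]
}
```

For example, `check_checkpoints && echo ready` only prints "ready" when all three files exist.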

We provide the official dataset download pages and our processing code for reproducibility. You could also use the official processing code in stylegan2-ada-pytorch; however, doing so does not guarantee the reported performance.
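Once the datasets are downloaded, the three per-dataset resize commands listed below can also be run in one pass. This is a minimal sketch, not part of the repository: the function names run and resize_all are hypothetical, and the source/dest paths are the ones used in those commands, so adjust them if you unpacked the data elsewhere.

```shell
#!/usr/bin/env bash
# Hypothetical batch wrapper around dataset_resize.py.
# Set DRY_RUN=1 to print the commands instead of running them.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

resize_all() {
  # Each entry is "source_dir:dest_dir".
  local pair src dest
  for pair in \
    "data/metfaces/images:data/metfaces/images256x256" \
    "data/aahq-dataset/aligned:data/aahq-dataset/images256x256" \
    "data/wikiart_cityscape/images:data/wikiart_cityscape/images256x256"; do
    src="${pair%%:*}"
    dest="${pair#*:}"
    # Skip datasets that have not been downloaded yet (real runs only).
    if [ ! -d "$src" ] && [ "${DRY_RUN:-0}" != "1" ]; then
      echo "skipping $src (not found)" >&2
      continue
    fi
    run python dataset_resize.py --source "$src" --dest "$dest"
  done
}
```

`DRY_RUN=1 resize_all` prints the three commands so they can be checked before anything is written.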

MetFaces: Download the MetFaces dataset and unzip it.

# Resize MetFaces

python dataset_resize.py --source data/metfaces/images --dest data/metfaces/images256x256

AAHQ: Download the AAHQ dataset and process it following the original instructions.

# Resize AAHQ

python dataset_resize.py --source data/aahq-dataset/aligned --dest data/aahq-dataset/images256x256

Wikiart Cityscape: Download the cityscape category from Wikiart and unzip it.

# Resize Wikiart Cityscape

python dataset_resize.py --source data/wikiart_cityscape/images --dest data/wikiart_cityscape/images256x256

The base commands for training a StyleGAN2-ADA network with FixNoise are as follows:

FFHQ → MetFaces

python train.py --outdir=${OUTDIR} --data=${DATADIR} --cfg=paper256 --resume=ffhq256 --fm=0.05

FFHQ → AAHQ

python train.py --outdir=${OUTDIR} --data=${DATADIR} --cfg=paper256 --resume=ffhq256 --fm=0.05

Church → Cityscape

python train.py --outdir=${OUTDIR} --data=${DATADIR} --cfg=stylegan2 --resume=church256 --fm=0.05

Additionally, we provide the detailed training scripts used in our experiments.
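The three base training commands above differ only in --cfg and --resume, while --fm=0.05 is shared. A minimal sketch of a wrapper that selects the right pair per setting is shown below; the function name train_fixnoise and the setting names metfaces, aahq and cityscape are hypothetical, and OUTDIR and DATADIR must still be set by the caller exactly as in the original commands.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper around train.py for the three paper settings.
# Set DRY_RUN=1 to print the command instead of running it.
train_fixnoise() {
  local setting="$1" cfg resume
  case "$setting" in
    metfaces|aahq) cfg=paper256;  resume=ffhq256 ;;
    cityscape)     cfg=stylegan2; resume=church256 ;;
    *) echo "unknown setting: $setting" >&2; return 2 ;;
  esac
  if [ -z "${OUTDIR:-}" ] || [ -z "${DATADIR:-}" ]; then
    echo "set OUTDIR and DATADIR first" >&2
    return 2
  fi
  local cmd=(python train.py --outdir="$OUTDIR" --data="$DATADIR" \
    --cfg="$cfg" --resume="$resume" --fm=0.05)
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

For example, `OUTDIR=runs DATADIR=data/metfaces/images256x256 train_fixnoise metfaces` runs the FFHQ → MetFaces command. Keeping the setting-to-config mapping in one `case` makes it harder to pair a dataset with the wrong source model.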

To generate images interpolated according to different noise inputs, run:

# Generate MetFaces images without truncation

python generate.py --cfg=paper256 --outdir=out --trunc_psi=1 --seeds=865-1000 \
--network=pretrained/metfaces-fm0.05-001612.pkl

# Generate MetFaces images with truncation

python generate.py --cfg=paper256 --outdir=out --trunc_psi=0.7 --trunc_cutoff=8 --seeds=865-1000 \
--network=pretrained/metfaces-fm0.05-001612.pkl

# Generate AAHQ images with truncation

python generate.py --cfg=paper256 --outdir=out --trunc_psi=0.7 --trunc_cutoff=8 --seeds=865-1000 \
--network=pretrained/aahq-fm0.05-010886.pkl

# Generate Wikiart images with truncation

python generate.py --cfg=stylegan2 --outdir=out --trunc_psi=0.7 --trunc_cutoff=8 --seeds=865-1000 \
--network=pretrained/wikiart-fm0.05-004032.pkl

You can change the number of interpolation steps with --interp-step.
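The truncated generation commands above can be looped over all three released checkpoints. This is a minimal sketch under a few assumptions: the function name generate_all is hypothetical, and writing each setting into its own subfolder of out/ is our choice rather than the repository's convention (we assume generate.py creates the output folder if it does not exist).

```shell
#!/usr/bin/env bash
# Hypothetical loop: generate truncated samples (psi 0.7, cutoff 8,
# seeds 865-1000) for each released checkpoint.
# Set DRY_RUN=1 to print the commands instead of running them.
generate_all() {
  local entry name cfg pkl cmd
  for entry in \
    "metfaces paper256 pretrained/metfaces-fm0.05-001612.pkl" \
    "aahq paper256 pretrained/aahq-fm0.05-010886.pkl" \
    "wikiart stylegan2 pretrained/wikiart-fm0.05-004032.pkl"; do
    # Split "name cfg checkpoint" into three variables.
    read -r name cfg pkl <<< "$entry"
    cmd=(python generate.py --cfg="$cfg" --outdir="out/$name" \
      --trunc_psi=0.7 --trunc_cutoff=8 --seeds=865-1000 --network="$pkl")
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "${cmd[*]}"
    else
      # Stop at the first failing run and propagate its exit status.
      "${cmd[@]}" || return
    fi
  done
}
```

Note that the Wikiart checkpoint needs --cfg=stylegan2, matching the Church → Cityscape row of the checkpoint table.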

To find the matching latent code for a given image file, run:

python projector_z.py --outdir=${OUTDIR} --target_dir=${DATADIR} \
--network=https://nvlabs-fi-cdn.nvidia.com/stylegan2-ada-pytorch/pretrained/transfer-learning-source-nets/ffhq-res256-mirror-paper256-noaug.pkl

We modified projector.py to project images into the Z space of StyleGAN2. To use multiple GPUs, add the --gpus argument. You can render the resulting latent vectors by passing --projected-z-dir to generate.py.

# Render an image from projected Z

python generate.py --cfg=paper256 --outdir=out --trunc_psi=0.7 --trunc_cutoff=8 \
--projected-z-dir=./projected --network=pretrained/aahq-fm0.05-010886.pkl

We provide noise interpolation example code in a Jupyter notebook.
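Projection with projector_z.py and rendering with generate.py can be chained into one step. This is a minimal sketch with two labeled assumptions: that projector_z.py takes the source network through --network (as stylegan2-ada-pytorch's projector.py does), and that the folder projector_z.py writes to with --outdir is what generate.py expects for --projected-z-dir. The function name project_and_render is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical pipeline: project a folder of face images into Z using the
# FFHQ 256 source network, then render the codes with a FixNoise checkpoint.
# ASSUMPTION: projector_z.py accepts --network, and its --outdir folder can
# be passed directly to generate.py as --projected-z-dir.
# Set DRY_RUN=1 to print the commands instead of running them.
SOURCE_NET=https://nvlabs-fi-cdn.nvidia.com/stylegan2-ada-pytorch/pretrained/transfer-learning-source-nets/ffhq-res256-mirror-paper256-noaug.pkl

project_and_render() {
  local images="$1" projected="$2"
  local network="${3:-pretrained/aahq-fm0.05-010886.pkl}"
  local project=(python projector_z.py --outdir="$projected" \
    --target_dir="$images" --network="$SOURCE_NET")
  local render=(python generate.py --cfg=paper256 --outdir=out \
    --trunc_psi=0.7 --trunc_cutoff=8 --projected-z-dir="$projected" \
    --network="$network")
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "${project[*]}"
    echo "${render[*]}"
  else
    # Only render if the projection step succeeded.
    "${project[@]}" && "${render[@]}"
  fi
}
```

Because the source network is the FFHQ model and the render uses --cfg=paper256, the optional third argument should be one of the face checkpoints (MetFaces or AAHQ), for example `project_and_render data/faces ./projected pretrained/metfaces-fm0.05-001612.pkl`.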

@InProceedings{Lee_2023_CVPR,
  author    = {Lee, Dongyeun and Lee, Jae Young and Kim, Doyeon and Choi, Jaehyun and Yoo, Jaejun and Kim, Junmo},
  title     = {Fix the Noise: Disentangling Source Feature for Controllable Domain Translation},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  month     = {June},
  year      = {2023},
  pages     = {14224-14234}
}

The majority of FixNoise is licensed under CC-BY-NC; however, portions of this project are available under separate license terms: all code used or modified from stylegan2-ada-pytorch is under the Nvidia Source Code License.