This is the official implementation of GS-LoRA (CVPR 2024). GS-LoRA is an effective, parameter-efficient, data-efficient, and easy-to-implement method for continual forgetting, where selected information is expected to be continuously removed from a pre-trained model while the rest is maintained. The core idea is to combine LoRA with group Lasso to realize fast model editing. For more details, please refer to:

Continual Forgetting for Pre-trained Vision Models [paper] [video] [video in bilibili]

Hongbo Zhao, Bolin Ni, Junsong Fan, Yuxi Wang, Yuntao Chen, Gaofeng Meng, Zhaoxiang Zhang
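As a rough illustration of the core idea above, here is the standard form of the two ingredients. This is a sketch, not a transcription of the paper's exact objective. LoRA keeps a pre-trained weight $W_0$ frozen and learns a low-rank update $BA$. Group Lasso adds one non-squared $\ell_2$ norm per group of parameters, summed over groups. Here we assume each group $g$ collects the LoRA matrices $A_g, B_g$ attached to one block, and $\mathcal{L}_{\text{task}}$ is a placeholder for the forgetting and retention losses; see the paper for how GS-LoRA actually defines the groups, their weights, and the loss terms.

```latex
W = W_0 + BA, \qquad W_0 \in \mathbb{R}^{d \times k},\; B \in \mathbb{R}^{d \times r},\; A \in \mathbb{R}^{r \times k},\; r \ll \min(d, k)

\mathcal{L}_{\text{total}} = \mathcal{L}_{\text{task}} + \lambda \sum_{g=1}^{G} \sqrt{\lVert A_g \rVert_F^2 + \lVert B_g \rVert_F^2}
```

Because each group enters through a norm rather than a squared norm, the penalty tends to drive entire groups exactly to zero, so only a few blocks end up carrying a nonzero update.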

git clone https://github.com/bjzhb666/GS-LoRA.git
cd GS-LoRA
conda create -n GSlora python=3.9
conda activate GSlora
pip install -r requirements.txt

mkdir data
cd data
unzip data.zip

You can get our CASIA-100 from https://drive.google.com/file/d/16CaYf45UsHPff1smxkCXaHE8tZo4wTPv/view?usp=sharing. Put it in the data folder before unzipping.

Note: CASIA-100 is a subset of CASIA-WebFace. The train/test split is already provided in our Google Drive.

mkdir result
cd result

You can use our pre-trained Face Transformer directly. Download the pre-trained weight and put it into the result folder.

Or you can train your own pre-trained models.
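Whichever route you take, the checkpoint and its config.txt should end up in a run folder under result, laid out as shown below. The shell function here is a minimal, hypothetical sanity check that is not part of the repository. It assumes only the file-name pattern Backbone_*_checkpoint.pth taken from the example checkpoint, and it does not validate the file contents.

```bash
#!/usr/bin/env bash
# Hypothetical helper, not shipped with GS-LoRA: checks that a run folder under
# result/ contains config.txt and at least one Backbone_*_checkpoint.pth file.
check_run_dir() {
  local run_dir="$1" ckpt
  if [ ! -f "$run_dir/config.txt" ]; then
    echo "missing: $run_dir/config.txt" >&2
    return 1
  fi
  # If nothing matches, the glob stays literal and the -f test fails.
  for ckpt in "$run_dir"/Backbone_*_checkpoint.pth; do
    if [ -f "$ckpt" ]; then
      echo "ok: $run_dir"
      return 0
    fi
  done
  echo "missing: $run_dir/Backbone_*_checkpoint.pth" >&2
  return 1
}

# Usage, from the repository root:
# check_run_dir result/ViT-P8S8_casia100_cosface_s1-1200-150de-depth6
```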

Your result folder should be like this:

result
└── ViT-P8S8_casia100_cosface_s1-1200-150de-depth6
    ├── Backbone_VIT_Epoch_1110_Batch_82100_Time_2023-10-18-18-22_checkpoint.pth
    └── config.txt

To train your own Face Transformer, use run_sub.sh:

bash scripts/run_sub.sh

test_sub.sh is the test code for our Face Transformer. You can use it to test the pre-trained model.

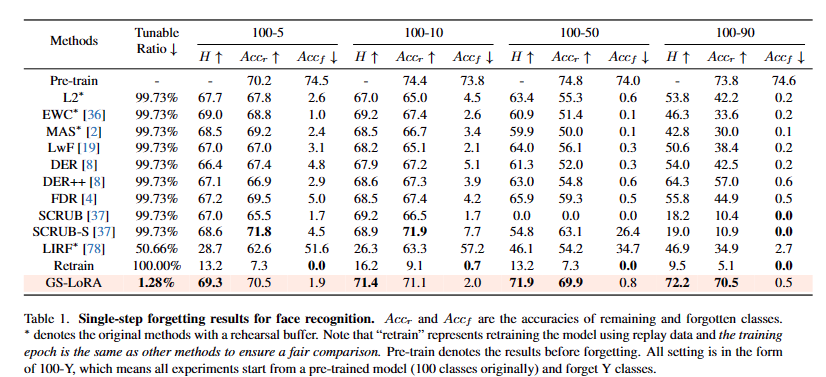

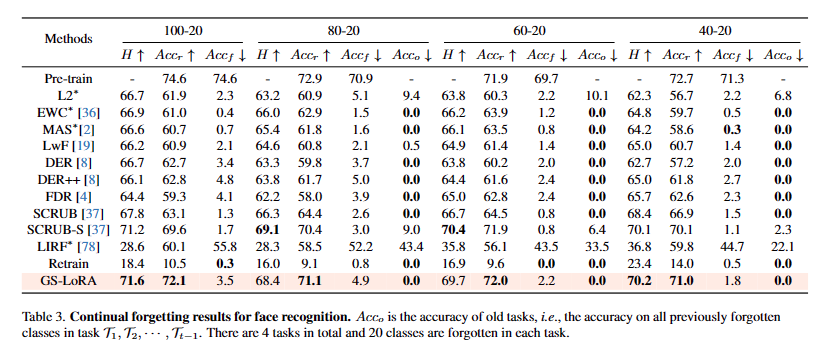

bash scripts/test_sub.sh

We provide the code for all baselines and for our method GS-LoRA mentioned in our paper.

run_cl_forget.sh contains LIRF, SCRUB, EWC, MAS, L2, DER, DER++, FDR, LwF, Retrain, and GS-LoRA (the main table in the paper).

For baseline methods, you can still use run_cl_forget.sh and change --num_tasks to 1. (There are examples in run_cl_forget.sh.)
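If you prefer not to edit run_cl_forget.sh in place, a small helper can write a single-task copy instead. This is a hypothetical sketch and not part of the repository. It assumes the flag is written as `--num_tasks N` or `--num_tasks=N` and rewrites every occurrence. Review the copy and keep only the commands for the baselines you want to run.

```bash
#!/usr/bin/env bash
# Hypothetical helper, not shipped with GS-LoRA: copies a launch script and sets
# every "--num_tasks N" / "--num_tasks=N" occurrence to "--num_tasks 1".
make_single_task_script() {
  local src="$1" dst="$2"
  # "--" stops grep from parsing the pattern itself as an option.
  if ! grep -q -- '--num_tasks' "$src"; then
    echo "no --num_tasks flag found in $src" >&2
    return 1
  fi
  # -E enables extended regex (supported by both GNU and BSD sed).
  sed -E 's/--num_tasks[= ]+[0-9]+/--num_tasks 1/g' "$src" > "$dst"
}

# Usage, from the repository root:
# make_single_task_script scripts/run_cl_forget.sh scripts/run_cl_forget_single.sh
# bash scripts/run_cl_forget_single.sh
```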

For GS-LoRA, we recommend using run_forget.sh. Some exploratory experiments, such as data ratio, group strategy, scalability,

If you find this project useful in your research, please consider citing:

@article{zhao2024continual,
  title={Continual Forgetting for Pre-trained Vision Models},
  author={Zhao, Hongbo and Ni, Bolin and Wang, Haochen and Fan, Junsong and Zhu, Fei and Wang, Yuxi and Chen, Yuntao and Meng, Gaofeng and Zhang, Zhaoxiang},
  journal={arXiv preprint arXiv:2403.11530},
  year={2024}
}

Please contact us or post an issue if you have any questions.

This work is built upon zhongyy/Face-Transformer (Face Transformer for Recognition) on GitHub.

This project is released under the MIT License.