Keras Temporal Convolutional Network

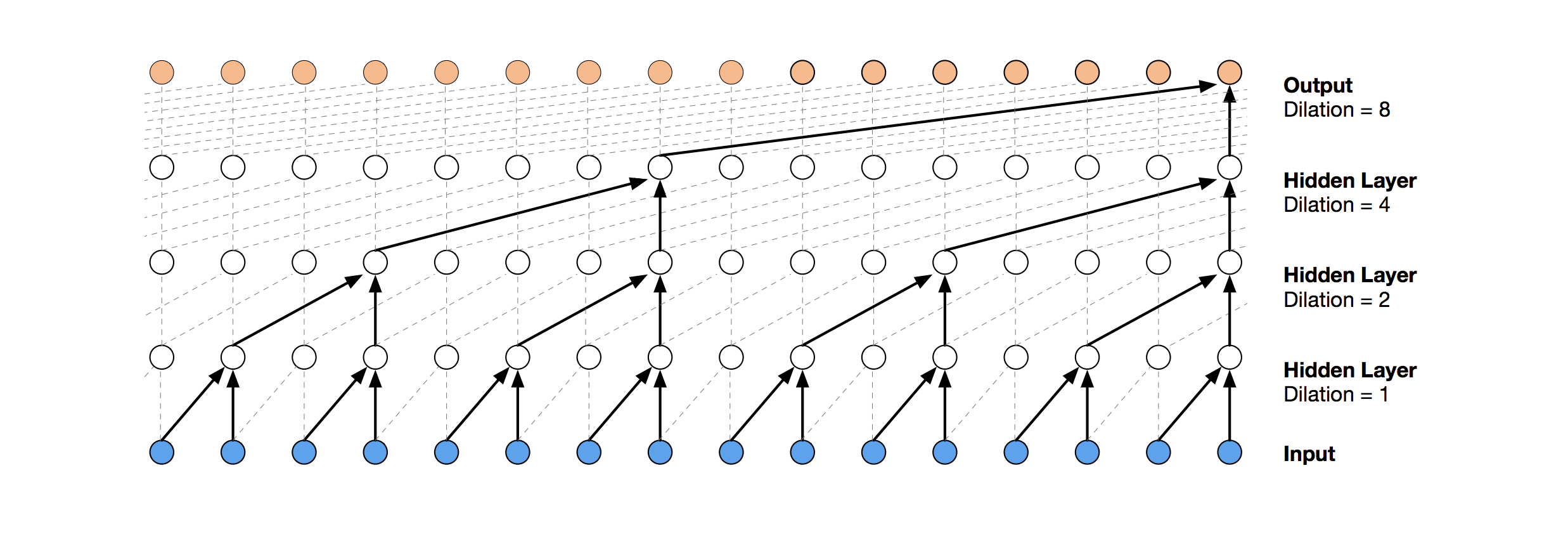

- TCNs exhibit longer memory than recurrent architectures with the same capacity.

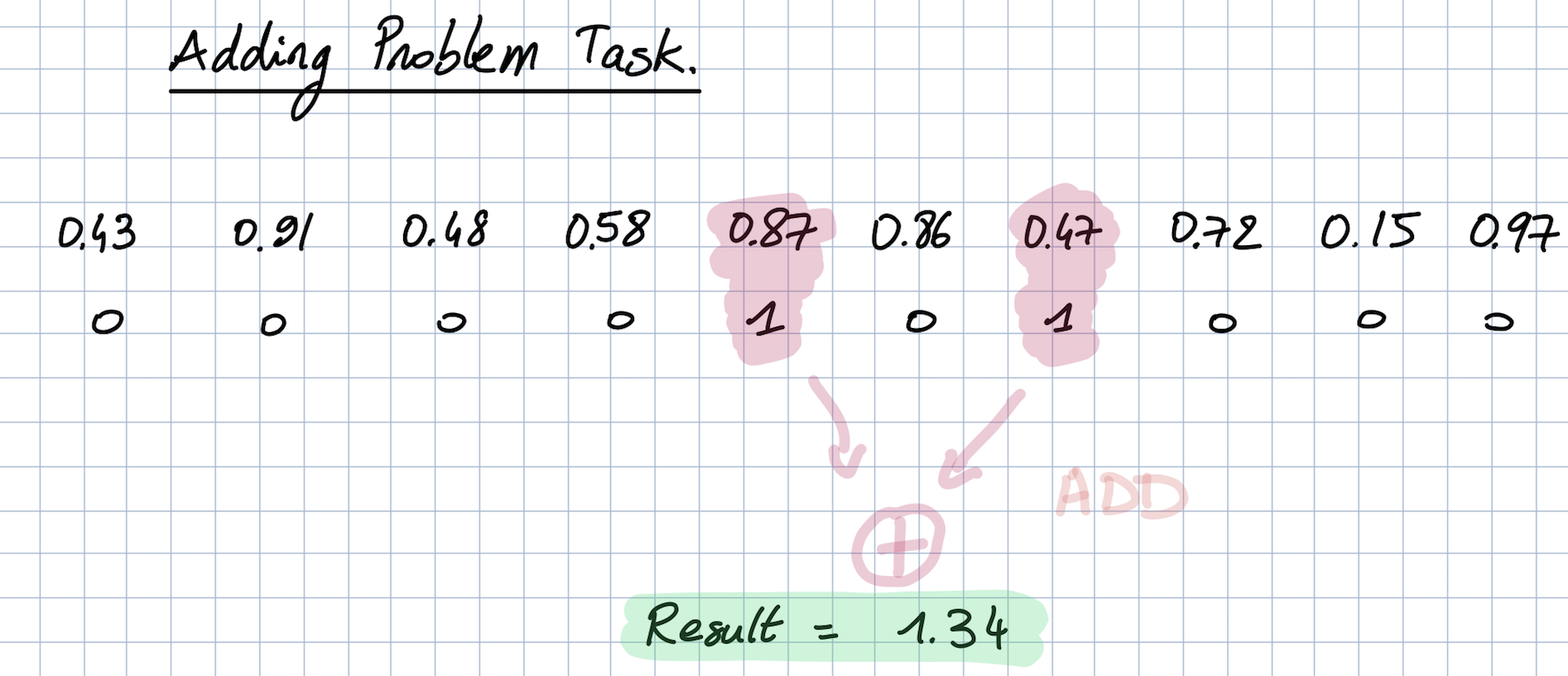

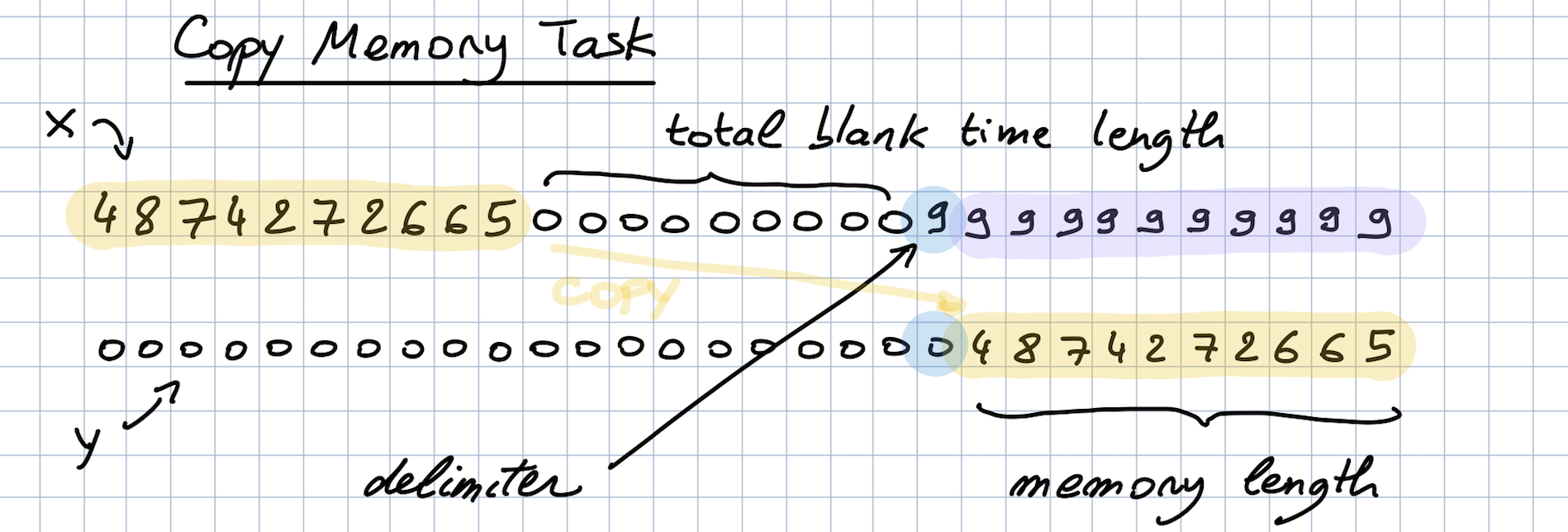

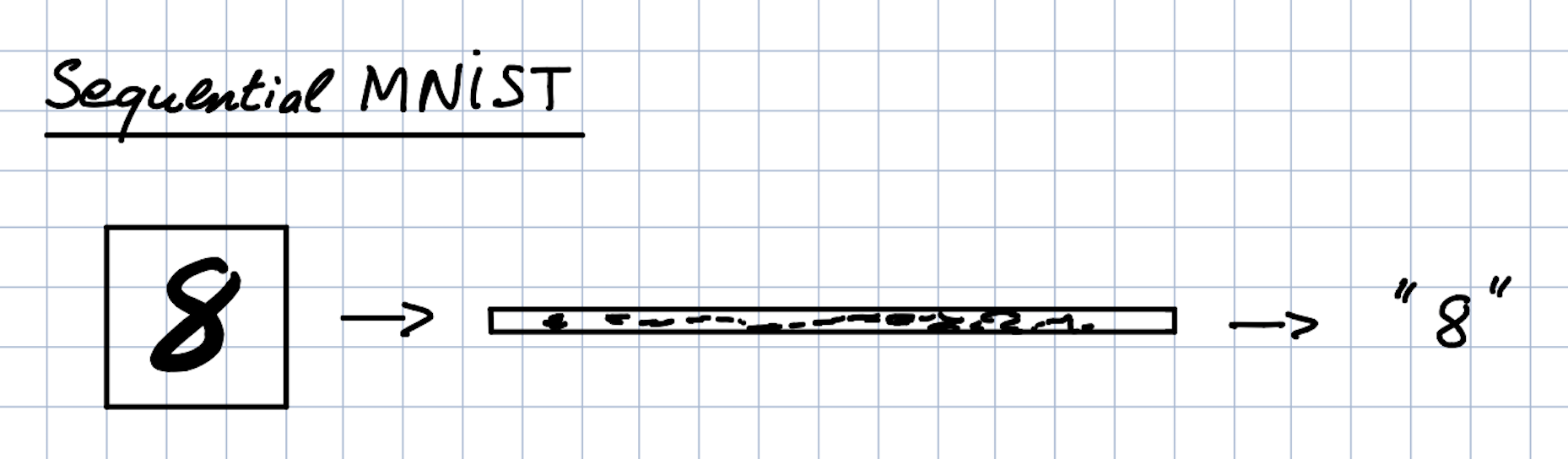

- TCNs consistently outperform LSTM/GRU architectures on a wide range of tasks (Sequential MNIST, Adding Problem, Copy Memory, Word-level PTB...).

- Other benefits: parallelism, flexible receptive field size, stable gradients, low memory requirements for training, and variable-length inputs.
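The "flexible receptive field size" can be estimated with simple arithmetic. The sketch below assumes one dilated causal convolution per dilation rate in each stack; the residual block actually used by this library may contain more layers, in which case the result is a lower bound. `receptive_field` is a hypothetical helper for illustration, not part of the package.

```python
def receptive_field(kernel_size, dilations, nb_stacks=1):
    """Number of input timesteps (current one included) seen by one output.

    Assumption: one causal convolution per dilation rate per stack. Each
    such layer widens the receptive field by (kernel_size - 1) * dilation.
    """
    return 1 + nb_stacks * (kernel_size - 1) * sum(dilations)


if __name__ == "__main__":
    # Kernel size 2 with dilations 1, 2, 4, 8, as in the WaveNet-style figure.
    print(receptive_field(2, [1, 2, 4, 8]))  # 16
    # Hyperparameters used in the model examples of this README.
    print(receptive_field(8, [1, 2, 4, 8], nb_stacks=8))  # 841
```

Doubling the dilation at each layer grows the receptive field exponentially with depth, while the number of parameters only grows linearly.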

Visualization of a stack of dilated causal convolutional layers (WaveNet, 2016)
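What such a stack computes can be sketched in a few lines of NumPy. This is a simplified illustration, assuming a single channel, shared weights across layers, and no activation or residual connection; `causal_dilated_conv1d` and `dilated_stack` are hypothetical names, not functions of this library.

```python
import numpy as np


def causal_dilated_conv1d(x, weights, dilation=1):
    """Causal dilated 1-D convolution of a single-channel sequence.

    y[t] = sum_j weights[j] * x[t - j * dilation], with x treated as zero
    before the start of the sequence, so y[t] never sees future inputs.
    """
    x = np.asarray(x, dtype=float)
    pad = (len(weights) - 1) * dilation
    padded = np.concatenate([np.zeros(pad), x])  # pad on the left only
    y = np.zeros_like(x)
    for j, w in enumerate(weights):
        start = pad - j * dilation  # padded[start + t] == x[t - j * dilation]
        y += w * padded[start:start + len(x)]
    return y


def dilated_stack(x, weights, dilations):
    """Apply one causal convolution per dilation rate, in order."""
    for d in dilations:
        x = causal_dilated_conv1d(x, weights, d)
    return x


if __name__ == "__main__":
    impulse = np.zeros(20)
    impulse[0] = 1.0
    # The impulse at t=0 influences exactly the next 16 outputs.
    print(dilated_stack(impulse, [1.0, 1.0], [1, 2, 4, 8]))
```

With kernel size 2 and dilations 1, 2, 4, 8, a single input affects 16 consecutive outputs, and no output depends on a later input.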


After installation, the model can be imported like this:

    from tcn import tcn

In the following examples, we assume the input to have a shape (batch_size, timesteps, input_dim).
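As an illustration of this input shape, here is a minimal sketch that generates data for the adding problem in its common formulation: each timestep carries a random value and a marker, and the target is the sum of the two marked values. `make_adding_problem` is a hypothetical helper, and the generator shipped in the repository may differ in its details.

```python
import numpy as np


def make_adding_problem(batch_size, timesteps, seed=0):
    """Return inputs of shape (batch_size, timesteps, 2) and targets (batch_size, 1)."""
    rng = np.random.default_rng(seed)
    values = rng.uniform(0.0, 1.0, size=(batch_size, timesteps))
    markers = np.zeros((batch_size, timesteps))
    for i in range(batch_size):
        # Mark two distinct positions in every sequence.
        idx = rng.choice(timesteps, size=2, replace=False)
        markers[i, idx] = 1.0
    x = np.stack([values, markers], axis=-1)  # input_dim = 2
    y = (values * markers).sum(axis=1, keepdims=True)  # sum of marked values
    return x, y


if __name__ == "__main__":
    x, y = make_adding_problem(batch_size=32, timesteps=100)
    print(x.shape, y.shape)  # (32, 100, 2) (32, 1)
```

Here `input_dim` is 2 and `timesteps` is 100, matching the `(batch_size, timesteps, input_dim)` convention.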

The returned model is a regular Keras model, so the usual methods (model.summary, model.fit, model.predict...) all work.

    # Regression (many to one): the output is taken from the last timestep.
    model = tcn.dilated_tcn(output_slice_index='last',
                            num_feat=input_dim,
                            nb_filters=24,
                            kernel_size=8,
                            dilatations=[1, 2, 4, 8],
                            nb_stacks=8,
                            max_len=timesteps,
                            activation='norm_relu',
                            regression=True)

    # Classification (many to many): no output_slice_index, one output per timestep.
    model = tcn.dilated_tcn(num_feat=input_dim,
                            num_classes=10,
                            nb_filters=10,
                            kernel_size=8,
                            dilatations=[1, 2, 4, 8],
                            nb_stacks=8,
                            max_len=timesteps,
                            activation='norm_relu')

    # Classification (many to one): the output is taken from the last timestep.
    model = tcn.dilated_tcn(output_slice_index='last',
                            num_feat=input_dim,
                            num_classes=10,
                            nb_filters=64,
                            kernel_size=8,
                            dilatations=[1, 2, 4, 8],
                            nb_stacks=8,
                            max_len=timesteps,
                            activation='norm_relu')
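The two classification configurations differ only in whether the per-timestep output is sliced. The NumPy sketch below shows the resulting shapes, assuming that `output_slice_index='last'` keeps only the final timestep; the array is random stand-in data, not actual model output.

```python
import numpy as np

batch_size, timesteps, num_classes = 4, 20, 10
rng = np.random.default_rng(0)

# Stand-in for per-timestep class scores (many to many).
per_step = rng.normal(size=(batch_size, timesteps, num_classes))

# Keeping only the last timestep gives one prediction per sequence (many to one).
last_step = per_step[:, -1, :]

print(per_step.shape)   # (4, 20, 10)
print(last_step.shape)  # (4, 10)
```

Targets passed to model.fit need to follow the same layout: one label per timestep in the first case, one label per sequence in the second.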

    git clone git@github.com:philipperemy/keras-tcn.git
    cd keras-tcn
    virtualenv -p python3.6 venv
    source venv/bin/activate
    pip install -r requirements.txt # use the CPU-only tensorflow package instead if you don't have a GPU
    pip install . # install keras-tcn

    # the task directories sit side by side in the repository root
    cd adding_problem/
    python main.py # run adding problem task
    cd ../copy_memory/
    python main.py # run copy memory task
    cd ../mnist_pixel/
    python main.py # run sequential mnist pixel task
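For the sequential MNIST pixel task, each image is fed to the network one pixel at a time. A minimal sketch of that preprocessing follows, assuming images stored as arrays of shape `(n, 28, 28)` with values in 0..255; `images_to_pixel_sequences` is a hypothetical helper, and the script in the repository may preprocess the data differently.

```python
import numpy as np


def images_to_pixel_sequences(images):
    """Flatten images row by row into sequences with input_dim = 1.

    (n, height, width) -> (n, height * width, 1), scaled to [0, 1].
    """
    images = np.asarray(images, dtype=np.float32)
    n = images.shape[0]
    return images.reshape(n, -1, 1) / 255.0


if __name__ == "__main__":
    # Random stand-in data with the same layout as MNIST images.
    fake_images = np.random.default_rng(0).integers(0, 256, size=(5, 28, 28))
    sequences = images_to_pixel_sequences(fake_images)
    print(sequences.shape)  # (5, 784, 1)
```

The resulting sequences have 784 timesteps, which is why this task is a common test of long-range memory.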

- https://github.com/locuslab/TCN/ (TCN for PyTorch)

- https://arxiv.org/pdf/1803.01271.pdf (An Empirical Evaluation of Generic Convolutional and Recurrent Networks for Sequence Modeling)

- https://arxiv.org/pdf/1609.03499.pdf (Original WaveNet paper)