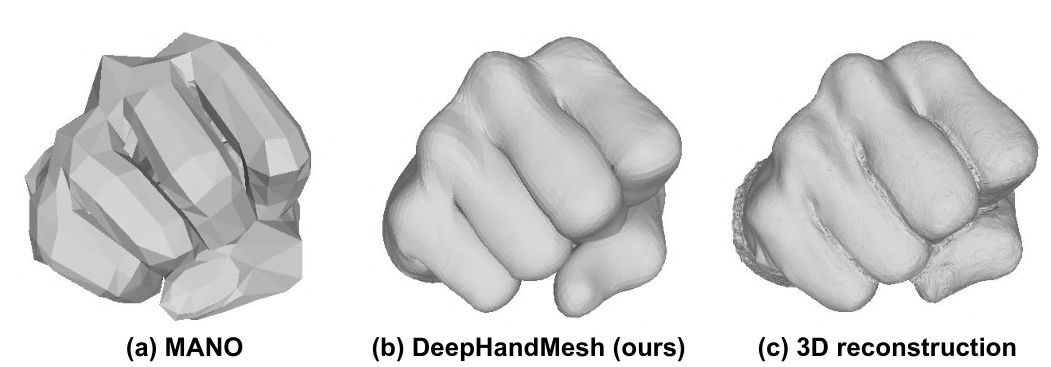

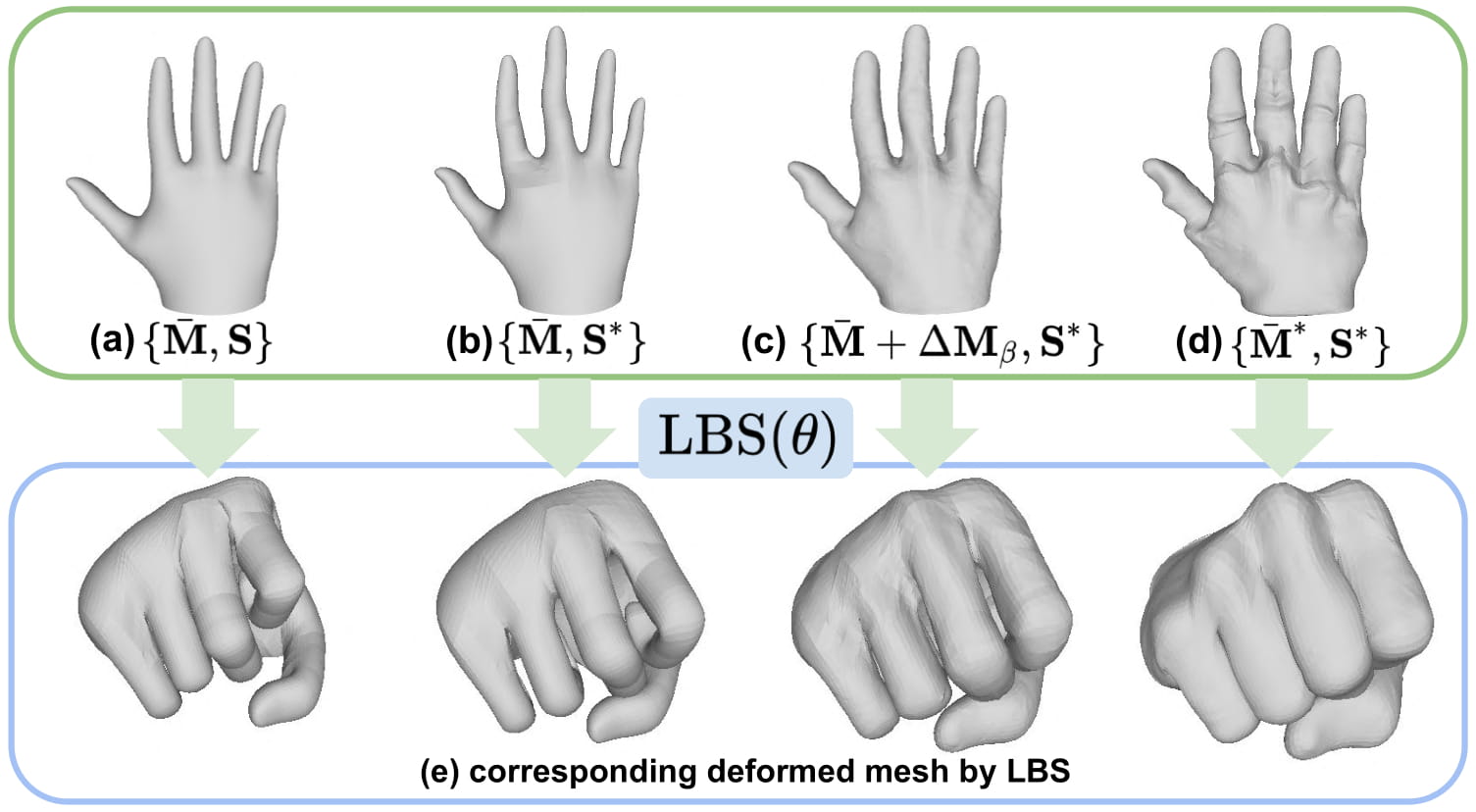

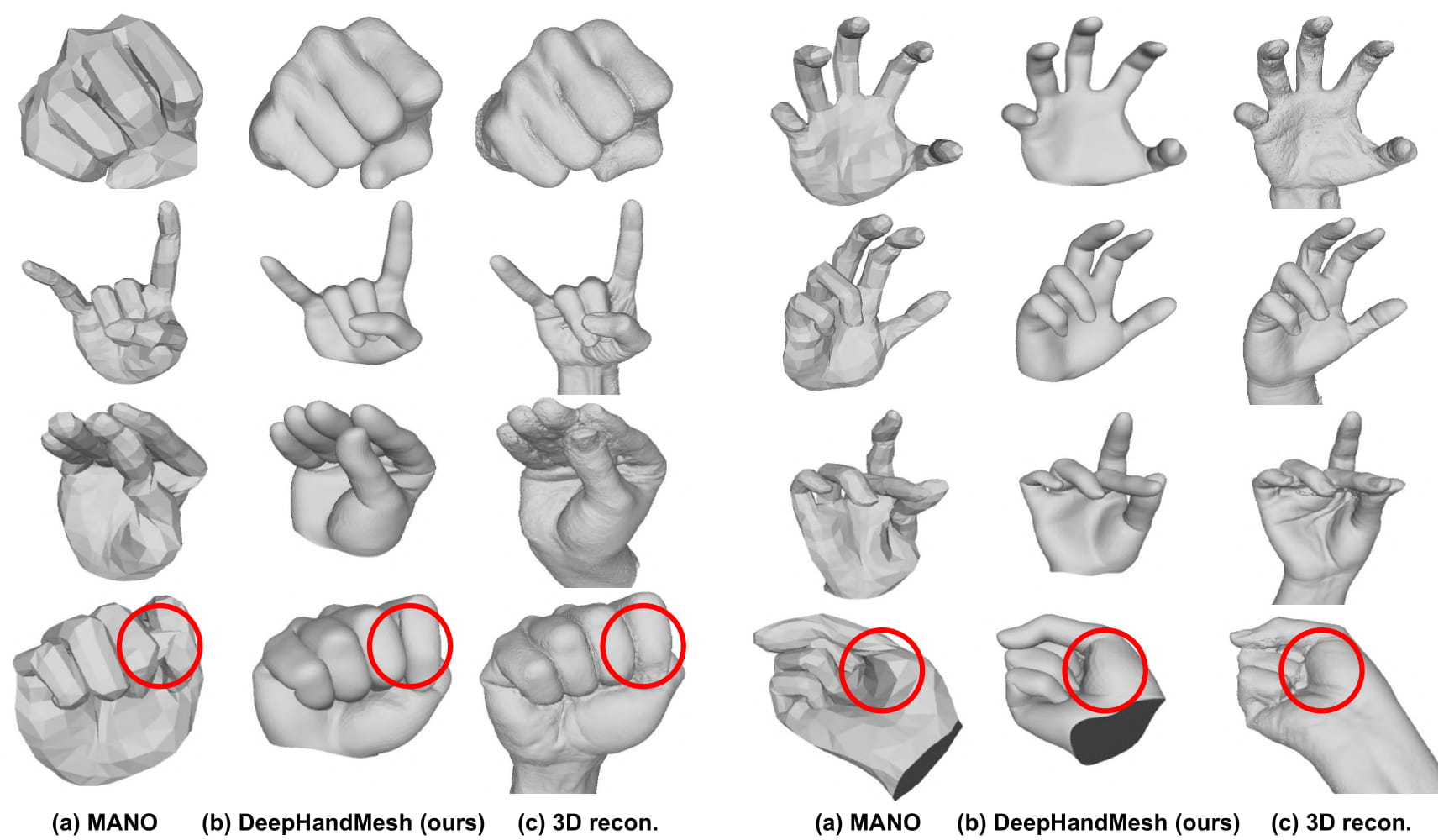

DeepHandMesh: A Weakly-Supervised Deep Encoder-Decoder Framework for High-Fidelity Hand Mesh Modeling

This repo is the official PyTorch implementation of DeepHandMesh: A Weakly-Supervised Deep Encoder-Decoder Framework for High-Fidelity Hand Mesh Modeling (ECCV 2020, Oral).

- Download the pre-trained DeepHandMesh from here.
- Place the downloaded file in the `demo/subject_${SUBJECT_IDX}` folder, where the filename is `snapshot_${EPOCH}.pth.tar`.
- Download the hand model from here and place it in the `data` folder.
- Set the hand joint Euler angles here.
- Run `python demo.py --gpu 0 --subject ${SUBJECT_IDX} --test_epoch ${EPOCH}`.
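
Before running the demo, it can help to confirm which `snapshot_${EPOCH}.pth.tar` files are actually in the subject folder, so that `--test_epoch` matches a real file. The following is a minimal sketch, assuming the demo is launched from `${ROOT}` and snapshots follow the naming pattern above; `available_epochs` is a hypothetical helper, not part of the repo, and `subject_idx = 4` is only an example value.

```python
import os
import re

# Matches the checkpoint naming described above: snapshot_${EPOCH}.pth.tar
SNAPSHOT_RE = re.compile(r"^snapshot_(\d+)\.pth\.tar$")


def available_epochs(folder):
    """Return the sorted epochs of all snapshot_${EPOCH}.pth.tar files in folder.

    Hypothetical helper; returns an empty list if the folder does not exist.
    """
    if not os.path.isdir(folder):
        return []
    epochs = []
    for name in os.listdir(folder):
        match = SNAPSHOT_RE.match(name)
        if match:
            epochs.append(int(match.group(1)))
    return sorted(epochs)


if __name__ == "__main__":
    subject_idx = 4  # example value for ${SUBJECT_IDX}
    folder = os.path.join("demo", f"subject_{subject_idx}")
    epochs = available_epochs(folder)
    if epochs:
        # Print a demo command that uses the newest snapshot found.
        print(f"python demo.py --gpu 0 --subject {subject_idx} --test_epoch {epochs[-1]}")
    else:
        print(f"No snapshot_*.pth.tar found in {folder}")
```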

- For the DeepHandMesh dataset download and instructions, go to [HOMEPAGE].

- Below are the instructions for DeepHandMesh for weakly-supervised high-fidelity 3D hand mesh modeling.

The `${ROOT}` directory is structured as follows.

    ${ROOT}
    |-- data
    |-- common
    |-- main
    |-- output
    |-- demo

- `data` contains data loading code and soft links to the images and annotations directories.
- `common` contains kernel code.
- `main` contains high-level code for training or testing the network.
- `output` contains logs, trained models, visualized outputs, and test results.
- `demo` contains demo code.

You need to follow the directory structure of the data as below.

    ${ROOT}
    |-- data
    |   |-- images
    |   |   |-- subject_1
    |   |   |-- subject_2
    |   |   |-- subject_3
    |   |   |-- subject_4
    |   |-- annotations
    |   |   |-- 3D_scans_decimated
    |   |   |   |-- subject_4
    |   |   |-- depthmaps
    |   |   |   |-- subject_4
    |   |   |-- keypoints
    |   |   |   |-- subject_4
    |   |   |-- KRT_512
    |   |-- hand_model
    |   |   |-- global_pose.txt
    |   |   |-- global_pose_inv.txt
    |   |   |-- hand.fbx
    |   |   |-- hand.obj
    |   |   |-- local_pose.txt
    |   |   |-- skeleton.txt
    |   |   |-- skinning_weight.txt

- Download the datasets and hand model from [HOMEPAGE].
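
Because a missing annotation folder or hand model file usually surfaces only as an error deep inside data loading, a quick layout check can save time. This is a minimal sketch, assuming it is run against `${ROOT}/data`; the path list is copied from the tree above and covers only the subject 4 annotations plus the hand model (the image folders for subjects 1 to 3 are left out by assumption). `missing_data_paths` is a hypothetical helper, not part of the repo. It uses `os.path.exists`, which follows symbolic links, so the soft links that `data` contains are checked against their targets.

```python
import os

# Paths relative to ${ROOT}/data, taken from the directory layout above.
REQUIRED_DATA_PATHS = [
    "images/subject_4",
    "annotations/3D_scans_decimated/subject_4",
    "annotations/depthmaps/subject_4",
    "annotations/keypoints/subject_4",
    "annotations/KRT_512",
    "hand_model/global_pose.txt",
    "hand_model/global_pose_inv.txt",
    "hand_model/hand.fbx",
    "hand_model/hand.obj",
    "hand_model/local_pose.txt",
    "hand_model/skeleton.txt",
    "hand_model/skinning_weight.txt",
]


def missing_data_paths(data_dir):
    """Return the required entries that do not exist under data_dir.

    os.path.exists follows symlinks, so a dangling soft link counts as missing.
    """
    return [
        rel
        for rel in REQUIRED_DATA_PATHS
        if not os.path.exists(os.path.join(data_dir, *rel.split("/")))
    ]


if __name__ == "__main__":
    missing = missing_data_paths("data")
    if missing:
        print("Missing under data/:")
        for rel in missing:
            print("  " + rel)
    else:
        print("data/ layout looks complete.")
```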

You need to follow the directory structure of the output folder as below.

    ${ROOT}
    |-- output
    |   |-- log
    |   |-- model_dump
    |   |-- result
    |   |-- vis

- `log` folder contains the training log file.
- `model_dump` folder contains saved checkpoints for each epoch.
- `result` folder contains the final estimation files generated in the testing stage.
- `vis` folder contains visualized results.
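
If the `output` subfolders do not exist yet, they can be created up front. This is a minimal sketch under the assumption that creating them by hand is harmless; the repo's own code may already create them, and `make_output_dirs` is a hypothetical helper. `os.makedirs(..., exist_ok=True)` makes the call safe to repeat.

```python
import os

# Subfolder names taken from the output layout above.
OUTPUT_SUBDIRS = ("log", "model_dump", "result", "vis")


def make_output_dirs(output_dir):
    """Create output_dir/{log,model_dump,result,vis} if missing; return their paths."""
    created = []
    for name in OUTPUT_SUBDIRS:
        path = os.path.join(output_dir, name)
        os.makedirs(path, exist_ok=True)  # no error if the folder already exists
        created.append(path)
    return created


if __name__ == "__main__":
    for path in make_output_dirs("output"):
        print("ready:", path)
```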

- For training, install the neural renderer from here.
- After installing it, uncomment line 12 of `main/model.py` (`from nets.DiffableRenderer.DiffableRenderer import RenderLayer`) and line 40 of `main/model.py` (`self.renderer = RenderLayer()`).
- If you only want to run testing, you do not need to install it.
- In `main/config.py`, you can change the settings of the model.
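
As an alternative to toggling the renderer import by hand, the import can be guarded so the same file works with or without the renderer installed. This is a sketch of a general Python pattern, not how the repo does it: the module path and class name are copied from the bullet above and only resolve inside the DeepHandMesh code tree, while `RENDERER_AVAILABLE` and `build_renderer` are hypothetical names. Any model code that uses the renderer would still need to handle the case where it is `None`.

```python
# Sketch (assumption): guard the optional renderer import instead of
# uncommenting lines in main/model.py. The import only resolves inside the
# DeepHandMesh repo with neural renderer installed.
try:
    from nets.DiffableRenderer.DiffableRenderer import RenderLayer
except ImportError:  # also covers ModuleNotFoundError
    RenderLayer = None

RENDERER_AVAILABLE = RenderLayer is not None


def build_renderer():
    """Return a RenderLayer instance if the renderer is installed, else None."""
    if not RENDERER_AVAILABLE:
        return None
    return RenderLayer()


if __name__ == "__main__":
    print("renderer available:", RENDERER_AVAILABLE)
```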

In the `main` folder, run `python train.py --gpu 0-3 --subject 4` to train the network on GPUs 0, 1, 2, and 3. `--gpu 0,1,2,3` can be used instead of `--gpu 0-3`. Add `--continue` to resume training.

Only subject 4 is supported for training.
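
The `--gpu` flag accepts either a range (`0-3`) or a comma-separated list (`0,1,2,3`) naming the same devices. A minimal sketch of normalizing both forms into a list of device indices follows; `parse_gpu_ids` is a hypothetical helper and the repo's own argument parsing may differ. The range is treated as inclusive on both ends, matching the statement that `--gpu 0-3` means GPUs 0, 1, 2, and 3. The usage part sets `CUDA_VISIBLE_DEVICES`, the standard CUDA environment variable for restricting visible GPUs.

```python
import os


def parse_gpu_ids(spec):
    """Turn '0-3' or '0,1,2,3' into [0, 1, 2, 3] (hypothetical helper).

    A range 'a-b' is inclusive on both ends.
    """
    spec = spec.strip()
    if "-" in spec:
        start, end = (int(part) for part in spec.split("-"))
        if end < start:
            raise ValueError(f"invalid GPU range: {spec!r}")
        return list(range(start, end + 1))
    return [int(part) for part in spec.split(",") if part.strip()]


if __name__ == "__main__":
    ids = parse_gpu_ids("0-3")
    os.environ["CUDA_VISIBLE_DEVICES"] = ",".join(str(i) for i in ids)
    print(os.environ["CUDA_VISIBLE_DEVICES"])  # 0,1,2,3
```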

Place the trained model in `output/model_dump/subject_${SUBJECT_IDX}`.

In the `main` folder, run `python test.py --gpu 0-3 --test_epoch 4 --subject 4` to test the network on GPUs 0, 1, 2, and 3 with `snapshot_4.pth.tar`. `--gpu 0,1,2,3` can be used instead of `--gpu 0-3`.

Only subject 4 is supported for testing.

The test script saves images and output meshes.
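
The test command and the model placement step above imply a fixed checkpoint location: with `--subject 4 --test_epoch 4`, the snapshot is expected at `output/model_dump/subject_4/snapshot_4.pth.tar` relative to `${ROOT}`. A minimal sketch that builds this path and rejects unsupported subjects, assuming exactly that layout; `eval_snapshot_path` is a hypothetical helper, not part of the repo.

```python
import os

SUPPORTED_SUBJECTS = (4,)  # only subject 4 is supported for training and testing


def eval_snapshot_path(root, subject_idx, test_epoch):
    """Expected checkpoint for `python test.py --subject S --test_epoch E`.

    Assumes ${ROOT}/output/model_dump/subject_${SUBJECT_IDX}/snapshot_${EPOCH}.pth.tar.
    """
    if subject_idx not in SUPPORTED_SUBJECTS:
        raise ValueError(f"subject {subject_idx} is not supported; only subject 4 is")
    return os.path.join(
        root, "output", "model_dump", f"subject_{subject_idx}",
        f"snapshot_{test_epoch}.pth.tar",
    )


if __name__ == "__main__":
    path = eval_snapshot_path(".", 4, 4)
    print(path, "exists" if os.path.isfile(path) else "is missing")
```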

Here I report the results of DeepHandMesh and provide the pre-trained DeepHandMesh.

- Pre-trained DeepHandMesh [Download]

    @InProceedings{Moon_2020_ECCV_DeepHandMesh,
      author = {Moon, Gyeongsik and Shiratori, Takaaki and Lee, Kyoung Mu},
      title = {DeepHandMesh: A Weakly-supervised Deep Encoder-Decoder Framework for High-fidelity Hand Mesh Modeling},
      booktitle = {European Conference on Computer Vision (ECCV)},
      year = {2020}
    }

DeepHandMesh is CC-BY-NC 4.0 licensed, as found in the LICENSE file.