This repository is the official implementation of "Diving into Underwater: Segment Anything Model Guided Underwater Salient Instance Segmentation and A Large-scale Dataset".

If you find this project useful, please give us a star ⭐️ or cite our paper. This is the greatest support and encouragement for us.

🚩 News (2024.05): This paper has been accepted at ICML 2024, receiving an average rating of 6 with a confidence of 4.25.


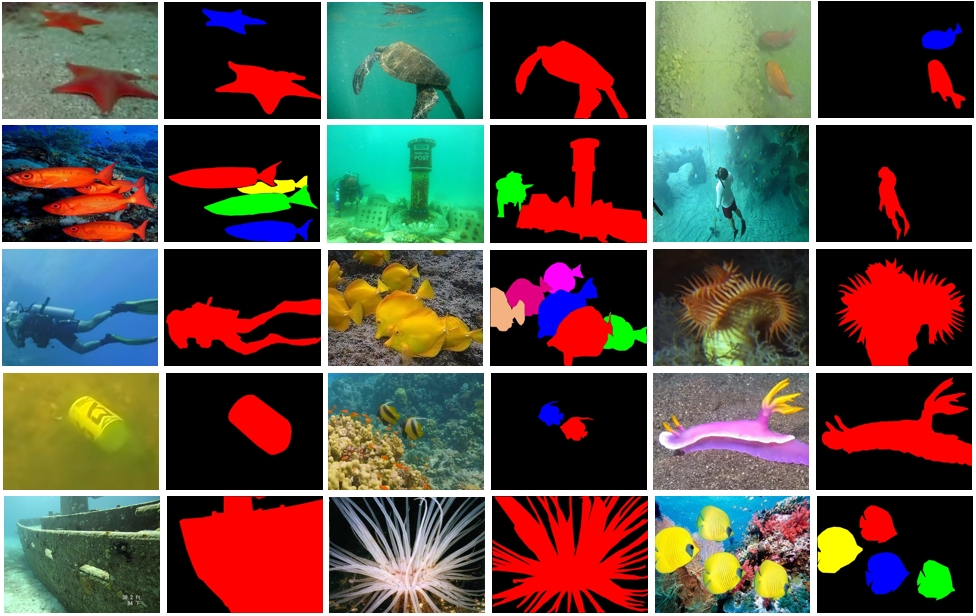

USIS10K dataset: We construct USIS10K, the first large-scale dataset for the underwater salient instance segmentation task. It contains 10,632 images with pixel-level annotations of 7 categories. To the best of our knowledge, it is the largest salient instance segmentation dataset that provides both Class-Agnostic and Multi-Class labels.


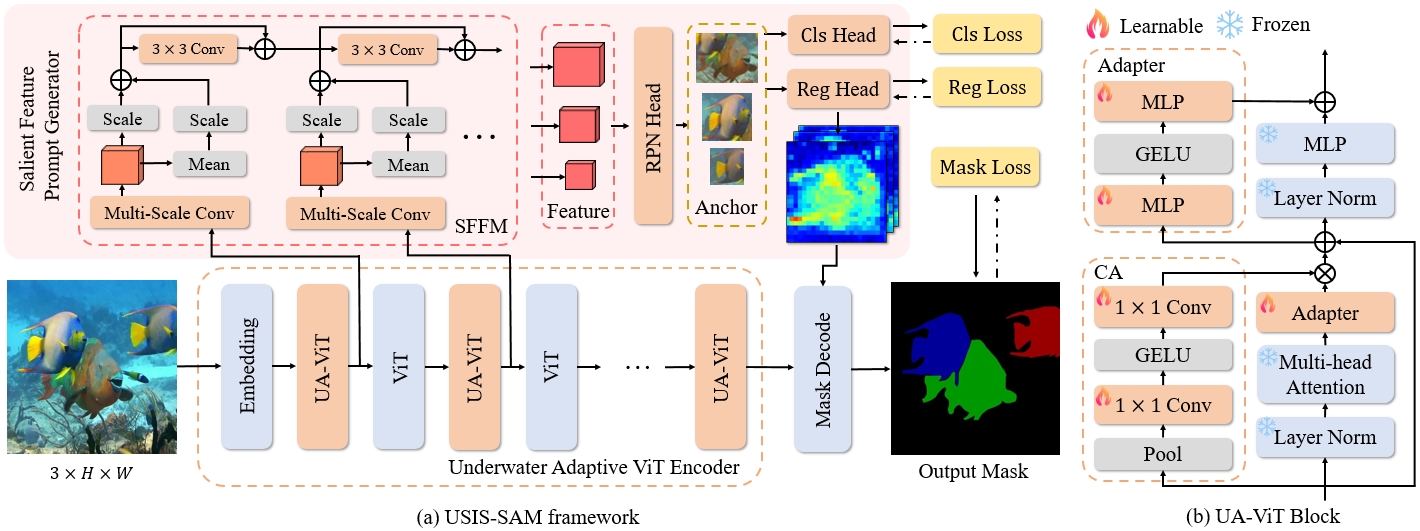

SOTA performance: We make the first attempt to apply the Segment Anything Model (SAM) to underwater salient instance segmentation and propose USIS-SAM, which aims to improve segmentation accuracy in complex underwater scenes. Extensive experiments under public evaluation criteria verify the effectiveness of the USIS10K dataset and the USIS-SAM model.

- Python 3.7+

- PyTorch 2.0+ (we use PyTorch 2.1.2)

- CUDA 12.1 or another version

- MMEngine

- mmcv>=2.0.0

- MMDetection 3.0+
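To confirm that an environment meets the requirements above, a small stdlib-only check like the following may help. It is a sketch, not part of this repository: it assumes the usual PyPI distribution names (`torch`, `mmengine`, `mmcv`, `mmdet`), compares only the leading numeric part of each version, and needs Python 3.8+ for `importlib.metadata`. The `MINIMUMS` table and the helper names are hypothetical.

```python
import sys
from importlib import metadata

# Minimum versions from the requirements list; "0" means "just installed".
# Assumption: these keys are the PyPI distribution names of each package.
MINIMUMS = {"torch": "2.0", "mmengine": "0", "mmcv": "2.0.0", "mmdet": "3.0"}


def parse_version(version: str) -> tuple:
    """Keep only the leading numeric components: '2.1.2+cu121' -> (2, 1, 2)."""
    parts = []
    for piece in version.split("+")[0].split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
        if len(digits) < len(piece):
            break  # stop at pre-release tags such as '0rc1'
    return tuple(parts)


def meets_minimum(installed: str, required: str) -> bool:
    """True if `installed` >= `required`, padding the shorter version with zeros."""
    a, b = parse_version(installed), parse_version(required)
    width = max(len(a), len(b))
    return a + (0,) * (width - len(a)) >= b + (0,) * (width - len(b))


def check_environment(minimums=MINIMUMS) -> dict:
    """Map each package to (installed_version_or_None, ok)."""
    py = ".".join(map(str, sys.version_info[:3]))
    report = {"python": (py, sys.version_info >= (3, 7))}
    for name, required in minimums.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            report[name] = (None, False)
            continue
        report[name] = (installed, meets_minimum(installed, required))
    return report


if __name__ == "__main__":
    for pkg, (ver, ok) in check_environment().items():
        print(f"{pkg:10s} {ver or 'missing':16s} {'OK' if ok else 'CHECK'}")
```

A line marked `CHECK` only means the installed version falls below the minimum listed above (or is missing); it does not test CUDA compatibility.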

Installation

Step 0: Download and install Miniconda from the official website.

Step 1: Create a conda environment and activate it.

conda create -n usis python=3.9 -y

conda activate usis

Step 2: Install PyTorch. If you are familiar with PyTorch and have already installed it, you can skip to the next step.

Step 3: Install MMEngine, MMCV, and MMDetection using MIM.

pip install -U openmim

mim install mmengine

mim install "mmcv>=2.0.0"

mim install mmdet

Step 4: Install the other dependencies from requirements.txt.

pip install -r requirements.txt

Create a data folder in your working directory and put USIS10K in it for training or testing, or change the dataset path in the config file. To use other datasets, refer to the MMDetection documentation on preparing datasets.

    data
    ├── USIS10K
    │ ├── foreground_annotations
    │ │ ├── foreground_train_annotations.json
    │ │ ├── foreground_val_annotations.json
    │ │ ├── foreground_test_annotations.json
    │ ├── multi_class_annotations
    │ │ ├── multi_class_train_annotations.json
    │ │ ├── multi_class_val_annotations.json
    │ │ ├── multi_class_test_annotations.json
    │ ├── train
    │ │ ├── train_00001.jpg
    │ │ ├── ...
    │ ├── val
    │ │ ├── val_00001.jpg
    │ │ ├── ...
    │ ├── test
    │ │ ├── test_00001.jpg
    │ │ ├── ...
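Before training, a quick check that `data/USIS10K` matches the tree above can save a failed run. The sketch below uses a hypothetical helper, `missing_entries`, that only verifies that the six annotation files exist and that each image split folder contains at least one `.jpg`; it does not validate file contents.

```python
from pathlib import Path

SPLITS = ("train", "val", "test")
# Annotation files transcribed from the directory tree above.
EXPECTED_FILES = [
    f"{prefix}_annotations/{prefix}_{split}_annotations.json"
    for prefix in ("foreground", "multi_class")
    for split in SPLITS
]


def missing_entries(root) -> list:
    """Return the expected entries that are absent under `root` (e.g. data/USIS10K)."""
    root = Path(root)
    missing = [rel for rel in EXPECTED_FILES if not (root / rel).is_file()]
    for split in SPLITS:
        folder = root / split
        # An image folder counts as present only if it holds at least one .jpg.
        if not folder.is_dir() or next(folder.glob("*.jpg"), None) is None:
            missing.append(f"{split}/*.jpg")
    return missing


if __name__ == "__main__":
    problems = missing_entries("data/USIS10K")
    if problems:
        print("Missing:\n  " + "\n  ".join(problems))
    else:
        print("USIS10K layout looks complete.")
```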

You can download the USIS10K dataset from Baidu Disk (pwd:icml) or Google Drive.
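Assuming the annotation JSON files use the COCO format that MMDetection's instance segmentation datasets usually expect (an assumption; inspect the files to confirm), a short script can report how many images, instances, and categories a split contains. `summarize_coco` is a hypothetical helper, not part of this repository.

```python
import json
from collections import Counter


def summarize_coco(path) -> dict:
    """Count images, instances, and instances per category in a COCO-style JSON."""
    with open(path, encoding="utf-8") as f:
        coco = json.load(f)
    names = {cat["id"]: cat["name"] for cat in coco.get("categories", [])}
    annotations = coco.get("annotations", [])
    # Fall back to the raw id if a category_id has no entry in "categories".
    per_class = Counter(
        names.get(ann["category_id"], str(ann["category_id"])) for ann in annotations
    )
    return {
        "images": len(coco.get("images", [])),
        "instances": len(annotations),
        "categories": len(names),
        "instances_per_class": dict(per_class),
    }


if __name__ == "__main__":
    path = "data/USIS10K/multi_class_annotations/multi_class_train_annotations.json"
    try:
        print(summarize_coco(path))
    except FileNotFoundError:
        print(f"Annotation file not found: {path}")
```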

| Model | Setting | Epoch | mAP | AP50 | AP75 | Config | Download |
|---|---|---|---|---|---|---|---|
| USIS-SAM | Class-Agnostic | 24 | 64.3 | 84.9 | 74.0 | config | Baidu Disk (pwd:x2am) |
| USIS-SAM | Multi-Class | 24 | 43.9 | 59.6 | 50.0 | config | Baidu Disk (pwd:tv3z) |
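The mAP, AP50, and AP75 columns presumably follow the COCO convention used by MMDetection's `CocoMetric` (an assumption, since the table does not say): a predicted mask counts as a match only if its mask IoU with a ground-truth instance reaches the threshold, AP50 and AP75 use thresholds 0.5 and 0.75, and mAP averages AP over the ten thresholds 0.50:0.05:0.95. The sketch below shows only how these numbers relate; it omits the precision-recall curve and interpolation that a real COCO evaluation performs, and all names in it are hypothetical.

```python
# COCO-style IoU thresholds 0.50, 0.55, ..., 0.95 (ten values).
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def mask_iou(pred, gt) -> float:
    """IoU of two same-sized binary masks given as nested lists of 0/1."""
    inter = union = 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            inter += int(bool(p) and bool(g))
            union += int(bool(p) or bool(g))
    return inter / union if union else 0.0


def thresholds_matched(iou: float) -> list:
    """IoU thresholds at which this prediction would count as a true positive."""
    return [t for t in IOU_THRESHOLDS if iou >= t]


def summarize(ap_per_threshold) -> dict:
    """Derive mAP/AP50/AP75 from AP values listed in IOU_THRESHOLDS order."""
    if len(ap_per_threshold) != len(IOU_THRESHOLDS):
        raise ValueError("expected one AP value per IoU threshold")
    return {
        "mAP": sum(ap_per_threshold) / len(ap_per_threshold),
        "AP50": ap_per_threshold[IOU_THRESHOLDS.index(0.5)],
        "AP75": ap_per_threshold[IOU_THRESHOLDS.index(0.75)],
    }


pred = [[1, 1, 0], [1, 1, 0]]
gt = [[1, 1, 1], [1, 1, 1]]
iou = mask_iou(pred, gt)  # intersection 4, union 6
print(round(iou, 3), thresholds_matched(iou))  # 0.667 [0.5, 0.55, 0.6, 0.65]
```

In this toy case the prediction matches at thresholds 0.5 through 0.65, so it would help AP50 but not AP75, which is one reason AP75 is always at most AP50 and mAP sits below both.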

Note: We optimized the code and the training augmentation strategy of USIS-SAM, without substantial changes to the model, to make training and inference more efficient. As a result, the numbers here are slightly higher than those reported in the paper.

We provide a simple script to download the pre-trained SAM weights from Hugging Face; you can also download the weights from another source.

cd pretrain

bash download_huggingface.sh facebook/sam-vit-huge sam-vit-huge

cd ..

After downloading, modify the model weight path in the config file accordingly.
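One way to point the config at the downloaded weights without editing the file is a dotted-key override. MMEngine supports this through `Config.merge_from_dict`, and MMDetection's `tools/train.py` exposes it as `--cfg-options key=value`. The stdlib sketch below mimics that idea on a plain dict so it runs without MMEngine installed; the key path `model.backbone.init_cfg.checkpoint` and the directory `pretrain/sam-vit-huge` are hypothetical and may not match this repository's config.

```python
import copy


def merge_dotted(cfg: dict, options: dict) -> dict:
    """Return a copy of `cfg` with dotted keys such as 'a.b.c' set to new values.

    Intermediate dicts are created when missing; an existing non-dict value on
    the path is not handled (this is a sketch, not mmengine's implementation).
    """
    merged = copy.deepcopy(cfg)
    for dotted, value in options.items():
        *parents, leaf = dotted.split(".")
        node = merged
        for key in parents:
            node = node.setdefault(key, {})
        node[leaf] = value
    return merged


# Hypothetical config fragment; the real key that holds the SAM weight path in
# project/our/configs/multiclass_usis_train.py may be named differently.
cfg = {"model": {"backbone": {"init_cfg": {"type": "Pretrained", "checkpoint": "old/path"}}}}
new_cfg = merge_dotted(cfg, {"model.backbone.init_cfg.checkpoint": "pretrain/sam-vit-huge"})
print(new_cfg["model"]["backbone"]["init_cfg"]["checkpoint"])  # pretrain/sam-vit-huge
```

Copying before merging keeps the original config untouched, which makes it easy to compare the two when debugging a wrong path.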

Use the following command for single-GPU training:

python tools/train.py project/our/configs/multiclass_usis_train.py

Or use the following command for multi-GPU training, where nums_gpu is the number of GPUs:

bash tools/dist_train.sh project/our/configs/multiclass_usis_train.py nums_gpu

For more ways to train or test, please refer to the MMDetection User Guides; this repository includes MMDetection's training and testing tools.
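For reference, the stock `tools/dist_train.sh` in MMDetection 3.x wraps `torch.distributed.launch` roughly as sketched below; this repository's copy may differ, so treat the sketch as an assumption. `build_dist_train_cmd` is a hypothetical helper that assembles the equivalent argument list, which shows where `nums_gpu` ends up (`--nproc_per_node`).

```python
import shlex


def build_dist_train_cmd(config, gpus, nnodes=1, node_rank=0,
                         master_addr="127.0.0.1", port=29500, extra=()):
    """Argument list equivalent to `bash tools/dist_train.sh CONFIG GPUS [extra...]`.

    The stock script reads NNODES, NODE_RANK, MASTER_ADDR and PORT from the
    environment (defaults mirrored here) and also prepends the repo root to
    PYTHONPATH, which this sketch does not do.
    """
    return [
        "python", "-m", "torch.distributed.launch",
        f"--nnodes={nnodes}",
        f"--node_rank={node_rank}",
        f"--master_addr={master_addr}",
        f"--nproc_per_node={gpus}",
        f"--master_port={port}",
        "tools/train.py", config,
        "--launcher", "pytorch", *extra,
    ]


if __name__ == "__main__":
    cmd = build_dist_train_cmd("project/our/configs/multiclass_usis_train.py", gpus=4)
    print(shlex.join(cmd))
```

If two jobs run on the same machine, giving each a different `port` (the `PORT` variable in the shell script) avoids a master-port clash.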

If you find our repo or USIS10K dataset useful for your research, please cite us:

    @inproceedings{lian2024diving,
      title={Diving into Underwater: Segment Anything Model Guided Underwater Salient Instance Segmentation and A Large-scale Dataset},
      author={Shijie Lian and Ziyi Zhang and Hua Li and Wenjie Li and Laurence Tianruo Yang and Sam Kwong and Runmin Cong},
      booktitle={Forty-first International Conference on Machine Learning},
      year={2024},
      url={https://openreview.net/forum?id=snhurpZt63}
    }

This repository is built on the MMDetection framework and the Segment Anything Model (SAM). We also reuse some code from the RSPrompter repository. We thank the authors of these projects for their excellent work.